Rui Luo

Who You Play Affects How You Play: Predicting Sports Performance Using Graph Attention Networks With Temporal Convolution

Mar 29, 2023

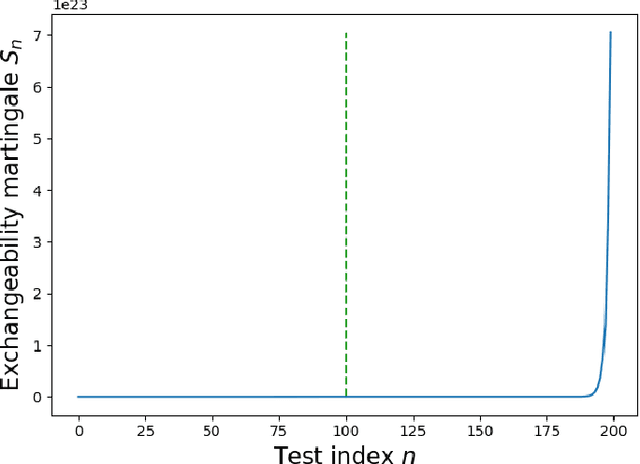

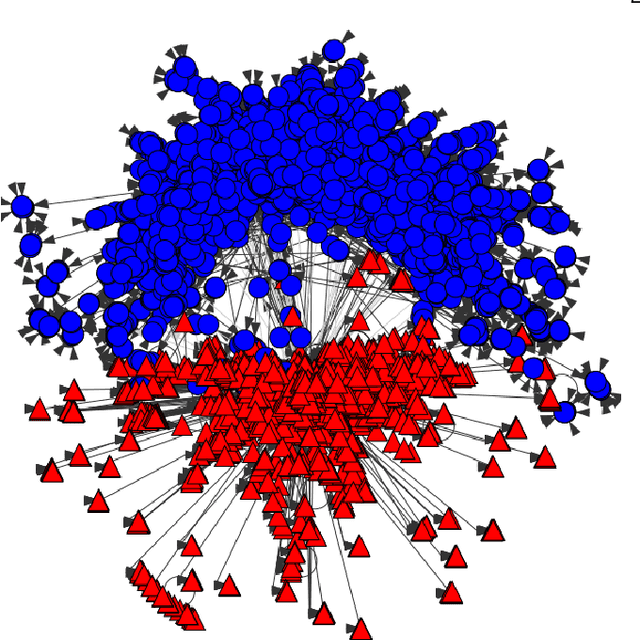

Abstract: This study presents a novel deep learning method, called GATv2-GCN, for predicting player performance in sports. To construct a dynamic player interaction graph, we leverage player statistics and their interactions during gameplay. We use a graph attention network to capture the attention that each player pays to every other player, allowing for more accurate modeling of the dynamic player interactions. To handle the multivariate player statistics time series, we incorporate a temporal convolution layer, which provides the model with temporal predictive power. We evaluate the performance of our model using real-world sports data, demonstrating its effectiveness in predicting player performance. Furthermore, we explore the potential use of our model in a sports betting context, providing insights into profitable strategies that leverage our predictive power. The proposed method has the potential to advance the state of the art in player performance prediction and to provide valuable insights for the sports analytics and betting industries.
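As a rough illustration of the attention step mentioned in the abstract, the sketch below computes GATv2-style attention weights over a small player graph in NumPy. It is not the authors' implementation: the function name, the weight matrices `W_src` and `W_dst`, the vector `a`, and the assumption that every node has a self-loop are all hypothetical choices made for this example, and the temporal convolution layer is not shown.

```python
import numpy as np

def gatv2_attention(H, adj, W_src, W_dst, a, slope=0.2):
    """GATv2-style attention (illustrative sketch, not the paper's code).

    H:   (n, d) node features, one row per player.
    adj: (n, n) boolean mask; adj[i, j] is True if j is a neighbor of i.
         Assumption: every row has at least one True entry (e.g. self-loops).
    """
    src = H @ W_src.T                      # (n, k) transformed neighbor features
    dst = H @ W_dst.T                      # (n, k) transformed target features
    z = dst[:, None, :] + src[None, :, :]  # (n, n, k), equals W [h_i || h_j]
    z = np.where(z > 0, z, slope * z)      # LeakyReLU applied before the dot with a
    scores = z @ a                         # (n, n) unnormalized attention scores
    scores = np.where(adj, scores, -np.inf)        # mask out non-neighbors
    scores = scores - scores.max(axis=1, keepdims=True)
    weights = np.exp(scores)
    alpha = weights / weights.sum(axis=1, keepdims=True)  # softmax over neighbors
    return alpha, alpha @ src              # attention matrix and aggregated features

# Toy usage: 4 players, self-loops plus one interaction between players 0 and 1.
rng = np.random.default_rng(0)
H = rng.normal(size=(4, 3))
adj = np.eye(4, dtype=bool)
adj[0, 1] = adj[1, 0] = True
alpha, out = gatv2_attention(
    H, adj, rng.normal(size=(5, 3)), rng.normal(size=(5, 3)), rng.normal(size=5)
)
```

Each row of `alpha` sums to one over that player's neighbors, so it can be read as how much weight a player places on each interaction partner.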

Fréchet Statistics Based Change Point Detection in Dynamic Social Networks

Mar 19, 2023

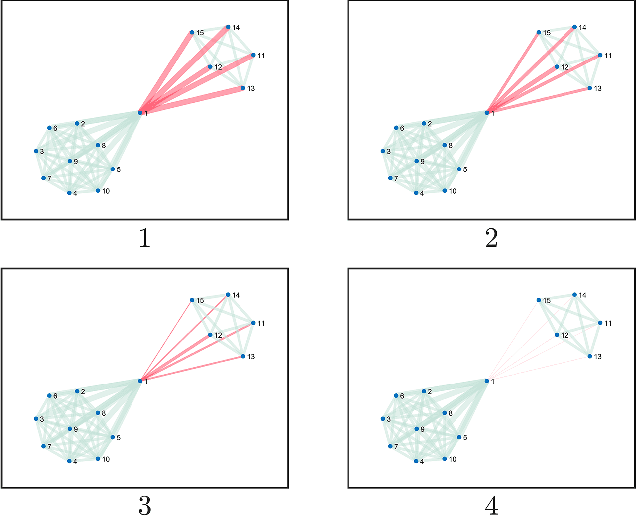

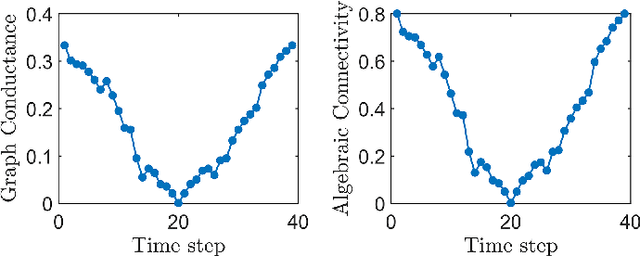

Abstract: This paper proposes a method to detect change points in dynamic social networks using Fréchet statistics. We address two main questions: (1) what metric can quantify the distances between graph Laplacians in a dynamic network and enable efficient computation, and (2) how can the Fréchet statistics be extended to detect multiple change points while maintaining the significance level of the hypothesis test? Our solution defines a metric space for graph Laplacians using the Log-Euclidean metric, enabling a closed-form formula for the Fréchet mean and variance. We present a framework for change point detection using Fréchet statistics and extend it to multiple change points with binary segmentation. The proposed algorithm uses incremental computation of the Fréchet mean and variance to improve efficiency and is validated on simulated data and two real-world datasets, namely the UCI message dataset and the Enron email dataset.
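The closed-form Fréchet mean and variance under the Log-Euclidean metric can be sketched as follows. This is a minimal example under stated assumptions, not the paper's algorithm: the function names are hypothetical, and because a graph Laplacian is only positive semidefinite, the sketch assumes a small ridge `gamma` is added to make the matrix logarithm well defined. The change point test and the incremental updates are not reproduced.

```python
import numpy as np

def sym_logm(S):
    """Matrix logarithm of a symmetric positive definite matrix."""
    w, V = np.linalg.eigh(S)
    return (V * np.log(w)) @ V.T

def sym_expm(S):
    """Matrix exponential of a symmetric matrix."""
    w, V = np.linalg.eigh(S)
    return (V * np.exp(w)) @ V.T

def regularized_laplacian(adj, gamma=1e-2):
    """Laplacian D - A plus gamma * I (assumption: ridge keeps it positive definite)."""
    adj = np.asarray(adj, dtype=float)
    return np.diag(adj.sum(axis=1)) - adj + gamma * np.eye(adj.shape[0])

def log_euclidean_distance(A, B):
    """Frobenius norm of the difference of matrix logarithms."""
    return np.linalg.norm(sym_logm(A) - sym_logm(B), ord="fro")

def frechet_mean_and_variance(mats):
    """Closed form: average in the log domain, then map back with exp."""
    logs = np.stack([sym_logm(M) for M in mats])
    mean_log = logs.mean(axis=0)
    variance = np.mean(np.sum((logs - mean_log) ** 2, axis=(1, 2)))
    return sym_expm(mean_log), variance
```

Because the mean is an ordinary average of matrix logarithms, appending one more network snapshot only requires updating a running sum, which is what makes incremental computation natural in this metric.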

Team Northeastern's Approach to ANA XPRIZE Avatar Final Testing: A Holistic Approach to Telepresence and Lessons Learned

Mar 08, 2023

Abstract: This paper reports on Team Northeastern's Avatar system for telepresence and our holistic approach to meeting the ANA Avatar XPRIZE Final testing task requirements. The system features a dual-arm configuration with a hydraulically actuated glove-gripper pair for haptic force feedback. Our proposed Avatar system was evaluated in the ANA Avatar XPRIZE Finals, where it completed all 10 tasks, scored 14.5 out of 15.0 points, and received the 3rd Place Award. We provide the details of improvements over our first-generation Avatar, covering manipulation, perception, locomotion, power, network, and controller design. We also extensively discuss the major lessons learned during our participation in the competition.

Detection of Strongly Lensed Arcs in Galaxy Clusters with Transformers

Nov 11, 2022

Abstract: Strong lensing in galaxy clusters probes properties of dense cores of dark matter halos in mass, studies the distant universe at flux levels and spatial resolutions otherwise unavailable, and constrains cosmological models independently. The next-generation large-scale sky imaging surveys are expected to discover thousands of cluster-scale strong lenses, which would lead to unprecedented opportunities for applying cluster-scale strong lenses to solve astrophysical and cosmological problems. However, the large dataset challenges astronomers to identify and extract strong lensing signals, particularly strongly lensed arcs, because of their complexity and variety. Hence, we propose a framework to detect cluster-scale strongly lensed arcs, which contains a transformer-based detection algorithm and an image simulation algorithm. We embed prior information of strongly lensed arcs at cluster scale into the training data through simulation and then train the detection algorithm with simulated images. We use the trained transformer to detect strongly lensed arcs from simulated and real data. Results show that our approach could achieve a 99.63% accuracy rate, 90.32% recall rate, 85.37% precision rate, and 0.23% false positive rate in the detection of strongly lensed arcs from simulated images, and could detect almost all strongly lensed arcs in real observation images. In addition, using an interpretation method, we show that our method could identify important information embedded in simulated data. As a next step, to test the reliability and usability of our approach, we will apply it to available observations (e.g., DESI Legacy Imaging Surveys) and simulated data of upcoming large-scale sky surveys, such as Euclid and the CSST.

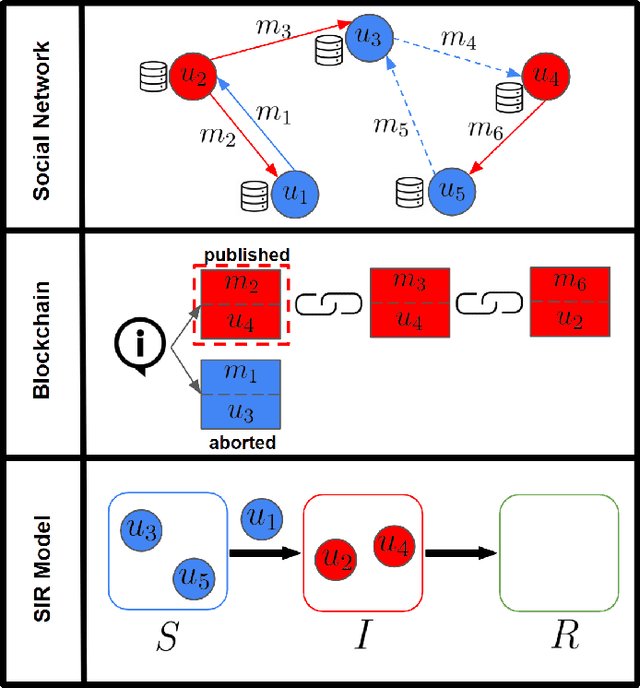

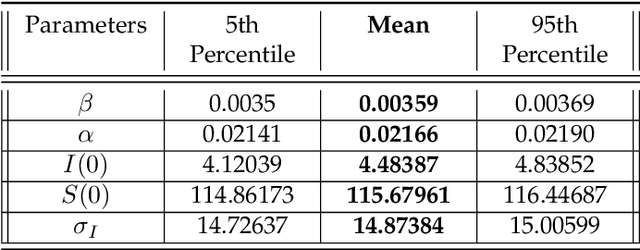

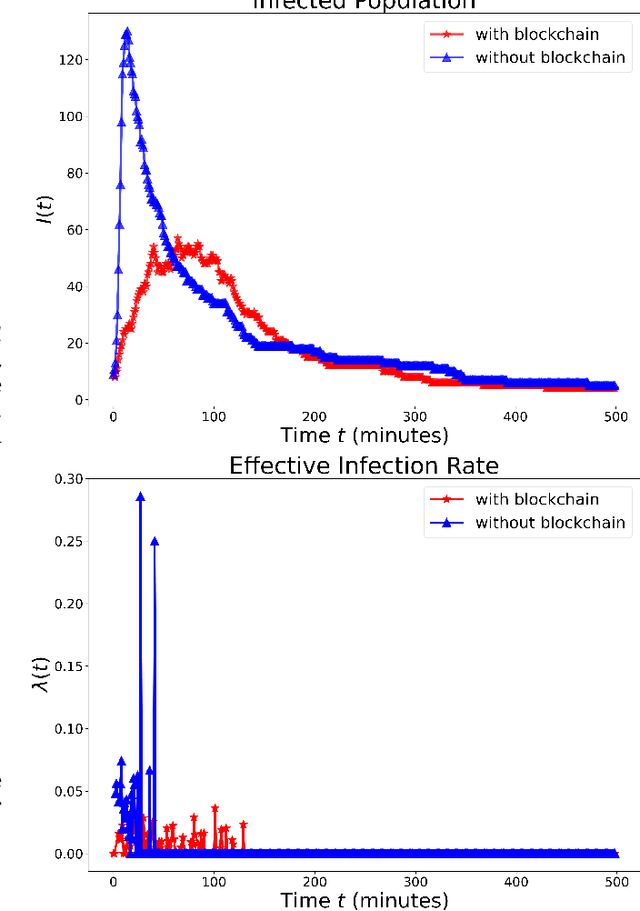

Mitigating Misinformation Spread on Blockchain Enabled Social Media Networks

Feb 09, 2022

Abstract: The paper develops a blockchain protocol for a social media network (BE-SMN) to mitigate the spread of misinformation. BE-SMN is derived from the information transmission-time distribution by modeling misinformation transmission as double-spend attacks on a blockchain. The misinformation distribution is then incorporated into the SIR (Susceptible, Infectious, or Recovered) model, where it replaces the single rate parameter of the traditional SIR model. Then, on a multi-community network, we study the propagation of misinformation numerically and show that the proposed blockchain-enabled social media network outperforms the baseline network in flattening the curve of the infected population.
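For readers unfamiliar with the baseline, the sketch below integrates the traditional SIR model with a single transmission rate, using a simple forward-Euler step. It only illustrates what "flattening the curve" means when the effective transmission rate drops; the function name and all parameter values are assumptions for this example, and the paper's transmission-time distribution and multi-community network are not reproduced.

```python
def simulate_sir(beta, gamma, i0=0.01, steps=2000, dt=0.1):
    """Forward-Euler integration of the classic SIR model on population fractions.

    beta:  transmission rate (the single rate parameter of the traditional model).
    gamma: recovery rate.
    Returns the peak infected fraction and the final (s, i, r) state.
    """
    s, i, r = 1.0 - i0, i0, 0.0
    peak = i
    for _ in range(steps):
        new_infections = beta * s * i * dt
        new_recoveries = gamma * i * dt
        s = s - new_infections
        i = i + new_infections - new_recoveries
        r = r + new_recoveries
        peak = max(peak, i)
    return peak, (s, i, r)

# Hypothetical rates: a lower transmission rate yields a lower infection peak.
baseline_peak, _ = simulate_sir(beta=0.5, gamma=0.1)
mitigated_peak, _ = simulate_sir(beta=0.3, gamma=0.1)
```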

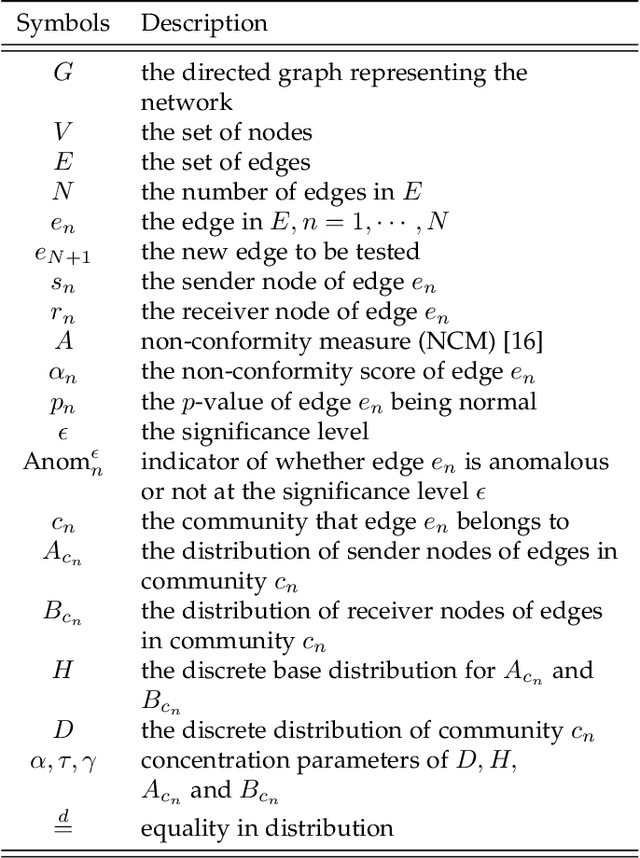

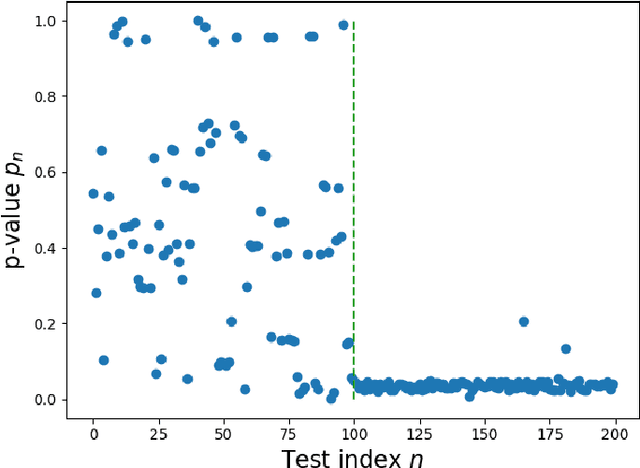

Anomalous Edge Detection in Edge Exchangeable Social Network Models

Sep 27, 2021

Abstract: This paper studies the detection of anomalous edges in directed graphs that model social networks. We exploit edge exchangeability as a criterion for distinguishing anomalous edges from normal edges. Then we present an anomaly detector based on conformal prediction theory; this detector has a guaranteed upper bound on the false positive rate. In numerical experiments, we show that the proposed algorithm achieves superior performance to baseline methods.
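The false positive guarantee comes from the standard conformal p-value, which can be sketched in a few lines. This is a generic conformal anomaly test, not the paper's detector: the nonconformity scores are assumed to be given (how to score an edge is the paper's contribution and is not shown), and the function names are hypothetical.

```python
import numpy as np

def conformal_p_value(calibration_scores, test_score):
    """Fraction of scores (calibration plus the test point) at least as extreme."""
    cal = np.asarray(calibration_scores, dtype=float)
    return (np.sum(cal >= test_score) + 1) / (cal.size + 1)

def is_anomalous(calibration_scores, test_score, epsilon=0.1):
    """Flag the test point when its p-value is at most epsilon.

    If the test score is exchangeable with the calibration scores, the
    probability of a false alarm is at most epsilon.
    """
    return conformal_p_value(calibration_scores, test_score) <= epsilon
```

A larger nonconformity score means the edge looks less like the calibration edges, so unusually high scores receive small p-values and are flagged.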

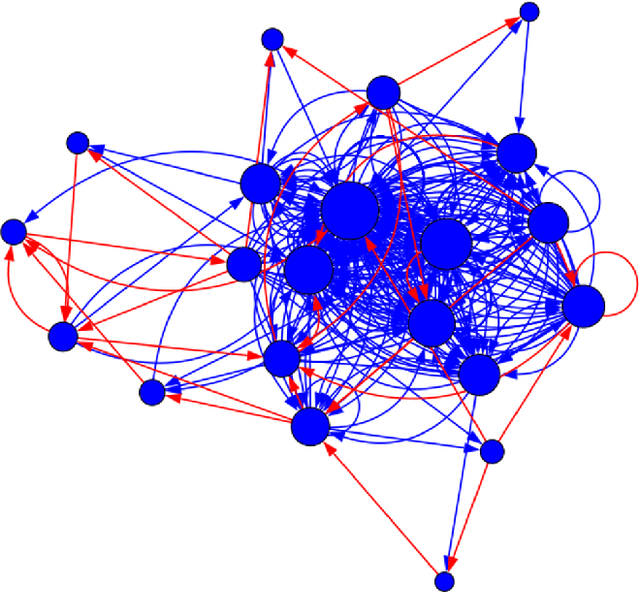

Revisiting the Characteristics of Stochastic Gradient Noise and Dynamics

Sep 20, 2021

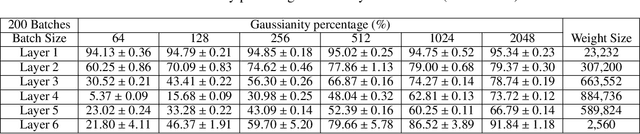

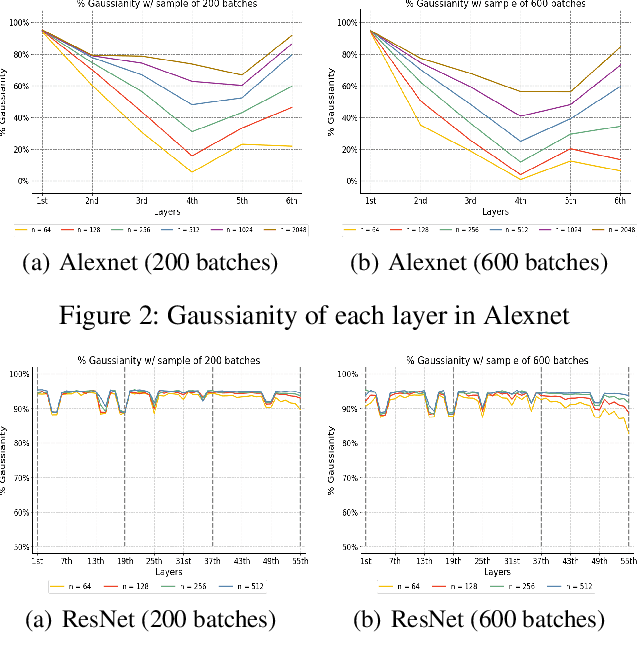

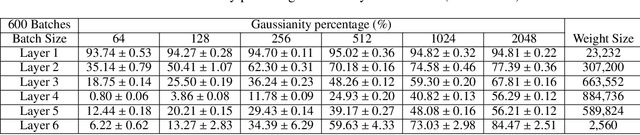

Abstract: In this paper, we characterize the noise of stochastic gradients and analyze the noise-induced dynamics during the training of deep neural networks by gradient-based optimizers. Specifically, we first show that the stochastic gradient noise possesses finite variance, and therefore the classical Central Limit Theorem (CLT) applies; this indicates that the gradient noise is asymptotically Gaussian. Such an asymptotic result validates the widely accepted assumption of Gaussian noise. We clarify that the recently observed phenomenon of heavy tails within gradient noise may not be an intrinsic property, but the consequence of insufficient mini-batch size; the gradient noise, which is a sum of a limited number of i.i.d. random variables, has not reached the asymptotic regime of the CLT and thus deviates from Gaussian. We quantitatively measure the goodness of the Gaussian approximation of the noise, which supports our conclusion. Secondly, we analyze the noise-induced dynamics of stochastic gradient descent using the Langevin equation, which gives the momentum hyperparameter of the optimizer a physical interpretation. We then proceed to demonstrate the existence of the steady-state distribution of stochastic gradient descent and approximate the distribution at a small learning rate.
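The mini-batch size argument can be illustrated with a toy simulation. The sketch below is not the paper's experiment: per-example noise is drawn from a Laplace distribution (an assumption chosen because it has finite variance and excess kurtosis 3), and the excess kurtosis of the mini-batch average is used as a crude measure of departure from Gaussian. For an average of B i.i.d. terms, the excess kurtosis shrinks in proportion to 1/B.

```python
import numpy as np

def excess_kurtosis(x):
    """Sample excess kurtosis; approximately 0 for Gaussian data."""
    x = np.asarray(x, dtype=float)
    c = x - x.mean()
    return np.mean(c ** 4) / np.mean(c ** 2) ** 2 - 3.0

def minibatch_noise_kurtosis(batch_size, n_batches=20000, seed=0):
    """Excess kurtosis of the mean of `batch_size` i.i.d. Laplace noise terms."""
    rng = np.random.default_rng(seed)
    per_example = rng.laplace(size=(n_batches, batch_size))
    return excess_kurtosis(per_example.mean(axis=1))

# Small batches keep the heavy tails of the per-example noise;
# larger batches move the averaged noise toward the Gaussian regime.
small_batch = minibatch_noise_kurtosis(batch_size=1)
large_batch = minibatch_noise_kurtosis(batch_size=64)
```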

More Than Just Attention: Learning Cross-Modal Attentions with Contrastive Constraints

May 20, 2021

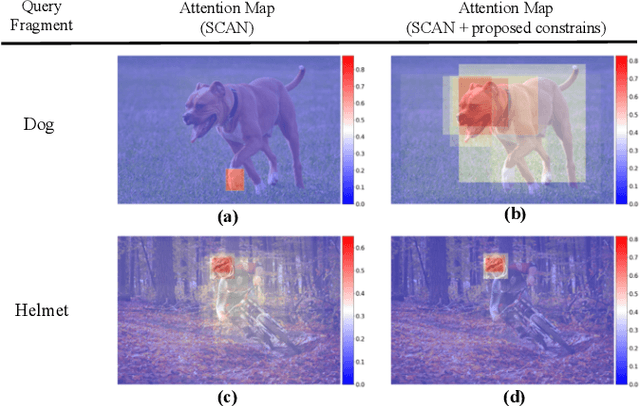

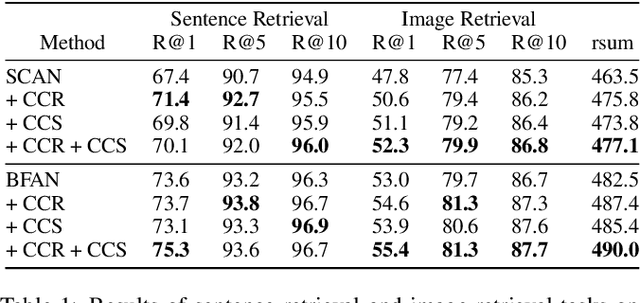

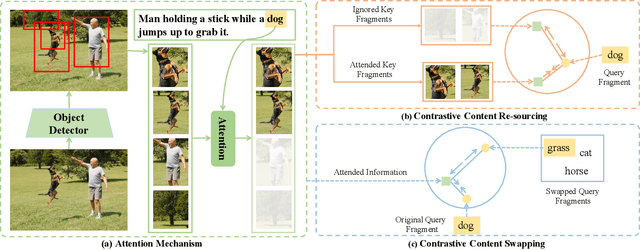

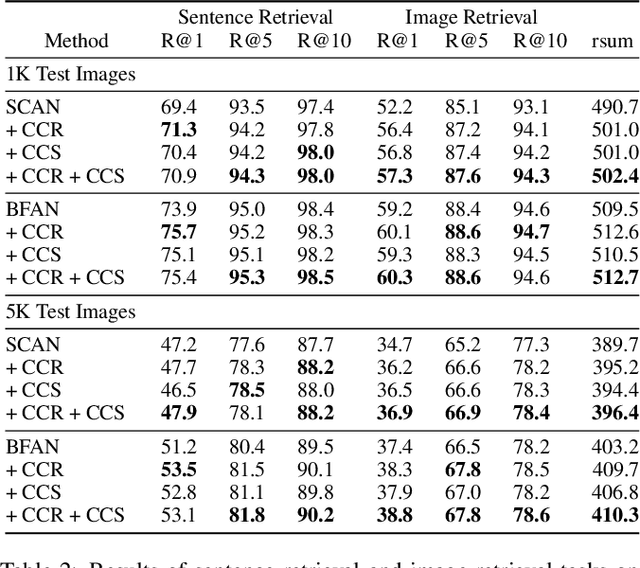

Abstract: Attention mechanisms have been widely applied to cross-modal tasks such as image captioning and information retrieval, and have achieved remarkable improvements due to their capability to learn fine-grained relevance across different modalities. However, existing attention models could be sub-optimal and lack precision because there is no direct supervision involved during training. In this work, we propose Contrastive Content Re-sourcing (CCR) and Contrastive Content Swapping (CCS) constraints to address this limitation. These constraints supervise the training of attention models in a contrastive learning manner without requiring explicit attention annotations. Additionally, we introduce three metrics, namely Attention Precision, Recall, and F1-Score, to quantitatively evaluate the attention quality. We evaluate the proposed constraints on the cross-modal retrieval (image-text matching) task. The experiments on both the Flickr30k and MS-COCO datasets demonstrate that integrating these attention constraints into two state-of-the-art attention-based models improves the model performance in terms of both retrieval accuracy and attention metrics.

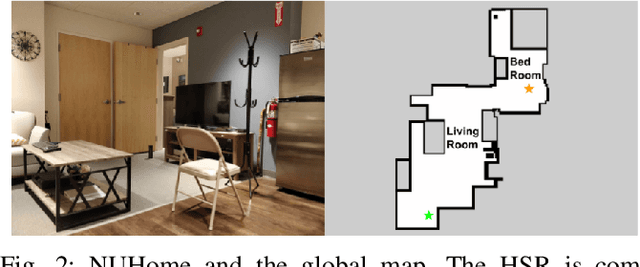

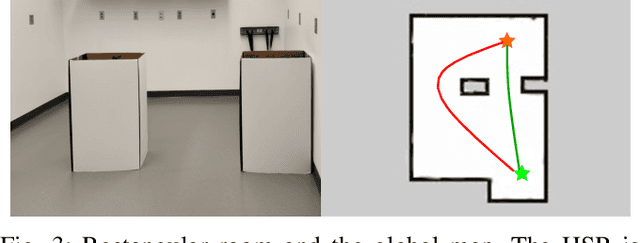

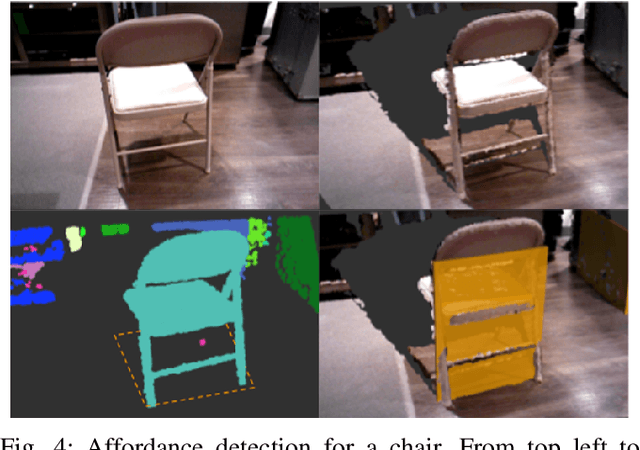

Affordance-Based Mobile Robot Navigation Among Movable Obstacles

Feb 09, 2021

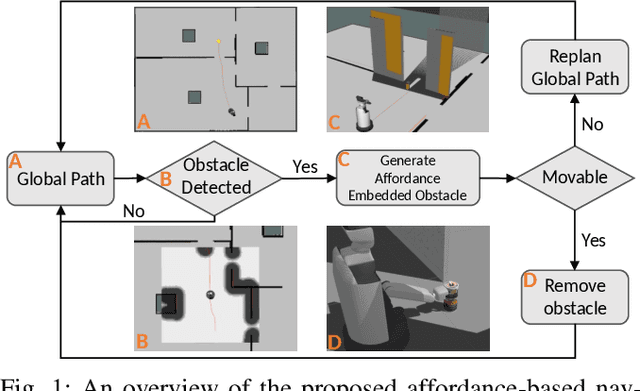

Abstract: Avoiding obstacles in the perceived world has been the classical approach to autonomous mobile robot navigation. However, this usually leads to unnatural and inefficient motions that significantly differ from the way humans move in tight and dynamic spaces, as we do not refrain from interacting with the environment around us when necessary. Inspired by this observation, we propose a framework for autonomous robot navigation among movable obstacles (NAMO) that is based on the theory of affordances and contact-implicit motion planning. We consider a realistic scenario in which a mobile service robot negotiates unknown obstacles in the environment while navigating to a goal state. An affordance extraction procedure is performed for novel obstacles to detect their movability, and a contact-implicit trajectory optimization method is used to enable the robot to interact with movable obstacles to improve the task performance or to complete an otherwise infeasible task. We demonstrate the performance of the proposed framework by hardware experiments with Toyota's Human Support Robot.

Echo Chambers and Segregation in Social Networks: Markov Bridge Models and Estimation

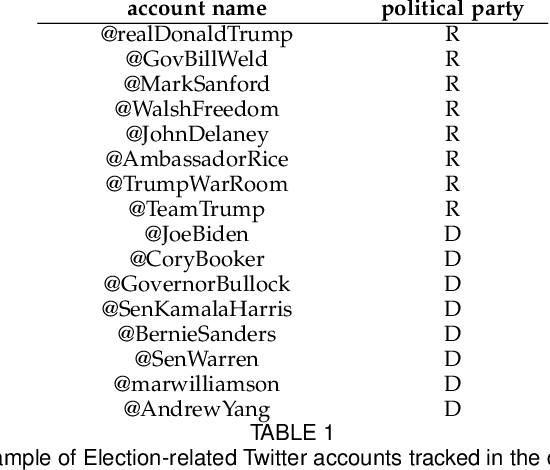

Dec 25, 2020

Abstract: This paper deals with the modeling and estimation of the sociological phenomena called echo chambers and segregation in social networks. Specifically, we present a novel community-based graph model that represents the emergence of segregated echo chambers as a Markov bridge process. A Markov bridge is a one-dimensional Markov random field that facilitates modeling the formation and disassociation of communities at deterministic times, which is important in social networks with known timed events. We justify the proposed model with six real-world examples and examine its performance on a recent Twitter dataset. We provide a model parameter estimation algorithm based on maximum likelihood and a Bayesian filtering algorithm for recursively estimating the level of segregation using noisy samples obtained from the network. Numerical results indicate that the proposed filtering algorithm outperforms conventional hidden Markov modeling in terms of the mean-squared error. The proposed filtering method is useful in computational social science, where data-driven estimation of the level of segregation from noisy data is required.
