Qian Du

Convolution and Attention Mixer for Synthetic Aperture Radar Image Change Detection

Sep 21, 2023

Abstract:Synthetic aperture radar (SAR) image change detection is a critical task and has received increasing attention in the remote sensing community. However, existing SAR change detection methods are mainly based on convolutional neural networks (CNNs), with limited consideration of global attention mechanisms. In this letter, we explore a Transformer-like architecture for SAR change detection to incorporate global attention. To this end, we propose a convolution and attention mixer (CAMixer). First, to compensate for the Transformer's lack of inductive bias, we combine self-attention with shift convolution in parallel. The parallel design captures global semantic information via self-attention while performing local feature extraction through shift convolution. Second, we adopt a gating mechanism in the feed-forward network to enhance the non-linear feature transformation. The gating mechanism is formulated as the element-wise multiplication of two parallel linear layers. Important features can thus be highlighted, leading to high-quality representations that are robust to speckle noise. Extensive experiments conducted on three SAR datasets verify the superior performance of the proposed CAMixer. The source code will be publicly available at https://github.com/summitgao/CAMixer.
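
The gating step described in the abstract above (element-wise multiplication of two parallel linear layers) can be sketched in NumPy as follows. This is a minimal illustration, not the CAMixer implementation: the function name, the layer sizes, the absence of biases and activations, and the final output projection are all assumptions made for the example.

```python
import numpy as np

def gated_feed_forward(x, w_gate, w_value, w_out):
    """Gated feed-forward sketch: two parallel linear layers multiplied element-wise.

    All names and shapes here are hypothetical; biases and activations are omitted.
    """
    gate = x @ w_gate        # first parallel linear branch
    value = x @ w_value      # second parallel linear branch
    hidden = gate * value    # element-wise multiplication acts as the gate
    return hidden @ w_out    # assumed projection back to the input width

rng = np.random.default_rng(0)
tokens, dim, hidden_dim = 4, 8, 16   # hypothetical sizes
x = rng.standard_normal((tokens, dim))
w_gate = rng.standard_normal((dim, hidden_dim))
w_value = rng.standard_normal((dim, hidden_dim))
w_out = rng.standard_normal((hidden_dim, dim))

y = gated_feed_forward(x, w_gate, w_value, w_out)
print(y.shape)
```

Because the two branches are multiplied, a feature passes through only where both branches respond, which is one way to read the abstract's claim that important features are highlighted.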

Physical Knowledge Enhanced Deep Neural Network for Sea Surface Temperature Prediction

Apr 19, 2023

Abstract:Traditionally, numerical models have been deployed in oceanography studies to simulate ocean dynamics by representing physical equations. However, many factors pertaining to ocean dynamics seem to be ill-defined. We argue that transferring physical knowledge from observed data could further improve the accuracy of numerical models when predicting Sea Surface Temperature (SST). Recently, advances in earth observation technologies have yielded a monumental growth of data. Consequently, it is imperative to explore ways to improve and supplement numerical models using the ever-increasing amounts of historical observational data. To this end, we introduce a method for SST prediction that transfers physical knowledge from historical observations to numerical models. Specifically, we use a combination of an encoder and a generative adversarial network (GAN) to capture physical knowledge from the observed data. The numerical model data is then fed into the pre-trained model to generate physics-enhanced data, which can then be used for SST prediction. Experimental results demonstrate that the proposed method considerably enhances SST prediction performance when compared to several state-of-the-art baselines.

Multi-scale Adaptive Fusion Network for Hyperspectral Image Denoising

Apr 19, 2023

Abstract:Removing noise from hyperspectral images (HSIs) and improving their visual quality is a challenge in both academia and industry. Great efforts have been made to leverage local, global or spectral context information for HSI denoising. However, existing methods are still limited in exploiting feature interactions among multiple scales and in preserving rich spectral structure. In view of this, we propose a Multi-scale Adaptive Fusion Network (MAFNet) for HSI denoising, which can learn the complex nonlinear mapping between clean and noisy HSIs. Two key components contribute to improving hyperspectral image denoising: a progressive multiscale information aggregation network and a co-attention fusion module. Specifically, we first generate a set of multiscale images and feed them into a coarse-fusion network to exploit the contextual texture correlation. Thereafter, a fine-fusion network exchanges information across the parallel multiscale subnetworks. Furthermore, we design a co-attention fusion module to adaptively emphasize informative features from different scales, and thereby enhance the discriminative learning capability for denoising. Extensive experiments on synthetic and real HSI datasets demonstrate that the proposed MAFNet achieves better denoising performance than other state-of-the-art techniques. Our code is available at https://github.com/summitgao/MAFNet.

Nearest Neighbor-Based Contrastive Learning for Hyperspectral and LiDAR Data Classification

Jan 09, 2023

Abstract:Joint hyperspectral image (HSI) and LiDAR data classification aims to interpret ground objects at a more detailed and precise level. Although deep learning methods have shown remarkable success in the multisource data classification task, self-supervised learning has rarely been explored. Building a robust self-supervised learning model for multisource data classification is nontrivial, because the semantic similarities of neighborhood regions are not exploited in existing contrastive learning frameworks. Furthermore, the heterogeneous gap induced by the inconsistent distribution of multisource data impedes the classification performance. To overcome these disadvantages, we propose a Nearest Neighbor-based Contrastive Learning Network (NNCNet), which takes full advantage of large amounts of unlabeled data to learn discriminative feature representations. Specifically, we propose a nearest neighbor-based data augmentation scheme that uses enhanced semantic relationships among nearby regions, so that the intermodal semantic alignments can be captured more accurately. In addition, we design a bilinear attention module to exploit the second-order and even high-order feature interactions between the HSI and LiDAR data. Extensive experiments on four public datasets demonstrate the superiority of our NNCNet over state-of-the-art methods. The source code is available at https://github.com/summitgao/NNCNet.

SuperYOLO: Super Resolution Assisted Object Detection in Multimodal Remote Sensing Imagery

Sep 27, 2022

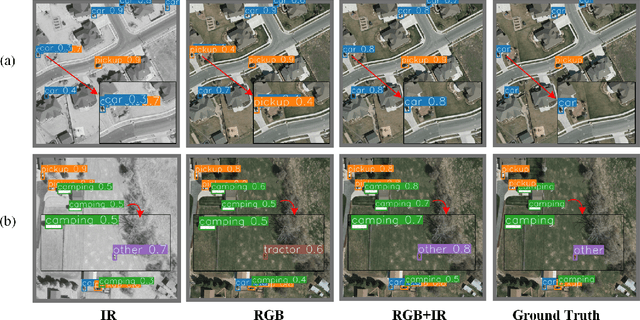

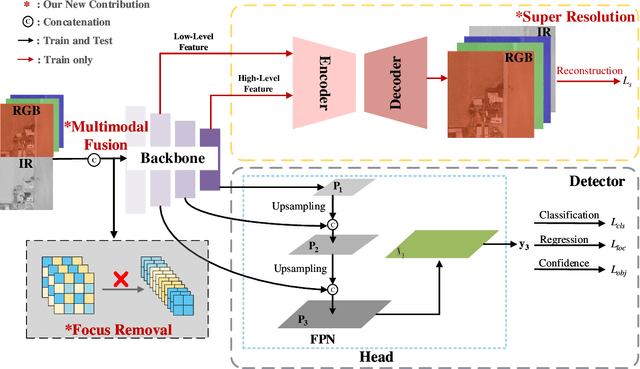

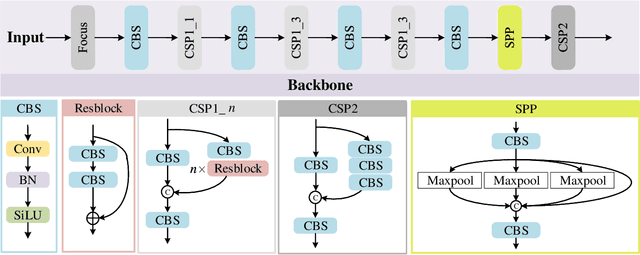

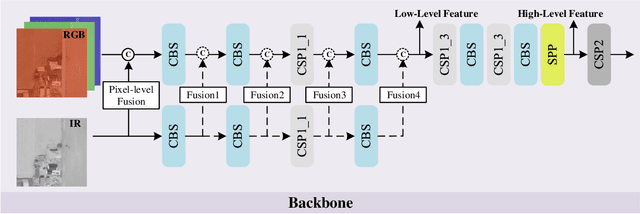

Abstract:In this paper, we propose an accurate yet fast small object detection method for remote sensing imagery (RSI), named SuperYOLO, which fuses multimodal data and performs high-resolution (HR) object detection on multiscale objects by using assisted super-resolution (SR) learning, taking both detection accuracy and computation cost into account. First, we construct a compact baseline by removing the Focus module to keep the HR features, which significantly reduces the missing error of small objects. Second, we use pixel-level multimodal fusion (MF) to extract information from various data and obtain more suitable and effective features for small objects in RSI. Furthermore, we design a simple and flexible SR branch to learn HR feature representations that can discriminate small objects from vast backgrounds with low-resolution (LR) input, thus further improving the detection accuracy. Moreover, to avoid introducing additional computation, the SR branch is discarded in the inference stage, and the computation of the network model is reduced due to the LR input. Experimental results show that, on the widely used VEDAI RS dataset, SuperYOLO achieves an accuracy of 73.61% (in terms of mAP50), which is more than 10% higher than SOTA large models such as YOLOv5l, YOLOv5x and the RS-designed YOLOrs. Meanwhile, the GFLOPs and parameter size of SuperYOLO are about 18.1x and 4.2x smaller than those of YOLOv5x. Our proposed model shows a favorable accuracy-speed trade-off compared to the state-of-the-art models. The code will be open sourced at https://github.com/icey-zhang/SuperYOLO.

Single-source Domain Expansion Network for Cross-Scene Hyperspectral Image Classification

Sep 04, 2022

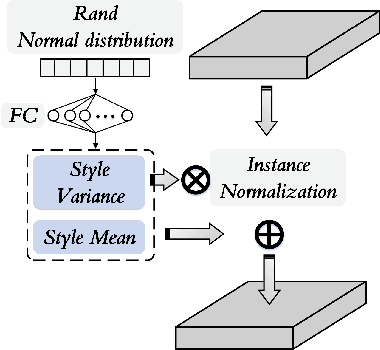

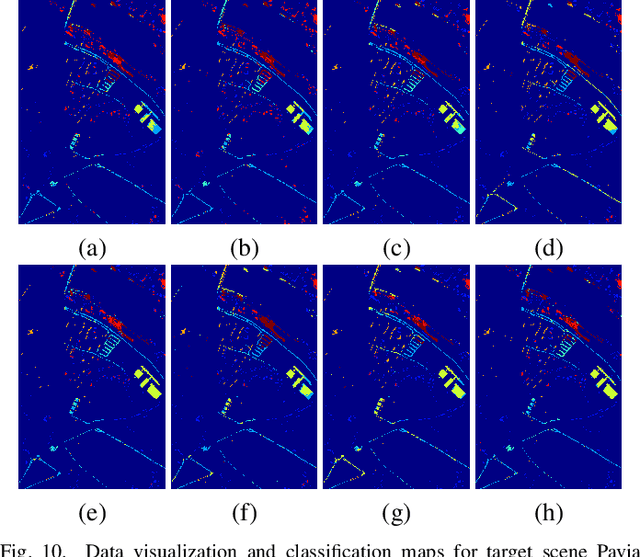

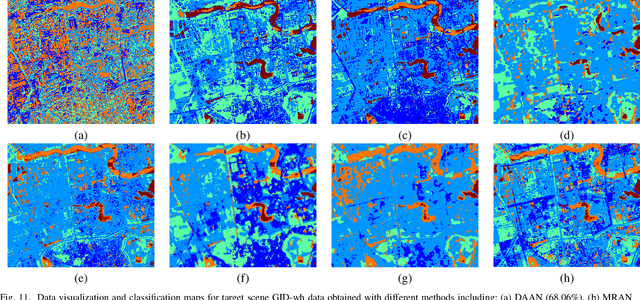

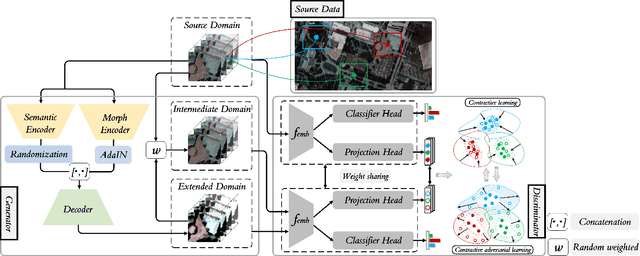

Abstract:Cross-scene hyperspectral image (HSI) classification has recently drawn increasing attention. When the target domain (TD) needs to be processed in real time and cannot be reused for training, it is necessary to train a model only on the source domain (SD) and directly transfer the model to the TD. Based on the idea of domain generalization, a Single-source Domain Expansion Network (SDEnet) is developed to ensure the reliability and effectiveness of domain extension. The method uses generative adversarial learning to train in SD and test in TD. A generator including a semantic encoder and a morph encoder is designed to generate the extended domain (ED) based on an encoder-randomization-decoder architecture, where spatial and spectral randomization are specifically used to generate variable spatial and spectral information, and morphological knowledge is implicitly applied as domain-invariant information during domain expansion. Furthermore, supervised contrastive learning is employed in the discriminator to learn class-wise domain-invariant representations, which draws intra-class samples of SD and ED together. Meanwhile, adversarial training is designed to optimize the generator to drive intra-class samples of SD and ED to be separated. Extensive experiments on two public HSI datasets and one additional multispectral image (MSI) dataset demonstrate the superiority of the proposed method when compared with state-of-the-art techniques.

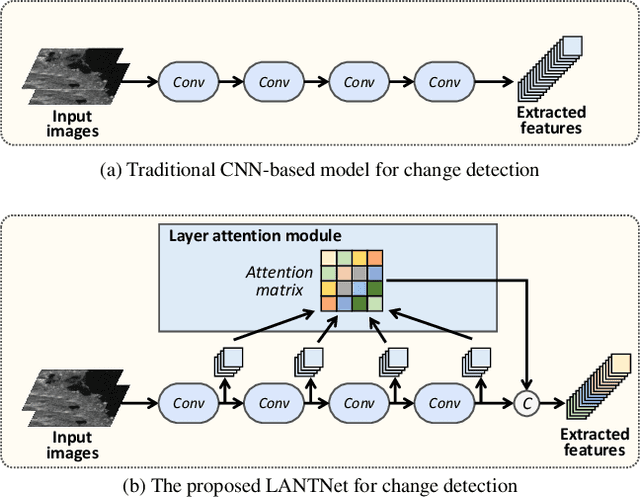

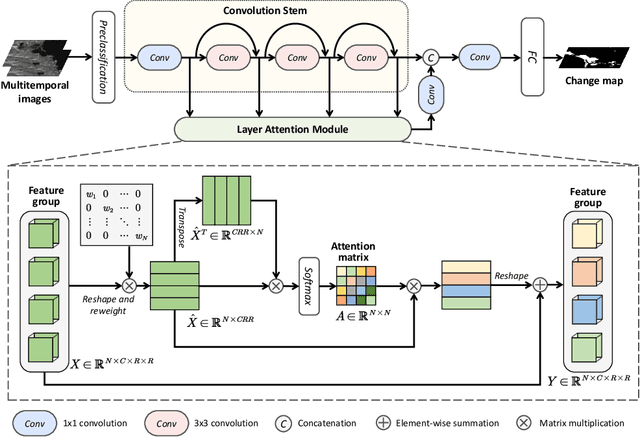

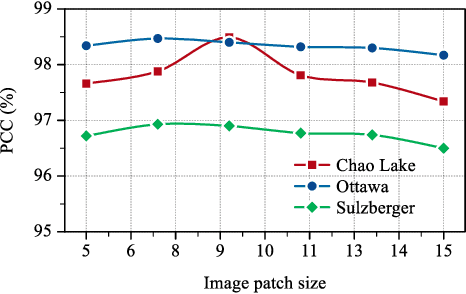

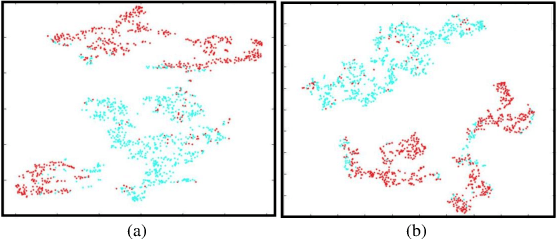

Synthetic Aperture Radar Image Change Detection via Layer Attention-Based Noise-Tolerant Network

Aug 09, 2022

Abstract:Recently, change detection methods for synthetic aperture radar (SAR) images based on convolutional neural networks (CNNs) have gained increasing research attention. However, existing CNN-based methods neglect the interactions among multilayer convolutions, and errors involved in the preclassification restrict the network optimization. To this end, we propose a layer attention-based noise-tolerant network, termed LANTNet. In particular, we design a layer attention module that adaptively weights the features of different convolution layers. In addition, we design a noise-tolerant loss function that effectively suppresses the impact of noisy labels. Therefore, the model is insensitive to noisy labels in the preclassification results. The experimental results on three SAR datasets show that the proposed LANTNet outperforms several state-of-the-art methods. The source code is available at https://github.com/summitgao/LANTNet.

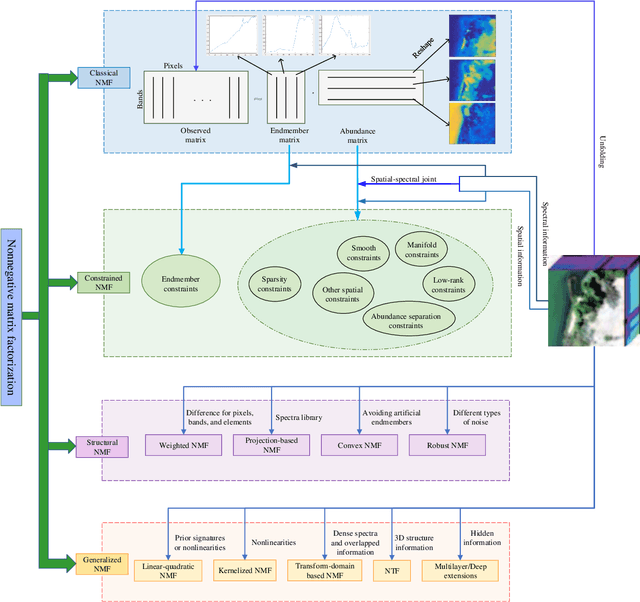

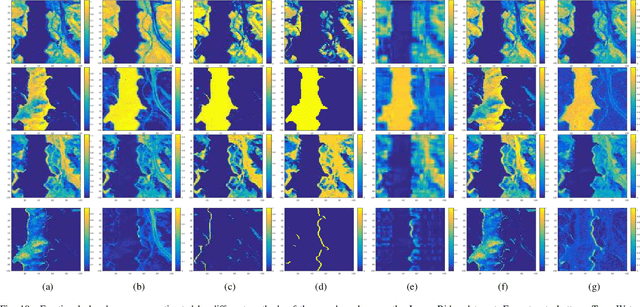

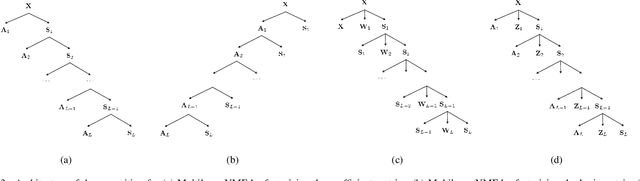

Hyperspectral Unmixing Based on Nonnegative Matrix Factorization: A Comprehensive Review

May 20, 2022

Abstract:Hyperspectral unmixing is an important technique that estimates a set of endmembers and their corresponding abundances from a hyperspectral image (HSI). Nonnegative matrix factorization (NMF) plays an increasingly significant role in solving this problem. In this article, we present a comprehensive survey of the NMF-based methods proposed for hyperspectral unmixing. Taking the NMF model as a baseline, we show how to improve NMF by utilizing the main properties of HSIs (e.g., spectral, spatial, and structural information). We categorize three important development directions: constrained NMF, structured NMF, and generalized NMF. Furthermore, several experiments are conducted to illustrate the effectiveness of associated algorithms. Finally, we conclude the article with possible future directions, with the aim of providing guidelines and inspiration to promote the development of hyperspectral unmixing.
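
The baseline NMF model mentioned in the abstract above can be illustrated with the classic multiplicative update rules for the Frobenius-norm objective. This is a minimal sketch on synthetic data, not any specific algorithm from the survey: the function name `nmf_unmix`, the matrix sizes, and the iteration count are assumptions, and the abundance sum-to-one constraint commonly used in unmixing is deliberately left out.

```python
import numpy as np

def nmf_unmix(x, num_endmembers, num_iters=200, eps=1e-9, seed=0):
    """Factor a nonnegative (bands x pixels) matrix as x ~= e @ a.

    e: (bands x endmembers) endmember spectra, a: (endmembers x pixels) abundances.
    Uses multiplicative updates, which keep both factors nonnegative.
    """
    rng = np.random.default_rng(seed)
    bands, pixels = x.shape
    e = rng.random((bands, num_endmembers)) + eps
    a = rng.random((num_endmembers, pixels)) + eps
    errors = [np.linalg.norm(x - e @ a)]          # reconstruction error before updating
    for _ in range(num_iters):
        a *= (e.T @ x) / (e.T @ e @ a + eps)      # update abundances
        e *= (x @ a.T) / (e @ a @ a.T + eps)      # update endmembers
        errors.append(np.linalg.norm(x - e @ a))
    return e, a, errors

# Synthetic scene: 3 hypothetical endmembers, 20 bands, 50 pixels.
rng = np.random.default_rng(1)
true_e = rng.random((20, 3))
true_a = rng.random((3, 50))
x = true_e @ true_a

e, a, errors = nmf_unmix(x, num_endmembers=3)
print(errors[0], errors[-1])
```

The constrained, structured, and generalized variants named in the abstract would add penalty terms or extra structure on top of this plain factorization.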

A Survey on Hyperspectral Image Restoration: From the View of Low-Rank Tensor Approximation

May 18, 2022

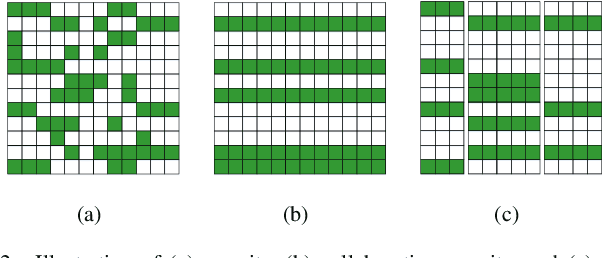

Abstract:The ability to capture fine spectral discriminative information enables hyperspectral images (HSIs) to observe, detect and identify objects with subtle spectral discrepancy. However, the captured HSIs may not represent the true distribution of ground objects, and the reflectance received at imaging instruments may be degraded, owing to environmental disturbances, atmospheric effects and sensors' hardware limitations. These degradations include, but are not limited to, complex noise (i.e., Gaussian noise, impulse noise, sparse stripes, and their mixtures), heavy stripes, deadlines, cloud and shadow occlusion, blurring, spatial-resolution degradation and spectral absorption. They dramatically reduce the quality and usefulness of HSIs. Low-rank tensor approximation (LRTA) is an emerging technique that has gained much attention in the HSI restoration community, with an ever-growing theoretical foundation and pivotal technological innovation. Compared to low-rank matrix approximation (LRMA), LRTA is capable of characterizing more complex intrinsic structure of high-order data and has more efficient learning abilities, and it has been established to address convex and non-convex inverse optimization problems induced by HSI restoration. This survey attempts to present a sophisticated, cutting-edge, and comprehensive technical overview of LRTA for HSI restoration, specifically focusing on the following six topics: Denoising, Destriping, Inpainting, Deblurring, Super-resolution and Fusion. The theoretical development and variants of LRTA techniques are also elaborated. For each topic, the state-of-the-art restoration methods are compared by assessing their performance both quantitatively and visually. Open issues and challenges are also presented, including model formulation, algorithm design, prior exploration and application concerning the interpretation requirements.
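
For context on the matrix baseline (LRMA) that the abstract above contrasts with LRTA, the sketch below unfolds a synthetic cube into a pixels-by-bands matrix and keeps only its leading singular values. It is an illustrative assumption-laden example, not a method from the survey: the function name, cube size, rank, and noise level are all made up, and the rank is taken as known.

```python
import numpy as np

def low_rank_matrix_approx(cube, rank):
    """Unfold an (H, W, B) cube to (H*W, B) and keep the top `rank` singular values."""
    h, w, b = cube.shape
    matrix = cube.reshape(h * w, b)
    u, s, vt = np.linalg.svd(matrix, full_matrices=False)
    approx = (u[:, :rank] * s[:rank]) @ vt[:rank]   # best rank-`rank` approximation
    return approx.reshape(h, w, b)

# Synthetic low-rank cube: 3 hypothetical materials, 16x16 pixels, 30 bands.
rng = np.random.default_rng(0)
h, w, bands, true_rank = 16, 16, 30, 3
abundances = rng.random((h * w, true_rank))
spectra = rng.random((true_rank, bands))
clean = (abundances @ spectra).reshape(h, w, bands)
noisy = clean + 0.05 * rng.standard_normal(clean.shape)   # additive Gaussian noise

restored = low_rank_matrix_approx(noisy, rank=true_rank)
err_noisy = np.linalg.norm(noisy - clean)
err_restored = np.linalg.norm(restored - clean)
print(err_noisy, err_restored)
```

Note that the unfolding step discards the spatial arrangement of the pixels; tensor approaches such as LRTA work on the cube directly and can therefore model structure this matrix view cannot.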

Adaptive Cross-Attention-Driven Spatial-Spectral Graph Convolutional Network for Hyperspectral Image Classification

Apr 12, 2022

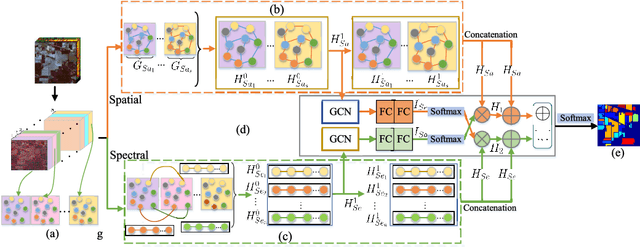

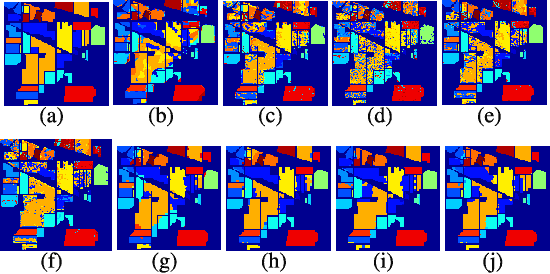

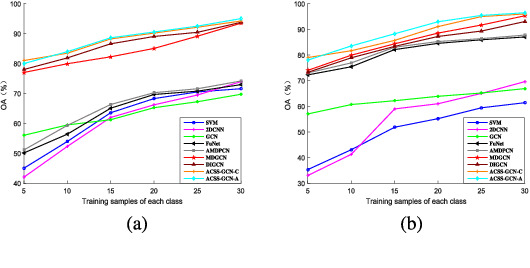

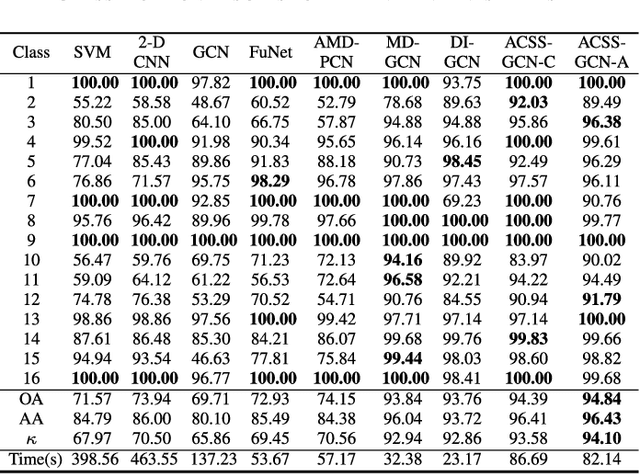

Abstract:Recently, graph convolutional networks (GCNs) have been developed to explore spatial relationships between pixels, achieving better classification performance on hyperspectral images (HSIs). However, these methods fail to sufficiently leverage the relationships between spectral bands in HSI data. As such, we propose an adaptive cross-attention-driven spatial-spectral graph convolutional network (ACSS-GCN), which is composed of a spatial GCN (Sa-GCN) subnetwork, a spectral GCN (Se-GCN) subnetwork, and a graph cross-attention fusion module (GCAFM). Specifically, Sa-GCN and Se-GCN are proposed to extract the spatial and spectral features by modeling correlations between spatial pixels and between spectral bands, respectively. Then, by integrating an attention mechanism into graph information aggregation, the GCAFM, which consists of a spatial graph attention block, a spectral graph attention block, and a fusion block, is designed to fuse the spatial and spectral features and suppress noise interference in Sa-GCN and Se-GCN. Moreover, the idea of the adaptive graph is introduced to explore an optimal graph through back propagation during the training process. Experiments on two HSI data sets show that the proposed method achieves better performance than other classification methods.
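
As background for the Sa-GCN and Se-GCN subnetworks described above, the following sketch shows a single generic graph convolution layer with symmetric normalization and self-loops. It is not the ACSS-GCN architecture: the function name, the toy path graph, and the feature sizes are assumptions, and the attention fusion and adaptive graph learning are not modeled. The same layer could be applied with pixels as nodes (spatial graph) or spectral bands as nodes (spectral graph).

```python
import numpy as np

def gcn_layer(adjacency, features, weights):
    """One graph convolution: ReLU(D^-1/2 (A + I) D^-1/2 @ features @ weights)."""
    a_hat = adjacency + np.eye(adjacency.shape[0])   # add self-loops
    degree = a_hat.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(degree)
    a_norm = a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]   # symmetric normalization
    return np.maximum(a_norm @ features @ weights, 0.0)          # ReLU activation

# Toy graph: 4 nodes connected in a path 0-1-2-3 (hypothetical).
adjacency = np.array([
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
], dtype=float)

rng = np.random.default_rng(0)
features = rng.standard_normal((4, 3))   # 3 input features per node
weights = rng.standard_normal((3, 2))    # project to 2 output features

out = gcn_layer(adjacency, features, weights)
print(out.shape)
```

Each output row mixes a node's own features with those of its neighbors, which is the aggregation step that the abstract's attention blocks are said to reweight.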
