Peihong Guo

FactorPortrait: Controllable Portrait Animation via Disentangled Expression, Pose, and Viewpoint

Dec 12, 2025

Abstract: We introduce FactorPortrait, a video diffusion method for controllable portrait animation that enables lifelike synthesis from disentangled control signals of facial expressions, head movement, and camera viewpoints. Given a single portrait image, a driving video, and camera trajectories, our method animates the portrait by transferring facial expressions and head movements from the driving video while simultaneously enabling novel view synthesis from arbitrary viewpoints. We utilize a pre-trained image encoder to extract facial expression latents from the driving video as control signals for animation generation. Such latents implicitly capture nuanced facial expression dynamics with identity and pose information disentangled, and they are efficiently injected into the video diffusion transformer through our proposed expression controller. For camera and head pose control, we employ Plücker ray maps and normal maps rendered from 3D body mesh tracking. To train our model, we curate a large-scale synthetic dataset containing diverse combinations of camera viewpoints, head poses, and facial expression dynamics. Extensive experiments demonstrate that our method outperforms existing approaches in realism, expressiveness, control accuracy, and view consistency.
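The FactorPortrait abstract names Plücker ray maps as the camera control signal without giving details. As a rough illustration only, the sketch below builds such a map under a common convention: each pixel stores a unit ray direction `d` and its moment `o × d`, where `o` is the camera center. The pinhole intrinsics (`fx`, `fy`, `cx`, `cy`), the camera-to-world `rotation`, and the function name `plucker_ray_map` are assumptions made for this example, not the authors' parameterization.

```python
import math


def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def plucker_ray_map(width, height, fx, fy, cx, cy, rotation, origin):
    """Return a height x width grid of 6-tuples (direction, moment).

    Assumed conventions (hypothetical, for illustration):
    - pinhole camera looking along +z in camera coordinates
    - `rotation` is a 3x3 camera-to-world matrix (list of rows)
    - `origin` is the camera center in world coordinates
    """
    ray_map = []
    for v in range(height):
        row = []
        for u in range(width):
            # Ray through the pixel center, in camera coordinates.
            d_cam = ((u + 0.5 - cx) / fx, (v + 0.5 - cy) / fy, 1.0)
            # Rotate into world coordinates.
            d_world = tuple(
                sum(rotation[i][j] * d_cam[j] for j in range(3))
                for i in range(3)
            )
            norm = math.sqrt(sum(c * c for c in d_world))
            d = tuple(c / norm for c in d_world)
            # The moment o x d completes the 6D Plücker coordinates.
            m = cross(origin, d)
            row.append(d + m)
        ray_map.append(row)
    return ray_map


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
single_pixel = plucker_ray_map(1, 1, 1.0, 1.0, 0.5, 0.5, identity, (1.0, 0.0, 0.0))
```

By construction the moment is perpendicular to the direction, so every pixel satisfies `d · m = 0`. A map like this can be stacked as six extra channels alongside an image, which is one plausible way to feed it to a network.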

Repurposing 2D Diffusion Models with Gaussian Atlas for 3D Generation

Mar 20, 2025

Abstract: Recent advances in text-to-image diffusion models have been driven by the increasing availability of paired 2D data. However, the development of 3D diffusion models has been hindered by the scarcity of high-quality 3D data, resulting in less competitive performance compared to their 2D counterparts. To address this challenge, we propose repurposing pre-trained 2D diffusion models for 3D object generation. We introduce Gaussian Atlas, a novel representation that utilizes dense 2D grids, enabling the fine-tuning of 2D diffusion models to generate 3D Gaussians. Our approach demonstrates successful transfer learning from a pre-trained 2D diffusion model to a 2D manifold flattened from 3D structures. To support model training, we compile GaussianVerse, a large-scale dataset comprising 205K high-quality 3D Gaussian fittings of various 3D objects. Our experimental results show that text-to-image diffusion models can be effectively adapted for 3D content generation, bridging the gap between 2D and 3D modeling.

High-dimensional point forecast combinations for emergency department demand

Jan 20, 2025

Abstract: Current work on forecasting emergency department (ED) admissions focuses on disease aggregates or single disease types. However, given differences in the dynamics of individual diseases, it is unlikely that any single forecasting model would accurately account for every disease at all times, leading to significant forecast model uncertainty. Yet forecasting models for ED admissions to date do not explore the utility of forecast combinations to improve forecast accuracy and stability. It is also unknown whether improvements in forecast accuracy can be obtained from (1) incorporating a large number of environmental and anthropogenic covariates or (2) forecasting total ED causes by aggregating cause-specific ED forecasts. To address this gap, we propose high-dimensional forecast combination schemes that combine a large number of individual forecasting models to forecast cause-specific ED admissions over multiple causes and forecast horizons. We use time series data of ED admissions with an extensive set of explanatory lagged variables at the national level, including meteorological/ambient air pollutant variables and ED admissions of all 16 causes studied. We show that the simple forecast combinations yield forecast accuracies of around 3.81%-23.54% across causes. Furthermore, forecast combinations outperform individual forecasting models in more than 50% of scenarios (across all ED admission categories and horizons) in a statistically significant manner. Including high-dimensional covariates and aggregating cause-specific forecasts to provide all-cause ED forecasts yielded modest improvements in forecast accuracy. Forecasting cause-specific ED admissions can provide fine-scale forward guidance on resource optimization and pandemic preparedness, and forecast combinations can be used to hedge against model uncertainty when forecasting across a wide range of admission categories.
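To make the idea of a simple forecast combination concrete, here is a minimal sketch that averages the point forecasts of several models with equal weights and scores the result with mean absolute percentage error (MAPE). The abstract does not name its accuracy metric or its combination weights, so both choices are assumptions here, and the admission counts and model names are made-up toy numbers, not data from the paper.

```python
def simple_combination(forecasts):
    """Equal-weight average across models at each time step.

    `forecasts` is a list of per-model forecast lists of equal length.
    """
    n_models = len(forecasts)
    return [sum(step) / n_models for step in zip(*forecasts)]


def mape(actual, predicted):
    """Mean absolute percentage error, in percent (assumed metric)."""
    errors = [abs(a - p) / abs(a) for a, p in zip(actual, predicted)]
    return 100.0 * sum(errors) / len(errors)


# Toy weekly admission counts and two hypothetical individual models.
actual = [100, 120, 80]
model_a = [110, 126, 88]  # tends to over-forecast
model_b = [94, 108, 76]   # tends to under-forecast

combined = simple_combination([model_a, model_b])

for name, forecast in [("model_a", model_a), ("model_b", model_b), ("combined", combined)]:
    print(f"{name}: MAPE = {mape(actual, forecast):.2f}%")
```

In this toy case the two models err in opposite directions, so averaging cancels part of the error. That is the intuition behind using combinations to hedge against model uncertainty, although a combination is not guaranteed to beat the best individual model in every scenario.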

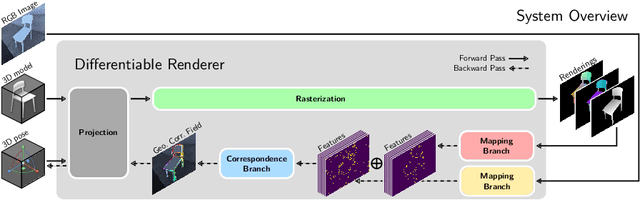

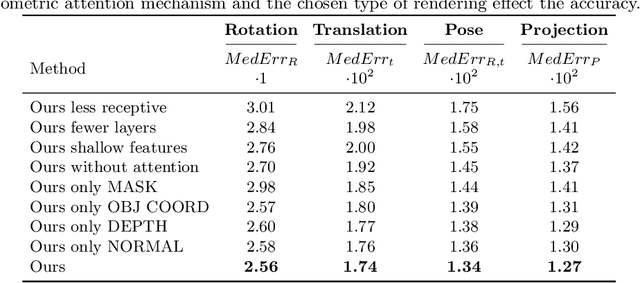

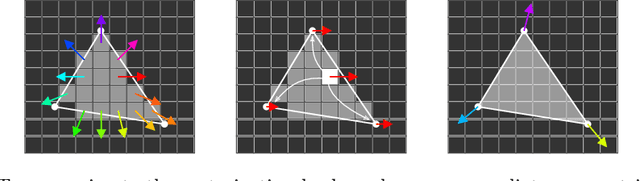

Geometric Correspondence Fields: Learned Differentiable Rendering for 3D Pose Refinement in the Wild

Jul 17, 2020

Abstract: We present a novel 3D pose refinement approach based on differentiable rendering for objects of arbitrary categories in the wild. In contrast to previous methods, we make two main contributions: First, instead of comparing real-world images and synthetic renderings in the RGB or mask space, we compare them in a feature space optimized for 3D pose refinement. Second, we introduce a novel differentiable renderer that learns to approximate the rasterization backward pass from data instead of relying on a hand-crafted algorithm. For this purpose, we predict deep cross-domain correspondences between RGB images and 3D model renderings in the form of what we call geometric correspondence fields. These correspondence fields serve as pixel-level gradients that are analytically propagated backward through the rendering pipeline to perform a gradient-based optimization directly on the 3D pose. In this way, we precisely align 3D models to objects in RGB images, which results in significantly improved 3D pose estimates. We evaluate our approach on the challenging Pix3D dataset and achieve up to 55% relative improvement compared to state-of-the-art refinement methods in multiple metrics.
