Pei Wang

National Astronomical Observatories, Chinese Academy of Sciences

ShopSimulator: Evaluating and Exploring RL-Driven LLM Agent for Shopping Assistants

Jan 26, 2026

Abstract: Large language model (LLM)-based agents are increasingly deployed in e-commerce shopping. To perform thorough, user-tailored product searches, agents should interpret personal preferences, engage in multi-turn dialogues, and ultimately retrieve and discriminate among highly similar products. However, existing research has yet to provide a unified simulation environment that consistently captures all of these aspects, and it typically focuses solely on evaluation benchmarks without training support. In this paper, we introduce ShopSimulator, a large-scale and challenging Chinese shopping environment. Leveraging ShopSimulator, we evaluate LLMs across diverse scenarios, finding that even the best-performing models achieve less than 40% full-success rate. Error analysis reveals that agents struggle with deep search and product selection in long trajectories, fail to balance the use of personalization cues, and fail to engage effectively with users. Further training exploration provides practical guidance for overcoming these weaknesses, with the combination of supervised fine-tuning (SFT) and reinforcement learning (RL) yielding significant performance improvements. Code and data will be released at https://github.com/ShopAgent-Team/ShopSimulator.

Let It Flow: Agentic Crafting on Rock and Roll, Building the ROME Model within an Open Agentic Learning Ecosystem

Dec 31, 2025

Abstract: Agentic crafting requires LLMs to operate in real-world environments over multiple turns by taking actions, observing outcomes, and iteratively refining artifacts. Despite its importance, the open-source community lacks a principled, end-to-end ecosystem to streamline agent development. We introduce the Agentic Learning Ecosystem (ALE), a foundational infrastructure that optimizes the production pipeline for agent LLMs. ALE consists of three components: ROLL, a post-training framework for weight optimization; ROCK, a sandbox environment manager for trajectory generation; and iFlow CLI, an agent framework for efficient context engineering. We release ROME (ROME is Obviously an Agentic Model), an open-source agent grounded by ALE and trained on over one million trajectories. Our approach includes data composition protocols for synthesizing complex behaviors and a novel policy optimization algorithm, Interaction-based Policy Alignment (IPA), which assigns credit over semantic interaction chunks rather than individual tokens to improve long-horizon training stability. Empirically, we evaluate ROME within a structured setting and introduce Terminal Bench Pro, a benchmark with improved scale and contamination control. ROME demonstrates strong performance across benchmarks like SWE-bench Verified and Terminal Bench, proving the effectiveness of the ALE infrastructure.

FID-Net: A Feature-Enhanced Deep Learning Network for Forest Infestation Detection

Dec 15, 2025

Abstract: Forest pests threaten ecosystem stability, requiring efficient monitoring. To overcome the limitations of traditional methods in large-scale, fine-grained detection, this study focuses on accurately identifying infected trees and analyzing infestation patterns. We propose FID-Net, a deep learning model that detects pest-affected trees from UAV visible-light imagery and enables infestation analysis via three spatial metrics. Based on YOLOv8n, FID-Net introduces a lightweight Feature Enhancement Module (FEM) to extract disease-sensitive cues, an Adaptive Multi-scale Feature Fusion Module (AMFM) to align and fuse dual-branch features (RGB and FEM-enhanced), and an Efficient Channel Attention (ECA) mechanism to enhance discriminative information efficiently. From detection results, we construct a pest situation analysis framework using: (1) Kernel Density Estimation to locate infection hotspots; (2) neighborhood evaluation to assess healthy trees' infection risk; (3) DBSCAN clustering to identify high-density healthy clusters as priority protection zones. Experiments on UAV imagery from 32 forest plots in eastern Tianshan, China, show that FID-Net achieves 86.10% precision, 75.44% recall, 82.29% mAP@0.5, and 64.30% mAP@0.5:0.95, outperforming mainstream YOLO models. Analysis confirms infected trees exhibit clear clustering, supporting targeted forest protection. FID-Net enables accurate tree health discrimination and, combined with spatial metrics, provides reliable data for intelligent pest monitoring, early warning, and precise management.
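The first two spatial metrics named in the FID-Net abstract can be illustrated with a small standard-library sketch. This is not the authors' implementation: the function names, the isotropic Gaussian kernel, the bandwidth, the search radius, and the toy coordinates are all assumptions chosen for illustration, and the inputs stand in for tree positions that a detector would produce.

```python
import math

def kde_density(point, infected, bandwidth):
    """Isotropic 2D Gaussian kernel density estimate at `point`.

    `infected` is a list of (x, y) positions of detected infected trees.
    The kernel and bandwidth are illustrative assumptions.
    """
    px, py = point
    total = 0.0
    for ix, iy in infected:
        d2 = (px - ix) ** 2 + (py - iy) ** 2
        total += math.exp(-d2 / (2.0 * bandwidth ** 2))
    # Normalise so the estimate integrates to 1 over the plane.
    return total / (len(infected) * 2.0 * math.pi * bandwidth ** 2)

def infection_risk(healthy, infected, radius):
    """Neighborhood evaluation: infected trees within `radius` of each healthy tree."""
    return [
        sum(1 for ix, iy in infected if math.hypot(hx - ix, hy - iy) <= radius)
        for hx, hy in healthy
    ]

# Toy coordinates (hypothetical, in metres).
healthy = [(0.0, 0.0), (10.0, 10.0)]
infected = [(1.0, 0.0), (0.0, 2.0), (9.0, 9.0), (30.0, 30.0)]

risk = infection_risk(healthy, infected, radius=3.0)
near_cluster = kde_density((0.5, 1.0), infected, bandwidth=2.0)
far_away = kde_density((20.0, 0.0), infected, bandwidth=2.0)
```

Under these toy inputs, the first healthy tree has more infected neighbours than the second, and the density near the two adjacent infected trees exceeds the density at a distant point. Real use would need a bandwidth and radius tuned to plot scale.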

MMDCP: A Distribution-free Approach to Outlier Detection and Classification with Coverage Guarantees and SCW-FDR Control

Nov 15, 2025

Abstract: We propose the Modified Mahalanobis Distance Conformal Prediction (MMDCP), a unified framework for multi-class classification and outlier detection under label shift, where the training and test distributions may differ. In such settings, many existing methods construct nonconformity scores based on empirical cumulative or density functions combined with data-splitting strategies. However, these approaches are often computationally expensive due to their heavy reliance on resampling procedures and tend to produce overly conservative prediction sets with unstable coverage, especially in small samples. To address these challenges, MMDCP combines class-specific distance measures with full conformal prediction to construct a score function, thereby producing adaptive prediction sets that effectively capture both inlier and outlier structures. Under mild regularity conditions, we establish convergence rates for the resulting sets and provide the first theoretical characterization of the gap between oracle and empirical conformal $p$-values, which ensures valid coverage and effective control of the class-wise false discovery rate (CW-FDR). We further introduce the Summarized Class-Wise FDR (SCW-FDR), a novel global error metric aggregating false discoveries across classes, and show that it can be effectively controlled within the MMDCP framework. Extensive simulations and two real-data applications support our theoretical findings and demonstrate the advantages of the proposed method.
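To make the idea of a distance-based conformal $p$-value concrete, here is a minimal sketch. It deliberately uses the simpler split-conformal recipe on one-dimensional data for a single class, not the full conformal procedure or the modified Mahalanobis distance that MMDCP describes; the function names and toy numbers are assumptions for illustration only.

```python
import statistics

def mahalanobis_1d(x, sample):
    """One-dimensional Mahalanobis distance: |x - mean| / standard deviation."""
    mu = statistics.mean(sample)
    sd = statistics.stdev(sample)
    return abs(x - mu) / sd

def conformal_p_value(test_score, calib_scores):
    """Standard split-conformal p-value.

    Larger scores mean "less conforming"; the p-value is the (smoothed)
    fraction of calibration scores at least as large as the test score.
    """
    n = len(calib_scores)
    at_least = sum(1 for s in calib_scores if s >= test_score)
    return (1 + at_least) / (n + 1)

# Toy data for one class (hypothetical values).
train = [4.8, 5.1, 5.0, 4.9, 5.2]
calib = [5.0, 4.7, 5.3, 5.1, 4.9, 5.4, 4.6, 5.05, 4.95]
calib_scores = [mahalanobis_1d(x, train) for x in calib]

p_inlier = conformal_p_value(mahalanobis_1d(5.0, train), calib_scores)
p_outlier = conformal_p_value(mahalanobis_1d(9.0, train), calib_scores)
```

A point close to the class centre receives a large $p$-value, while a point far from it receives the smallest attainable value, $1/(n+1)$. Flagging outliers when the $p$-value falls below a threshold is the usual next step; how MMDCP aggregates such decisions across classes is described in the paper, not here.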

PCRLLM: Proof-Carrying Reasoning with Large Language Models under Stepwise Logical Constraints

Nov 11, 2025

Abstract: Large Language Models (LLMs) often exhibit limited logical coherence, mapping premises to conclusions without adherence to explicit inference rules. We propose Proof-Carrying Reasoning with LLMs (PCRLLM), a framework that constrains reasoning to single-step inferences while preserving natural language formulations. Each output explicitly specifies premises, rules, and conclusions, thereby enabling verification against a target logic. This mechanism mitigates trustworthiness concerns by supporting chain-level validation even in black-box settings. Moreover, PCRLLM facilitates systematic multi-LLM collaboration, allowing intermediate steps to be compared and integrated under formal rules. Finally, we introduce a benchmark schema for generating large-scale step-level reasoning data, combining natural language expressiveness with formal rigor.
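The notion of a step that carries its own premises, rule, and conclusion can be sketched as a small checker. Everything below is a hypothetical illustration rather than the PCRLLM format: the `Step` record, the plain-string formulas with `->` for implication, and the single supported rule (modus ponens) are assumptions made to keep the example short.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Step:
    premises: tuple   # formulas the step relies on
    rule: str         # name of the inference rule applied
    conclusion: str   # formula the step derives

def check_modus_ponens(step):
    """Accept premises {P, "P -> Q"} (in either order) with conclusion Q."""
    if len(step.premises) != 2:
        return False
    for fact, implication in (step.premises, step.premises[::-1]):
        if "->" in implication:
            left, right = (s.strip() for s in implication.split("->", 1))
            if left == fact.strip() and right == step.conclusion.strip():
                return True
    return False

# Hypothetical rule table; a real target logic would register more rules.
RULES = {"modus_ponens": check_modus_ponens}

def verify_chain(axioms, steps):
    """Validate a chain: each step must use known formulas and a valid rule."""
    known = set(axioms)
    for step in steps:
        checker = RULES.get(step.rule)
        if checker is None:
            return False
        if not all(p in known for p in step.premises):
            return False
        if not checker(step):
            return False
        known.add(step.conclusion)
    return True

axioms = {"rain", "rain -> wet", "wet -> slippery"}
good_chain = [
    Step(("rain", "rain -> wet"), "modus_ponens", "wet"),
    Step(("wet", "wet -> slippery"), "modus_ponens", "slippery"),
]
bad_chain = [Step(("rain", "rain -> wet"), "modus_ponens", "slippery")]
```

Here `verify_chain(axioms, good_chain)` accepts the two-step derivation, while `bad_chain` is rejected because its conclusion does not follow from the stated premises under the stated rule. The checker needs no access to the model that produced the steps, which is what makes this kind of validation possible in a black-box setting.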

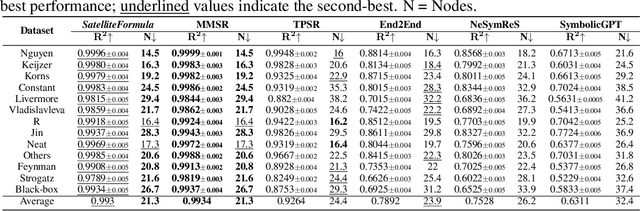

SatelliteFormula: Multi-Modal Symbolic Regression from Remote Sensing Imagery for Physics Discovery

Jun 06, 2025

Abstract: We propose SatelliteFormula, a novel symbolic regression framework that derives physically interpretable expressions directly from multi-spectral remote sensing imagery. Unlike traditional empirical indices or black-box learning models, SatelliteFormula combines a Vision Transformer-based encoder for spatial-spectral feature extraction with physics-guided constraints to ensure consistency and interpretability. Existing symbolic regression methods struggle with the high-dimensional complexity of multi-spectral data; our method addresses this by integrating transformer representations into a symbolic optimizer that balances accuracy and physical plausibility. Extensive experiments on benchmark datasets and remote sensing tasks demonstrate superior performance, stability, and generalization compared to state-of-the-art baselines. SatelliteFormula enables interpretable modeling of complex environmental variables, bridging the gap between data-driven learning and physical understanding.

Reinforcement Learning Optimization for Large-Scale Learning: An Efficient and User-Friendly Scaling Library

Jun 06, 2025

Abstract: We introduce ROLL, an efficient, scalable, and user-friendly library designed for Reinforcement Learning Optimization for Large-scale Learning. ROLL caters to three primary user groups: tech pioneers aiming for cost-effective, fault-tolerant large-scale training, developers requiring flexible control over training workflows, and researchers seeking agile experimentation. ROLL is built upon several key modules to serve these user groups effectively. First, a single-controller architecture combined with an abstraction of the parallel worker simplifies the development of the training pipeline. Second, the parallel strategy and data transfer modules enable efficient and scalable training. Third, the rollout scheduler offers fine-grained management of each sample's lifecycle during the rollout stage. Fourth, the environment worker and reward worker support rapid and flexible experimentation with agentic RL algorithms and reward designs. Finally, AutoDeviceMapping allows users to assign resources to different models flexibly across various stages.

When Cloud Removal Meets Diffusion Model in Remote Sensing

Apr 21, 2025

Abstract: Cloud occlusion significantly hinders remote sensing applications by obstructing surface information and complicating analysis. To address this, we propose DC4CR (Diffusion Control for Cloud Removal), a novel multimodal diffusion-based framework for cloud removal in remote sensing imagery. Our method introduces prompt-driven control, allowing selective removal of thin and thick clouds without relying on pre-generated cloud masks, thereby enhancing preprocessing efficiency and model adaptability. Additionally, we integrate low-rank adaptation for computational efficiency, subject-driven generation for improved generalization, and grouped learning to enhance performance on small datasets. Designed as a plug-and-play module, DC4CR seamlessly integrates into existing cloud removal models, providing a scalable and robust solution. Extensive experiments on the RICE and CUHK-CR datasets demonstrate state-of-the-art performance, achieving superior cloud removal across diverse conditions. This work presents a practical and efficient approach for remote sensing image processing with broad real-world applications.

Point-Driven Interactive Text and Image Layer Editing Using Diffusion Models

Apr 18, 2025

Abstract: We present DanceText, a training-free framework for multilingual text editing in images, designed to support complex geometric transformations and achieve seamless foreground-background integration. While diffusion-based generative models have shown promise in text-guided image synthesis, they often lack controllability and fail to preserve layout consistency under non-trivial manipulations such as rotation, translation, scaling, and warping. To address these limitations, DanceText introduces a layered editing strategy that separates text from the background, allowing geometric transformations to be performed in a modular and controllable manner. A depth-aware module is further proposed to align appearance and perspective between the transformed text and the reconstructed background, enhancing photorealism and spatial consistency. Importantly, DanceText adopts a fully training-free design by integrating pretrained modules, allowing flexible deployment without task-specific fine-tuning. Extensive experiments on the AnyWord-3M benchmark demonstrate that our method achieves superior performance in visual quality, especially under large-scale and complex transformation scenarios.

SatelliteCalculator: A Multi-Task Vision Foundation Model for Quantitative Remote Sensing Inversion

Apr 18, 2025

Abstract: Quantitative remote sensing inversion plays a critical role in environmental monitoring, enabling the estimation of key ecological variables such as vegetation indices, canopy structure, and carbon stock. Although vision foundation models have achieved remarkable progress in classification and segmentation tasks, their application to physically interpretable regression remains largely unexplored. Furthermore, the multi-spectral nature and geospatial heterogeneity of remote sensing data pose significant challenges for generalization and transferability. To address these issues, we introduce SatelliteCalculator, the first vision foundation model tailored for quantitative remote sensing inversion. By leveraging physically defined index formulas, we automatically construct a large-scale dataset of over one million paired samples across eight core ecological indicators. The model integrates a frozen Swin Transformer backbone with a prompt-guided architecture, featuring cross-attentive adapters and lightweight task-specific MLP decoders. Experiments on the Open-Canopy benchmark demonstrate that SatelliteCalculator achieves competitive accuracy across all tasks while significantly reducing inference cost. Our results validate the feasibility of applying foundation models to quantitative inversion, and provide a scalable framework for task-adaptive remote sensing estimation.
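Building paired samples from a physically defined index formula can be sketched as follows. The abstract does not list the eight indicators, so the choice of NDVI (the widely used normalized difference vegetation index, computed from near-infrared and red reflectance) is an assumption for illustration, as are the function names and the toy reflectance values.

```python
def ndvi(nir, red):
    """Normalized difference vegetation index: (NIR - Red) / (NIR + Red)."""
    denom = nir + red
    # Guard against division by zero for pixels with no signal.
    return 0.0 if denom == 0 else (nir - red) / denom

def build_pairs(pixels):
    """Pair each (nir, red) input with its formula-derived target value."""
    return [((nir, red), ndvi(nir, red)) for nir, red in pixels]

# Hypothetical reflectance values in [0, 1].
pixels = [(0.5, 0.1), (0.3, 0.3), (0.0, 0.0)]
pairs = build_pairs(pixels)
```

Because the target is computed directly from the input bands, labels of this kind can be generated automatically at scale, which is the property the abstract relies on when it describes constructing over one million paired samples.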
