Mohd. Yamani Idna Idris

A Layered Self-Supervised Knowledge Distillation Framework for Efficient Multimodal Learning on the Edge

Jun 08, 2025

Abstract:We introduce the Layered Self-Supervised Knowledge Distillation (LSSKD) framework for training compact deep learning models. Unlike traditional methods that rely on pre-trained teacher networks, our approach appends auxiliary classifiers to intermediate feature maps, generating diverse self-supervised knowledge and enabling one-to-one transfer across different network stages. Our method achieves an average improvement of 4.54% over the state-of-the-art PS-KD method and a 1.14% gain over SSKD on CIFAR-100, with a 0.32% improvement on ImageNet compared to HASSKD. Experiments on Tiny ImageNet and CIFAR-100 under few-shot learning scenarios also achieve state-of-the-art results. These findings demonstrate the effectiveness of our approach in enhancing model generalization and performance without the need for large over-parameterized teacher networks. Importantly, all auxiliary classifiers can be removed at the inference stage, so they add no extra computational cost. This makes our model suitable for deploying small language models on affordable low-computing devices. Owing to its lightweight design and adaptability, our framework is particularly suitable for multimodal sensing and cyber-physical environments that require efficient and responsive inference. LSSKD facilitates the development of intelligent agents capable of learning from limited sensory data under weak supervision.
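The abstract does not spell out the training objective, so the following is only a minimal sketch of how auxiliary classifiers at successive stages could exchange knowledge without an external teacher. It assumes a generic self-distillation loss (cross-entropy per stage plus a temperature-scaled KL term in which each stage learns from the next deeper one). The function names, the `temperature` and `alpha` parameters, and the stage pairing are illustrative assumptions, not the LSSKD formulation.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax with the usual max-shift for stability."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, label):
    """Negative log-probability of the true class."""
    return -math.log(softmax(logits)[label])

def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def layered_distillation_loss(stage_logits, label, temperature=3.0, alpha=0.5):
    """Hypothetical layered loss (assumption, not the paper's objective).

    stage_logits: one logit vector per classifier, ordered shallow -> deep;
    the last entry plays the role of the final classifier.
    """
    # Every classifier, auxiliary or final, is supervised by the label.
    ce = sum(cross_entropy(logits, label) for logits in stage_logits)
    # One-to-one transfer: each stage mimics the next deeper stage.
    kd = 0.0
    for shallow, deep in zip(stage_logits, stage_logits[1:]):
        teacher = softmax(deep, temperature)
        student = softmax(shallow, temperature)
        kd += temperature ** 2 * kl_divergence(teacher, student)
    return (1 - alpha) * ce + alpha * kd

# Three stages, four classes, true class 2.
stages = [[0.1, 0.2, 0.9, 0.0], [0.0, 0.1, 1.8, -0.2], [-0.3, 0.0, 3.1, -0.5]]
loss = layered_distillation_loss(stages, label=2)
```

Because the auxiliary heads appear only in the loss, dropping them after training leaves the backbone and final classifier untouched, which matches the abstract's claim that inference incurs no extra cost.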

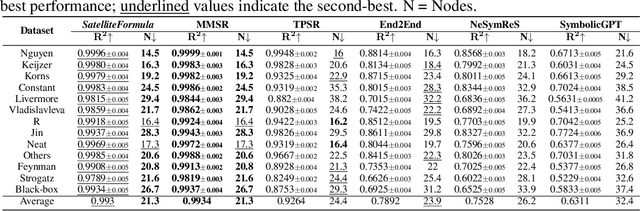

SatelliteFormula: Multi-Modal Symbolic Regression from Remote Sensing Imagery for Physics Discovery

Jun 06, 2025

Abstract:We propose SatelliteFormula, a novel symbolic regression framework that derives physically interpretable expressions directly from multi-spectral remote sensing imagery. Unlike traditional empirical indices or black-box learning models, SatelliteFormula combines a Vision Transformer-based encoder for spatial-spectral feature extraction with physics-guided constraints to ensure consistency and interpretability. Existing symbolic regression methods struggle with the high-dimensional complexity of multi-spectral data; our method addresses this by integrating transformer representations into a symbolic optimizer that balances accuracy and physical plausibility. Extensive experiments on benchmark datasets and remote sensing tasks demonstrate superior performance, stability, and generalization compared to state-of-the-art baselines. SatelliteFormula enables interpretable modeling of complex environmental variables, bridging the gap between data-driven learning and physical understanding.

SatelliteCalculator: A Multi-Task Vision Foundation Model for Quantitative Remote Sensing Inversion

Apr 18, 2025

Abstract:Quantitative remote sensing inversion plays a critical role in environmental monitoring, enabling the estimation of key ecological variables such as vegetation indices, canopy structure, and carbon stock. Although vision foundation models have achieved remarkable progress in classification and segmentation tasks, their application to physically interpretable regression remains largely unexplored. Furthermore, the multi-spectral nature and geospatial heterogeneity of remote sensing data pose significant challenges for generalization and transferability. To address these issues, we introduce SatelliteCalculator, the first vision foundation model tailored for quantitative remote sensing inversion. By leveraging physically defined index formulas, we automatically construct a large-scale dataset of over one million paired samples across eight core ecological indicators. The model integrates a frozen Swin Transformer backbone with a prompt-guided architecture, featuring cross-attentive adapters and lightweight task-specific MLP decoders. Experiments on the Open-Canopy benchmark demonstrate that SatelliteCalculator achieves competitive accuracy across all tasks while significantly reducing inference cost. Our results validate the feasibility of applying foundation models to quantitative inversion, and provide a scalable framework for task-adaptive remote sensing estimation.
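As a rough illustration of building paired samples from a physically defined index formula, the sketch below labels a small two-band patch with NDVI, computed as (NIR - Red) / (NIR + Red). The abstract does not list its eight indicators, so the choice of NDVI, the helper names `ndvi` and `make_paired_sample`, and the dictionary layout are assumptions made for this example only.

```python
def ndvi(nir, red, eps=1e-8):
    """Normalized Difference Vegetation Index for one pixel.

    eps guards against division by zero when both reflectances are 0.
    """
    return (nir - red) / (nir + red + eps)

def make_paired_sample(nir_band, red_band):
    """Pair the input bands with a per-pixel target derived from the formula.

    nir_band, red_band: 2D lists of reflectance values with the same shape.
    The returned layout is hypothetical, not the dataset's actual schema.
    """
    target = [
        [ndvi(n, r) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir_band, red_band)
    ]
    return {"inputs": {"nir": nir_band, "red": red_band}, "target": target}

# A 2x2 patch: dense vegetation reflects strongly in NIR and weakly in red.
nir_patch = [[0.5, 0.4], [0.3, 0.0]]
red_patch = [[0.1, 0.4], [0.6, 0.0]]
sample = make_paired_sample(nir_patch, red_patch)
```

Because the target is computed directly from the input bands, no manual annotation is needed, which is what makes automatic construction of a large paired dataset plausible.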
