Rethinking Importance Weighting for Deep Learning under Distribution Shift

Jun 08, 2020Tongtong Fang, Nan Lu, Gang Niu, Masashi Sugiyama

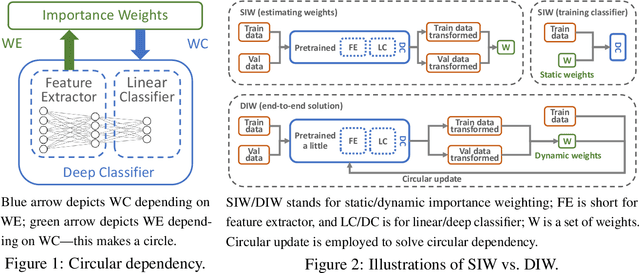

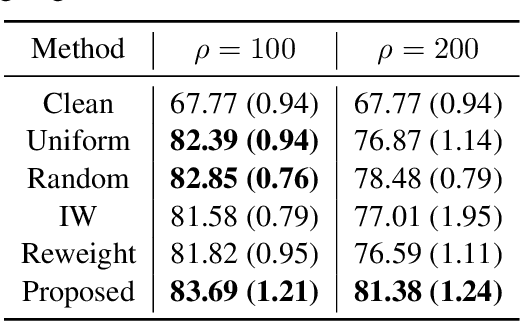

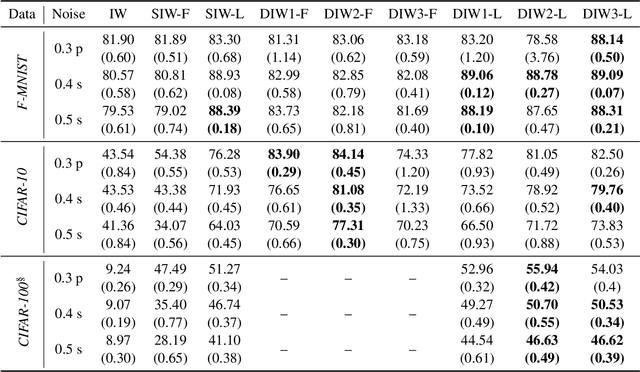

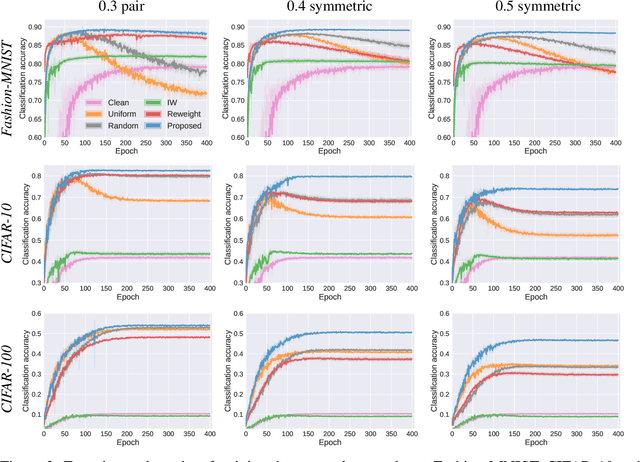

Under distribution shift (DS) where the training data distribution differs from the test one, a powerful technique is importance weighting (IW) which handles DS in two separate steps: weight estimation (WE) estimates the test-over-training density ratio and weighted classification (WC) trains the classifier from weighted training data. However, IW cannot work well on complex data, since WE is incompatible with deep learning. In this paper, we rethink IW and theoretically show it suffers from a circular dependency: we need not only WE for WC, but also WC for WE where a trained deep classifier is used as the feature extractor (FE). To cut off the dependency, we try to pretrain FE from unweighted training data, which leads to biased FE. To overcome the bias, we propose an end-to-end solution dynamic IW that iterates between WE and WC and combines them in a seamless manner, and hence our WE can also enjoy deep networks and stochastic optimizers indirectly. Experiments with two representative DSs on Fashion-MNIST and CIFAR-10/100 demonstrate that dynamic IW compares favorably with state-of-the-art methods.

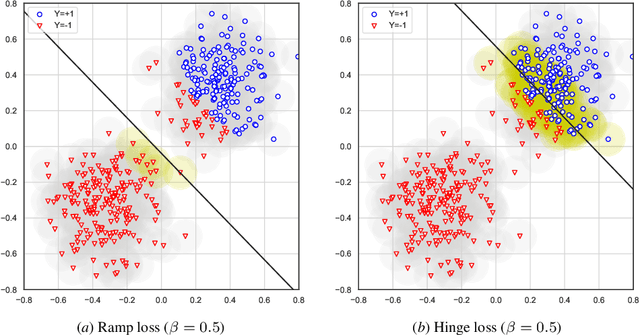

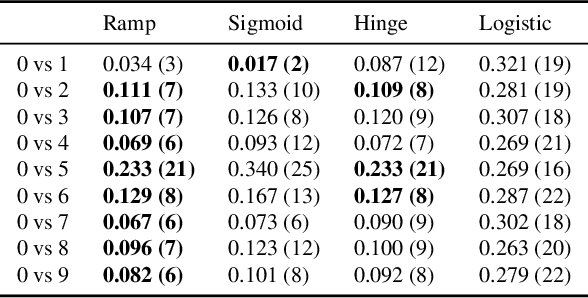

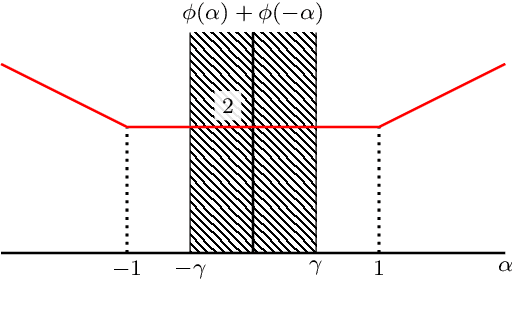

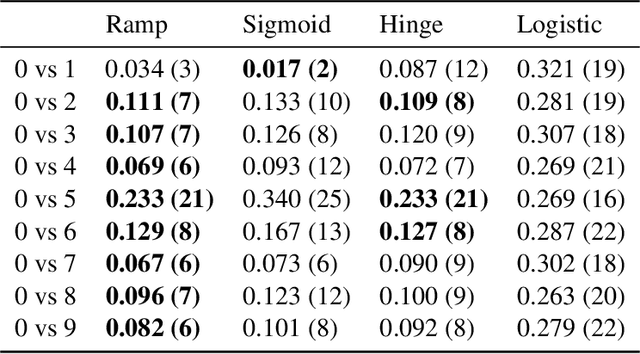

Calibrated Surrogate Losses for Adversarially Robust Classification

May 28, 2020Han Bao, Clayton Scott, Masashi Sugiyama

Adversarially robust classification seeks a classifier that is insensitive to adversarial perturbations of test patterns. This problem is often formulated via a minimax objective, where the target loss is the worst-case value of the 0-1 loss subject to a bound on the size of perturbation. Recent work has proposed convex surrogates for the adversarial 0-1 loss, in an effort to make optimization more tractable. In this work, we consider the question of which surrogate losses are calibrated with respect to the adversarial 0-1 loss, meaning that minimization of the former implies minimization of the latter. We show that no convex surrogate loss is calibrated with respect to the adversarial 0-1 loss when restricted to the class of linear models. We further introduce a class of nonconvex losses and offer necessary and sufficient conditions for losses in this class to be calibrated.

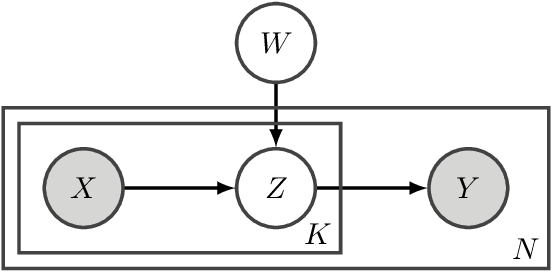

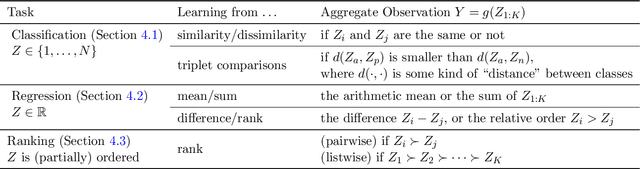

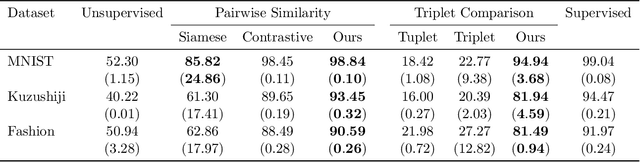

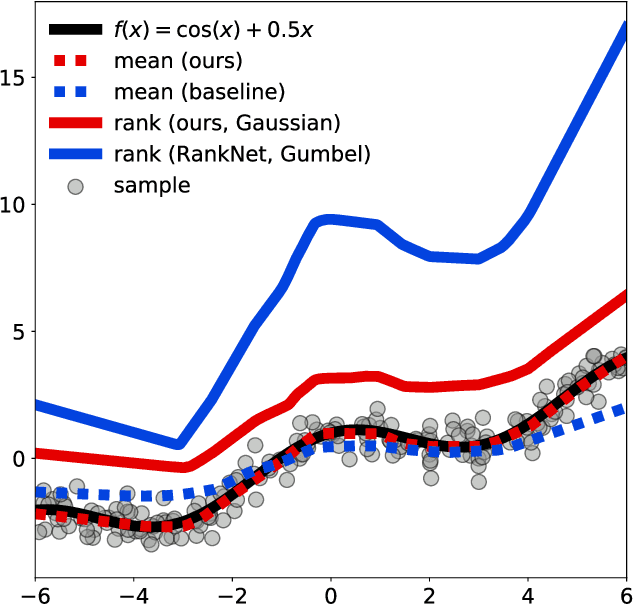

Learning from Aggregate Observations

Apr 14, 2020Yivan Zhang, Nontawat Charoenphakdee, Zhenguo Wu, Masashi Sugiyama

We study the problem of learning from aggregate observations where supervision signals are given to sets of instances instead of individual instances, while the goal is still to predict labels of unseen individuals. A well-known example is multiple instance learning (MIL). In this paper, we extend MIL beyond binary classification to other problems such as multiclass classification and regression. We present a probabilistic framework that is applicable to a variety of aggregate observations, e.g., pairwise similarity for classification and mean/difference/rank observation for regression. We propose a simple yet effective method based on the maximum likelihood principle, which can be simply implemented for various differentiable models such as deep neural networks and gradient boosting machines. Experiments on three novel problem settings -- classification via triplet comparison and regression via mean/rank observation indicate the effectiveness of the proposed method.

Do Public Datasets Assure Unbiased Comparisons for Registration Evaluation?

Mar 20, 2020Jie Luo, Guangshen Ma, Sarah Frisken, Parikshit Juvekar, Nazim Haouchine, Zhe Xu, Yiming Xiao, Alexandra Golby, Patrick Codd, Masashi Sugiyama, William Wells III

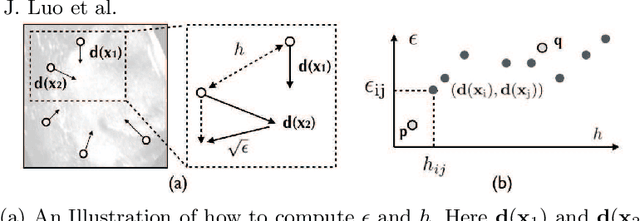

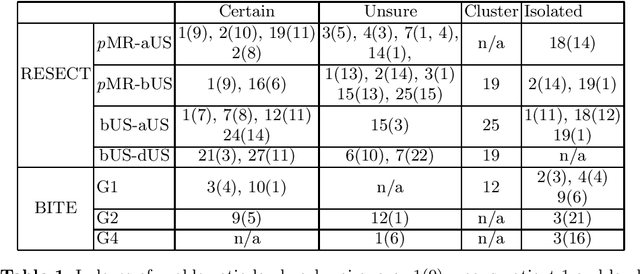

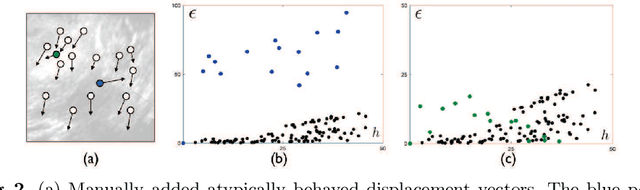

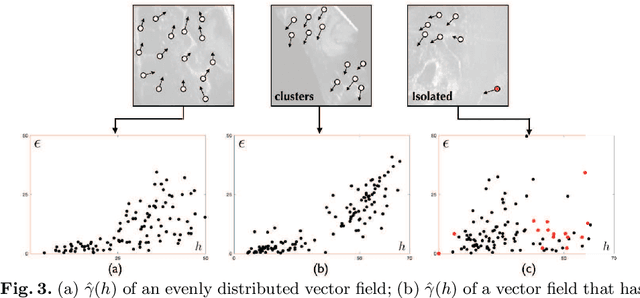

With the increasing availability of new image registration approaches, an unbiased evaluation is becoming more needed so that clinicians can choose the most suitable approaches for their applications. Current evaluations typically use landmarks in manually annotated datasets. As a result, the quality of annotations is crucial for unbiased comparisons. Even though most data providers claim to have quality control over their datasets, an objective third-party screening can be reassuring for intended users. In this study, we use the variogram to screen the manually annotated landmarks in two datasets used to benchmark registration in image-guided neurosurgeries. The variogram provides an intuitive 2D representation of the spatial characteristics of annotated landmarks. Using variograms, we identified potentially problematic cases and had them examined by experienced radiologists. We found that (1) a small number of annotations may have fiducial localization errors; (2) the landmark distribution for some cases is not ideal to offer fair comparisons. If unresolved, both findings could incur bias in registration evaluation.

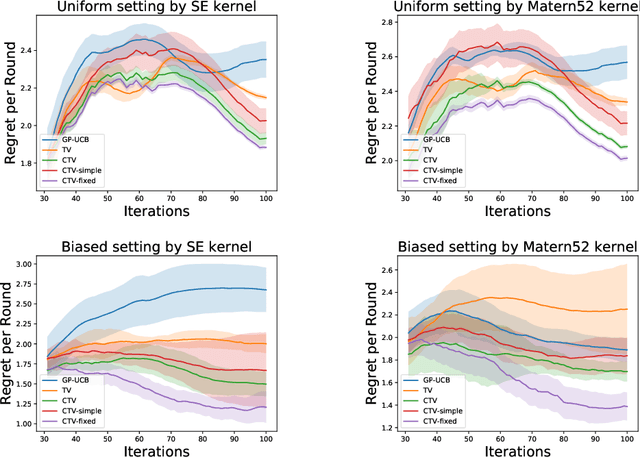

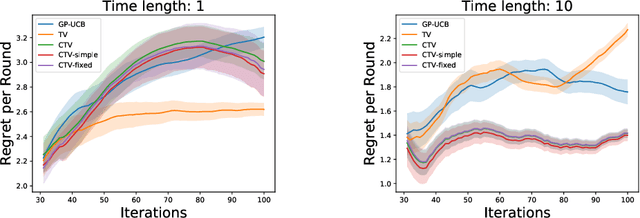

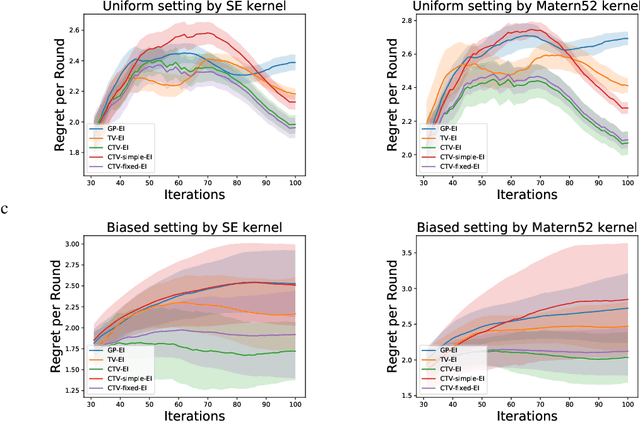

Time-varying Gaussian Process Bandit Optimization with Non-constant Evaluation Time

Mar 11, 2020Hideaki Imamura, Nontawat Charoenphakdee, Futoshi Futami, Issei Sato, Junya Honda, Masashi Sugiyama

The Gaussian process bandit is a problem in which we want to find a maximizer of a black-box function with the minimum number of function evaluations. If the black-box function varies with time, then time-varying Bayesian optimization is a promising framework. However, a drawback with current methods is in the assumption that the evaluation time for every observation is constant, which can be unrealistic for many practical applications, e.g., recommender systems and environmental monitoring. As a result, the performance of current methods can be degraded when this assumption is violated. To cope with this problem, we propose a novel time-varying Bayesian optimization algorithm that can effectively handle the non-constant evaluation time. Furthermore, we theoretically establish a regret bound of our algorithm. Our bound elucidates that a pattern of the evaluation time sequence can hugely affect the difficulty of the problem. We also provide experimental results to validate the practical effectiveness of the proposed method.

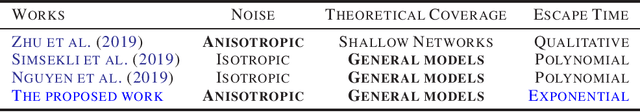

A Diffusion Theory for Deep Learning Dynamics: Stochastic Gradient Descent Escapes From Sharp Minima Exponentially Fast

Mar 05, 2020Zeke Xie, Issei Sato, Masashi Sugiyama

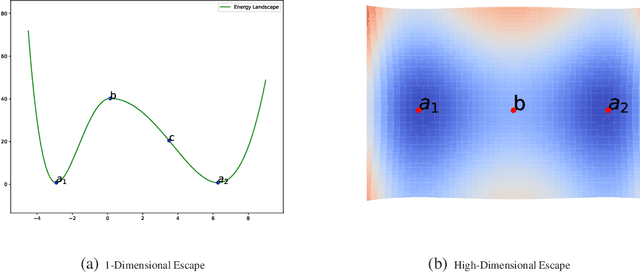

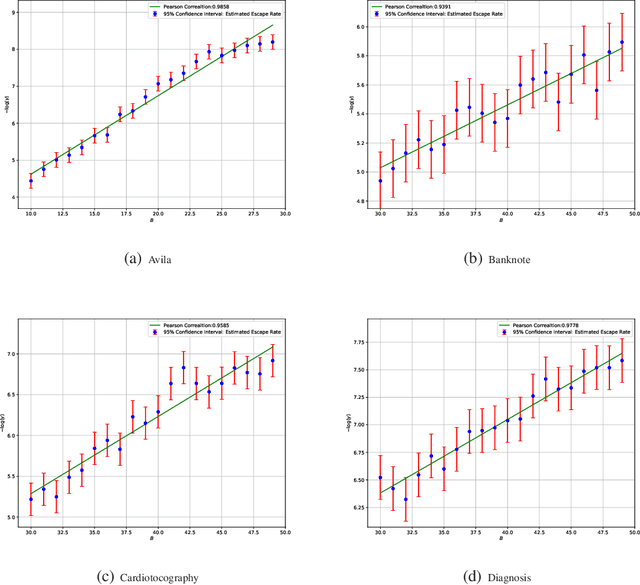

Stochastic optimization algorithms, such as Stochastic Gradient Descent (SGD) and its variants, are mainstream methods for training deep networks in practice. However, the theoretical mechanism behind gradient noise still remains to be further investigated. Deep learning is known to find flat minima with a large neighboring region in parameter space from which each weight vector has similar small error. In this paper, we focus on a fundamental problem in deep learning, "How can deep learning usually find flat minima among so many minima?" To answer the question, we develop a density diffusion theory (DDT) for revealing the fundamental dynamical mechanism of SGD and deep learning. More specifically, we study how escape time from loss valleys to the outside of valleys depends on minima sharpness, gradient noise and hyperparameters. One of the most interesting findings is that stochastic gradient noise from SGD can help escape from sharp minima exponentially faster than flat minima, while white noise can only help escape from sharp minima polynomially faster than flat minima. We also find large-batch training requires exponentially many iterations to pass through sharp minima and find flat minima. We present direct empirical evidence supporting the proposed theoretical results.

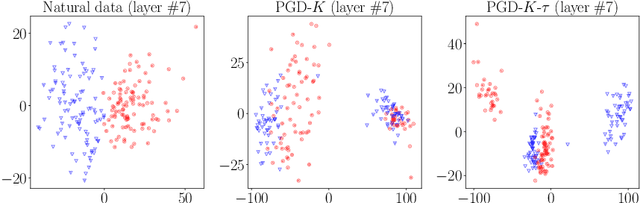

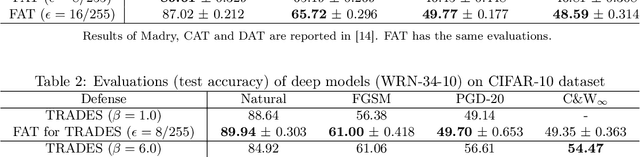

Attacks Which Do Not Kill Training Make Adversarial Learning Stronger

Feb 26, 2020Jingfeng Zhang, Xilie Xu, Bo Han, Gang Niu, Lizhen Cui, Masashi Sugiyama, Mohan Kankanhalli

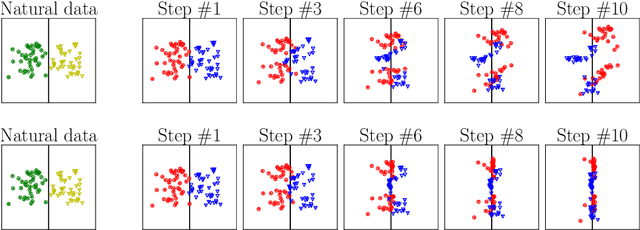

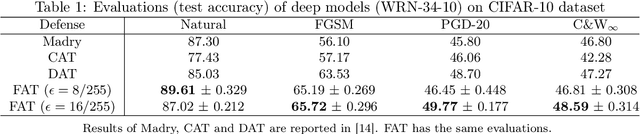

Adversarial training based on the minimax formulation is necessary for obtaining adversarial robustness of trained models. However, it is conservative or even pessimistic so that it sometimes hurts the natural generalization. In this paper, we raise a fundamental question---do we have to trade off natural generalization for adversarial robustness? We argue that adversarial training is to employ confident adversarial data for updating the current model. We propose a novel approach of friendly adversarial training (FAT): rather than employing most adversarial data maximizing the loss, we search for least adversarial (i.e., friendly adversarial) data minimizing the loss, among the adversarial data that are confidently misclassified. Our novel formulation is easy to implement by just stopping the most adversarial data searching algorithms such as PGD (projected gradient descent) early, which we call early-stopped PGD. Theoretically, FAT is justified by an upper bound of the adversarial risk. Empirically, early-stopped PGD allows us to answer the earlier question negatively---adversarial robustness can indeed be achieved without compromising the natural generalization.

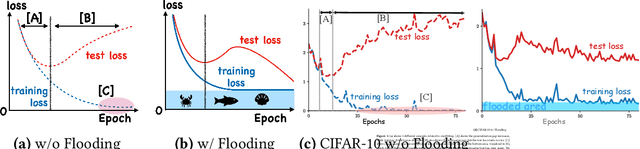

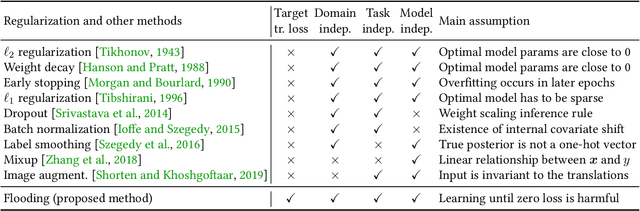

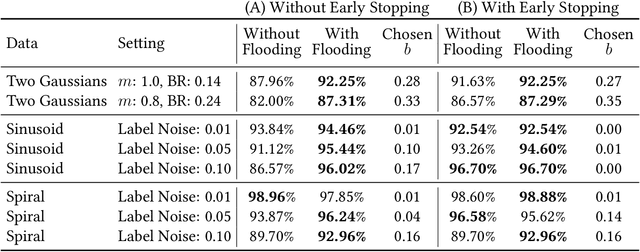

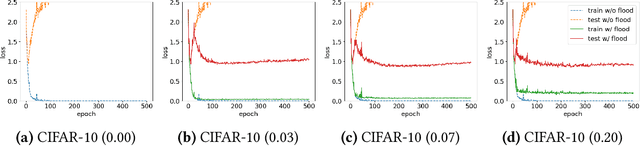

Do We Need Zero Training Loss After Achieving Zero Training Error?

Feb 20, 2020Takashi Ishida, Ikko Yamane, Tomoya Sakai, Gang Niu, Masashi Sugiyama

Overparameterized deep networks have the capacity to memorize training data with zero training error. Even after memorization, the training loss continues to approach zero, making the model overconfident and the test performance degraded. Since existing regularizers do not directly aim to avoid zero training loss, they often fail to maintain a moderate level of training loss, ending up with a too small or too large loss. We propose a direct solution called flooding that intentionally prevents further reduction of the training loss when it reaches a reasonably small value, which we call the flooding level. Our approach makes the loss float around the flooding level by doing mini-batched gradient descent as usual but gradient ascent if the training loss is below the flooding level. This can be implemented with one line of code, and is compatible with any stochastic optimizer and other regularizers. With flooding, the model will continue to "random walk" with the same non-zero training loss, and we expect it to drift into an area with a flat loss landscape that leads to better generalization. We experimentally show that flooding improves performance and as a byproduct, induces a double descent curve of the test loss.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge