Manish Sahu

An Endoscopic Chisel: Intraoperative Imaging Carves 3D Anatomical Models

Feb 19, 2024

Abstract:Purpose: Preoperative imaging plays a pivotal role in sinus surgery where CTs offer patient-specific insights into complex anatomy, enabling real-time intraoperative navigation to complement endoscopy imaging. However, surgery elicits anatomical changes not represented in the preoperative model, generating an inaccurate basis for navigation during surgery progression. Methods: We propose a first vision-based approach to update the preoperative 3D anatomical model leveraging intraoperative endoscopic video for navigated sinus surgery where relative camera poses are known. We rely on comparisons of intraoperative monocular depth estimates and preoperative depth renders to identify modified regions. The new depths are integrated in these regions through volumetric fusion in a truncated signed distance function representation to generate an intraoperative 3D model that reflects tissue manipulation. Results: We quantitatively evaluate our approach by sequentially updating models for a five-step surgical progression in an ex vivo specimen. We compute the error between correspondences from the updated model and ground-truth intraoperative CT in the region of anatomical modification. The resulting models show a decrease in error during surgical progression, whereas the error increases when no update is applied. Conclusion: Our findings suggest that preoperative 3D anatomical models can be updated using intraoperative endoscopy video in navigated sinus surgery. Future work will investigate improvements to monocular depth estimation as well as removing the need for external navigation systems. The resulting ability to continuously update the patient model may provide surgeons with a more precise understanding of the current anatomical state and paves the way toward a digital twin paradigm for sinus surgery.
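The two steps named in this abstract (comparing depths to find modified regions, then fusing new depths into a truncated signed distance function) can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the absolute-difference threshold, the running-average weights, and the simplified orthographic camera whose viewing axis is aligned with the voxel grid are all assumptions made for illustration.

```python
import numpy as np

def modified_pixels(depth_intraop, depth_preop, threshold=1.0):
    """Flag pixels whose intraoperative depth differs from the preoperative
    render by more than `threshold` (hypothetical criterion, same units as depth)."""
    valid = np.isfinite(depth_intraop) & np.isfinite(depth_preop)
    diff = np.abs(np.where(valid, depth_intraop - depth_preop, 0.0))
    return valid & (diff > threshold)

def fuse_depth_into_tsdf(tsdf, weight, depth, pixel_mask, z_centers,
                         trunc=2.0, obs_weight=1.0):
    """Weighted running-average TSDF update, restricted to flagged pixels.

    Assumes an orthographic camera looking along the last grid axis, so
    voxel (h, w, k) lies on the ray of pixel (h, w) at depth z_centers[k].
    """
    # Projective signed distance: positive in front of the observed surface.
    sdf = depth[..., None] - z_centers[None, None, :]
    # Update only flagged rays, and skip voxels far behind the surface.
    update = pixel_mask[..., None] & (sdf >= -trunc)
    d = np.clip(sdf / trunc, -1.0, 1.0)
    new_weight = weight + obs_weight * update
    fused = (weight * tsdf + obs_weight * d) / np.maximum(new_weight, 1e-12)
    return np.where(update, fused, tsdf), np.where(update, new_weight, weight)

# Toy example: a flat surface at depth 5 with one pixel carved back to depth 8.
z_centers = np.arange(0.5, 10.0, 1.0)
preop = np.full((2, 2), 5.0)
intraop = preop.copy()
intraop[0, 0] = 8.0
tsdf = np.clip((preop[..., None] - z_centers) / 2.0, -1.0, 1.0)
weight = np.ones_like(tsdf)
mask = modified_pixels(intraop, preop)
tsdf_new, weight_new = fuse_depth_into_tsdf(tsdf, weight, intraop, mask, z_centers)
```

Restricting the update to flagged rays leaves the rest of the preoperative model untouched, which matches the abstract's description of integrating new depths only in modified regions.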

Beyond the Manual Touch: Situational-aware Force Control for Increased Safety in Robot-assisted Skullbase Surgery

Jan 22, 2024

Abstract:Purpose - Skullbase surgery demands exceptional precision when removing bone in the lateral skull base. Robotic assistance can alleviate the effect of human sensory-motor limitations. However, the stiffness and inertia of the robot can significantly impact the surgeon's perception and control of the tool-to-tissue interaction forces. Methods - We present a situational-aware force control technique aimed at regulating interaction forces during robot-assisted skullbase drilling. The contextual interaction information derived from the digital twin environment is used to enhance sensory perception and suppress undesired high forces. Results - To validate our approach, we conducted initial feasibility experiments involving one medical student and two engineering students. The experiment focused on further drilling around critical structures following cortical mastoidectomy. The experimental results demonstrate that robotic assistance coupled with our proposed control scheme effectively limited undesired interaction forces when compared to robotic assistance without the proposed force control. Conclusions - The proposed force control techniques show promise in significantly reducing undesired interaction forces during robot-assisted skullbase surgery. These findings contribute to the ongoing efforts to enhance surgical precision and safety in complex procedures involving the lateral skull base.

Haptic-Assisted Collaborative Robot Framework for Improved Situational Awareness in Skull Base Surgery

Jan 22, 2024

Abstract:Skull base surgery is a demanding field in which surgeons operate in and around the skull while avoiding critical anatomical structures including nerves and vasculature. While image-guided surgical navigation is the prevailing standard, limitations remain, including the need for personalized planning and the irreplaceable role of a skilled surgeon. This paper presents a collaboratively controlled robotic system tailored for assisted drilling in skull base surgery. Our central hypothesis posits that this collaborative system, enriched with haptic assistive modes to enforce virtual fixtures, holds the potential to significantly enhance surgical safety, streamline efficiency, and alleviate the physical demands on the surgeon. The paper describes the intricate system development work required to enable these virtual fixtures through haptic assistive modes. To validate our system's performance and effectiveness, we conducted initial feasibility experiments involving a medical student and two experienced surgeons. The experiment focused on drilling around critical structures following cortical mastoidectomy, using dental stone phantom and cadaveric models. Our experimental results demonstrate that our proposed haptic feedback mechanism enhances the safety of drilling around critical structures compared to systems lacking haptic assistance. With the aid of our system, surgeons were able to safely skeletonize the critical structures without breaching any critical structure, even under an obstructed view of the surgical site.

Integrating 3D Slicer with a Dynamic Simulator for Situational Aware Robotic Interventions

Jan 22, 2024

Abstract:Image-guided robotic interventions represent a transformative frontier in surgery, blending advanced imaging and robotics for improved precision and outcomes. This paper addresses the critical need for integrating open-source platforms to enhance situational awareness in image-guided robotic research. We present an open-source toolset that seamlessly combines a physics-based constraint formulation framework, AMBF, with a state-of-the-art imaging platform application, 3D Slicer. Our toolset facilitates the creation of highly customizable interactive digital twins that incorporate processing and visualization of medical imaging, robot kinematics, and scene dynamics for real-time robot control. Through a feasibility study, we showcase real-time synchronization of a physical robotic interventional environment in both 3D Slicer and AMBF, highlighting low-latency updates and improved visualization.

A Quantitative Evaluation of Dense 3D Reconstruction of Sinus Anatomy from Monocular Endoscopic Video

Oct 22, 2023

Abstract:Generating accurate 3D reconstructions from endoscopic video is a promising avenue for longitudinal radiation-free analysis of sinus anatomy and surgical outcomes. Several methods for monocular reconstruction have been proposed, yielding visually pleasant 3D anatomical structures by retrieving relative camera poses with structure-from-motion-type algorithms and fusion of monocular depth estimates. However, due to the complex properties of the underlying algorithms and endoscopic scenes, the reconstruction pipeline may perform poorly or fail unexpectedly. Further, acquiring medical data conveys additional challenges, presenting difficulties in quantitatively benchmarking these models, understanding failure cases, and identifying critical components that contribute to their precision. In this work, we perform a quantitative analysis of a self-supervised approach for sinus reconstruction using endoscopic sequences paired with optical tracking and high-resolution computed tomography acquired from nine ex-vivo specimens. Our results show that the generated reconstructions are in high agreement with the anatomy, yielding an average point-to-mesh error of 0.91 mm between reconstructions and CT segmentations. However, in a point-to-point matching scenario, relevant for endoscope tracking and navigation, we found average target registration errors of 6.58 mm. We identified that pose and depth estimation inaccuracies contribute equally to this error and that locally consistent sequences with shorter trajectories generate more accurate reconstructions. These results suggest that achieving global consistency between relative camera poses and estimated depths with the anatomy is essential. In doing so, we can ensure proper synergy between all components of the pipeline for improved reconstructions that will facilitate clinical application of this innovative technology.
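For readers unfamiliar with the point-to-point metric mentioned in this abstract, a target registration error is commonly computed as the mean Euclidean distance between corresponding points. The sketch below assumes that definition and uses a hypothetical function name; the abstract does not state how the authors computed it.

```python
import numpy as np

def target_registration_error(points_est, points_ref):
    """Mean Euclidean distance between corresponding 3D points.

    Both inputs are (N, 3) arrays in the same coordinate frame and units,
    with row i of one array corresponding to row i of the other.
    """
    points_est = np.asarray(points_est, dtype=float)
    points_ref = np.asarray(points_ref, dtype=float)
    distances = np.linalg.norm(points_est - points_ref, axis=1)
    return float(distances.mean())

# Toy example with two correspondences (values are made up).
tre = target_registration_error([[0, 0, 0], [1, 0, 0]],
                                [[3, 4, 0], [1, 0, 0]])
```

Unlike a point-to-mesh distance, this metric penalizes points that lie on the correct surface but at the wrong location, which is one reason the two numbers in the abstract can differ so much.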

A force-sensing surgical drill for real-time force feedback in robotic mastoidectomy

Apr 05, 2023

Abstract:Purpose: Robotic assistance in otologic surgery can reduce the task load of operating surgeons during the removal of bone around the critical structures in the lateral skull base. However, safe deployment into the anatomical passageways necessitates the development of advanced sensing capabilities to actively limit the interaction forces between the surgical tools and critical anatomy. Methods: We introduce a surgical drill equipped with a force sensor that is capable of measuring accurate tool-tissue interaction forces to enable force control and feedback to surgeons. The design, calibration and validation of the force-sensing surgical drill mounted on a cooperatively controlled surgical robot are described in this work. Results: The force measurements on the tip of the surgical drill are validated with raw-egg drilling experiments, where a force sensor mounted below the egg serves as ground truth. The average root mean square errors (RMSE) for the point and path drilling experiments are 41.7 (± 12.2) mN and 48.3 (± 13.7) mN, respectively. Conclusions: The force-sensing prototype measures forces with sub-millinewton resolution and the results demonstrate that the calibrated force-sensing drill generates accurate force measurements with minimal error compared to the measured drill forces. The development of such sensing capabilities is crucial for the safe use of robotic systems in a clinical context.
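An average RMSE with a spread, as reported in this abstract, can be obtained by computing one RMSE per trial against the ground-truth sensor and then summarizing across trials. The sketch below assumes that procedure and a sample standard deviation; the function names are hypothetical and the abstract does not say which spread measure was used.

```python
import numpy as np

def rmse(measured, reference):
    """Root mean square error between two equally sampled force signals."""
    measured = np.asarray(measured, dtype=float)
    reference = np.asarray(reference, dtype=float)
    return float(np.sqrt(np.mean((measured - reference) ** 2)))

def summarize_rmse(trials):
    """Mean and sample standard deviation of per-trial RMSE values.

    `trials` is a sequence of (measured, reference) signal pairs;
    at least two trials are needed for the sample standard deviation.
    """
    values = np.array([rmse(m, r) for m, r in trials])
    return float(values.mean()), float(values.std(ddof=1))

# Toy example with two made-up trials (units follow the inputs, e.g. mN).
mean_rmse, std_rmse = summarize_rmse([
    ([1.0, 1.0], [0.0, 0.0]),
    ([3.0, 3.0], [0.0, 0.0]),
])
```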

TAToo: Vision-based Joint Tracking of Anatomy and Tool for Skull-base Surgery

Dec 29, 2022

Abstract:Purpose: Tracking the 3D motion of the surgical tool and the patient anatomy is a fundamental requirement for computer-assisted skull-base surgery. The estimated motion can be used both for intra-operative guidance and for downstream skill analysis. Recovering such motion solely from surgical videos is desirable, as it is compliant with current clinical workflows and instrumentation. Methods: We present Tracker of Anatomy and Tool (TAToo). TAToo jointly tracks the rigid 3D motion of patient skull and surgical drill from stereo microscopic videos. TAToo estimates motion via an iterative optimization process in an end-to-end differentiable form. For robust tracking performance, TAToo adopts a probabilistic formulation and enforces geometric constraints on the object level. Results: We validate TAToo on both simulation data, where ground truth motion is available, as well as on anthropomorphic phantom data, where optical tracking provides a strong baseline. We report sub-millimeter and millimeter inter-frame tracking accuracy for skull and drill, respectively, with rotation errors below 1°. We further illustrate how TAToo may be used in a surgical navigation setting. Conclusion: We present TAToo, which simultaneously tracks the surgical tool and the patient anatomy in skull-base surgery. TAToo directly predicts the motion from surgical videos, without the need for any markers. Our results show that the performance of TAToo compares favorably to competing approaches. Future work will include fine-tuning of our depth network to reach a 1 mm clinical accuracy goal desired for surgical applications in the skull base.

Twin-S: A Digital Twin for Skull-base Surgery

Nov 21, 2022

Abstract:Purpose: Digital twins are virtual interactive models of the real world, exhibiting identical behavior and properties. In surgical applications, computational analysis from digital twins can be used, for example, to enhance situational awareness. Methods: We present a digital twin framework for skull-base surgeries, named Twin-S, which can be integrated within various image-guided interventions seamlessly. Twin-S combines high-precision optical tracking and real-time simulation. We rely on rigorous calibration routines to ensure that the digital twin representation precisely mimics all real-world processes. Twin-S models and tracks the critical components of skull-base surgery, including the surgical tool, patient anatomy, and surgical camera. Significantly, Twin-S updates and reflects real-world drilling of the anatomical model at frame rate. Results: We extensively evaluate the accuracy of Twin-S, which achieves an average 1.39 mm error during the drilling process. We further illustrate how segmentation masks derived from the continuously updated digital twin can augment the surgical microscope view in a mixed reality setting, where bone requiring ablation is highlighted to provide surgeons with additional situational awareness. Conclusion: We present Twin-S, a digital twin environment for skull-base surgery. Twin-S tracks and updates the virtual model in real time given measurements from modern tracking technologies. Future research on complementing optical tracking with higher-precision vision-based approaches may further increase the accuracy of Twin-S.

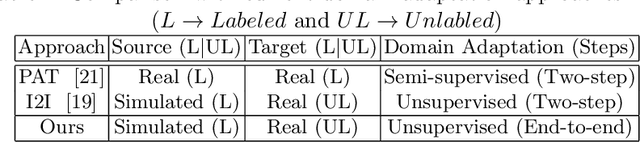

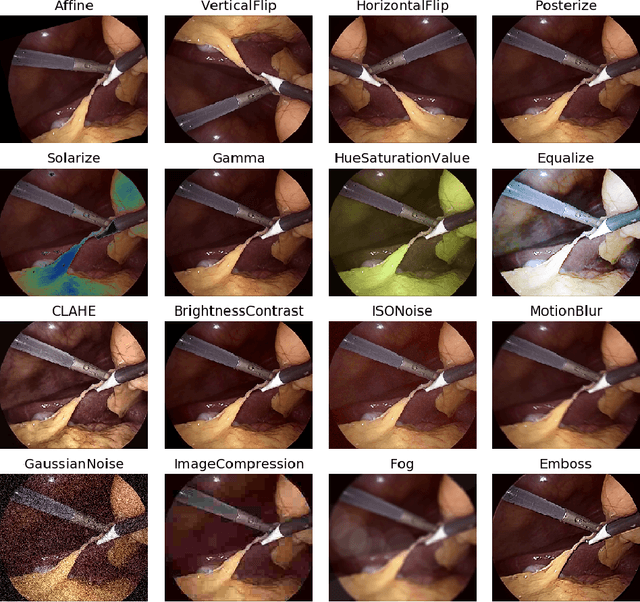

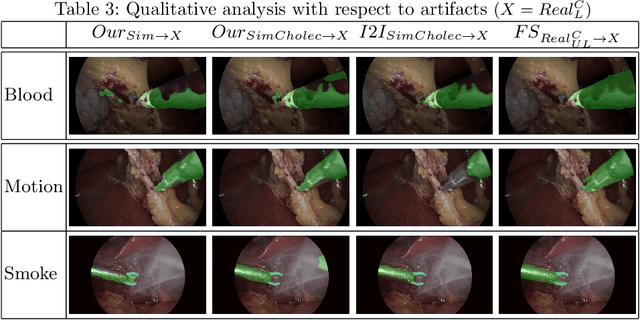

Simulation-to-Real domain adaptation with teacher-student learning for endoscopic instrument segmentation

Mar 02, 2021

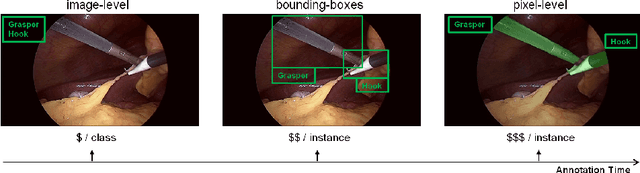

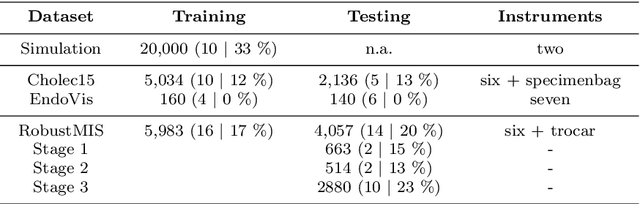

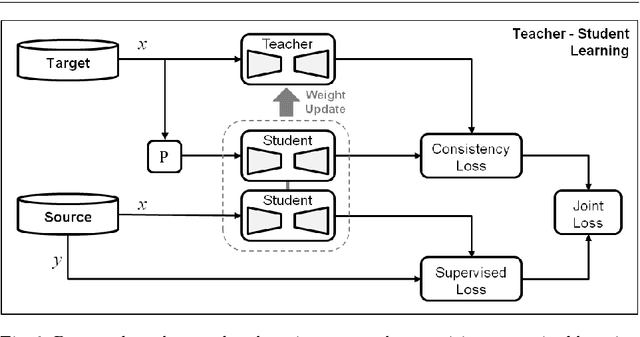

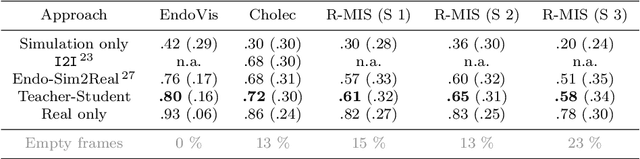

Abstract:Purpose: Segmentation of surgical instruments in endoscopic videos is essential for automated surgical scene understanding and process modeling. However, relying on fully supervised deep learning for this task is challenging because manual annotation takes up the valuable time of clinical experts. Methods: We introduce a teacher-student learning approach that learns jointly from annotated simulation data and unlabeled real data to tackle the erroneous learning problem of the current consistency-based unsupervised domain adaptation framework. Results: Empirical results on three datasets highlight the effectiveness of the proposed framework over current approaches for the endoscopic instrument segmentation task. Additionally, we provide analysis of major factors affecting the performance on all datasets to highlight the strengths and failure modes of our approach. Conclusion: We show that our proposed approach can successfully exploit the unlabeled real endoscopic video frames and improve generalization performance over pure simulation-based training and the previous state-of-the-art. This takes us one step closer to effective segmentation of surgical tools in the annotation-scarce setting.

Endo-Sim2Real: Consistency learning-based domain adaptation for instrument segmentation

Jul 22, 2020

Abstract:Surgical tool segmentation in endoscopic videos is an important component of computer-assisted intervention systems. The recent success of image-based solutions using fully supervised deep learning approaches can be attributed to the collection of big labeled datasets. However, the annotation of a big dataset of real videos can be prohibitively expensive and time consuming. Computer simulations could alleviate the manual labeling problem; however, models trained on simulated data do not generalize to real data. This work proposes a consistency-based framework for joint learning of simulated and real (unlabeled) endoscopic data to bridge this performance generalization issue. Empirical results on two data sets (15 videos of the Cholec80 and EndoVis'15 dataset) highlight the effectiveness of the proposed Endo-Sim2Real method for instrument segmentation. We compare the segmentation of the proposed approach with state-of-the-art solutions and show that our method improves segmentation both qualitatively and quantitatively.
