Liang Zhao

School of Computer Science, Shenyang Aerospace University

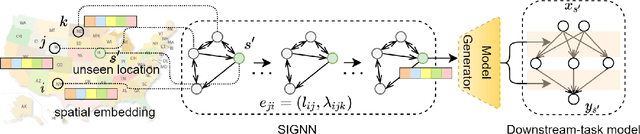

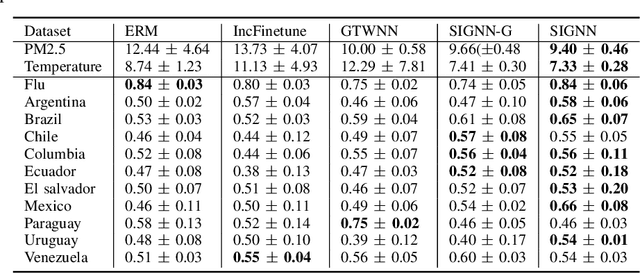

Deep Spatial Domain Generalization

Oct 03, 2022

Abstract: Spatial autocorrelation and spatial heterogeneity widely exist in spatial data, which makes traditional machine learning models perform poorly. Spatial domain generalization is a spatial extension of domain generalization, which can generalize to unseen spatial domains in continuous 2D space. Specifically, it learns a model under varying data distributions that generalizes to unseen domains. Although tremendous success has been achieved in domain generalization, there exist very few works on spatial domain generalization. The advancement of this area is challenged by: 1) difficulty in characterizing spatial heterogeneity, and 2) difficulty in obtaining predictive models for unseen locations without training data. To address these challenges, this paper proposes a generic framework for spatial domain generalization. Specifically, we develop the spatial interpolation graph neural network, which handles spatial data as a graph and learns the spatial embedding on each node and their relationships. The spatial interpolation graph neural network infers the spatial embedding of an unseen location during the test phase. Then the spatial embedding of the target location is used to decode the parameters of the downstream-task model directly on the target location. Finally, extensive experiments on thirteen real-world datasets demonstrate the proposed method's strength.

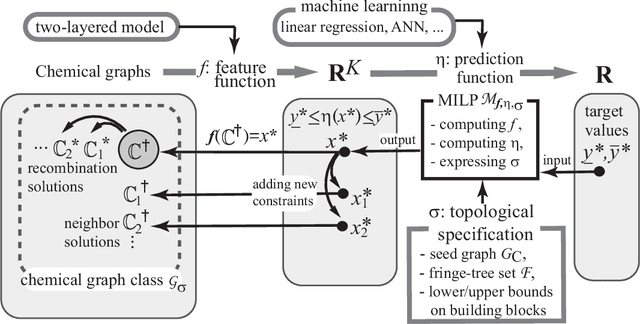

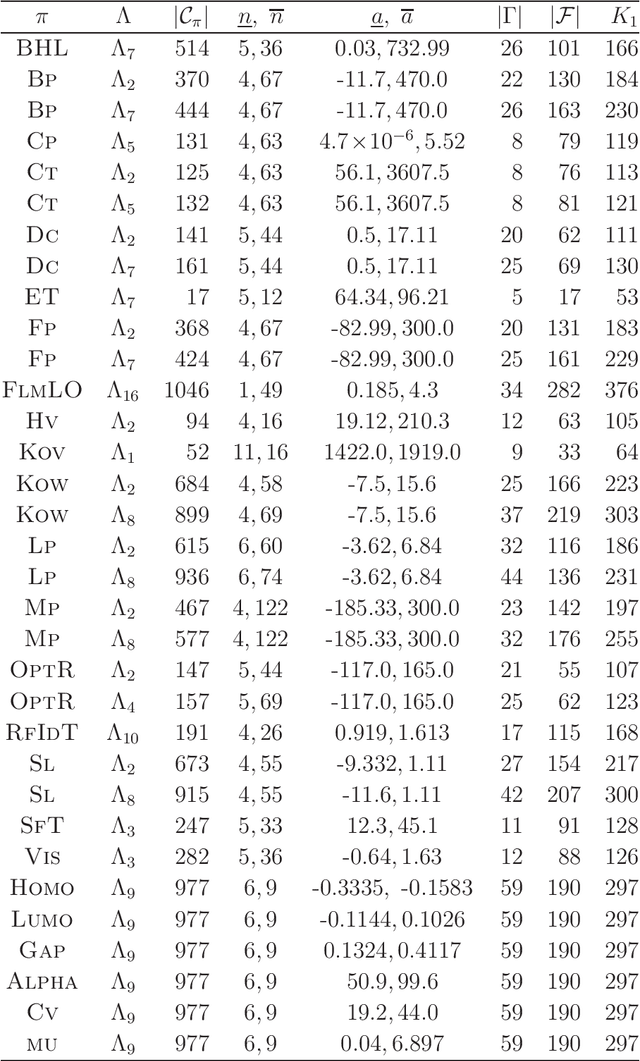

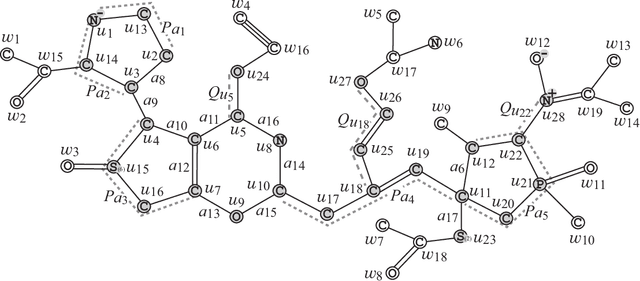

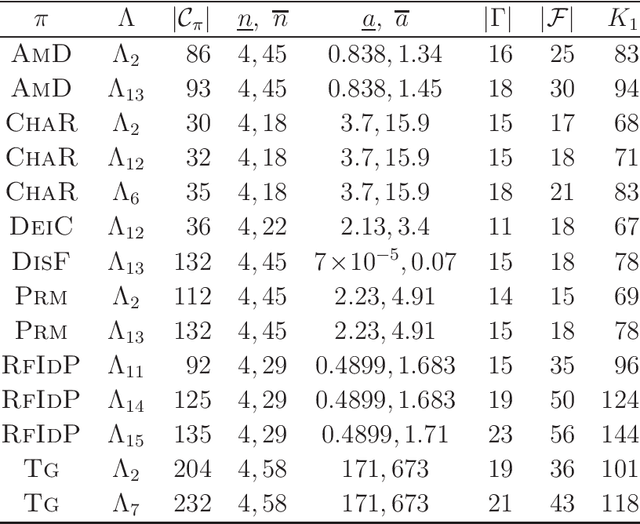

Molecular Design Based on Integer Programming and Quadratic Descriptors in a Two-layered Model

Sep 13, 2022

Abstract: A novel framework has recently been proposed for designing the molecular structure of chemical compounds with a desired chemical property, where design of novel drugs is an important topic in bioinformatics and chemo-informatics. The framework infers a desired chemical graph by solving a mixed integer linear program (MILP) that simulates the computation process of a feature function defined by a two-layered model on chemical graphs and a prediction function constructed by a machine learning method. A set of graph theoretical descriptors in the feature function plays a key role in deriving a compact formulation of such an MILP. To improve the learning performance of prediction functions in the framework while maintaining the compactness of the MILP, this paper utilizes the product of two of those descriptors as a new descriptor and then designs a method of reducing the number of descriptors. The results of our computational experiments suggest that the proposed method improved the learning performance for many chemical properties and can infer a chemical structure with up to 50 non-hydrogen atoms.

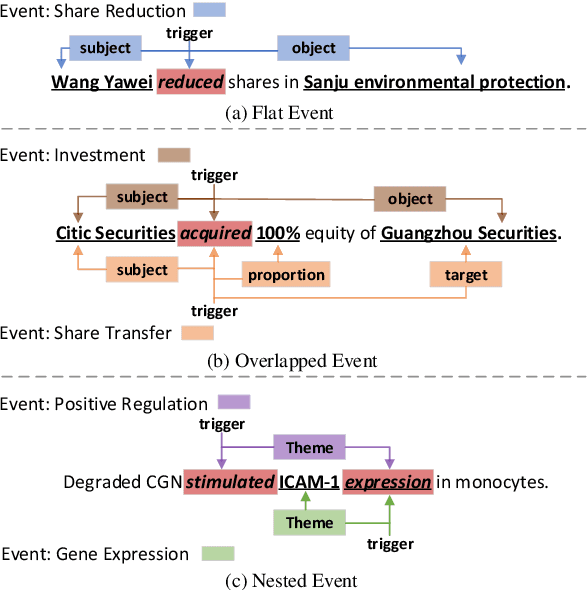

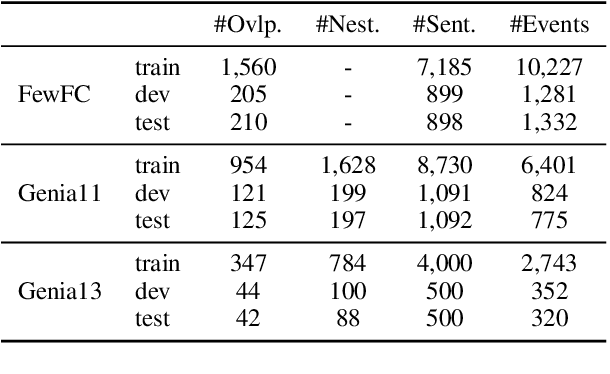

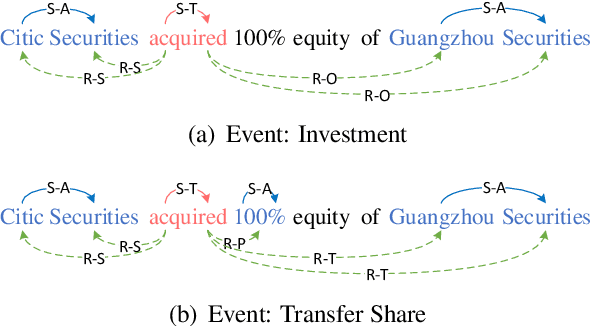

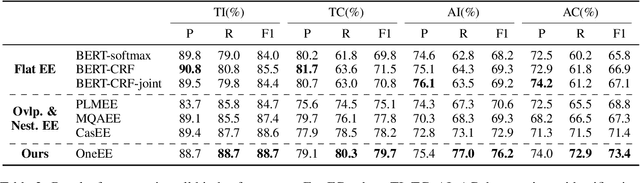

OneEE: A One-Stage Framework for Fast Overlapping and Nested Event Extraction

Sep 06, 2022

Abstract: Event extraction (EE) is an essential task of information extraction, which aims to extract structured event information from unstructured text. Most prior work focuses on extracting flat events while neglecting overlapped or nested ones. A few models for overlapped and nested EE include several successive stages to extract event triggers and arguments, which suffer from error propagation. Therefore, we design a simple yet effective tagging scheme and model to formulate EE as word-word relation recognition, called OneEE. The relations between trigger or argument words are simultaneously recognized in one stage with parallel grid tagging, thus yielding a very fast event extraction speed. The model is equipped with an adaptive event fusion module to generate event-aware representations and a distance-aware predictor to integrate relative distance information for word-word relation recognition, which are empirically demonstrated to be effective mechanisms. Experiments on 3 overlapped and nested EE benchmarks, namely FewFC, Genia11, and Genia13, show that OneEE achieves state-of-the-art (SOTA) results. Moreover, the inference speed of OneEE is faster than that of the baselines under the same conditions, and can be further substantially improved since it supports parallel inference.

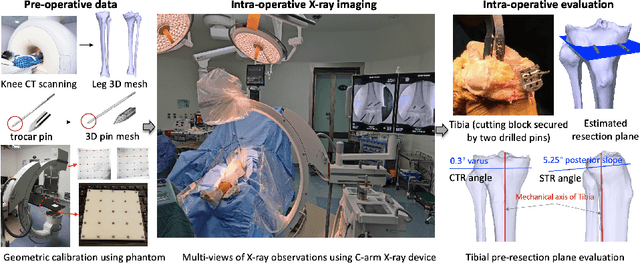

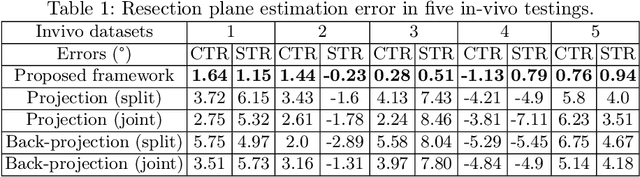

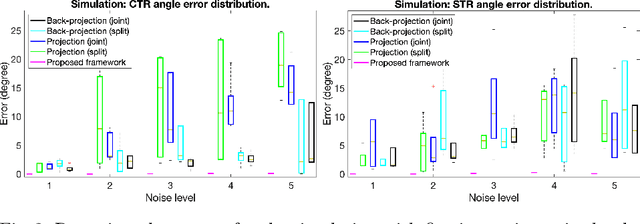

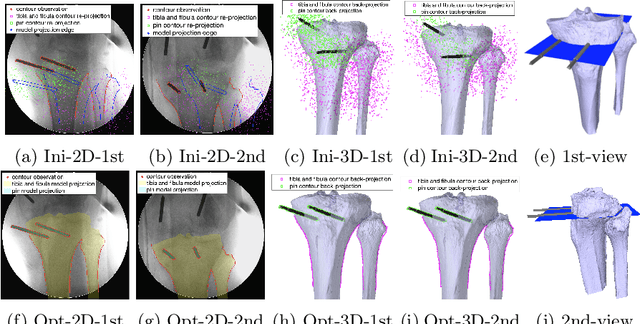

SLAM-TKA: Real-time Intra-operative Measurement of Tibial Resection Plane in Conventional Total Knee Arthroplasty

Aug 08, 2022

Abstract: Total knee arthroplasty (TKA) is a common orthopaedic surgery to replace a damaged knee joint with artificial implants. Failing to achieve the planned implant position can lead to aseptic loosening of implant components, wear, and even joint revision, and in conventional jig-based TKA (CON-TKA) those failures occur most often on the tibial side. This study aims to precisely evaluate the accuracy of the proximal tibial resection plane intra-operatively and in real time, such that the evaluation changes the CON-TKA operative procedure very little. Two X-ray radiographs captured during the proximal tibial resection phase, together with a pre-operative patient-specific tibia 3D mesh model segmented from computed tomography (CT) scans and a trocar pin 3D mesh model, are used in the proposed simultaneous localisation and mapping (SLAM) system to estimate the proximal tibial resection plane. Validations using both simulation and in-vivo datasets are performed to demonstrate the robustness and the potential clinical value of the proposed algorithm.

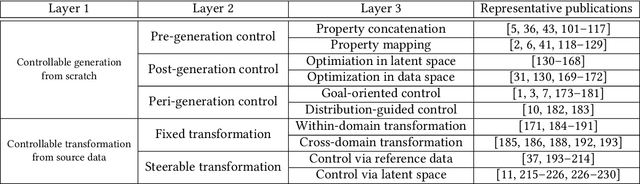

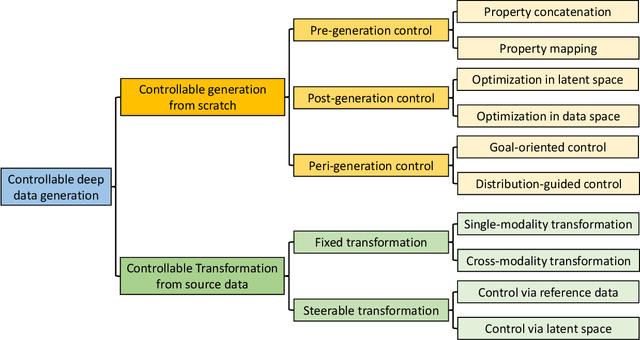

Controllable Data Generation by Deep Learning: A Review

Jul 25, 2022

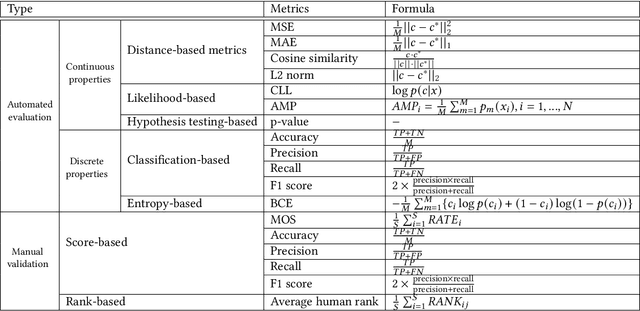

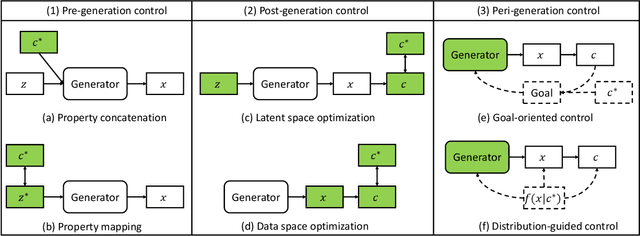

Abstract: Designing and generating new data under targeted properties has been attracting attention in various critical applications such as molecule design, image editing and speech synthesis. Traditional hand-crafted approaches rely heavily on expert experience and intensive human effort, yet still suffer from insufficient scientific knowledge and low throughput to support effective and efficient data generation. Recently, the advancement of deep learning has produced expressive methods that can learn the underlying representation and properties of data. Such capability provides new opportunities for figuring out the mutual relationship between the structural patterns and functional properties of the data and for leveraging that relationship to generate structural data given the desired properties. This article provides a systematic review of this promising research area, commonly known as controllable deep data generation. Firstly, the potential challenges are raised and preliminaries are provided. Then controllable deep data generation is formally defined, a taxonomy of various techniques is proposed and the evaluation metrics in this specific domain are summarized. After that, exciting applications of controllable deep data generation are introduced and existing works are experimentally analyzed and compared. Finally, the promising future directions of controllable deep data generation are highlighted and five potential challenges are identified.

Two-Stage Fine-Tuning: A Novel Strategy for Learning Class-Imbalanced Data

Jul 22, 2022

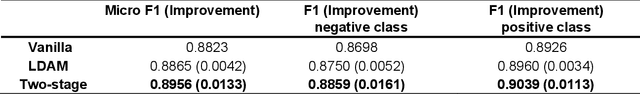

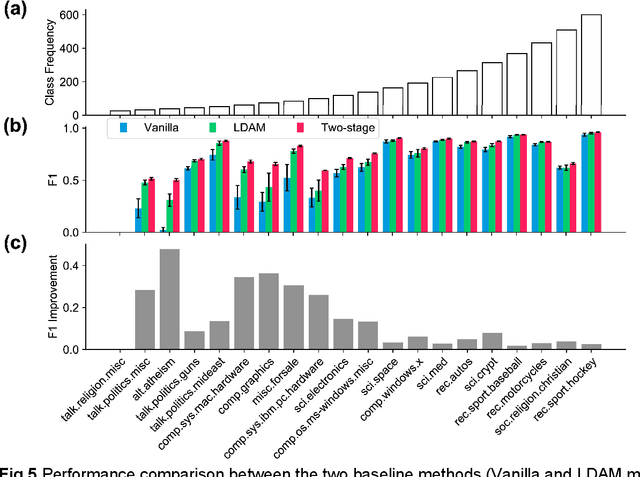

Abstract: Classification on long-tailed distributed data is a challenging problem, which suffers from serious class imbalance and hence poor performance on tail classes with only a few samples. Owing to this paucity of samples, learning on the tail classes is especially challenging for fine-tuning when transferring a pretrained model to a downstream task. In this work, we present a simple modification of standard fine-tuning to cope with these challenges. Specifically, we propose a two-stage fine-tuning: we first fine-tune the final layer of the pretrained model with a class-balanced reweighting loss, and then we perform the standard fine-tuning. Our modification has several benefits: (1) it leverages pretrained representations by only fine-tuning a small portion of the model parameters while keeping the rest untouched; (2) it allows the model to learn an initial representation of the specific task; and importantly (3) it protects the learning of tail classes from being at a disadvantage during the model updating. We conduct extensive experiments on synthetic datasets of both two-class and multi-class tasks of text classification as well as a real-world application to ADME (i.e., absorption, distribution, metabolism, and excretion) semantic labeling. The experimental results show that the proposed two-stage fine-tuning outperforms both fine-tuning with conventional loss and fine-tuning with a reweighting loss on the above datasets.
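
As a rough illustration of the class-balanced reweighting loss used in the first stage, the sketch below computes per-class weights and a weighted cross-entropy in plain Python. The abstract does not state which reweighting scheme is used; inverse class frequency is an assumption made here for illustration, and the function names `inverse_frequency_weights` and `weighted_cross_entropy` are hypothetical.

```python
import math
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each class by total / (n_classes * class_count).

    Assumption: inverse-frequency weighting stands in for the
    class-balanced reweighting mentioned in the abstract.
    """
    counts = Counter(labels)
    n_classes = len(counts)
    total = len(labels)
    return {c: total / (n_classes * n) for c, n in counts.items()}


def weighted_cross_entropy(probs, labels, weights):
    """Weighted mean of -log p(true class).

    probs[i] is a dict mapping class name -> predicted probability.
    """
    num = sum(weights[y] * -math.log(p[y]) for p, y in zip(probs, labels))
    den = sum(weights[y] for y in labels)
    return num / den


# Toy imbalanced label set: 8 head-class samples, 2 tail-class samples.
labels = ["head"] * 8 + ["tail"] * 2
weights = inverse_frequency_weights(labels)

# A model that always predicts "head" with probability 0.9.
probs = [{"head": 0.9, "tail": 0.1} for _ in labels]
plain_loss = sum(-math.log(p[y]) for p, y in zip(probs, labels)) / len(labels)
balanced_loss = weighted_cross_entropy(probs, labels, weights)
```

With these weights, the head and tail classes contribute equal total weight to the loss, so a model that ignores the tail class is penalized more heavily than under the unweighted loss. In a two-stage setup, a loss of this kind would be applied while only the final layer is trainable, followed by ordinary fine-tuning of the whole model.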

Saliency-Regularized Deep Multi-Task Learning

Jul 03, 2022

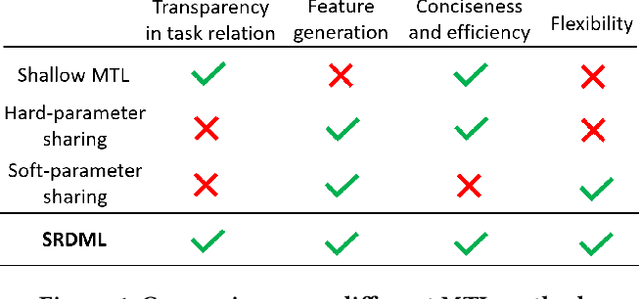

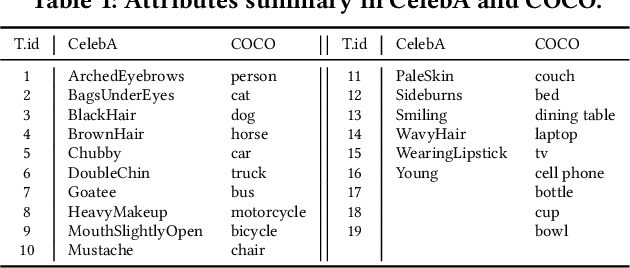

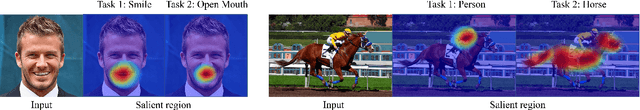

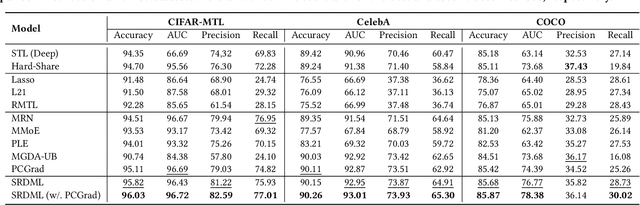

Abstract: Multitask learning is a framework that enforces multiple learning tasks to share knowledge to improve their generalization abilities. While shallow multitask learning can learn task relations, it can only handle predefined features. Modern deep multitask learning methods can jointly learn latent features and task sharing, but their task relations are obscure. They also predefine which layers and neurons are shared across tasks and cannot learn the sharing adaptively. To address these challenges, this paper proposes a new multitask learning framework that jointly learns latent features and explicit task relations by combining the strengths of existing shallow and deep multitask learning scenarios. Specifically, we propose to model the task relation as the similarity between task input gradients, with a theoretical analysis of their equivalency. In addition, we propose a multitask learning objective that explicitly learns task relations through a new regularizer. Theoretical analysis shows that the proposed regularizer reduces the generalization error. Extensive experiments on several multitask learning and image classification benchmarks demonstrate the proposed method's effectiveness and efficiency, as well as the reasonableness of the learned task relation patterns.
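
To make "task relation as the similarity between task input gradients" concrete, the following sketch estimates the gradient of each task's output with respect to the input and compares the gradients with cosine similarity. The abstract does not specify the similarity measure or how gradients are computed; cosine similarity, the finite-difference gradient, and the three toy linear tasks are all assumptions for illustration.

```python
import math


def input_gradient(f, x, eps=1e-6):
    """Central-difference estimate of the gradient of scalar f at x."""
    grad = []
    for i in range(len(x)):
        hi = list(x)
        lo = list(x)
        hi[i] += eps
        lo[i] -= eps
        grad.append((f(hi) - f(lo)) / (2 * eps))
    return grad


def cosine_similarity(u, v):
    """Cosine of the angle between vectors u and v."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Hypothetical toy tasks: each maps a 2-D input to a scalar prediction.
def task_a(x):
    return 2.0 * x[0] + 1.0 * x[1]


def task_b(x):
    return 4.0 * x[0] + 2.0 * x[1]   # same input sensitivity direction as task_a


def task_c(x):
    return -1.0 * x[0] + 2.0 * x[1]  # orthogonal sensitivity to task_a


x = [0.3, -0.7]
sim_ab = cosine_similarity(input_gradient(task_a, x), input_gradient(task_b, x))
sim_ac = cosine_similarity(input_gradient(task_a, x), input_gradient(task_c, x))
```

Here `sim_ab` is close to 1 because the two tasks respond to the same input direction, while `sim_ac` is close to 0. A regularizer built on such similarities would act on these pairwise values during training; in a deep network the gradients would come from automatic differentiation instead of finite differences.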

RES: A Robust Framework for Guiding Visual Explanation

Jun 27, 2022

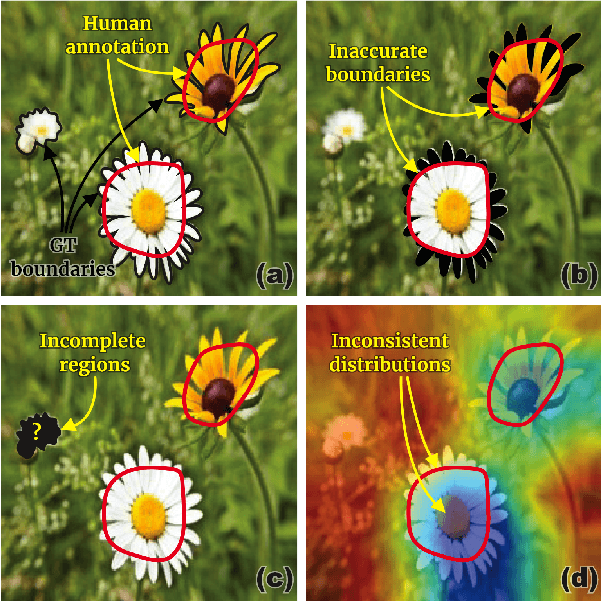

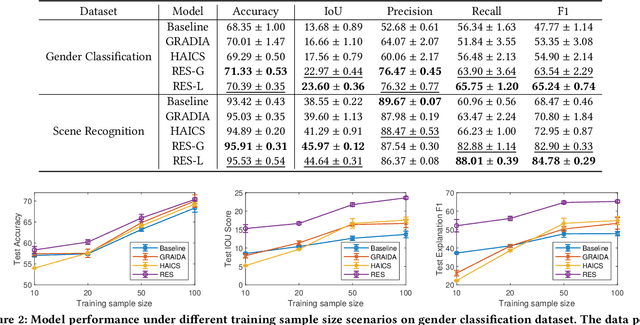

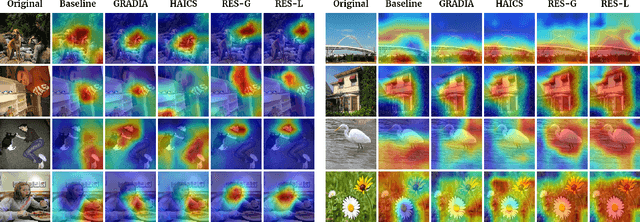

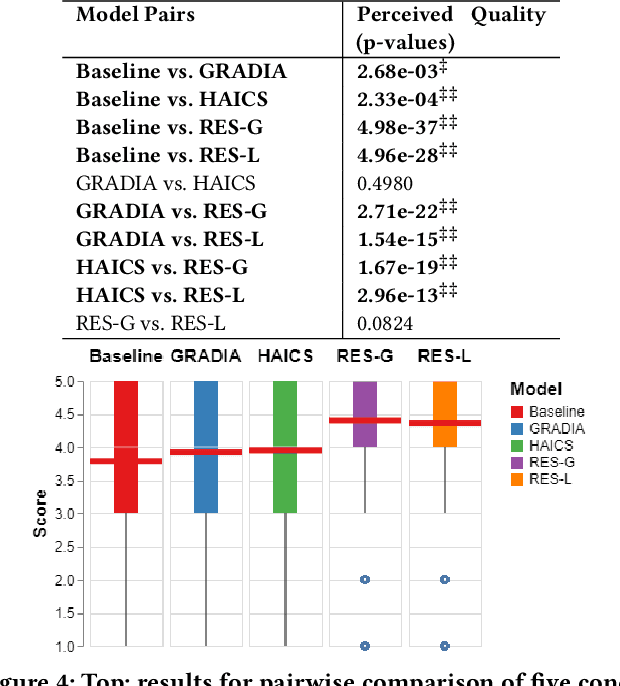

Abstract: Despite the fast progress of explanation techniques in modern Deep Neural Networks (DNNs) where the main focus is handling "how to generate the explanations", advanced research questions that examine the quality of the explanation itself (e.g., "whether the explanations are accurate") and improve the explanation quality (e.g., "how to adjust the model to generate more accurate explanations when explanations are inaccurate") are still relatively under-explored. To guide the model toward better explanations, techniques in explanation supervision - which add supervision signals on the model explanation - have started to show promising effects on improving both the generalizability and the intrinsic interpretability of Deep Neural Networks. However, the research on supervising explanations, especially in vision-based applications represented through saliency maps, is in its early stage due to several inherent challenges: 1) inaccuracy of the human explanation annotation boundary, 2) incompleteness of the human explanation annotation region, and 3) inconsistency of the data distribution between human annotation and model explanation maps. To address the challenges, we propose a generic RES framework for guiding visual explanation by developing a novel objective that handles inaccurate boundary, incomplete region, and inconsistent distribution of human annotations, with a theoretical justification on model generalizability. Extensive experiments on two real-world image datasets demonstrate the effectiveness of the proposed framework in enhancing both the reasonability of the explanation and the performance of the backbone DNN models.

* Published in KDD 2022

Source Localization of Graph Diffusion via Variational Autoencoders for Graph Inverse Problems

Jun 24, 2022

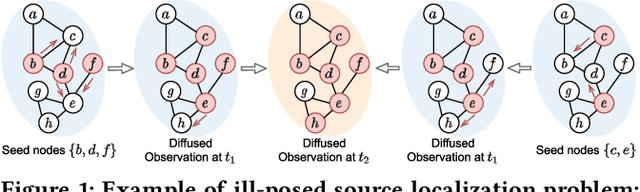

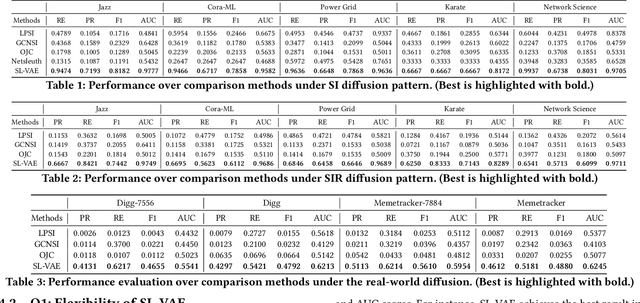

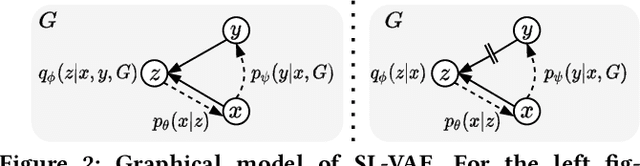

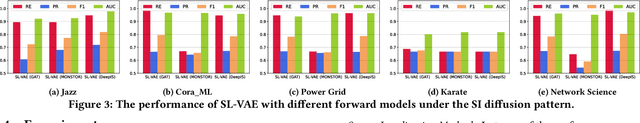

Abstract: Graph diffusion problems such as the propagation of rumors, computer viruses, or smart grid failures are ubiquitous and have broad societal impact. Hence it is usually crucial to identify diffusion sources according to the current graph diffusion observations. Despite its tremendous necessity and significance in practice, source localization, as the inverse problem of graph diffusion, is extremely challenging because it is ill-posed: different sources may lead to the same graph diffusion patterns. Unlike most traditional source localization methods, this paper takes a probabilistic approach to account for the uncertainty of different candidate sources. Such endeavors require overcoming several challenges: 1) the uncertainty in graph diffusion source localization is hard to quantify; 2) the complex patterns of the graph diffusion sources are difficult to characterize probabilistically; 3) generalization under arbitrary underlying diffusion patterns is hard to impose. To solve the above challenges, this paper presents a generic framework: Source Localization Variational AutoEncoder (SL-VAE) for locating the diffusion sources under arbitrary diffusion patterns. Particularly, we propose a probabilistic model that leverages the forward diffusion estimation model along with deep generative models to approximate the diffusion source distribution for quantifying the uncertainty. SL-VAE further utilizes prior knowledge of the source-observation pairs to characterize the complex patterns of diffusion sources by a learned generative prior. Lastly, a unified objective that integrates the forward diffusion estimation model is derived to enforce the model to generalize under arbitrary diffusion patterns. Extensive experiments are conducted on 7 real-world datasets to demonstrate the superiority of SL-VAE in reconstructing the diffusion sources, outperforming other methods by 20% on average in AUC score.

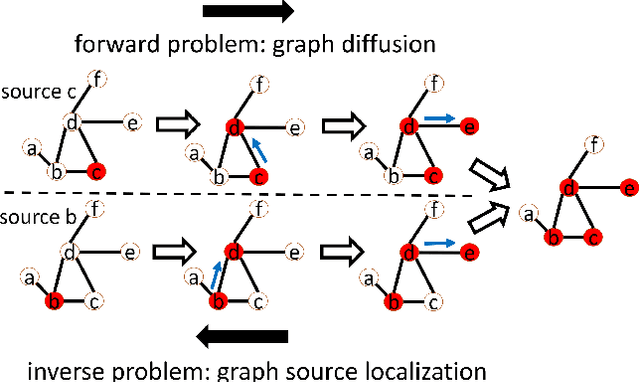

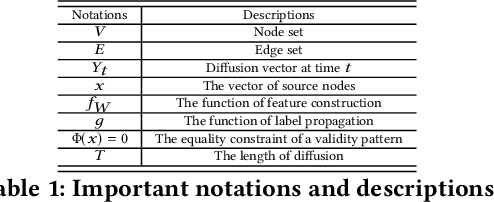

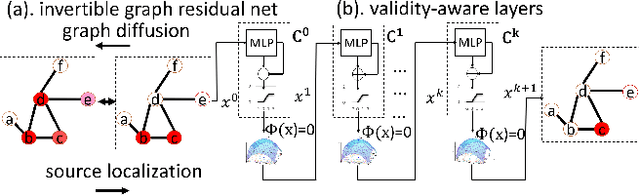

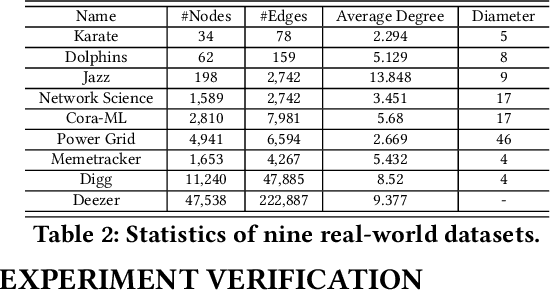

An Invertible Graph Diffusion Neural Network for Source Localization

Jun 18, 2022

Abstract: Localizing the source of graph diffusion phenomena, such as misinformation propagation, is an important yet extremely challenging task. Existing source localization models typically depend heavily on hand-crafted rules. Unfortunately, a large portion of the graph diffusion process for many applications is still unknown to human beings, so it is important to have expressive models for learning such underlying rules automatically. This paper aims to establish a generic framework of invertible graph diffusion models for source localization on graphs, namely Invertible Validity-aware Graph Diffusion (IVGD), to handle major challenges including 1) difficulty in leveraging knowledge in graph diffusion models for modeling their inverse processes in an end-to-end fashion, 2) difficulty in ensuring the validity of the inferred sources, and 3) efficiency and scalability in source inference. Specifically, first, to inversely infer sources of graph diffusion, we propose a graph residual scenario to make existing graph diffusion models invertible with theoretical guarantees; second, we develop a novel error compensation mechanism that learns to offset the errors of the inferred sources. Finally, to ensure the validity of the inferred sources, a new set of validity-aware layers has been devised to project inferred sources to feasible regions by flexibly encoding constraints with unrolled optimization techniques. A linearization technique is proposed to improve the efficiency of our proposed layers. The convergence of the proposed IVGD is proven theoretically. Extensive experiments on nine real-world datasets demonstrate that our proposed IVGD outperforms state-of-the-art comparison methods significantly. We have released our code at https://github.com/xianggebenben/IVGD.
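
One common way to invert a residual map of the form y = x + f(x) is fixed-point iteration, which converges when f is a contraction. The sketch below applies that idea to a toy three-node path graph. It is not taken from the IVGD code: the abstract does not describe the inversion procedure, so the fixed-point scheme, the damped neighbor-averaging update `toy_diffusion`, and the graph itself are assumptions for illustration only.

```python
# Toy path graph 0 - 1 - 2, stored as an adjacency list (hypothetical example).
NEIGHBORS = {0: [1], 1: [0, 2], 2: [1]}


def toy_diffusion(x, alpha=0.5):
    """Damped neighbor averaging; a contraction in the max norm when alpha < 1."""
    return [
        alpha * sum(x[j] for j in NEIGHBORS[i]) / len(NEIGHBORS[i])
        for i in range(len(x))
    ]


def residual_forward(x, f):
    """Forward residual map: y = x + f(x)."""
    return [xi + fi for xi, fi in zip(x, f(x))]


def residual_inverse(y, f, n_iter=60):
    """Recover x from y = x + f(x) by iterating x <- y - f(x)."""
    x = list(y)
    for _ in range(n_iter):
        x = [yi - fi for yi, fi in zip(y, f(x))]
    return x


source = [1.0, 0.0, 0.0]                        # diffusion starts at node 0
observed = residual_forward(source, toy_diffusion)
recovered = residual_inverse(observed, toy_diffusion)
```

Because the update shrinks differences by a factor of at most `alpha` per step, the iteration error decays geometrically and `recovered` matches `source` to within floating-point tolerance. A learned diffusion model would need its update constrained to be contractive for the same argument to apply.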
