Kostas Alexis

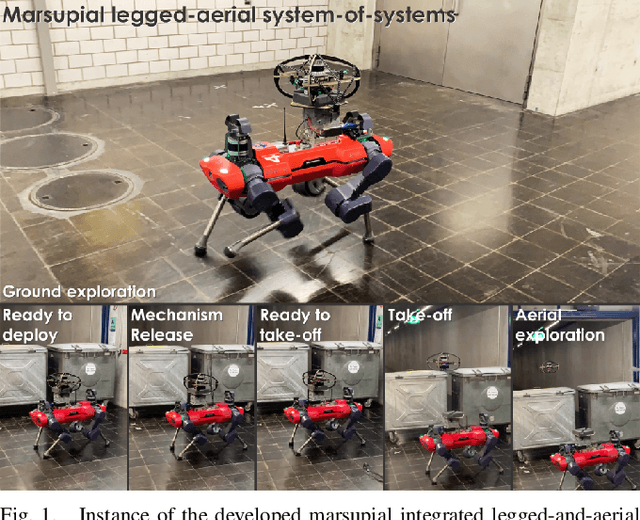

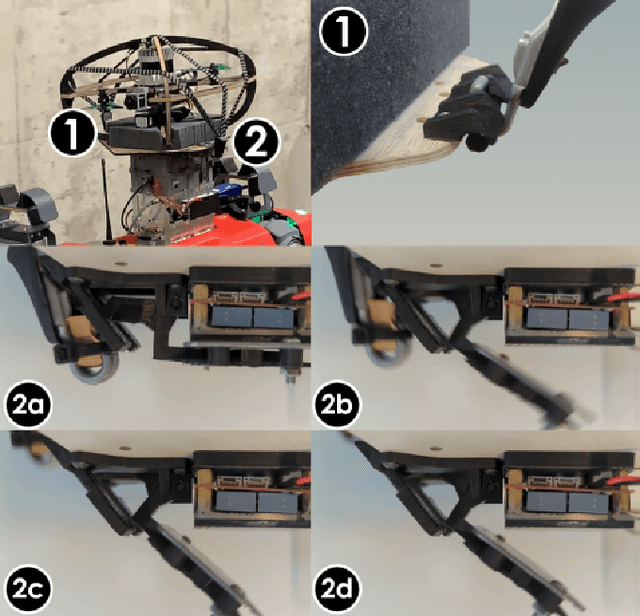

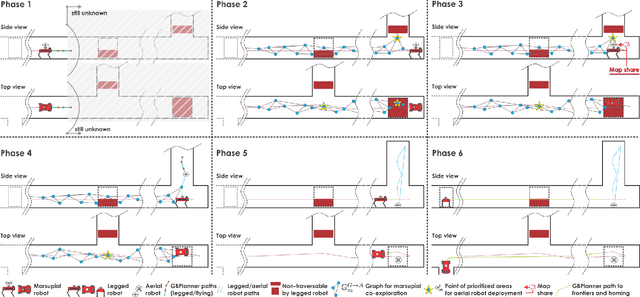

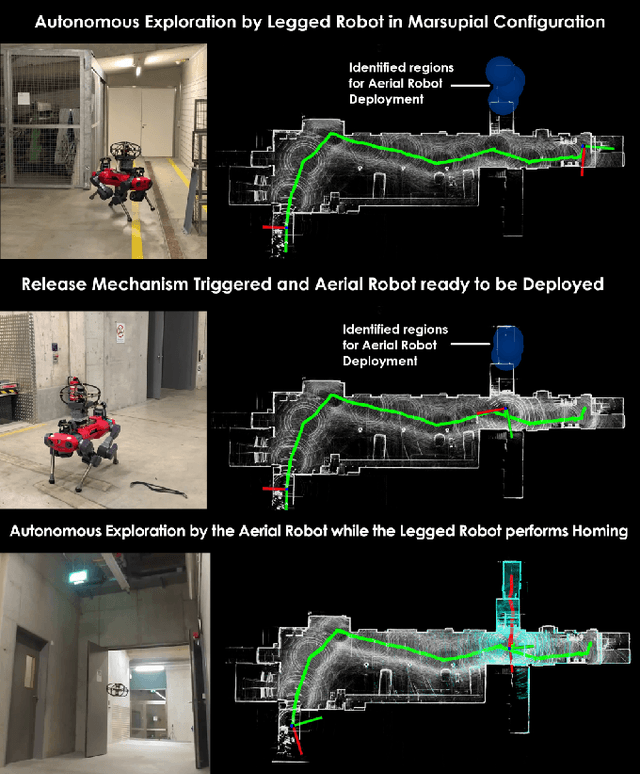

Marsupial Walking-and-Flying Robotic Deployment for Collaborative Exploration of Unknown Environments

May 11, 2022

Abstract: This work contributes a marsupial robotic system-of-systems involving a legged and an aerial robot capable of collaborative mapping and exploration path planning that exploits the heterogeneous properties of the two systems and the ability to selectively deploy the aerial system from the ground robot. Exploiting the dexterous locomotion capabilities and long endurance of quadruped robots, the marsupial combination can explore within large-scale and confined environments involving rough terrain. However, as certain types of terrain or vertical geometries can render any ground system unable to continue its exploration, the marsupial system can, when needed, deploy the flying robot which, by exploiting its 3D navigation capabilities, can undertake a focused exploration task within its endurance limitations. Focusing on autonomy, the two systems can co-localize and map together by sharing LiDAR-based maps and plan exploration paths individually, while a tailored graph search onboard the legged robot allows it to identify where and when the ferried aerial platform should be deployed. The system is verified within multiple experimental studies demonstrating the expanded exploration capabilities of the marsupial system-of-systems and facilitating the exploration of otherwise individually unreachable areas.

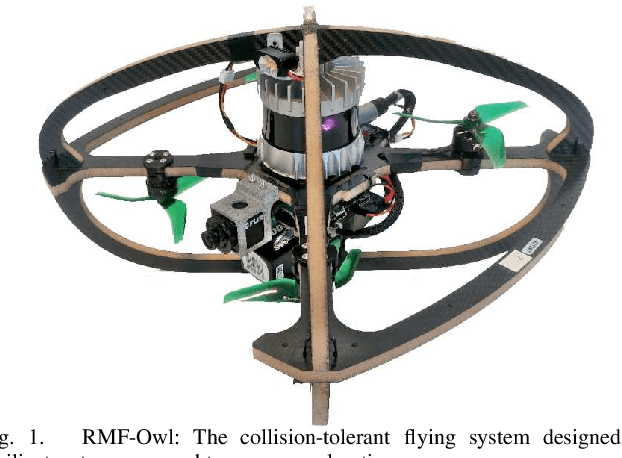

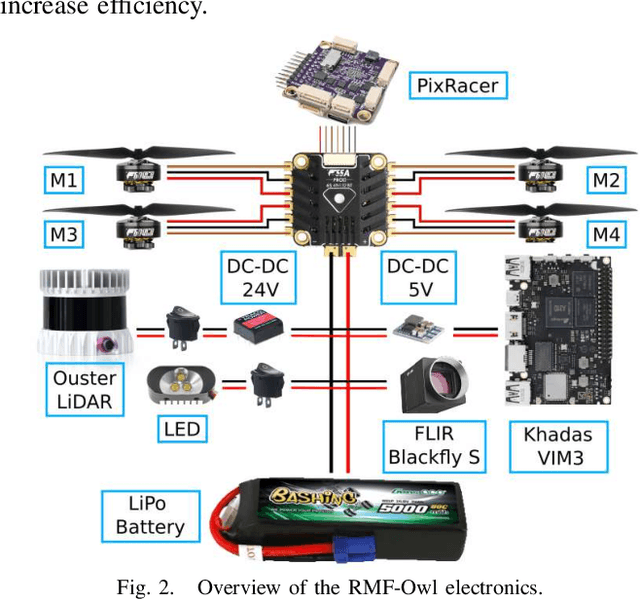

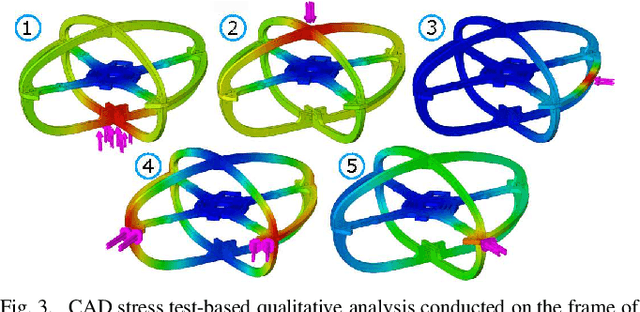

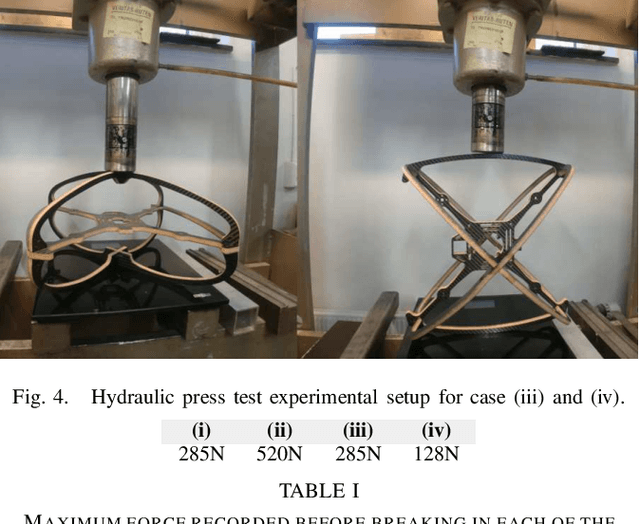

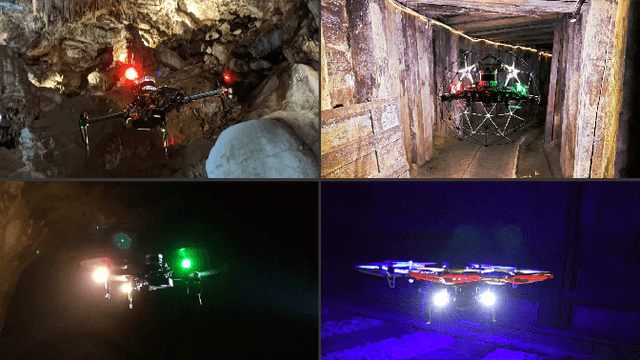

RMF-Owl: A Collision-Tolerant Flying Robot for Autonomous Subterranean Exploration

Feb 22, 2022

Abstract: This work presents the design, hardware realization, autonomous exploration and object detection capabilities of RMF-Owl, a new collision-tolerant aerial robot tailored for resilient autonomous subterranean exploration. The system is custom built for underground exploration with a focus on collision tolerance, resilient autonomy with robust localization and mapping, alongside high-performance exploration path planning in confined, obstacle-filled and topologically complex underground environments. Moreover, RMF-Owl offers the ability to search, detect and locate objects of interest, which can be particularly useful in search and rescue missions. A series of results from field experiments is presented in order to demonstrate the system's ability to autonomously explore challenging unknown underground environments.

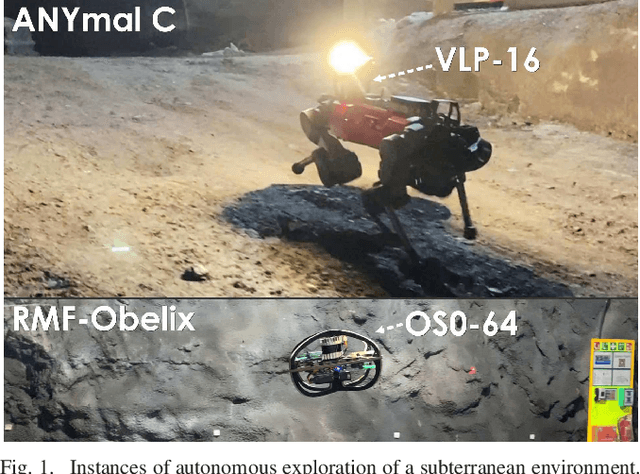

CERBERUS: Autonomous Legged and Aerial Robotic Exploration in the Tunnel and Urban Circuits of the DARPA Subterranean Challenge

Jan 18, 2022

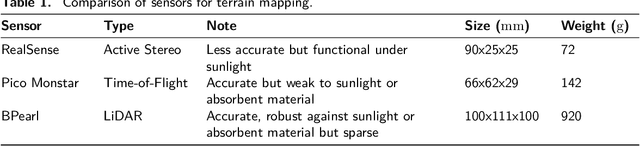

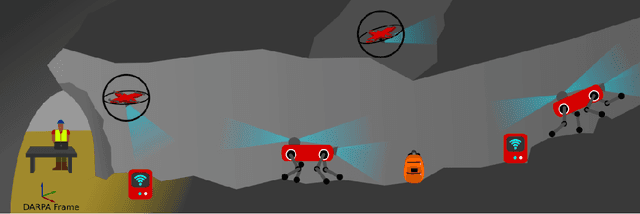

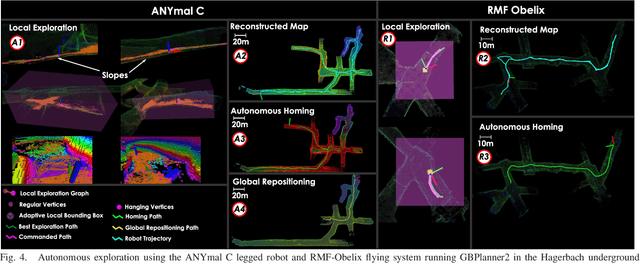

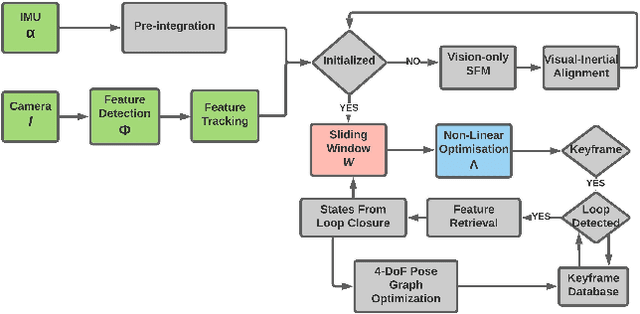

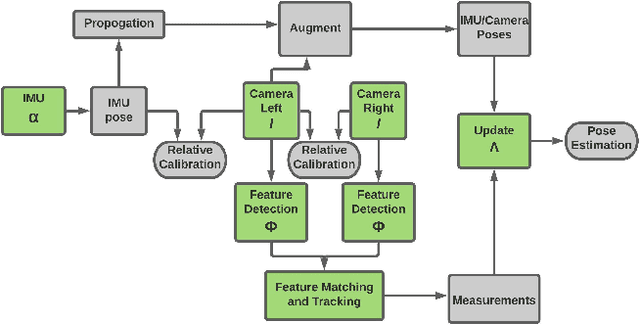

Abstract: Autonomous exploration of subterranean environments constitutes a major frontier for robotic systems as underground settings present key challenges that can render robot autonomy hard to achieve. This has motivated the DARPA Subterranean Challenge, where teams of robots search for objects of interest in various underground environments. In response, the CERBERUS system-of-systems is presented as a unified strategy towards subterranean exploration using legged and flying robots. As primary robots, ANYmal quadruped systems are deployed considering their endurance and potential to traverse challenging terrain. For aerial robots, both conventional and collision-tolerant multirotors are utilized to explore spaces too narrow or otherwise unreachable by ground systems. Anticipating degraded sensing conditions, a complementary multi-modal sensor fusion approach utilizing camera, LiDAR, and inertial data for resilient robot pose estimation is proposed. Individual robot pose estimates are refined by a centralized multi-robot map optimization approach to improve the reported location accuracy of detected objects of interest in the DARPA-defined coordinate frame. Furthermore, a unified exploration path planning policy is presented to facilitate the autonomous operation of both legged and aerial robots in complex underground networks. Finally, to enable communication between the robots and the base station, CERBERUS utilizes a ground rover with a high-gain antenna and an optical fiber connection to the base station, alongside breadcrumbing of wireless nodes by our legged robots. We report results from the CERBERUS system-of-systems deployment at the DARPA Subterranean Challenge Tunnel and Urban Circuits, along with the current limitations and the lessons learned for the benefit of the community.

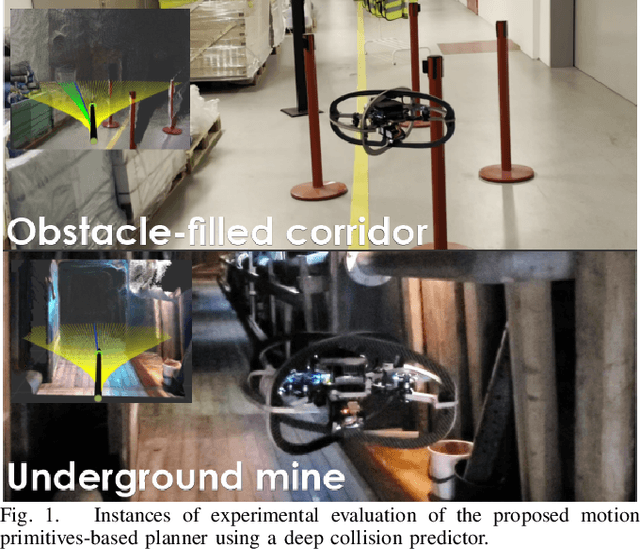

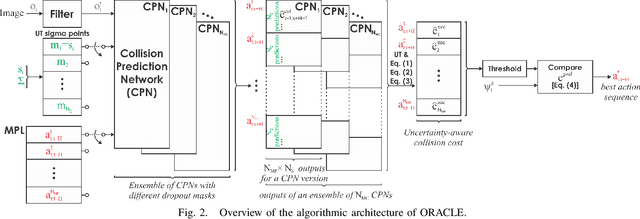

Motion Primitives-based Navigation Planning using Deep Collision Prediction

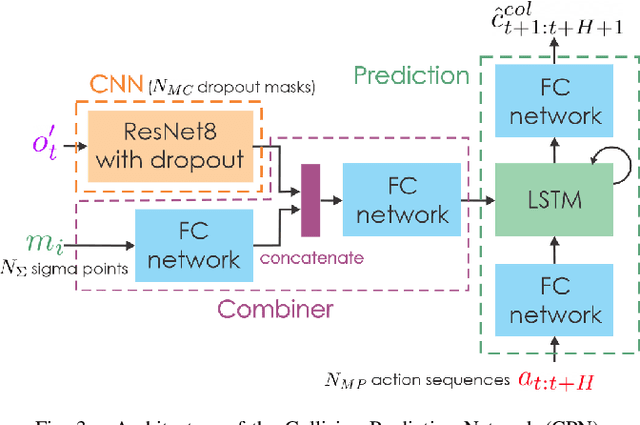

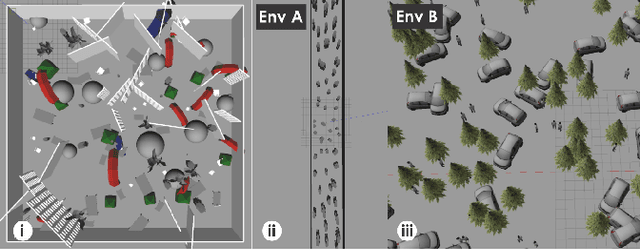

Jan 11, 2022

Abstract: This paper contributes a method to design a novel navigation planner exploiting a learning-based collision prediction network. The neural network is tasked to predict the collision cost of each action sequence in a predefined motion primitives library in the robot's velocity-steering angle space, given only the current depth image and the estimated linear and angular velocities of the robot. Furthermore, we account for the uncertainty of the robot's partial state by utilizing the Unscented Transform and the uncertainty of the neural network model by using Monte Carlo dropout. The uncertainty-aware collision cost is then combined with the goal direction given by a global planner in order to determine the best action sequence to execute in a receding horizon manner. To demonstrate the method, we develop a resilient small flying robot integrating lightweight sensing and computing resources. A set of simulation and experimental studies, including a field deployment, in both cluttered and perceptually-challenging environments is conducted to evaluate the quality of the prediction network and the performance of the proposed planner.
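The selection step described in this abstract, combining an uncertainty-aware collision cost with the goal direction, can be illustrated with a minimal sketch. It is not the authors' planner: the function name `select_primitive`, the weights, and the cost form (mean plus a multiple of the standard deviation over repeated stochastic predictions, plus a normalized heading error) are all assumptions made for illustration, and the collision samples are supplied by hand rather than by a network.

```python
import math
from statistics import mean, pstdev


def select_primitive(primitives, collision_samples, goal_heading,
                     risk_weight=1.0, goal_weight=0.5):
    """Pick the motion primitive with the lowest combined cost.

    primitives        : list of (speed, steering_angle) pairs (hypothetical library)
    collision_samples : per primitive, a list of predicted collision
                        probabilities, e.g. from repeated dropout-enabled passes
    goal_heading      : desired steering direction in radians
    """
    best_index, best_cost = None, math.inf
    for index, ((_, steer), samples) in enumerate(zip(primitives, collision_samples)):
        # Uncertainty-aware collision cost: penalize both the mean prediction
        # and the spread across the stochastic samples (assumed form).
        collision_cost = mean(samples) + risk_weight * pstdev(samples)
        # Heading error wrapped to [-pi, pi], then normalized to [0, 1].
        diff = math.atan2(math.sin(steer - goal_heading),
                          math.cos(steer - goal_heading))
        goal_cost = abs(diff) / math.pi
        cost = collision_cost + goal_weight * goal_cost
        if cost < best_cost:
            best_index, best_cost = index, cost
    return best_index, best_cost


# Three primitives: steer left, go straight, steer right.
library = [(1.0, -0.5), (1.0, 0.0), (1.0, 0.5)]
samples = [[0.1, 0.1, 0.1], [0.9, 0.8, 0.95], [0.1, 0.2, 0.15]]
chosen, chosen_cost = select_primitive(library, samples, goal_heading=0.4)
```

In a receding horizon scheme, only the beginning of the chosen primitive would be executed before the costs are recomputed from the next depth image.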

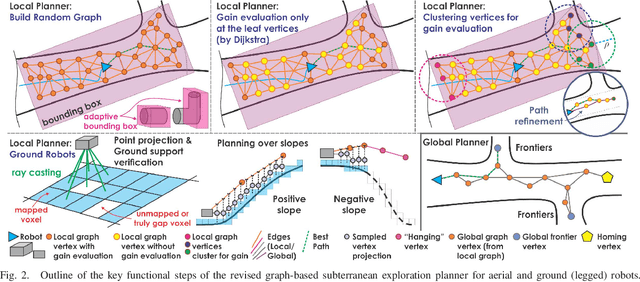

Autonomous Teamed Exploration of Subterranean Environments using Legged and Aerial Robots

Nov 11, 2021

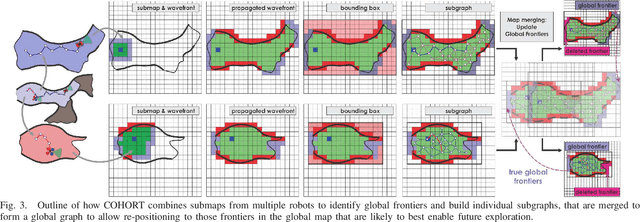

Abstract: This paper presents a novel strategy for autonomous teamed exploration of subterranean environments using legged and aerial robots. Tailored to the fact that subterranean settings, such as cave networks and underground mines, often involve complex, large-scale and multi-branched topologies, while wireless communication within them can be particularly challenging, this work is structured around the synergy of an onboard exploration path planner that allows for resilient long-term autonomy, and a multi-robot coordination framework. The onboard path planner is unified across legged and flying robots and enables navigation in environments with steep slopes and diverse geometries. When a communication link is available, each robot of the team shares submaps to a centralized location where a multi-robot coordination framework identifies global frontiers of the exploration space to inform each system about where it should re-position to best continue its mission. The strategy is verified through a field deployment inside an underground mine in Switzerland using a legged and a flying robot collectively exploring for 45 min, as well as a longer simulation study with three systems.
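The notion of a frontier used in this abstract can be made concrete with a small sketch. It assumes a 2D occupancy grid and the common definition of a frontier as a free cell bordering unknown space; the paper works with shared 3D submaps, so the grid encoding and the function `find_frontiers` here are simplifying assumptions, not the authors' coordination framework.

```python
FREE, OCCUPIED, UNKNOWN = 0, 1, -1  # assumed cell encoding


def find_frontiers(grid):
    """Return (row, col) of every free cell with a 4-connected unknown neighbor."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break  # one unknown neighbor is enough
    return frontiers


# A merged map in which the right-hand column is still partly unexplored.
merged_map = [
    [FREE, FREE, UNKNOWN],
    [FREE, OCCUPIED, UNKNOWN],
    [FREE, FREE, FREE],
]
frontier_cells = find_frontiers(merged_map)
```

A coordinator could then assign each robot to a frontier cell, for instance the nearest one that its locomotion mode can reach.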

Resource-aware Online Parameter Adaptation for Computationally-constrained Visual-Inertial Navigation Systems

Jun 01, 2021

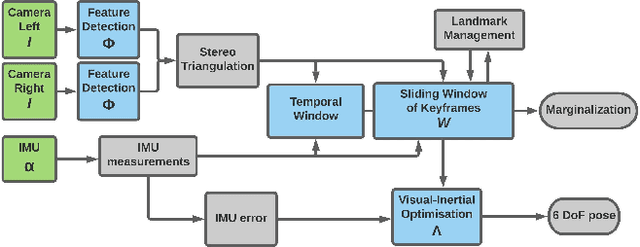

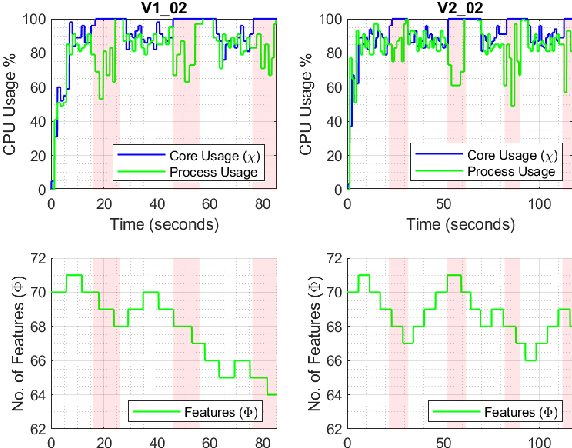

Abstract: In this paper, a computational resources-aware parameter adaptation method for visual-inertial navigation systems is proposed with the goal of enabling the improved deployment of such algorithms on computationally constrained systems. Such a capacity can prove critical when employed on ultra-lightweight systems or alongside mission-critical, computationally expensive processes. To achieve this objective, the algorithm proposes selected changes in the vision front-end and optimization back-end of visual-inertial odometry algorithms, both prior to execution and in real-time based on an online profiling of available resources. The method also utilizes information from the motion dynamics experienced by the system to manipulate parameters online. The general policy is demonstrated on three established algorithms, namely S-MSCKF, VINS-Mono and OKVIS, and has been verified experimentally on the EuRoC dataset. The proposed approach achieved comparable performance at a fraction of the original computational cost.
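As a rough illustration of online parameter adaptation driven by resource profiling and motion dynamics, the sketch below adjusts a single front-end parameter, the maximum number of tracked features. The rule, the thresholds, and the name `adapt_max_features` are hypothetical and are not taken from the paper or from S-MSCKF, VINS-Mono or OKVIS.

```python
def adapt_max_features(current, cpu_load, angular_rate, *,
                       target_load=0.7, min_features=50, max_features=300,
                       step=25, agile_rate=1.0):
    """Propose a new feature budget for the vision front-end.

    current      : current maximum number of tracked features
    cpu_load     : measured CPU utilization in [0, 1]
    angular_rate : magnitude of the gyroscope reading in rad/s
    All thresholds are illustrative assumptions.
    """
    if cpu_load > target_load:
        proposed = current - step   # shed load when the CPU is saturated
    elif cpu_load < target_load - 0.2:
        proposed = current + step   # use spare capacity for accuracy
    else:
        proposed = current          # inside the dead band: keep the setting
    # During agile motion, keep a higher floor so tracking stays robust.
    floor = 2 * min_features if angular_rate > agile_rate else min_features
    return max(floor, min(max_features, proposed))
```

For example, `adapt_max_features(200, cpu_load=0.9, angular_rate=0.1)` lowers the budget by one step, while the same load during a fast rotation never drops it below the raised floor.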

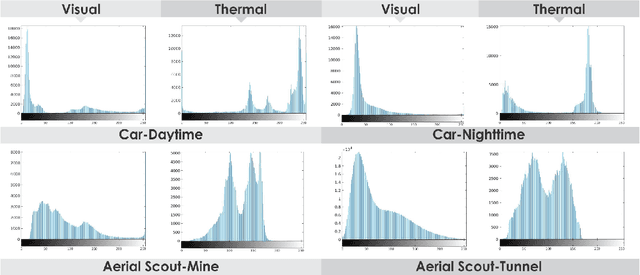

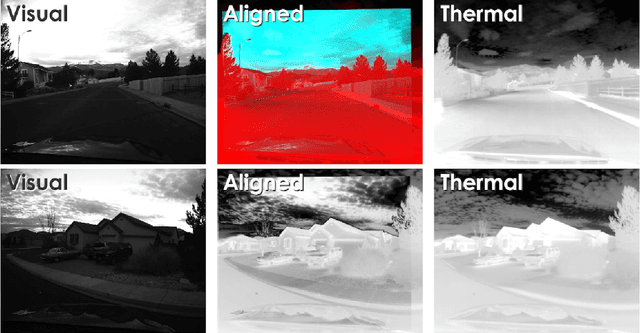

Visual-Thermal Camera Dataset Release and Multi-Modal Alignment without Calibration Information

Dec 29, 2020

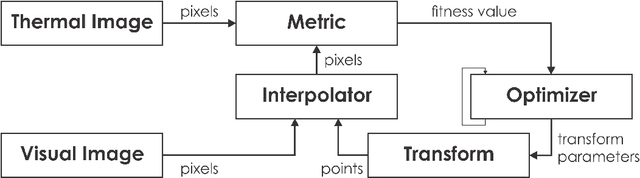

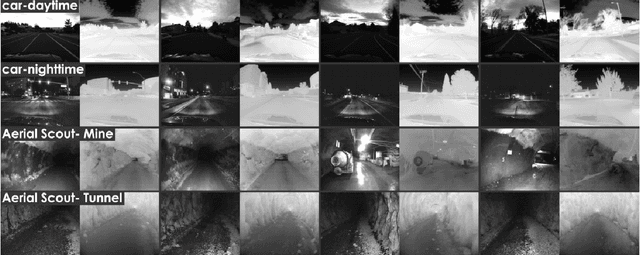

Abstract: This report accompanies a dataset release on visual and thermal camera data and details a procedure followed to align such multi-modal camera frames in order to provide pixel-level correspondence between the two without using intrinsic or extrinsic calibration information. To achieve this goal we benefit from progress in the domain of multi-modal image alignment and specifically employ the Mattes Mutual Information Metric to guide the registration process. The released dataset includes both the raw visual and thermal camera data and the aligned frames, alongside calibration parameters, with the goal of better facilitating the investigation of common local/global features across such multi-modal image streams.
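To show why a mutual information metric suits visual-thermal alignment, the sketch below computes a plain joint-histogram mutual information between two intensity sequences. This is a simpler estimator than the Mattes formulation named in the abstract (which uses Parzen-window density estimates), and the function name, bin count, and toy data are assumptions for illustration only.

```python
import math
from collections import Counter


def mutual_information(a, b, bins=8, max_value=255):
    """Joint-histogram mutual information (in nats) of two flattened images.

    a, b : equal-length sequences of intensities in [0, max_value]
    """
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("inputs must be non-empty and of equal length")

    def quantize(value):
        return min(bins - 1, int(value * bins / (max_value + 1)))

    pairs = [(quantize(x), quantize(y)) for x, y in zip(a, b)]
    n = len(pairs)
    joint = Counter(pairs)
    marginal_a = Counter(i for i, _ in pairs)
    marginal_b = Counter(j for _, j in pairs)
    mi = 0.0
    for (i, j), count in joint.items():
        p_ij = count / n
        mi += p_ij * math.log(p_ij / ((marginal_a[i] / n) * (marginal_b[j] / n)))
    return mi


visual = [0, 0, 255, 255] * 2
thermal_aligned = [255 - v for v in visual]   # inverted contrast, same structure
thermal_misaligned = [0, 255, 0, 255] * 2     # statistically unrelated pattern

mi_aligned = mutual_information(visual, thermal_aligned)
mi_misaligned = mutual_information(visual, thermal_misaligned)
```

The aligned pair scores high even though its intensities are inverted, which is the property that makes such a metric usable across modalities; a registration procedure would search over transforms to maximize it.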

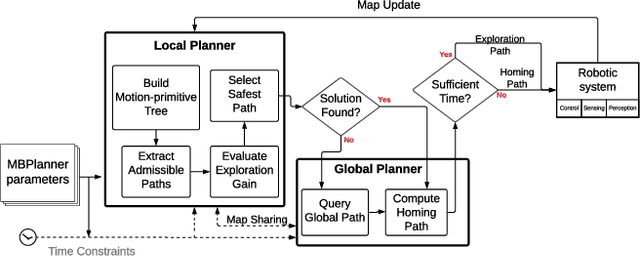

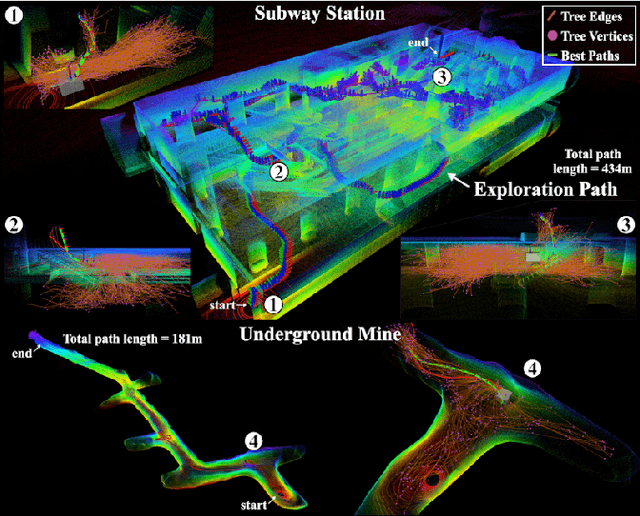

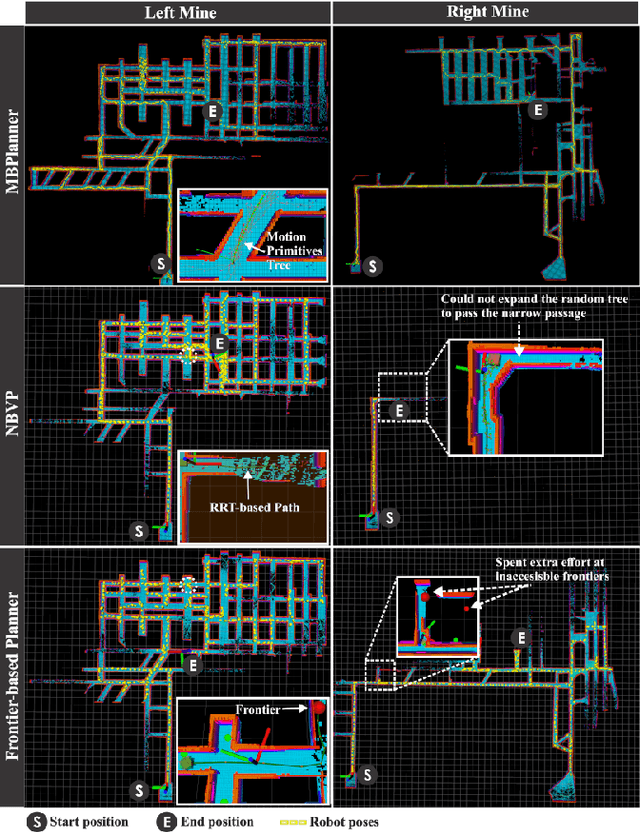

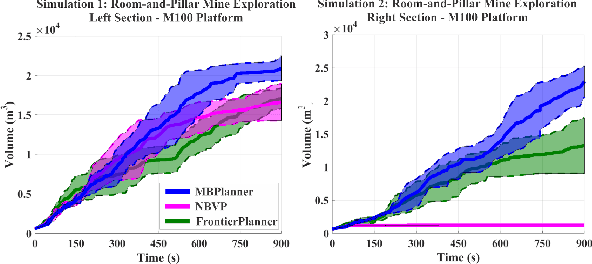

Appendix for the Motion Primitives-based Path Planning for Fast and Agile Exploration Method

Dec 06, 2020

Abstract: This manuscript presents enhancements to our motion primitives-based exploration path planning method for agile exploration using aerial robots. The method now further integrates a global planning layer to facilitate reliable large-scale exploration. The implemented bifurcation between local and global planning allows for efficient exploration combined with the ability to plan within very large environments, while also ensuring safe and timely return-to-home. A new set of simulation studies and experimental results is presented to demonstrate these enhancements. The method is available open source as a Robot Operating System (ROS) package.

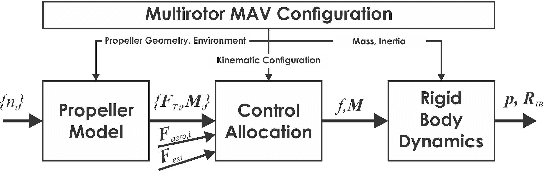

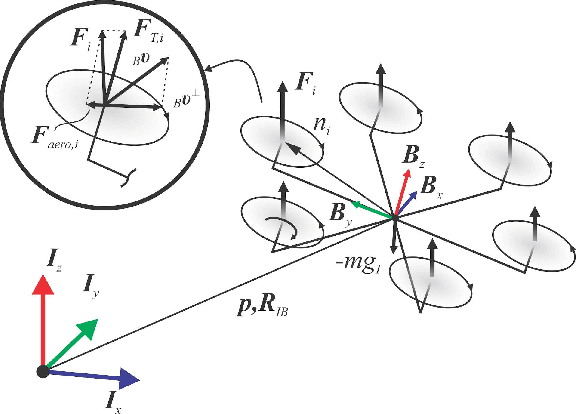

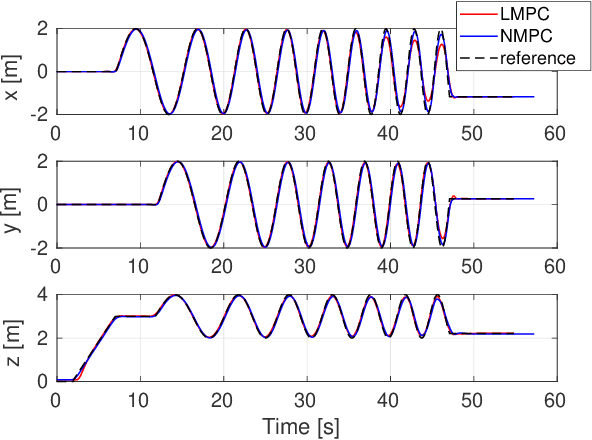

Model Predictive Control for Micro Aerial Vehicles: A Survey

Nov 22, 2020

Abstract: This paper presents a review of the design and application of model predictive control strategies for Micro Aerial Vehicles and specifically multirotor configurations such as quadrotors. The diverse set of works in the domain is organized based on the control law being optimized over linear or nonlinear dynamics, the integration of state and input constraints, possible fault-tolerant design, whether reinforcement learning methods have been utilized and whether the controller refers to free-flight or other tasks such as physical interaction or load transportation. A selected set of comparison results is also presented and serves to provide insight for the selection between linear and nonlinear schemes, the tuning of the prediction horizon, the importance of disturbance observer-based offset-free tracking and the intrinsic robustness of such methods to parameter uncertainty. Furthermore, an overview of recent research trends on the combined application of modern deep reinforcement learning techniques and model predictive control for multirotor vehicles is presented. Finally, this review concludes with explicit discussion regarding selected open-source software packages that deliver off-the-shelf model predictive control functionality applicable to a wide variety of Micro Aerial Vehicle configurations.

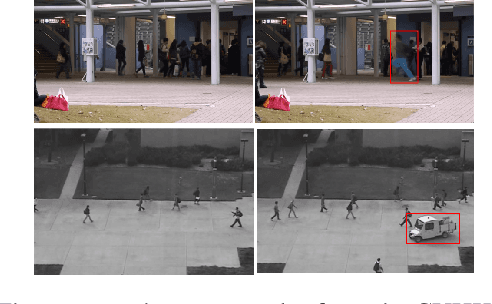

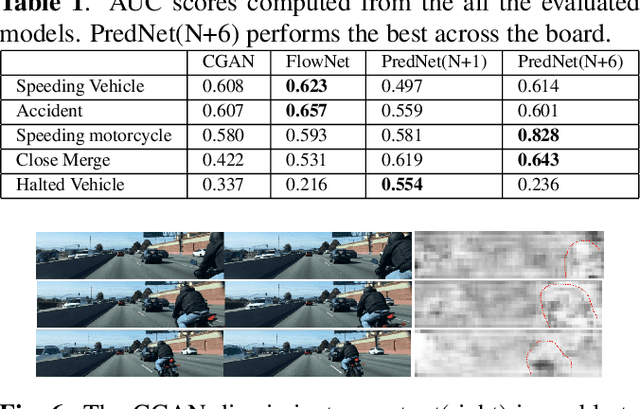

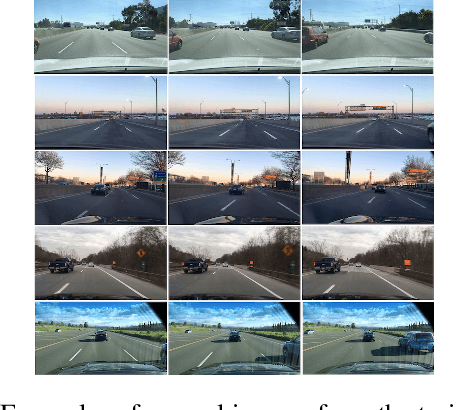

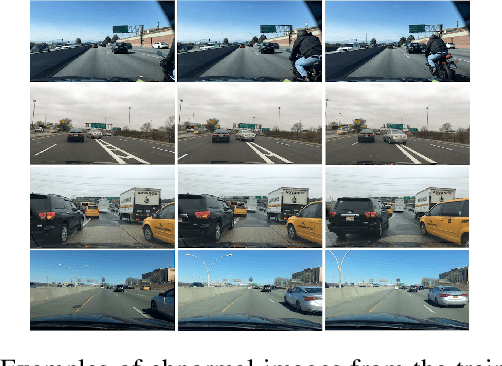

Anomalous Motion Detection on Highway Using Deep Learning

Jun 15, 2020

Abstract: Research in visual anomaly detection draws much interest due to its applications in surveillance. Common datasets for evaluation are constructed using a stationary camera overlooking a region of interest. Previous research has shown promising results in detecting spatial as well as temporal anomalies in these settings. The advent of self-driving cars provides an opportunity to apply visual anomaly detection in a more dynamic application, yet no dataset exists in this type of environment. This paper presents a new anomaly detection dataset, the Highway Traffic Anomaly (HTA) dataset, for the problem of detecting anomalous traffic patterns from dash cam videos of vehicles on highways. We evaluate state-of-the-art deep learning anomaly detection models and propose novel variations to these methods. Our results show that state-of-the-art models built for settings with a stationary camera do not translate well to a more dynamic environment. The proposed variations to these state-of-the-art methods show promising results on the new HTA dataset.
