Jingyan Shen

When Reasoning Meets Its Laws

Dec 19, 2025

Abstract: Despite the superior performance of Large Reasoning Models (LRMs), their reasoning behaviors are often counterintuitive, leading to suboptimal reasoning capabilities. To theoretically formalize the desired reasoning behaviors, this paper presents the Laws of Reasoning (LoRe), a unified framework that characterizes intrinsic reasoning patterns in LRMs. We first propose the compute law, with the hypothesis that reasoning compute should scale linearly with question complexity. Beyond compute, we extend LoRe with a supplementary accuracy law. Since question complexity is difficult to quantify in practice, we examine these hypotheses through two properties of the laws: monotonicity and compositionality. We therefore introduce LoRe-Bench, a benchmark that systematically measures these two tractable properties for large reasoning models. Evaluation shows that most reasoning models exhibit reasonable monotonicity but lack compositionality. In response, we develop an effective finetuning approach that enforces compute-law compositionality. Extensive empirical studies demonstrate that better compliance with compute laws yields consistently improved reasoning performance on multiple benchmarks, and uncovers synergistic effects across properties and laws. Project page: https://lore-project.github.io/

Improving Data Efficiency for LLM Reinforcement Fine-tuning Through Difficulty-targeted Online Data Selection and Rollout Replay

Jun 05, 2025

Abstract: Reinforcement learning (RL) has become an effective approach for fine-tuning large language models (LLMs), particularly to enhance their reasoning capabilities. However, RL fine-tuning remains highly resource-intensive, and existing work has largely overlooked the problem of data efficiency. In this paper, we propose two techniques to improve data efficiency in LLM RL fine-tuning: difficulty-targeted online data selection and rollout replay. We introduce the notion of adaptive difficulty to guide online data selection, prioritizing questions of moderate difficulty that are more likely to yield informative learning signals. To estimate adaptive difficulty efficiently, we develop an attention-based framework that requires rollouts for only a small reference set of questions. The adaptive difficulty of the remaining questions is then estimated based on their similarity to this set. To further reduce rollout cost, we introduce a rollout replay mechanism that reuses recent rollouts, lowering per-step computation while maintaining stable updates. Extensive experiments across 6 LLM-dataset combinations show that our method reduces RL fine-tuning time by 25% to 65% to reach the same level of performance as the original GRPO algorithm.

MiCRo: Mixture Modeling and Context-aware Routing for Personalized Preference Learning

May 30, 2025

Abstract: Reward modeling is a key step in building safe foundation models when applying reinforcement learning from human feedback (RLHF) to align Large Language Models (LLMs). However, reward modeling based on the Bradley-Terry (BT) model assumes a global reward function, failing to capture the inherently diverse and heterogeneous human preferences. Hence, such oversimplification limits the ability of LLMs to support personalization and pluralistic alignment. Theoretically, we show that when human preferences follow a mixture distribution of diverse subgroups, a single BT model has an irreducible error. While existing solutions, such as multi-objective learning with fine-grained annotations, help address this issue, they are costly and constrained by predefined attributes, failing to fully capture the richness of human values. In this work, we introduce MiCRo, a two-stage framework that enhances personalized preference learning by leveraging large-scale binary preference datasets without requiring explicit fine-grained annotations. In the first stage, MiCRo introduces a context-aware mixture modeling approach to capture diverse human preferences. In the second stage, MiCRo integrates an online routing strategy that dynamically adapts mixture weights based on the specific context to resolve ambiguity, allowing for efficient and scalable preference adaptation with minimal additional supervision. Experiments on multiple preference datasets demonstrate that MiCRo effectively captures diverse human preferences and significantly improves downstream personalization.

Conformal Tail Risk Control for Large Language Model Alignment

Feb 27, 2025

Abstract: Recent developments in large language models (LLMs) have led to their widespread usage for various tasks. The prevalence of LLMs in society calls for assurance of the reliability of their performance. In particular, risk-sensitive applications demand meticulous attention to unexpectedly poor outcomes, i.e., tail events, for instance, toxic answers, humiliating language, and offensive outputs. Due to the costly nature of acquiring human annotations, general-purpose scoring models have been created to automate the process of quantifying these tail events. This phenomenon introduces potential human-machine misalignment between the respective scoring mechanisms. In this work, we present a lightweight calibration framework for blackbox models that ensures the alignment of humans and machines with provable guarantees. Our framework provides a rigorous approach to controlling, with high confidence, any distortion risk measure that is characterized by a weighted average of quantiles of the loss incurred by the LLM. The theoretical foundation of our method relies on the connection between conformal risk control and a traditional family of statistics, i.e., L-statistics. To demonstrate the utility of our framework, we conduct comprehensive experiments that address the issue of human-machine misalignment.
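
The "weighted average of quantiles" mentioned in this abstract can be illustrated with a small sketch. It computes the empirical conditional value-at-risk (CVaR), a standard distortion risk measure, as an L-statistic, that is, a weighted sum of sorted losses. The function name `empirical_cvar` and the toy losses are illustrative assumptions, and the sketch covers only the risk estimate, not the paper's conformal calibration procedure.

```python
def empirical_cvar(losses, beta):
    """Empirical CVaR at level beta, written as an L-statistic.

    Hypothetical helper for illustration only. The weight on the i-th
    order statistic is the length of the overlap between ((i-1)/n, i/n]
    and (beta, 1], divided by (1 - beta).
    """
    if not 0.0 <= beta < 1.0:
        raise ValueError("beta must lie in [0, 1)")
    ordered = sorted(losses)  # order statistics of the loss sample
    n = len(ordered)
    total = 0.0
    for i, loss in enumerate(ordered, start=1):
        lower = max((i - 1) / n, beta)
        upper = i / n
        weight = max(upper - lower, 0.0) / (1.0 - beta)
        total += weight * loss
    return total


# Toy losses (assumed values): with beta = 0.5 only the upper half of the
# sorted sample receives weight, so the result is about 0.35.
toy_losses = [0.4, 0.1, 0.3, 0.2]
print(empirical_cvar(toy_losses, 0.5))
```

Setting `beta = 0` puts equal weight on every order statistic and recovers the sample mean; other distortion risk measures would correspond to other weight profiles over the sorted losses.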

Rethinking Diverse Human Preference Learning through Principal Component Analysis

Feb 18, 2025

Abstract: Understanding human preferences is crucial for improving foundation models and building personalized AI systems. However, preferences are inherently diverse and complex, making it difficult for traditional reward models to capture their full range. While fine-grained preference data can help, collecting it is expensive and hard to scale. In this paper, we introduce Decomposed Reward Models (DRMs), a novel approach that extracts diverse human preferences from binary comparisons without requiring fine-grained annotations. Our key insight is to represent human preferences as vectors and analyze them using Principal Component Analysis (PCA). By constructing a dataset of embedding differences between preferred and rejected responses, DRMs identify orthogonal basis vectors that capture distinct aspects of preference. These decomposed rewards can be flexibly combined to align with different user needs, offering an interpretable and scalable alternative to traditional reward models. We demonstrate that DRMs effectively extract meaningful preference dimensions (e.g., helpfulness, safety, humor) and adapt to new users without additional training. Our results highlight DRMs as a powerful framework for personalized and interpretable LLM alignment.
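
A rough sketch of the idea in this abstract, under stated assumptions: take embedding differences between preferred and rejected responses, find their leading principal direction, and use it as a linear reward. The toy 2-D embeddings, the helper names `top_direction` and `score`, the use of power iteration, and the choice not to center the differences are all illustrative assumptions rather than details taken from the paper. Only the first direction is computed; further orthogonal directions would need deflation or a full eigendecomposition.

```python
import math


def top_direction(diffs, iters=200):
    """Leading eigenvector of the uncentered second-moment matrix of diffs.

    Hypothetical helper: uses power iteration and never forms the matrix.
    """
    dim = len(diffs[0])
    v = [1.0 / math.sqrt(dim)] * dim
    for _ in range(iters):
        # w = sum_i (x_i . v) * x_i, which equals (X^T X) v
        w = [0.0] * dim
        for x in diffs:
            proj = sum(a * b for a, b in zip(x, v))
            for j in range(dim):
                w[j] += proj * x[j]
        norm = math.sqrt(sum(a * a for a in w))
        if norm == 0.0:
            break
        v = [a / norm for a in w]
    return v


def score(embedding, direction):
    """Linear reward: projection of an embedding onto a direction."""
    return sum(a * b for a, b in zip(embedding, direction))


# Toy embeddings (assumed values) for preferred and rejected responses.
chosen = [[1.2, 0.3], [1.0, 0.1], [1.5, 0.4]]
rejected = [[0.2, 0.2], [0.1, 0.2], [0.4, 0.4]]
diffs = [[c - r for c, r in zip(cv, rv)] for cv, rv in zip(chosen, rejected)]

v = top_direction(diffs)
print(v)
print(score(chosen[0], v) > score(rejected[0], v))
```

In this toy data the preferred responses differ from the rejected ones mainly along the first coordinate, so the recovered direction points almost entirely along that axis.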

2D-OOB: Attributing Data Contribution through Joint Valuation Framework

Aug 07, 2024

Abstract: Data valuation has emerged as a powerful framework to quantify the contribution of each datum to the training of a particular machine learning model. However, it is crucial to recognize that the quality of various cells within a single data point can vary greatly in practice. For example, even in the case of an abnormal data point, not all cells are necessarily noisy. The single scalar valuation assigned by existing methods blurs the distinction between noisy and clean cells of a data point, thereby compromising the interpretability of the valuation. In this paper, we propose 2D-OOB, an out-of-bag estimation framework for jointly determining helpful (or detrimental) samples, as well as the particular cells that drive them. Our comprehensive experiments demonstrate that 2D-OOB achieves state-of-the-art performance across multiple use cases, while being exponentially faster. 2D-OOB excels in detecting and rectifying fine-grained outliers at the cell level, as well as localizing backdoor triggers in data poisoning attacks.

TimeInf: Time Series Data Contribution via Influence Functions

Jul 23, 2024

Abstract: Evaluating the contribution of individual data points to a model's prediction is critical for interpreting model predictions and improving model performance. Existing data contribution methods have been applied to various data types, including tabular data, images, and texts; however, their primary focus has been on i.i.d. settings. Despite the pressing need for principled approaches tailored to time series datasets, the problem of estimating data contribution in such settings remains unexplored, possibly due to challenges associated with handling inherent temporal dependencies. This paper introduces TimeInf, a data contribution estimation method for time-series datasets. TimeInf uses influence functions to attribute model predictions to individual time points while preserving temporal structures. Our extensive empirical results demonstrate that TimeInf outperforms state-of-the-art methods in identifying harmful anomalies and helpful time points for forecasting. Additionally, TimeInf offers intuitive and interpretable attributions of data values, allowing us to easily distinguish diverse anomaly patterns through visualizations.

MMA-RNN: A Multi-level Multi-task Attention-based Recurrent Neural Network for Discrimination and Localization of Atrial Fibrillation

Feb 09, 2023

Abstract: The automatic detection of atrial fibrillation (AF) based on electrocardiogram (ECG) signals has received wide attention both clinically and practically. It is challenging to process ECG signals with cyclical patterns, varying lengths, and unstable quality due to noise and distortion. Besides, there has been insufficient research on separating persistent atrial fibrillation from paroxysmal atrial fibrillation, and little discussion on locating the onsets and end points of AF episodes. It is even more arduous to perform well on these two distinct but interrelated tasks while avoiding the mistakes inherent in stage-by-stage approaches. This paper proposes the Multi-level Multi-task Attention-based Recurrent Neural Network (MMA-RNN) for three-class discrimination on patients and localization of the exact timing of AF episodes. Our model captures three-level sequential features based on a hierarchical architecture utilizing Bidirectional Long and Short-Term Memory Network (Bi-LSTM) and attention layers, and accomplishes the two tasks simultaneously with a multi-head classifier. The model is designed as an end-to-end framework to enhance information interaction and reduce error accumulation. Finally, we conduct experiments on the CPSC 2021 dataset, and the results demonstrate the superior performance of our method, indicating the potential application of MMA-RNN to wearable mobile devices for routine AF monitoring and early diagnosis.

SRCN3D: Sparse R-CNN 3D Surround-View Camera Object Detection and Tracking for Autonomous Driving

Jun 29, 2022

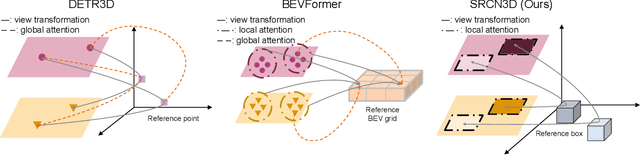

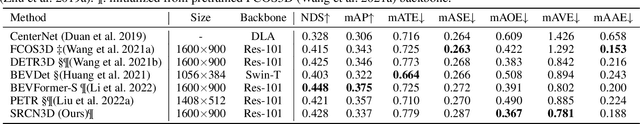

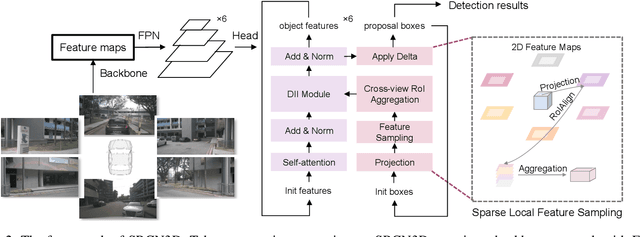

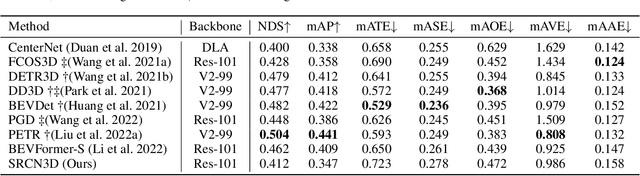

Abstract: Detection And Tracking of Moving Objects (DATMO) is an essential component in environmental perception for autonomous driving. While 3D detectors using surround-view cameras are just flourishing, there is a growing tendency to use different transformer-based methods to learn queries in 3D space from 2D feature maps of the perspective view. This paper proposes Sparse R-CNN 3D (SRCN3D), a novel two-stage fully-convolutional mapping pipeline for surround-view camera detection and tracking. SRCN3D adopts a cascade structure with a twin-track update of both a fixed number of proposal boxes and proposal latent features. Proposal boxes are projected to the perspective view so as to aggregate Region of Interest (RoI) local features. Based on that, proposal features are refined via a dynamic instance interactive head, which then generates classification and the offsets applied to the original bounding boxes. Compared to prior art, our sparse feature sampling module only utilizes local 2D features for adjustment of each corresponding 3D proposal box, leading to a complete sparse paradigm. The proposal features and appearance features are both used in the data association process in a multi-hypotheses 3D multi-object tracking approach. Extensive experiments on the nuScenes dataset demonstrate the effectiveness of our proposed SRCN3D detector and tracker. Code is available at https://github.com/synsin0/SRCN3D.
