Jing Ma

SAIF: A Sparse Autoencoder Framework for Interpreting and Steering Instruction Following of Language Models

Feb 17, 2025

Abstract:The ability of large language models (LLMs) to follow instructions is crucial for their practical applications, yet the underlying mechanisms remain poorly understood. This paper presents a novel framework that leverages sparse autoencoders (SAEs) to interpret how instruction following works in these models. We demonstrate how the features we identify can effectively steer model outputs to align with given instructions. Through analysis of SAE latent activations, we identify specific latents responsible for instruction following behavior. Our findings reveal that instruction following capabilities are encoded by a distinct set of instruction-relevant SAE latents. These latents both show semantic proximity to relevant instructions and demonstrate causal effects on model behavior. Our research highlights several crucial factors for achieving effective steering performance: precise feature identification, the role of the final layer, and optimal instruction positioning. Additionally, we demonstrate that our methodology scales effectively across SAEs and LLMs of varying sizes.

LLM-Enhanced Multiple Instance Learning for Joint Rumor and Stance Detection with Social Context Information

Feb 13, 2025

Abstract:The proliferation of misinformation, such as rumors on social media, has drawn significant attention, prompting various expressions of stance among users. Although rumor detection and stance detection are distinct tasks, they can complement each other. Rumors can be identified by cross-referencing stances in related posts, and stances are influenced by the nature of the rumor. However, existing stance detection methods often require post-level stance annotations, which are costly to obtain. We propose a novel LLM-enhanced MIL approach to jointly predict post stance and claim class labels, supervised solely by claim labels, using an undirected microblog propagation model. Our weakly supervised approach relies only on bag-level labels of claim veracity, aligning with multi-instance learning (MIL) principles. To achieve this, we transform the multi-class problem into multiple MIL-based binary classification problems. We then employ a discriminative attention layer to aggregate the outputs from these classifiers into finer-grained classes. Experiments conducted on three rumor datasets and two stance datasets demonstrate the effectiveness of our approach, highlighting strong connections between rumor veracity and expressed stances in responding posts. Our method shows promising performance in joint rumor and stance detection compared to the state-of-the-art methods.
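
To illustrate the general idea of attention-based aggregation in multi-instance learning mentioned in this abstract, here is a minimal sketch. It is not the paper's model: the function names `softmax` and `attention_pool`, the use of one scalar attention logit per post, and the example numbers are all assumptions made for illustration. It shows only how instance-level (post-level) scores can be combined into a single bag-level (claim-level) score using normalized attention weights.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(instance_probs, attention_logits):
    """Combine instance-level probabilities into one bag-level probability.

    instance_probs: hypothetical per-post probabilities from a binary classifier.
    attention_logits: hypothetical unnormalized importance scores, one per post.
    Returns the weighted bag-level probability and the attention weights.
    """
    weights = softmax(attention_logits)
    bag_prob = sum(w * p for w, p in zip(weights, instance_probs))
    return bag_prob, weights


if __name__ == "__main__":
    # Three posts responding to one claim; the second post gets the most attention.
    bag_prob, weights = attention_pool([0.1, 0.9, 0.4], [0.0, 2.0, 0.5])
    print(round(bag_prob, 3), [round(w, 3) for w in weights])
```

In a weakly supervised setting only the bag-level output would be compared against a label during training, while the instance-level scores are read off afterwards as post-level predictions.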

GraphICL: Unlocking Graph Learning Potential in LLMs through Structured Prompt Design

Jan 27, 2025

Abstract:The growing importance of textual and relational systems has driven interest in enhancing large language models (LLMs) for graph-structured data, particularly Text-Attributed Graphs (TAGs), where samples are represented by textual descriptions interconnected by edges. While research has largely focused on developing specialized graph LLMs through task-specific instruction tuning, a comprehensive benchmark for evaluating LLMs solely through prompt design remains surprisingly absent. Without such a carefully crafted evaluation benchmark, most, if not all, tailored graph LLMs are compared against general LLMs using simplistic queries (e.g., zero-shot reasoning with LLaMA), which can potentially camouflage many advantages as well as unexpected predicaments of them. To achieve more general evaluations and unveil the true potential of LLMs for graph tasks, we introduce the Graph In-context Learning (GraphICL) Benchmark, a comprehensive benchmark comprising novel prompt templates designed to capture graph structure and handle limited label knowledge. Our systematic evaluation shows that general-purpose LLMs equipped with our GraphICL outperform state-of-the-art specialized graph LLMs and graph neural network models in resource-constrained settings and out-of-domain tasks. These findings highlight the significant potential of prompt engineering to enhance LLM performance on graph learning tasks without training and offer a strong baseline for advancing research in graph LLMs.

Backdoor Token Unlearning: Exposing and Defending Backdoors in Pretrained Language Models

Jan 05, 2025

Abstract:Supervised fine-tuning has become the predominant method for adapting large pretrained models to downstream tasks. However, recent studies have revealed that these models are vulnerable to backdoor attacks, where even a small number of malicious samples can successfully embed backdoor triggers into the model. While most existing defense methods focus on post-training backdoor defense, efficiently defending against backdoor attacks during the training phase remains largely unexplored. To address this gap, we propose a novel defense method called Backdoor Token Unlearning (BTU), which proactively detects and neutralizes trigger tokens during the training stage. Our work is based on two key findings: 1) backdoor learning causes distinctive differences between backdoor token parameters and clean token parameters in word embedding layers, and 2) the success of backdoor attacks heavily depends on backdoor token parameters. The BTU defense leverages these properties to identify aberrant embedding parameters and subsequently removes backdoor behaviors using a fine-grained unlearning technique. Extensive evaluations across three datasets and four types of backdoor attacks demonstrate that BTU effectively defends against these threats while preserving the model's performance on primary tasks. Our code is available at https://github.com/XDJPH/BTU.

ClarityEthic: Explainable Moral Judgment Utilizing Contrastive Ethical Insights from Large Language Models

Dec 17, 2024

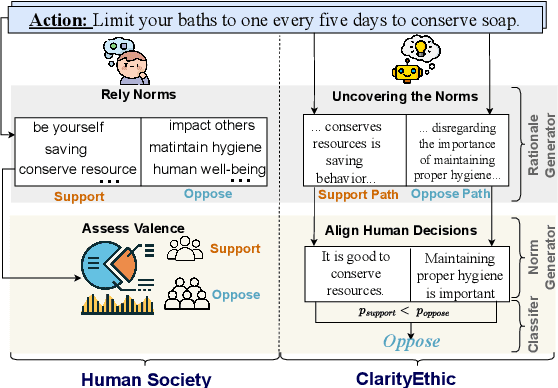

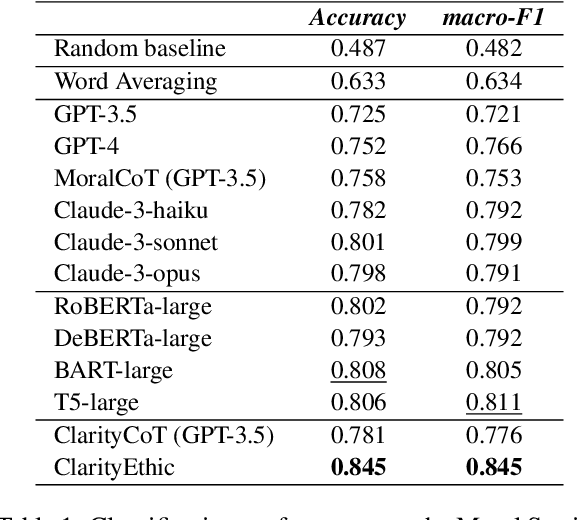

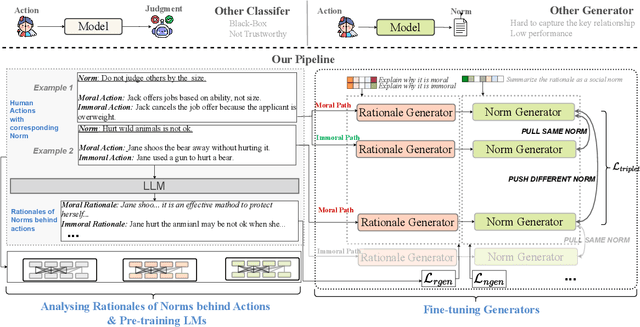

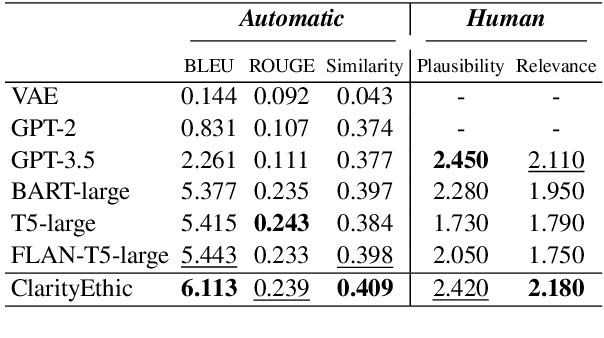

Abstract:With the rise and widespread use of Large Language Models (LLMs), ensuring their safety is crucial to prevent harm to humans and promote ethical behaviors. However, directly assessing value valence (i.e., support or oppose) by leveraging large-scale data training is untrustworthy and unexplainable. We assume that emulating how humans rely on social norms to make moral decisions can help LLMs understand and predict moral judgment. However, capturing human values remains a challenge, as multiple related norms might conflict in specific contexts. Norms that are upheld by the majority and promote the well-being of society are more likely to be accepted and widely adopted (e.g., "don't cheat"). Therefore, it is essential for an LLM to identify the appropriate norms for a given scenario before making moral decisions. To this end, we introduce a novel moral judgment approach called ClarityEthic that leverages LLMs' reasoning ability and contrastive learning to uncover relevant social norms for human actions from different perspectives and select the most reliable one to enhance judgment accuracy. Extensive experiments demonstrate that our method outperforms state-of-the-art approaches in moral judgment tasks. Moreover, human evaluations confirm that the generated social norms provide plausible explanations that support the judgments. This suggests that modeling human moral judgment by emulating humans' moral strategy is promising for improving the ethical behaviors of LLMs.

ScratchEval: Are GPT-4o Smarter than My Child? Evaluating Large Multimodal Models with Visual Programming Challenges

Nov 28, 2024

Abstract:Recent advancements in large multimodal models (LMMs) have showcased impressive code generation capabilities, primarily evaluated through image-to-code benchmarks. However, these benchmarks are limited to specific visual programming scenarios where the logical reasoning and the multimodal understanding capacities are split apart. To fill this gap, we propose ScratchEval, a novel benchmark designed to evaluate the visual programming reasoning ability of LMMs. ScratchEval is based on Scratch, a block-based visual programming language widely used in children's programming education. By integrating visual elements and embedded programming logic, ScratchEval requires the model to process both visual information and code structure, thereby comprehensively evaluating its programming intent understanding ability. Our evaluation approach goes beyond the traditional image-to-code mapping and focuses on unified logical thinking and problem-solving abilities, providing a more comprehensive and challenging framework for evaluating the visual programming ability of LMMs. ScratchEval not only fills the gap in existing evaluation methods, but also provides new insights for the future development of LMMs in the field of visual programming. Our benchmark can be accessed at https://github.com/HKBUNLP/ScratchEval.

VideoAutoArena: An Automated Arena for Evaluating Large Multimodal Models in Video Analysis through User Simulation

Nov 20, 2024

Abstract:Large multimodal models (LMMs) with advanced video analysis capabilities have recently garnered significant attention. However, most evaluations rely on traditional methods like multiple-choice questions in benchmarks such as VideoMME and LongVideoBench, which often lack the depth needed to capture the complex demands of real-world users. To address this limitation, and given the prohibitive cost and slow pace of human annotation for video tasks, we introduce VideoAutoArena, an arena-style benchmark inspired by LMSYS Chatbot Arena's framework, designed to automatically assess LMMs' video analysis abilities. VideoAutoArena utilizes user simulation to generate open-ended, adaptive questions that rigorously assess model performance in video understanding. The benchmark features an automated, scalable evaluation framework, incorporating a modified ELO Rating System for fair and continuous comparisons across multiple LMMs. To validate our automated judging system, we construct a 'gold standard' using a carefully curated subset of human annotations, demonstrating that our arena strongly aligns with human judgment while maintaining scalability. Additionally, we introduce a fault-driven evolution strategy, progressively increasing question complexity to push models toward handling more challenging video analysis scenarios. Experimental results demonstrate that VideoAutoArena effectively differentiates among state-of-the-art LMMs, providing insights into model strengths and areas for improvement. To further streamline our evaluation, we introduce VideoAutoBench as an auxiliary benchmark, where human annotators label winners in a subset of VideoAutoArena battles. We use GPT-4o as a judge to compare responses against these human-validated answers. Together, VideoAutoArena and VideoAutoBench offer a cost-effective and scalable framework for evaluating LMMs in user-centric video analysis.
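
For readers unfamiliar with arena-style rating, the following is a minimal sketch of the standard Elo update for one pairwise battle. The abstract describes a modified ELO Rating System whose details are not given here, so this shows only the textbook rule; the function names, the K-factor of 32, and the starting rating of 1000 are assumptions for illustration.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the standard Elo logistic model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_elo(rating_a: float, rating_b: float, score_a: float, k: float = 32.0):
    """Return updated (rating_a, rating_b) after one battle.

    score_a is 1.0 if model A wins, 0.0 if it loses, and 0.5 for a tie.
    k is the step size (K-factor); 32 is a common default, assumed here.
    """
    exp_a = expected_score(rating_a, rating_b)
    new_a = rating_a + k * (score_a - exp_a)
    new_b = rating_b + k * ((1.0 - score_a) - (1.0 - exp_a))
    return new_a, new_b


if __name__ == "__main__":
    # Two models start at the same (assumed) rating; model A wins the battle.
    print(update_elo(1000.0, 1000.0, score_a=1.0))
```

Because the two rating changes are equal and opposite, the total rating across both models is conserved after each battle, which is what allows continuous comparison as new models and battles are added.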

Invariant Shape Representation Learning For Image Classification

Nov 19, 2024

Abstract:Geometric shape features have been widely used as strong predictors for image classification. Nevertheless, most existing classifiers such as deep neural networks (DNNs) directly leverage the statistical correlations between these shape features and target variables. However, these correlations can often be spurious and unstable across different environments (e.g., in different age groups, certain types of brain changes have unstable relations with neurodegenerative disease), leading to biased or inaccurate predictions. In this paper, we introduce a novel framework that for the first time develops invariant shape representation learning (ISRL) to further strengthen the robustness of image classifiers. In contrast to existing approaches that mainly derive features in the image space, our model ISRL is designed to jointly capture invariant features in latent shape spaces parameterized by deformable transformations. To achieve this goal, we develop a new learning paradigm based on invariant risk minimization (IRM) to learn invariant representations of image and shape features across multiple training distributions/environments. By embedding the features that are invariant with regard to target variables in different environments, our model consistently offers more accurate predictions. We validate our method by performing classification tasks on simulated 2D images as well as real 3D brain and cine cardiovascular magnetic resonance images (MRIs). Our code is publicly available at https://github.com/tonmoy-hossain/ISRL.

Multimodal Clinical Reasoning through Knowledge-augmented Rationale Generation

Nov 12, 2024

Abstract:Clinical rationales play a pivotal role in accurate disease diagnosis; however, many models predominantly use discriminative methods and overlook the importance of generating supportive rationales. Rationale distillation is a process that transfers knowledge from large language models (LLMs) to smaller language models (SLMs), thereby enhancing the latter's ability to break down complex tasks. Despite its benefits, rationale distillation alone is inadequate for addressing domain knowledge limitations in tasks requiring specialized expertise, such as disease diagnosis. Effectively embedding domain knowledge in SLMs poses a significant challenge. While current LLMs are primarily geared toward processing textual data, multimodal LLMs that incorporate time series data, especially electronic health records (EHRs), are still evolving. To tackle these limitations, we introduce ClinRaGen, an SLM optimized for multimodal rationale generation in disease diagnosis. ClinRaGen incorporates a unique knowledge-augmented attention mechanism to merge domain knowledge with time series EHR data, utilizing a stepwise rationale distillation strategy to produce both textual and time series-based clinical rationales. Our evaluations show that ClinRaGen markedly improves the SLM's capability to interpret multimodal EHR data and generate accurate clinical rationales, supporting more reliable disease diagnosis, advancing LLM applications in healthcare, and narrowing the performance divide between LLMs and SLMs.

From General to Specific: Utilizing General Hallucination to Automatically Measure the Role Relationship Fidelity for Specific Role-Play Agents

Nov 12, 2024

Abstract:The advanced role-playing capabilities of Large Language Models (LLMs) have paved the way for developing Role-Playing Agents (RPAs). However, existing benchmarks, such as HPD, which incorporates manually scored character relationships into the context for LLMs to sort coherence, and SocialBench, which uses specific profiles generated by LLMs in the context of multiple-choice tasks to assess character preferences, face limitations like poor generalizability, implicit and inaccurate judgments, and excessive context length. To address the above issues, we propose an automatic, scalable, and generalizable paradigm. Specifically, we construct a benchmark by extracting relations from a general knowledge graph and leverage RPA's inherent hallucination properties to prompt it to interact across roles, employing ChatGPT for stance detection and defining relationship hallucination along with three related metrics. Extensive experiments validate the effectiveness and stability of our metrics. Our findings further explore factors influencing these metrics and discuss the trade-off between relationship hallucination and factuality.
