Jing Gao

Zhejiang University

Towards Self-Supervision for Video Identification of Individual Holstein-Friesian Cattle: The Cows2021 Dataset

May 05, 2021

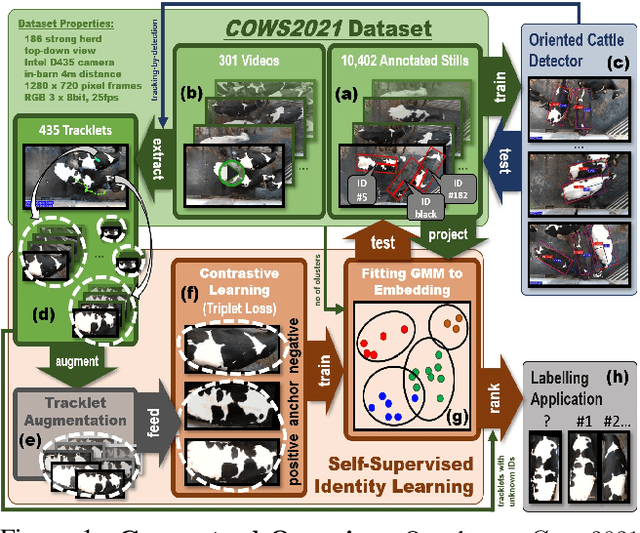

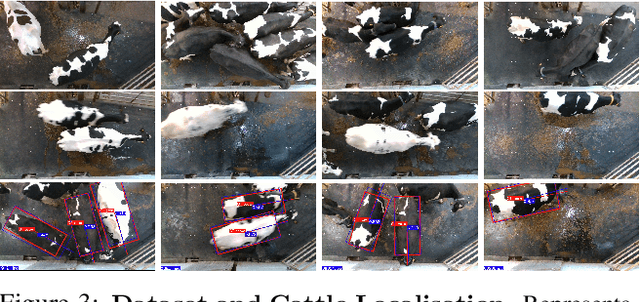

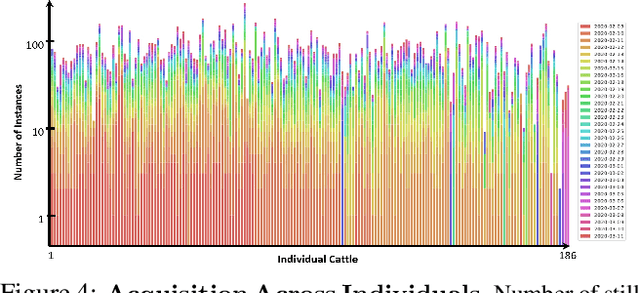

Abstract: In this paper we publish Cows2021, the largest identity-annotated Holstein-Friesian cattle dataset, and a first self-supervision framework for video identification of individual animals. The dataset contains 10,402 RGB images with labels for localisation and identity as well as 301 videos from the same herd. The data shows top-down in-barn imagery, which captures the breed's individually distinctive black and white coat pattern. Motivated by the labelling burden involved in constructing visual cattle identification systems, we propose exploiting the temporal coat pattern appearance across videos as a self-supervision signal for animal identity learning. Using an individual-agnostic cattle detector that yields oriented bounding-boxes, rotation-normalised tracklets of individuals are formed via tracking-by-detection and enriched via augmentations. This produces a 'positive' sample set per tracklet, which is paired against a 'negative' set sampled from random cattle of other videos. Frame-triplet contrastive learning is then employed to construct a metric latent space. Fitting a Gaussian Mixture Model to this space yields a cattle identity classifier. Results show a Top-1 accuracy of 57.0%, a Top-4 accuracy of 76.9%, and an Adjusted Rand Index of 0.53 compared to the ground truth. Whilst supervised training surpasses this benchmark by a large margin, we conclude that self-supervision can nevertheless play a highly effective role in speeding up labelling efforts when initially constructing supervision information. We provide all data and full source code alongside an analysis and evaluation of the system.
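To make the frame-triplet idea concrete, here is a minimal sketch of a triplet loss on embedding vectors. The abstract does not state the exact loss used, so the margin-based form, the Euclidean distance, and the names `euclidean` and `triplet_margin_loss` are assumptions chosen for illustration only.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two equal-length embedding vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def triplet_margin_loss(anchor, positive, negative, margin=1.0):
    """Standard margin-based triplet loss (an assumed form, not the paper's).

    The loss is zero once the negative is at least `margin` further from
    the anchor than the positive is.
    """
    d_pos = euclidean(anchor, positive)
    d_neg = euclidean(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)


# Anchor and positive stand in for frames of the same tracklet,
# the negative for a frame taken from another video.
easy = triplet_margin_loss([0.0, 0.0], [0.0, 1.0], [3.0, 4.0])
hard = triplet_margin_loss([0.0, 0.0], [0.0, 1.0], [0.0, 1.5])
```

In this toy case the first triplet is already well separated and contributes no loss, while the second has a negative that lies too close to the anchor and is penalised.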

Fairness-aware Outlier Ensemble

Mar 17, 2021

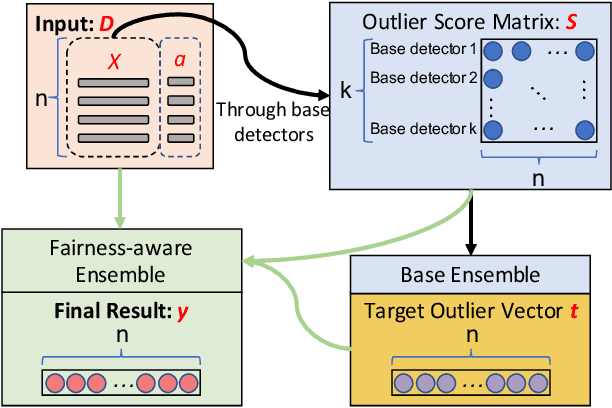

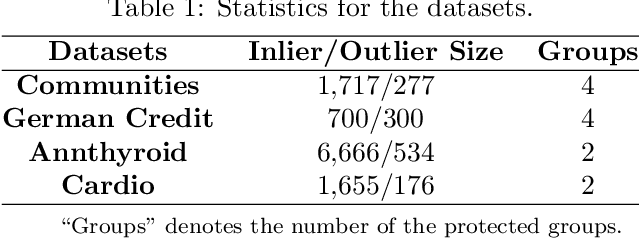

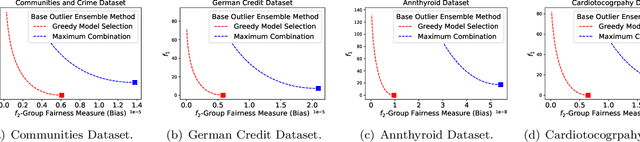

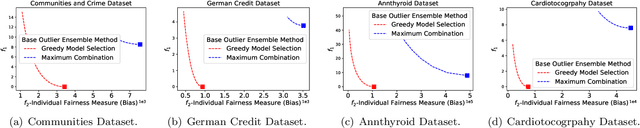

Abstract: Outlier ensemble methods have shown outstanding performance in discovering instances that are significantly different from the majority of the data. However, without awareness of fairness, their applicability in ethically sensitive scenarios, such as fraud detection and judicial judgement systems, could be degraded. In this paper, we propose to reduce the bias of outlier ensemble results through a fairness-aware ensemble framework. Due to the lack of ground truth in the outlier detection task, the key challenge is how to mitigate the degradation in detection performance as fairness improves. To address this challenge, we define a distance measure based on the output of conventional outlier ensemble techniques to estimate the possible cost associated with detection performance degradation. Meanwhile, we propose a post-processing framework that tunes the original ensemble results through a stacking process so that we can achieve a trade-off between fairness and detection performance. Detection performance is measured by the area under the ROC curve (AUC), while fairness is measured at both the group and individual level. Experiments on eight public datasets are conducted. Results demonstrate the effectiveness of the proposed framework in improving the fairness of outlier ensemble results. We also analyze the trade-off between AUC and fairness.
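As a reminder of what the AUC measures for outlier scores, the following sketch computes it as the probability that a randomly chosen outlier receives a higher score than a randomly chosen inlier, counting ties as one half. The function name `roc_auc` and the toy scores are illustrative assumptions; the fairness measures of the paper are not reproduced here.

```python
def roc_auc(scores, labels):
    """Pairwise (rank-based) AUC for outlier scores.

    `labels` uses 1 for outliers and 0 for inliers; a higher score means
    "more outlying". Ties between an outlier and an inlier count as 0.5.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one outlier and one inlier")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Two outliers (label 1) and two inliers (label 0).
auc = roc_auc([0.9, 0.8, 0.3, 0.1], [1, 0, 1, 0])
```

This quadratic-time version is meant for readability; a sort-based implementation would be preferable for large datasets.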

On Estimating Recommendation Evaluation Metrics under Sampling

Mar 03, 2021

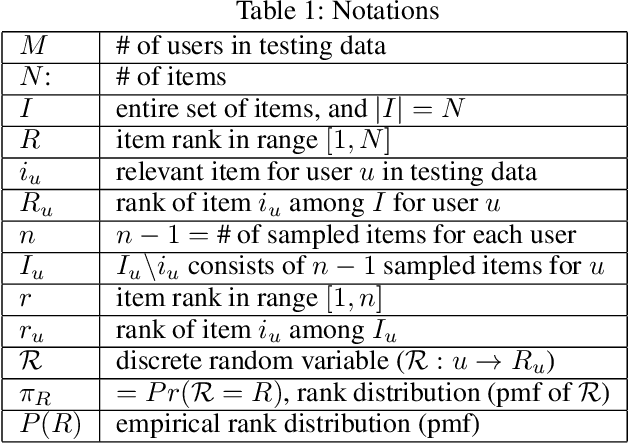

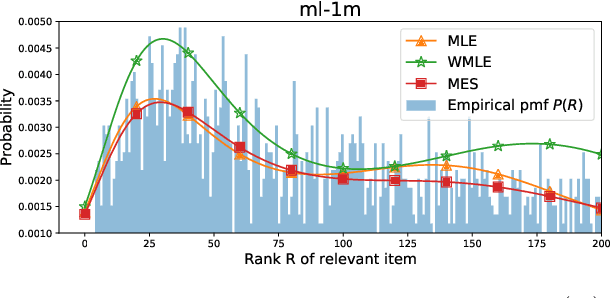

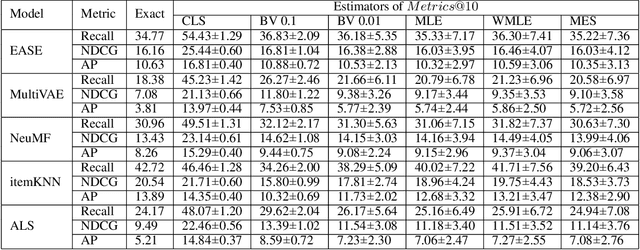

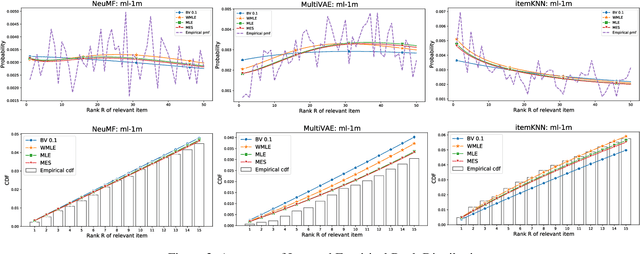

Abstract: Since the recent study by Krichene and Rendle (Krichene and Rendle 2020) on sampling-based top-k evaluation metrics for recommendation, there has been much debate on the validity of using sampling to evaluate recommendation algorithms. Though their work and the recent work of Li et al. (Li et al. 2020) have proposed some basic approaches for mapping the sampling-based metrics to their global counterparts, which rank the entire set of items, there is still a lack of understanding and consensus on how sampling should be used for recommendation evaluation. The proposed approaches are either rather uninformative (linking sampling to metric evaluation) or work only on simple metrics, such as Recall/Precision (Krichene and Rendle 2020; Li et al. 2020). In this paper, we introduce a new research problem on learning the empirical rank distribution, and a new approach based on the estimated rank distribution, to estimate the top-k metrics. Since this question is closely related to the underlying mechanism of sampling for recommendation, tackling it can help better understand the power of sampling and can help resolve the questions of whether and how we should use sampling for evaluating recommendation. We introduce two approaches, based on MLE (Maximum Likelihood Estimation) and its weighted variants and on ME (Maximum Entropy) principles, to recover the empirical rank distribution, and then utilize them for metrics estimation. The experimental results show the advantages of using the new approaches for evaluating recommendation algorithms based on top-k metrics.
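The link between a global rank and a sampled rank can be illustrated with a small sketch. It assumes negatives are drawn uniformly with replacement from the non-target items, so the number of sampled negatives that outrank the target follows a binomial distribution. The function name `prob_sampled_hit` and its parameters are hypothetical, and this is only the sampling model, not the MLE or ME estimators proposed in the paper.

```python
from math import comb


def prob_sampled_hit(global_rank, num_items, num_negatives, k):
    """Probability that the target item lands in the sampled top-k.

    Assumes `num_negatives` items are sampled uniformly with replacement
    from the `num_items - 1` non-target items. Each sampled negative
    outranks the target with probability p = (R - 1) / (N - 1), so the
    sampled rank is 1 + Binomial(num_negatives, p).
    """
    p = (global_rank - 1) / (num_items - 1)
    # The target is a hit when at most k - 1 sampled negatives outrank it.
    upper = min(k, num_negatives + 1)
    return sum(
        comb(num_negatives, j) * p**j * (1 - p) ** (num_negatives - j)
        for j in range(upper)
    )


# An item ranked 6th of 11 globally, evaluated against 2 sampled negatives:
# it is top-1 in the sample only if neither negative outranks it.
hit_at_1 = prob_sampled_hit(global_rank=6, num_items=11, num_negatives=2, k=1)
```

Under this model an item with a mediocre global rank can still appear in the sampled top-k with substantial probability, which is why sampled metrics need correction before being read as global ones.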

Adaptive Self-training for Few-shot Neural Sequence Labeling

Oct 07, 2020

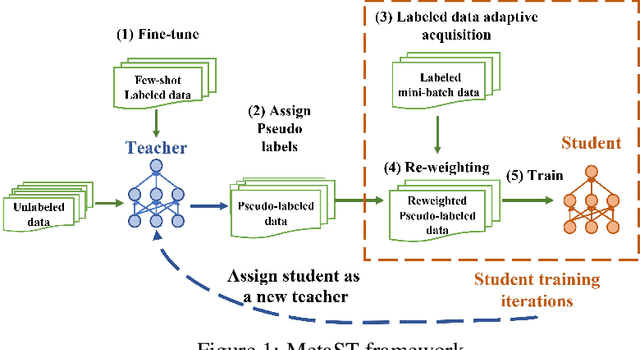

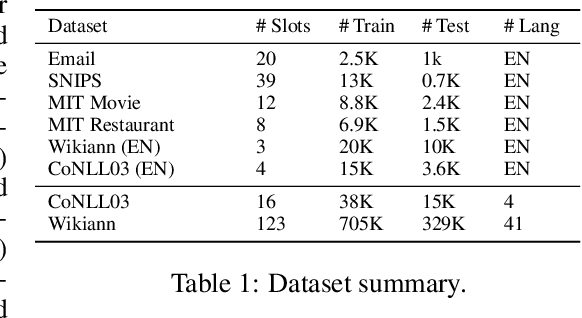

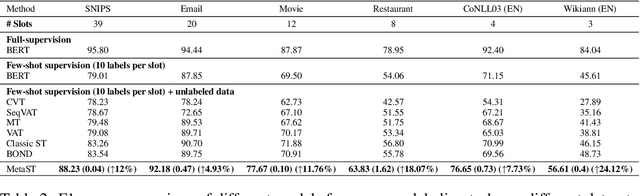

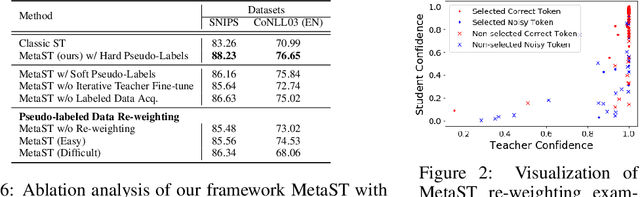

Abstract: Neural sequence labeling is an important technique employed for many Natural Language Processing (NLP) tasks, such as Named Entity Recognition (NER), slot tagging for dialog systems and semantic parsing. Large-scale pre-trained language models obtain very good performance on these tasks when fine-tuned on large amounts of task-specific labeled data. However, such large-scale labeled datasets are difficult to obtain for several tasks and domains due to the high cost of human annotation as well as privacy and data access constraints for sensitive user applications. This is exacerbated for sequence labeling tasks, which require such annotations at the token level. In this work, we develop techniques to address the label scarcity challenge for neural sequence labeling models. Specifically, we develop self-training and meta-learning techniques for few-shot training of neural sequence taggers, namely MetaST. While self-training serves as an effective mechanism to learn from large amounts of unlabeled data, meta-learning helps in adaptive sample re-weighting to mitigate error propagation from noisy pseudo-labels. Extensive experiments on six benchmark datasets, including two massive multilingual NER datasets and four slot tagging datasets for task-oriented dialog systems, demonstrate the effectiveness of our method, with around 10% improvement over state-of-the-art systems for the 10-shot setting.

Efficient Knowledge Graph Validation via Cross-Graph Representation Learning

Aug 16, 2020

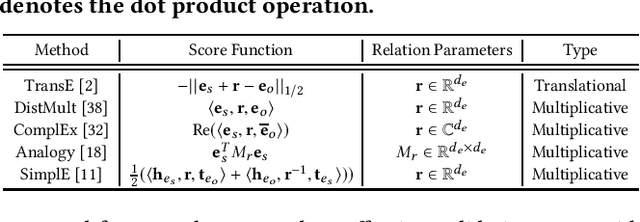

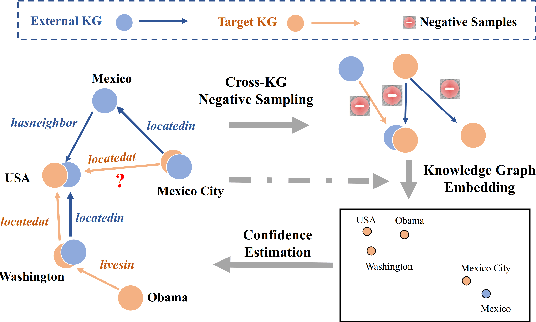

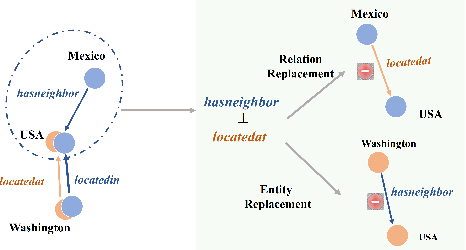

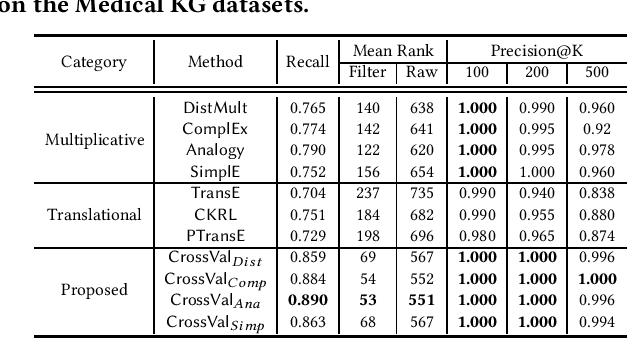

Abstract: Recent advances in information extraction have motivated the automatic construction of huge Knowledge Graphs (KGs) by mining large-scale text corpora. However, automatic extraction unavoidably introduces noisy facts into KGs. To validate the correctness of facts (i.e., triplets) inside a KG, one possible approach is to map the triplets into vector representations by capturing the semantic meanings of facts. Although many representation learning approaches have been developed for knowledge graphs, these methods are not effective for validation. They usually assume that facts are correct, and thus may overfit noisy facts and fail to detect such facts. Towards effective KG validation, we propose to leverage an external human-curated KG as an auxiliary information source to help detect the errors in a target KG. The external KG is built upon human-curated knowledge repositories and tends to have high precision. On the other hand, although the target KG built by information extraction from texts has low precision, it can cover new or domain-specific facts that are not in any human-curated repositories. To tackle this challenging task, we propose a cross-graph representation learning framework, i.e., CrossVal, which can leverage an external KG to validate the facts in the target KG efficiently. This is achieved by embedding triplets based on their semantic meanings, drawing cross-KG negative samples and estimating a confidence score for each triplet based on its degree of correctness. We evaluate the proposed framework on datasets across different domains. Experimental results show that the proposed framework achieves the best performance compared with the state-of-the-art methods on large-scale KGs.

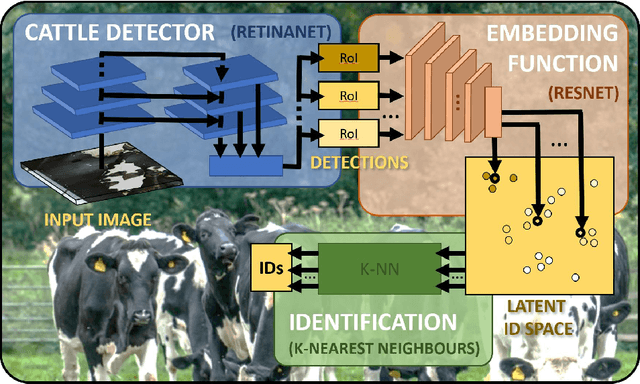

Visual Identification of Individual Holstein-Friesian Cattle via Deep Metric Learning

Jul 04, 2020

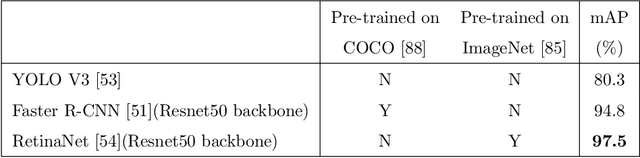

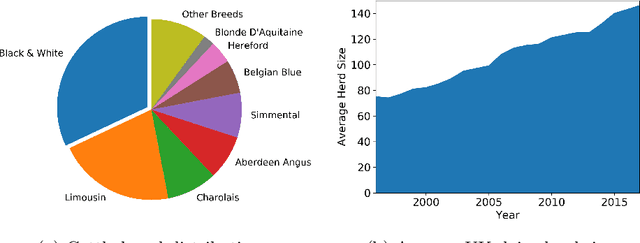

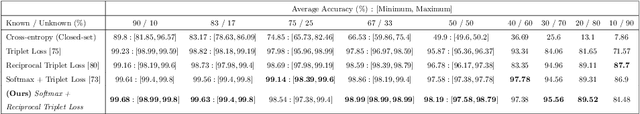

Abstract: Holstein-Friesian cattle exhibit individually-characteristic black and white coat patterns visually akin to those arising from Turing's reaction-diffusion systems. This work takes advantage of these natural markings in order to automate visual detection and biometric identification of individual Holstein-Friesians via convolutional neural networks and deep metric learning techniques. Existing approaches rely on markings, tags or wearables with a variety of maintenance requirements, whereas we present a totally hands-off method for the automated detection, localisation, and identification of individual animals from overhead imaging in an open herd setting, i.e. where new additions to the herd are identified without re-training. We propose the use of SoftMax-based reciprocal triplet loss to address the identification problem and evaluate the techniques in detail against fixed herd paradigms. We find that deep metric learning systems show strong performance even when many cattle unseen during system training are to be identified and re-identified, achieving 98.2% accuracy when trained on just half of the population. This work paves the way for non-intrusive monitoring of cattle applicable to precision farming and surveillance for automated productivity, health and welfare monitoring, and to veterinary research such as behavioural analysis, disease outbreak tracing, and more. Key parts of the source code, network weights and underpinning datasets are available publicly.

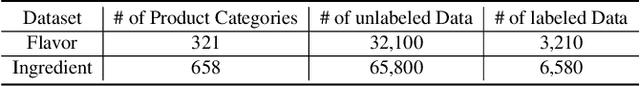

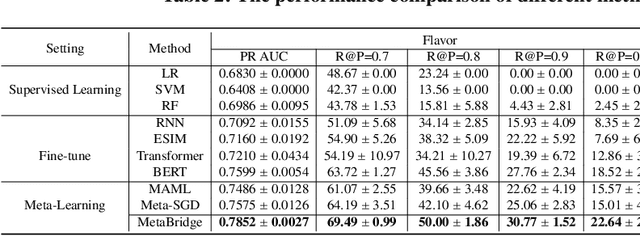

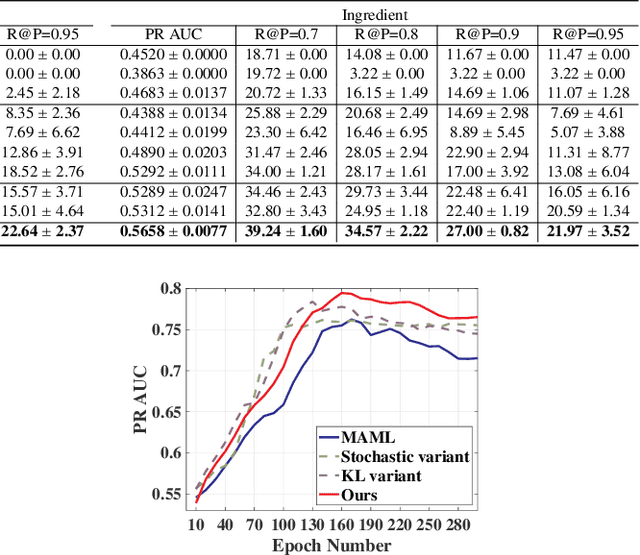

Automatic Validation of Textual Attribute Values in E-commerce Catalog by Learning with Limited Labeled Data

Jun 23, 2020

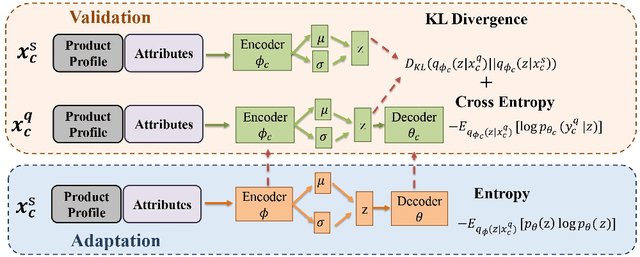

Abstract: Product catalogs are valuable resources for eCommerce websites. In the catalog, a product is associated with multiple attributes whose values are short texts, such as product name, brand, functionality and flavor. Usually individual retailers self-report these key values, and thus the catalog information unavoidably contains noisy facts. Although existing deep neural network models have shown success in cross-checking two pieces of text, their success depends on a large set of high-quality labeled data, which is hard to obtain in this validation task because products span a variety of categories. To address these challenges, we propose a novel meta-learning latent variable approach, called MetaBridge, which can learn transferable knowledge from a subset of categories with limited labeled data and capture the uncertainty of never-seen categories with unlabeled data. More specifically, we make the following contributions. (1) We formalize the problem of validating the textual attribute values of products from a variety of categories as a natural language inference task in the few-shot learning setting, and propose a meta-learning latent variable model to jointly process the signals obtained from product profiles and textual attribute values. (2) We propose to integrate meta-learning and latent variables in a unified model to effectively capture the uncertainty of various categories. (3) We propose a novel objective function based on a latent variable model in the few-shot learning setting, which ensures distribution consistency between unlabeled and labeled data and prevents overfitting by sampling from the learned distribution. Extensive experiments on real eCommerce datasets from hundreds of categories demonstrate the effectiveness of MetaBridge on textual attribute validation and its outstanding performance compared with state-of-the-art approaches.

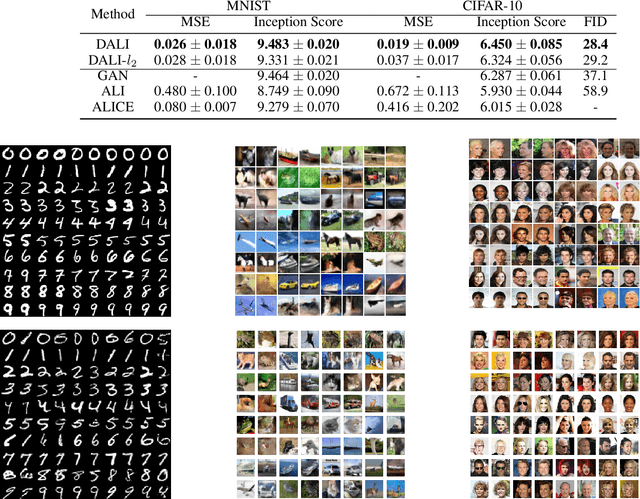

Decomposed Adversarial Learned Inference

Apr 21, 2020

Abstract: Effective inference for a generative adversarial model remains an important and challenging problem. We propose a novel approach, Decomposed Adversarial Learned Inference (DALI), which explicitly matches prior and conditional distributions in both data and code spaces, and puts a direct constraint on the dependency structure of the generative model. We derive an equivalent form of the prior and conditional matching objective that can be optimized efficiently without any parametric assumption on the data. We validate the effectiveness of DALI on the MNIST, CIFAR-10, and CelebA datasets by conducting quantitative and qualitative evaluations. Results demonstrate that DALI significantly improves both reconstruction and generation as compared to other adversarial inference models.

Large-scale Real-time Personalized Similar Product Recommendations

Apr 12, 2020

Abstract: Similar product recommendation is one of the most common scenarios in e-commerce. Many recommendation algorithms, such as item-to-item Collaborative Filtering, work by measuring item similarities. In this paper, we introduce our real-time personalized algorithm to model product similarity and real-time user interests. We also introduce several other baseline algorithms, including an image-similarity-based method, item-to-item collaborative filtering, and item2vec, and compare them on our large-scale real-world e-commerce dataset. The algorithms which achieve good offline results are also tested on the online e-commerce website. Our personalized method achieves a 10% improvement on the add-cart number in the real-world e-commerce scenario.
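For readers unfamiliar with the item-to-item collaborative filtering baseline, a minimal sketch follows. It assumes binary user-item interactions and cosine similarity over co-occurrence counts; the function names `item_similarities` and `most_similar` and the toy data are hypothetical, and the paper's own personalized algorithm is not shown.

```python
from collections import defaultdict
from itertools import combinations
from math import sqrt


def item_similarities(user_items):
    """Cosine similarity between items from binary user-item interactions.

    `user_items` maps a user id to the list of items that user interacted
    with. Similarity of items a and b is
    co_count(a, b) / sqrt(count(a) * count(b)).
    """
    item_count = defaultdict(int)
    co_count = defaultdict(int)
    for items in user_items.values():
        unique = sorted(set(items))
        for item in unique:
            item_count[item] += 1
        for a, b in combinations(unique, 2):
            co_count[(a, b)] += 1

    sims = defaultdict(dict)
    for (a, b), c in co_count.items():
        score = c / sqrt(item_count[a] * item_count[b])
        sims[a][b] = score
        sims[b][a] = score
    return sims


def most_similar(sims, item, top_n=3):
    """Items most similar to `item`, best first (ties broken by name)."""
    ranked = sorted(sims.get(item, {}).items(), key=lambda kv: (-kv[1], kv[0]))
    return ranked[:top_n]


interactions = {
    "u1": ["tea", "mug"],
    "u2": ["tea", "mug", "kettle"],
    "u3": ["tea", "kettle"],
    "u4": ["mug"],
}
sims = item_similarities(interactions)
neighbours = most_similar(sims, "tea")
```

Here "kettle" ranks above "mug" for "tea" even though both co-occur with it twice, because "kettle" has fewer interactions overall and the cosine normalisation rewards that.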

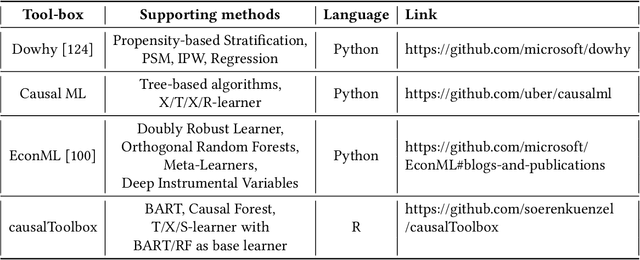

A Survey on Causal Inference

Feb 05, 2020

Abstract: Causal inference has been a critical research topic across many domains, such as statistics, computer science, education, public policy and economics, for decades. Nowadays, estimating causal effects from observational data has become an appealing research direction owing to the large amount of available data and the low budget requirement compared with randomized controlled trials. Spurred by the rapidly developing field of machine learning, various causal effect estimation methods for observational data have sprung up. In this survey, we provide a comprehensive review of causal inference methods under the potential outcome framework, one of the well-known causal inference frameworks. The methods are divided into two categories depending on whether or not they require all three assumptions of the potential outcome framework. For each category, both the traditional statistical methods and the recent machine learning enhanced methods are discussed and compared. Plausible applications of these methods are also presented, including applications in advertising, recommendation, medicine and so on. Moreover, the commonly used benchmark datasets and open-source code are summarized to help researchers and practitioners explore, evaluate and apply the causal inference methods.
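The potential outcome framework can be summarised in a few lines of notation. The abstract does not name its three assumptions; the block below uses the forms in which they are commonly stated (SUTVA, ignorability, positivity), and the symbols $W$, $X$ and $Y(w)$ are notational assumptions rather than quotations from the survey.

```latex
% Potential outcomes for unit i under treatment (W = 1) and control (W = 0).
% Only one of the two is ever observed:
\[
  Y_i = W_i \, Y_i(1) + (1 - W_i) \, Y_i(0)
\]
% Individual and average treatment effects:
\[
  \mathrm{ITE}_i = Y_i(1) - Y_i(0),
  \qquad
  \mathrm{ATE} = \mathbb{E}\left[ Y(1) - Y(0) \right]
\]
% Commonly stated assumptions:
%   SUTVA:         no interference between units, one version of treatment
%   Ignorability:  W \perp \left( Y(0), Y(1) \right) \mid X
%   Positivity:    0 < P(W = 1 \mid X = x) < 1 \text{ for all } x
% Under these, the ATE is identifiable from observational data:
\[
  \mathrm{ATE}
  = \mathbb{E}_X\left[
      \mathbb{E}[\, Y \mid X, W = 1 \,] - \mathbb{E}[\, Y \mid X, W = 0 \,]
    \right]
\]
```

The last identity is what makes estimation from observational data possible: the unobservable contrast between potential outcomes is replaced by a contrast between observed conditional means.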
