Jie Gui

Fast Online Hashing with Multi-Label Projection

Dec 03, 2022

Abstract:Hashing has been widely researched as a solution to the large-scale approximate nearest neighbor search problem owing to its advantages in time and storage. In recent years, a number of online hashing methods have emerged, which update the hash functions to adapt to new stream data and realize dynamic retrieval. However, existing online hashing methods must update the whole database with the latest hash functions when a query arrives, which leads to low retrieval efficiency as the stream data keeps growing. Moreover, these methods ignore the supervision relationship among the examples, especially in the multi-label case. In this paper, we propose a novel Fast Online Hashing (FOH) method which updates the binary codes of only a small part of the database. Specifically, we first build a query pool in which the nearest neighbors of each central point are recorded. When a new query arrives, only the binary codes of the corresponding potential neighbors are updated. In addition, we create a similarity matrix which takes the multi-label supervision information into account and introduce a multi-label projection loss to further preserve the similarity among the multi-label data. The experimental results on two common benchmarks show that the proposed FOH reduces query time by up to 6.28 seconds compared with state-of-the-art baselines while retaining competitive retrieval accuracy.

Good helper is around you: Attention-driven Masked Image Modeling

Dec 01, 2022

Abstract:Masked image modeling (MIM) has shown huge potential in self-supervised learning in the past year. Benefiting from the universal backbone vision transformer, MIM learns self-supervised visual representations by masking a subset of the image patches while attempting to recover the missing pixels. Most previous works mask patches of the image randomly, which underutilizes the semantic information that is beneficial to visual representation learning. Moreover, due to the large size of the backbone, most previous works have to spend much time on pre-training. In this paper, we propose the Attention-driven Masking and Throwing Strategy (AMT), which addresses both problems. We first leverage the self-attention mechanism to obtain the semantic information of the image automatically during training, without using any supervised methods. The masking strategy can be guided by that information to mask areas selectively, which is helpful for representation learning. Moreover, a redundant patch throwing strategy is proposed, which makes learning more efficient. As a plug-and-play module for masked image modeling, AMT improves the linear probing accuracy of MAE by 2.9% to 5.9% on CIFAR-10/100, STL-10, Tiny ImageNet, and ImageNet-1K, and improves the fine-tuning accuracy of MAE and SimMIM. This design also achieves superior performance on downstream detection and segmentation tasks. Code is available at https://github.com/guijiejie/AMT.
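
The idea of letting attention scores decide which patches are masked and which are thrown away can be illustrated with a small sketch. The abstract does not specify the sampling rule, so everything here is an assumption made for illustration: the function name `attention_guided_split`, the choice to sample masked patches with probability proportional to attention, and the choice to throw the lowest-attention patches among the rest. It is not the AMT implementation; see the linked repository for that.

```python
import random


def attention_guided_split(attn, mask_ratio, throw_ratio, seed=0):
    """Split patch indices into (masked, thrown, visible).

    attn: one positive attention score per patch (hypothetical input).
    Assumed rule: masked patches are sampled without replacement with
    probability proportional to attention; among the remaining patches,
    the lowest-attention ones are thrown and the rest stay visible.
    """
    rng = random.Random(seed)
    n = len(attn)
    n_mask = int(n * mask_ratio)
    n_throw = int(n * throw_ratio)

    remaining = list(range(n))
    masked = []
    # Weighted sampling without replacement, one patch at a time.
    for _ in range(n_mask):
        weights = [attn[i] for i in remaining]
        pick = rng.choices(remaining, weights=weights, k=1)[0]
        remaining.remove(pick)
        masked.append(pick)

    # Throw the least-attended patches that were not masked.
    remaining.sort(key=lambda i: attn[i])
    thrown = remaining[:n_throw]
    visible = remaining[n_throw:]
    return sorted(masked), sorted(thrown), sorted(visible)


if __name__ == "__main__":
    scores = [0.05, 0.30, 0.10, 0.20, 0.02, 0.08, 0.15, 0.10]
    masked, thrown, visible = attention_guided_split(scores, 0.5, 0.25)
    print("masked:", masked)
    print("thrown:", thrown)
    print("visible:", visible)
```

In this sketch only the visible patches would be fed to the encoder, which is where a throwing step could save computation.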

AlignVE: Visual Entailment Recognition Based on Alignment Relations

Nov 16, 2022

Abstract:Visual entailment (VE) is the task of recognizing whether the semantics of a hypothesis text can be inferred from a given premise image, and is one special task among recently emerged vision and language understanding tasks. Currently, most existing VE approaches are derived from methods for visual question answering. They recognize visual entailment by quantifying the similarity between the hypothesis and the premise in terms of content semantic features from multiple modalities. Such approaches, however, ignore VE's unique nature of relation inference between the premise and the hypothesis. Therefore, in this paper, a new architecture called AlignVE is proposed to solve the visual entailment problem with a relation interaction method. It models the relation between the premise and the hypothesis as an alignment matrix. Then it introduces a pooling operation to get feature vectors of a fixed size. Finally, it passes them through a fully-connected layer and a normalization layer to complete the classification. Experiments show that our alignment-based architecture reaches 72.45% accuracy on the SNLI-VE dataset, outperforming previous content-based models under the same settings.

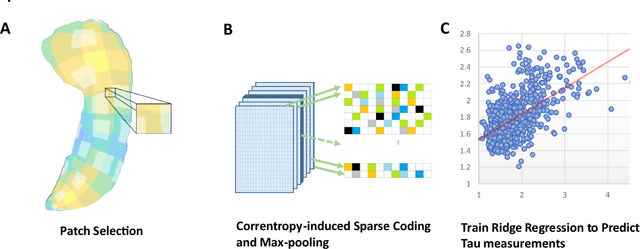

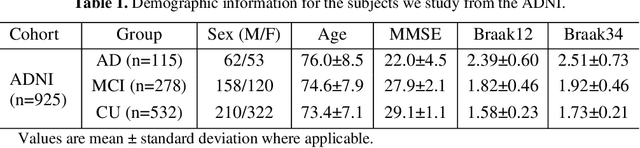

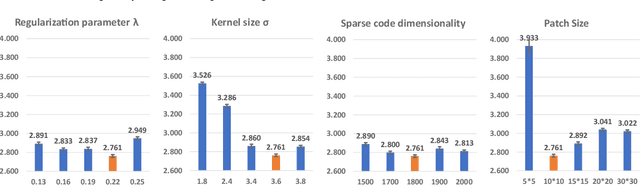

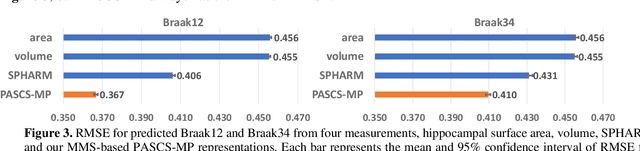

Predicting Tau Accumulation in Cerebral Cortex with Multivariate MRI Morphometry Measurements, Sparse Coding, and Correntropy

Oct 20, 2021

Abstract:Biomarker-assisted diagnosis and intervention in Alzheimer's disease (AD) may be the key to prevention breakthroughs. One of the hallmarks of AD is the accumulation of tau plaques in the human brain. However, current methods to detect tau pathology are either invasive (lumbar puncture) or quite costly and not widely available (tau PET). In our previous work, structural MRI-based hippocampal multivariate morphometry statistics (MMS) showed superior performance as an effective neurodegenerative biomarker for preclinical AD, and Patch Analysis-based Surface Correntropy-induced Sparse coding and max-pooling (PASCS-MP) showed an excellent ability to generate low-dimensional representations with strong statistical power for brain amyloid prediction. In this work, we apply this framework together with ridge regression models to predict tau deposition in the Braak12 and Braak34 brain regions separately. We evaluate our framework on 925 subjects from the Alzheimer's Disease Neuroimaging Initiative (ADNI). Each subject has one pair consisting of a PET image and an MRI scan collected at about the same time. Experimental results suggest that the representations from our MMS and PASCS-MP have stronger predictive power, and that their predicted Braak12 and Braak34 values are closer to the real values, compared with measures derived from other approaches such as hippocampal surface area and volume, and shape morphometry features based on spherical harmonics (SPHARM).

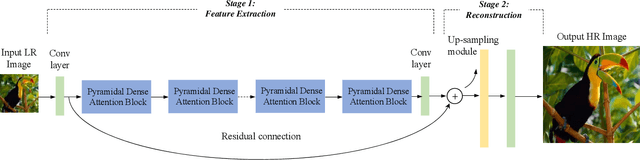

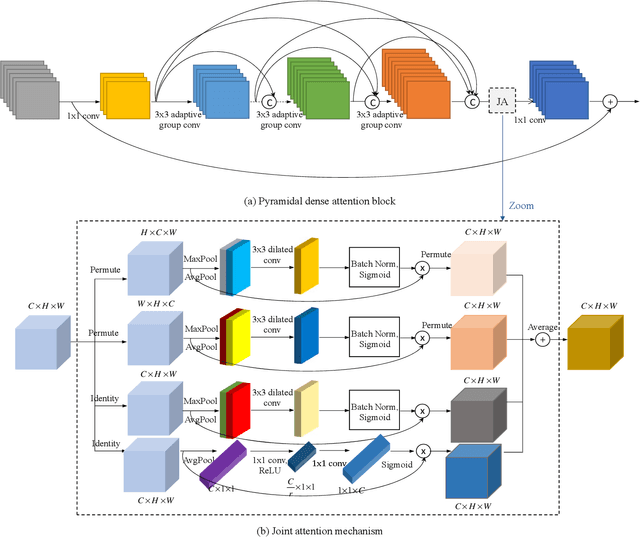

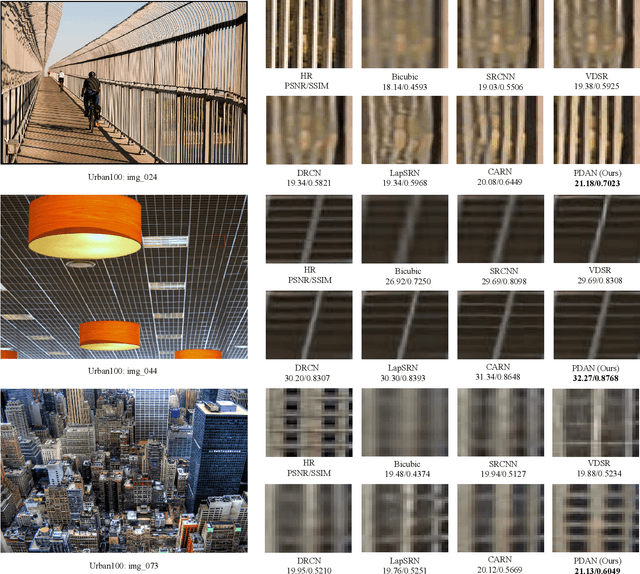

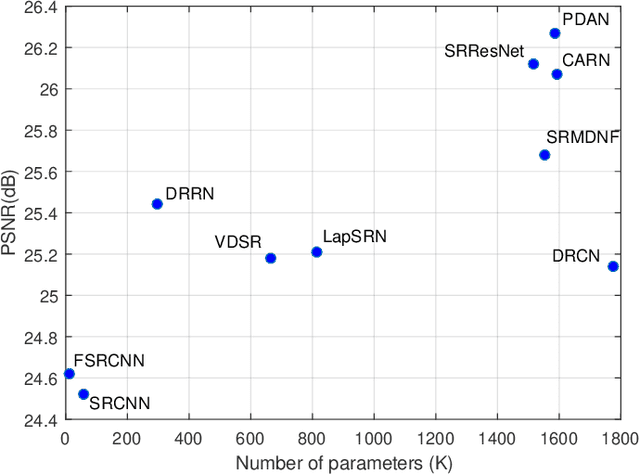

Pyramidal Dense Attention Networks for Lightweight Image Super-Resolution

Jun 13, 2021

Abstract:Recently, deep convolutional neural network methods have achieved excellent performance in image super-resolution (SR), but they cannot be easily applied to embedded devices due to their large memory cost. To solve this problem, we propose a pyramidal dense attention network (PDAN) for lightweight image super-resolution. In our method, the proposed pyramidal dense learning gradually increases the width of the densely connected layers inside a pyramidal dense block to extract deep features efficiently. Meanwhile, an adaptive group convolution, in which the number of groups grows linearly with the number of dense convolutional layers, is introduced to relieve the parameter explosion. In addition, we present a novel joint attention that efficiently captures cross-dimension interaction between the spatial dimensions and the channel dimension to provide rich discriminative feature representations. Extensive experimental results show that our method achieves superior performance in comparison with state-of-the-art lightweight SR methods.

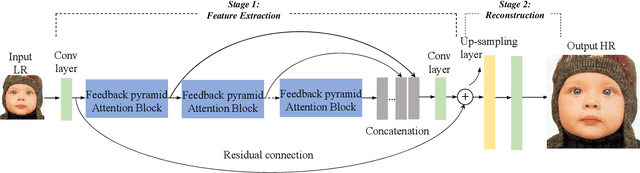

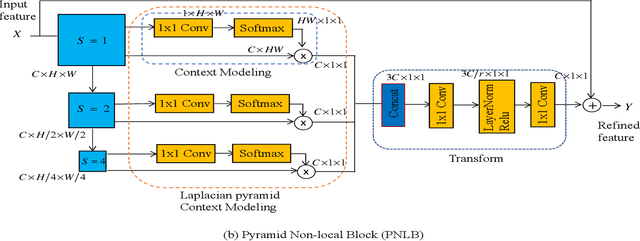

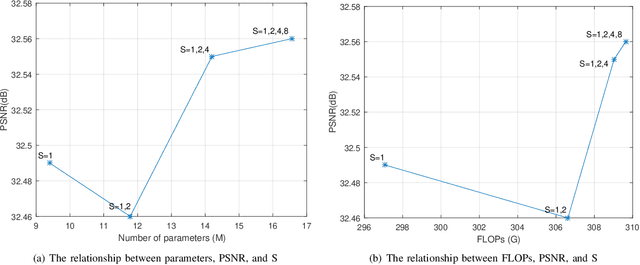

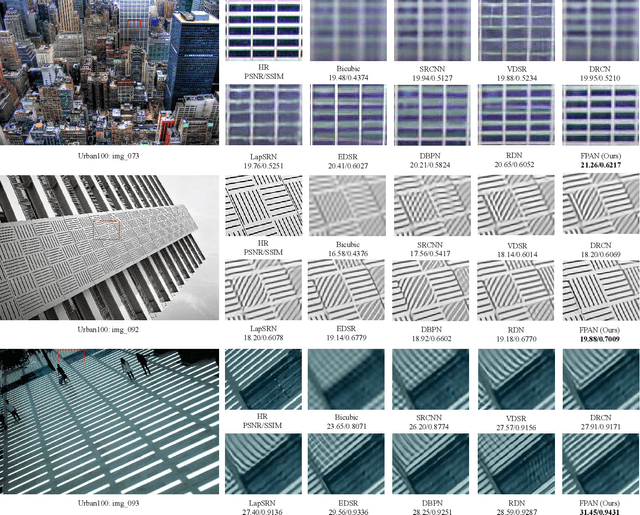

Feedback Pyramid Attention Networks for Single Image Super-Resolution

Jun 13, 2021

Abstract:Recently, convolutional neural network (CNN) based image super-resolution (SR) methods have achieved significant performance improvement. However, most CNN-based methods mainly focus on feed-forward architecture design and neglect to explore the feedback mechanism, which usually exists in the human visual system. In this paper, we propose feedback pyramid attention networks (FPAN) to fully exploit the mutual dependencies of features. Specifically, a novel feedback connection structure is developed to enhance low-level feature expression with high-level information. In our method, the output of each layer in the first stage is also used as the input of the corresponding layer in the next state to re-update the previous low-level filters. Moreover, we introduce a pyramid non-local structure to model global contextual information in different scales and improve the discriminative representation of the network. Extensive experimental results on various datasets demonstrate the superiority of our FPAN in comparison with the state-of-the-art SR methods.

A Comprehensive Survey on Image Dehazing Based on Deep Learning

Jun 07, 2021

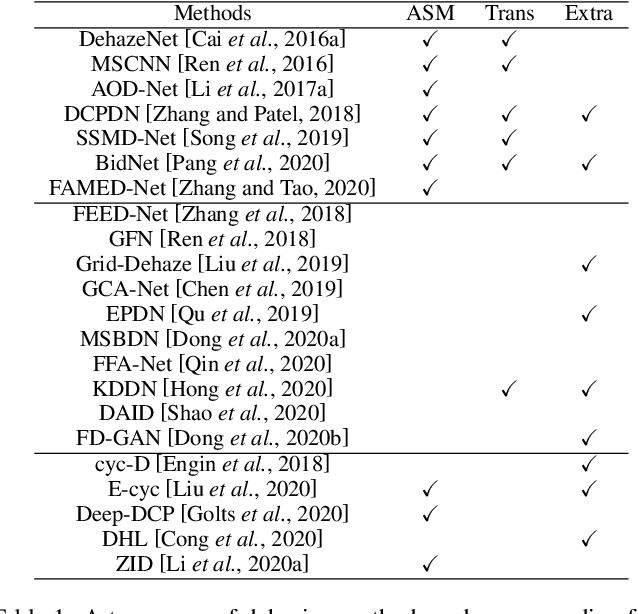

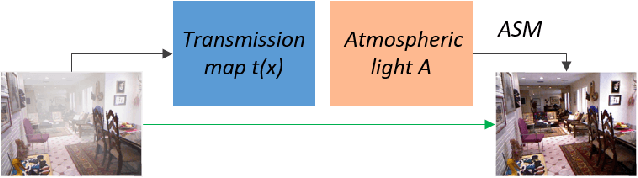

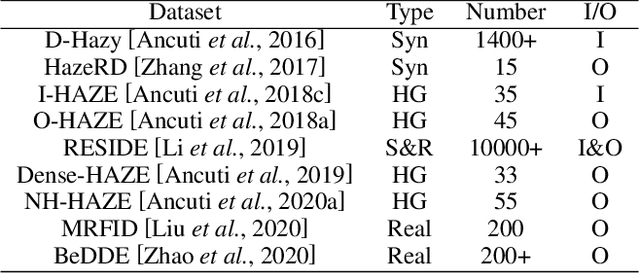

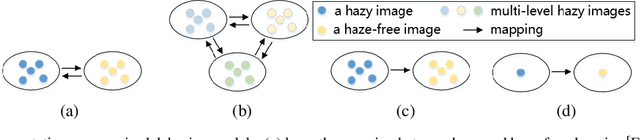

Abstract:The presence of haze significantly reduces the quality of images. Researchers have designed a variety of algorithms for image dehazing (ID) to restore the quality of hazy images. However, few studies summarize the deep learning (DL) based dehazing technologies. In this paper, we conduct a comprehensive survey of recently proposed dehazing methods. First, we summarize the commonly used datasets, loss functions, and evaluation metrics. Second, we group the existing research on ID into two major categories: supervised ID and unsupervised ID. The core ideas of various influential dehazing models are introduced. Finally, open issues for future research on ID are pointed out.

Learning Rates for Multi-task Regularization Networks

Apr 20, 2021

Abstract:Multi-task learning is an important trend in machine learning in the era of artificial intelligence and big data. Despite a large body of research on learning rate estimates for various single-task machine learning algorithms, there is little parallel work for multi-task learning. We present a mathematical analysis of the learning rate estimate of multi-task learning based on the theory of vector-valued reproducing kernel Hilbert spaces and matrix-valued reproducing kernels. For the typical multi-task regularization networks, an explicit learning rate dependent on both the number of sample data and the number of tasks is obtained. It reveals that the generalization ability of multi-task learning algorithms is indeed affected as the number of tasks increases.

Delving into Variance Transmission and Normalization: Shift of Average Gradient Makes the Network Collapse

Mar 22, 2021

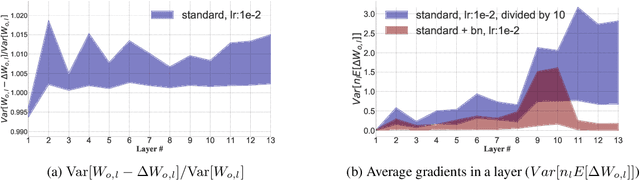

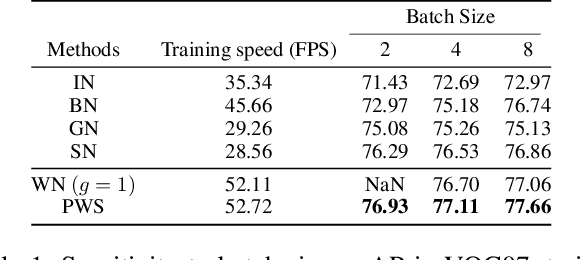

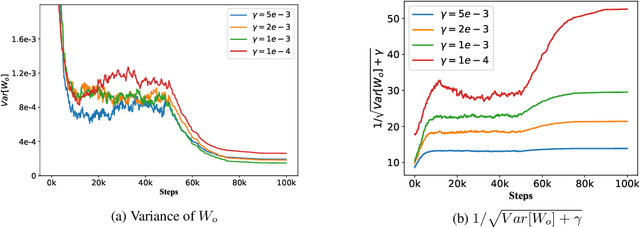

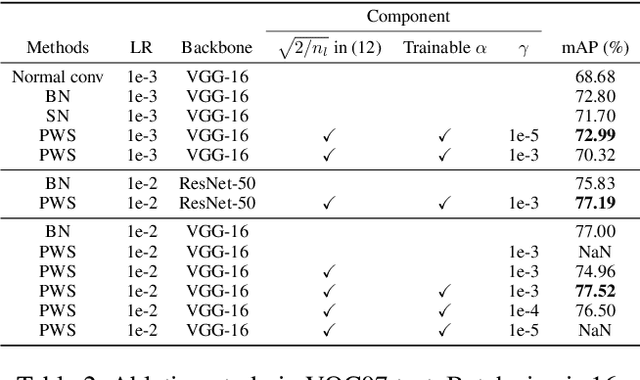

Abstract:Normalization operations are essential for state-of-the-art neural networks and enable us to train a network from scratch with a large learning rate (LR). We attempt to explain the real effect of Batch Normalization (BN) from the perspective of variance transmission by investigating the relationship between BN and Weights Normalization (WN). In this work, we demonstrate that the shift of the average gradient amplifies the variance of every convolutional (conv) layer. To solve the shift of the average gradient, we propose Parametric Weights Standardization (PWS), a fast module for conv filters that is robust to mini-batch size. PWS can provide the speed-up of BN. In addition, it requires less computation and does not change the output of a conv layer. PWS enables the network to converge fast without normalizing the outputs. This result strengthens the case for the shift of the average gradient and explains why BN works from the perspective of variance transmission. The code and appendix will be made available at https://github.com/lyxzzz/PWSConv.

Randomized Kernel Multi-view Discriminant Analysis

Apr 02, 2020

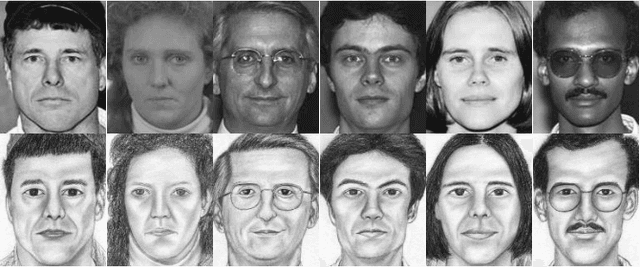

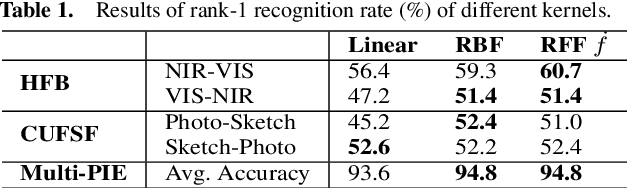

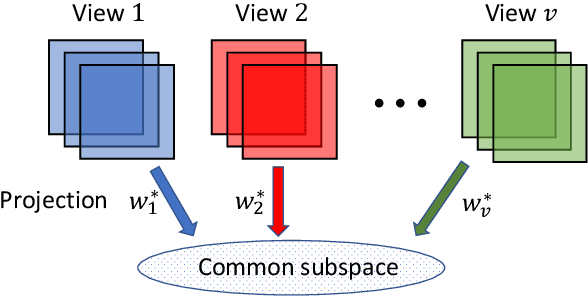

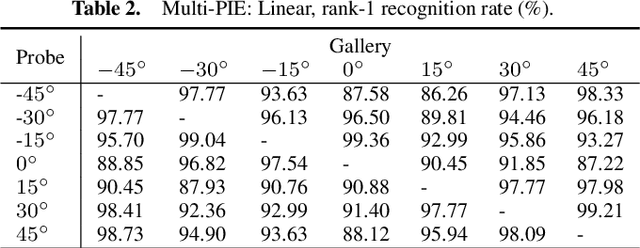

Abstract:In many artificial intelligence and computer vision systems, the same object can be observed at distinct viewpoints or by diverse sensors, which raises the challenges for recognizing objects from different, even heterogeneous views. Multi-view discriminant analysis (MvDA) is an effective multi-view subspace learning method, which finds a discriminant common subspace by jointly learning multiple view-specific linear projections for object recognition from multiple views, in a non-pairwise way. In this paper, we propose the kernel version of multi-view discriminant analysis, called kernel multi-view discriminant analysis (KMvDA). To overcome the well-known computational bottleneck of kernel methods, we also study the performance of using random Fourier features (RFF) to approximate Gaussian kernels in KMvDA, for large scale learning. Theoretical analysis on stability of this approximation is developed. We also conduct experiments on several popular multi-view datasets to illustrate the effectiveness of our proposed strategy.
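
As a small illustration of the approximation this abstract refers to, the sketch below uses the standard random Fourier feature construction for a Gaussian kernel k(x, y) = exp(-||x - y||^2 / (2 sigma^2)): frequencies are drawn from N(0, sigma^-2 I), phases from U[0, 2 pi], and the inner product of the resulting feature vectors approximates the kernel value. The function names, the test vectors, and the feature count are assumptions chosen for the example; this is not the KMvDA method itself, only the kernel approximation step in isolation.

```python
import math
import random


def gaussian_kernel(x, y, sigma):
    """Exact Gaussian kernel exp(-||x - y||^2 / (2 * sigma^2))."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


def make_rff_map(dim, num_features, sigma, seed=0):
    """Return a feature map z with z(x) . z(y) ~= gaussian_kernel(x, y, sigma)."""
    rng = random.Random(seed)
    # Frequencies w ~ N(0, sigma^-2 I); phases b ~ Uniform[0, 2*pi].
    freqs = [[rng.gauss(0.0, 1.0 / sigma) for _ in range(dim)]
             for _ in range(num_features)]
    phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(num_features)]
    scale = math.sqrt(2.0 / num_features)

    def feature_map(x):
        return [scale * math.cos(sum(wi * xi for wi, xi in zip(w, x)) + b)
                for w, b in zip(freqs, phases)]

    return feature_map


def dot(u, v):
    """Plain inner product of two equal-length lists."""
    return sum(a * b for a, b in zip(u, v))


if __name__ == "__main__":
    x = [0.5, -1.0, 0.3]
    y = [0.1, -0.4, 0.9]
    z = make_rff_map(dim=3, num_features=4000, sigma=1.0, seed=0)
    print("exact kernel :", gaussian_kernel(x, y, 1.0))
    print("RFF estimate :", dot(z(x), z(y)))
```

Because the features are explicit finite-dimensional vectors, a linear method applied to them avoids forming the full kernel matrix, which is the usual motivation for using this approximation in large-scale learning.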
