Jianxin Li

Beihang University

Curvature Graph Generative Adversarial Networks

Mar 03, 2022

Abstract: Generative adversarial networks (GANs) are widely used for generalized and robust learning on graph data. However, for non-Euclidean graph data, existing GAN-based graph representation methods generate negative samples by random walk or traversal in discrete space, which loses topological properties (e.g., hierarchy and circularity). Moreover, due to the topological heterogeneity (i.e., different densities across the graph structure) of graph data, they suffer from serious topological distortion problems. In this paper, we propose a novel Curvature Graph Generative Adversarial Networks method, named CurvGAN, which is the first GAN-based graph representation method on a Riemannian geometric manifold. To better preserve the topological properties, we approximate the discrete structure as a continuous Riemannian geometric manifold and generate negative samples efficiently from the wrapped normal distribution. To deal with the topological heterogeneity, we leverage the Ricci curvature for local structures with different topological properties, obtaining low-distortion representations. Extensive experiments show that CurvGAN consistently and significantly outperforms the state-of-the-art methods across multiple tasks and shows superior robustness and generalization.
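As a rough illustration of sampling from a wrapped normal distribution, the sketch below draws a Gaussian vector in the tangent space at the origin and wraps it onto a Poincaré ball with the exponential map. The choice of the Poincaré ball model, the origin as the mean, and the names `exp_map_origin` and `sample_wrapped_normal` are assumptions made for illustration; the abstract does not state which manifold model or parameterization CurvGAN uses.

```python
import math
import random


def exp_map_origin(v, c=1.0):
    """Map a tangent vector at the origin onto the Poincare ball of curvature -c.

    Assumed model: exp_0(v) = tanh(sqrt(c) * |v|) * v / (sqrt(c) * |v|).
    """
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        return [0.0] * len(v)
    scale = math.tanh(math.sqrt(c) * norm) / (math.sqrt(c) * norm)
    return [scale * x for x in v]


def sample_wrapped_normal(dim, sigma=1.0, c=1.0, rng=random):
    """Draw one sample: Gaussian in the tangent space, then wrap onto the ball."""
    v = [rng.gauss(0.0, sigma) for _ in range(dim)]
    return exp_map_origin(v, c)


if __name__ == "__main__":
    random.seed(0)
    for _ in range(3):
        print(sample_wrapped_normal(dim=2, sigma=0.5))
```

Every sample lies inside the ball of radius 1/sqrt(c), so candidate negative samples stay on the continuous manifold rather than being picked from discrete graph walks.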

Exploring Human Mobility for Multi-Pattern Passenger Prediction: A Graph Learning Framework

Feb 17, 2022

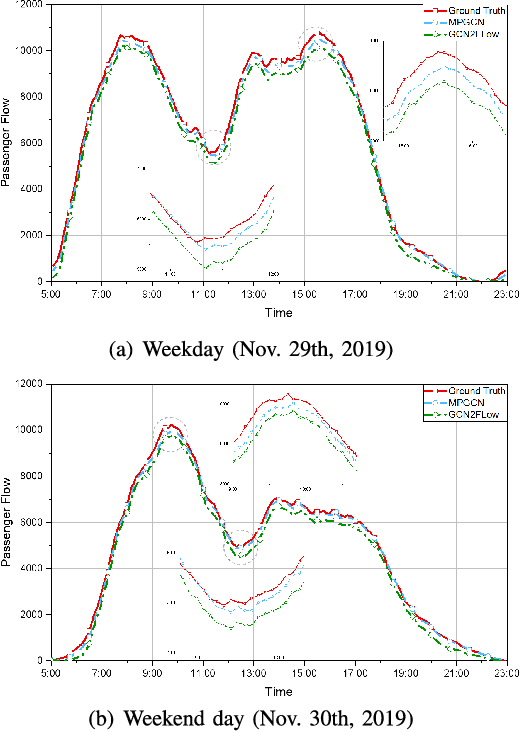

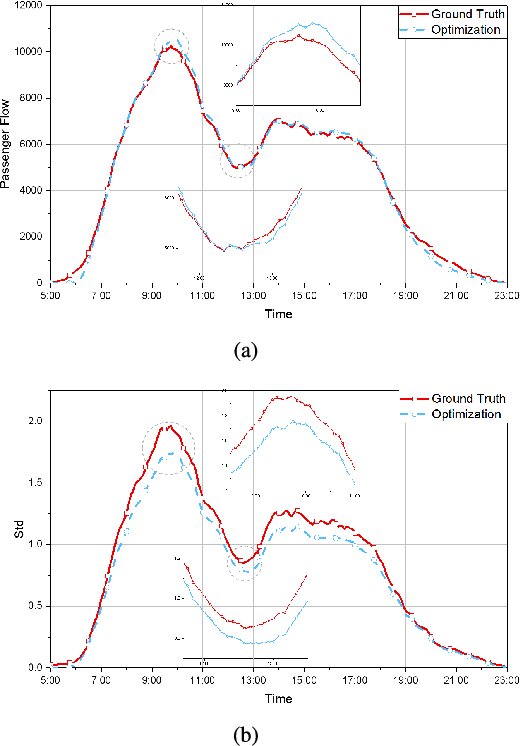

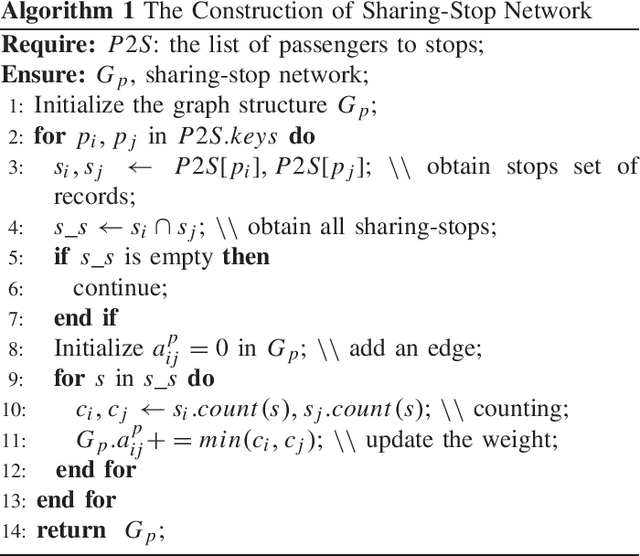

Abstract: Traffic flow prediction is an integral part of an intelligent transportation system and thus fundamental for various traffic-related applications. Buses are an indispensable mode of travel for urban residents, with fixed routes and schedules that lead to latent travel regularity. However, human mobility patterns, specifically the complex relationships between bus passengers, are deeply hidden in this fixed mobility mode. Although many models exist to predict traffic flow, human mobility patterns have not been well explored in this regard. To narrow this research gap and learn human mobility knowledge from these fixed travel behaviors, we propose a multi-pattern passenger flow prediction framework, MPGCN, based on Graph Convolutional Network (GCN). First, we construct a novel sharing-stop network to model relationships between passengers based on bus record data. Then, we employ GCN to extract features from the graph by learning useful topology information and introduce a deep clustering method to recognize mobility patterns hidden in bus passengers. Furthermore, to fully utilize spatio-temporal information, we propose GCN2Flow to predict passenger flow based on various mobility patterns. To the best of our knowledge, this paper is the first work to adopt a multi-pattern approach to predict bus passenger flow from graph learning. We design a case study for optimizing routes. Extensive experiments on a real-world bus dataset demonstrate that MPGCN has potential efficacy in passenger flow prediction and route optimization.

Bi-CLKT: Bi-Graph Contrastive Learning based Knowledge Tracing

Jan 22, 2022

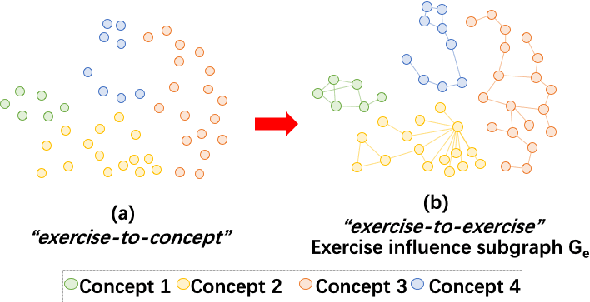

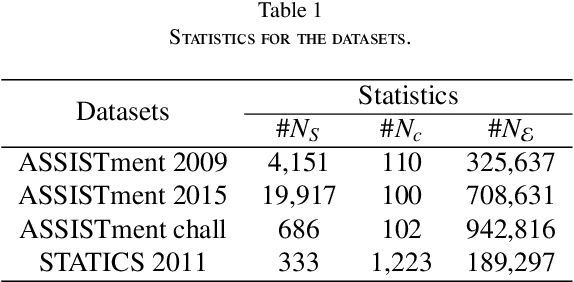

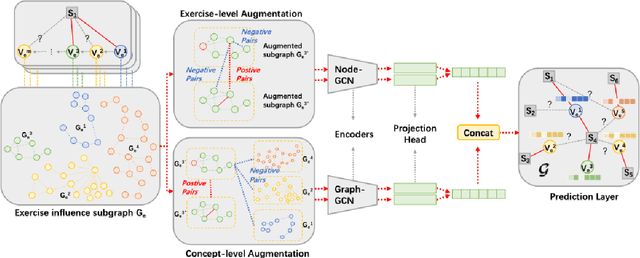

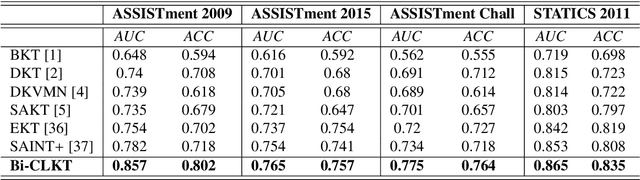

Abstract: The goal of Knowledge Tracing (KT) is to estimate how well students have mastered a concept based on their historical learning of related exercises. The benefit of knowledge tracing is that students' learning plans can be better organised and adjusted, and interventions can be made when necessary. With the recent rise of deep learning, Deep Knowledge Tracing (DKT) has utilised Recurrent Neural Networks (RNNs) to accomplish this task with some success. Other works have attempted to introduce Graph Neural Networks (GNNs) and redefine the task accordingly to achieve significant improvements. However, these efforts suffer from at least one of the following drawbacks: 1) they pay too much attention to details of the nodes rather than to high-level semantic information; 2) they struggle to effectively establish spatial associations and complex structures of the nodes; and 3) they represent either concepts or exercises only, without integrating them. Inspired by recent advances in self-supervised learning, we propose a Bi-Graph Contrastive Learning based Knowledge Tracing (Bi-CLKT) to address these limitations. Specifically, we design a two-layer contrastive learning scheme based on an "exercise-to-exercise" (E2E) relational subgraph. It involves node-level contrastive learning of subgraphs to obtain discriminative representations of exercises, and graph-level contrastive learning to obtain discriminative representations of concepts. Moreover, we design a joint contrastive loss to obtain better representations and hence better prediction performance. We also explore two variants, using RNNs and memory-augmented neural networks as the prediction layer, for comparison to obtain better representations of exercises and concepts respectively. Extensive experiments on four real-world datasets show that the proposed Bi-CLKT and its variants outperform other baseline models.

Temporal Knowledge Graph Completion: A Survey

Jan 16, 2022

Abstract: Knowledge graph completion (KGC) can predict missing links and is crucial for real-world knowledge graphs, which widely suffer from incompleteness. KGC methods assume a knowledge graph is static, but that may lead to inaccurate prediction results because many facts in the knowledge graphs change over time. Recently, emerging methods have shown improved predictive results by further incorporating the timestamps of facts; namely, temporal knowledge graph completion (TKGC). With this temporal information, TKGC methods can learn the dynamic evolution of the knowledge graph that KGC methods fail to capture. In this paper, for the first time, we summarize the recent advances in TKGC research. First, we detail the background of TKGC, including the problem definition, benchmark datasets, and evaluation metrics. Then, we summarize existing TKGC methods based on how timestamps of facts are used to capture the temporal dynamics. Finally, we conclude the paper and present future research directions of TKGC.

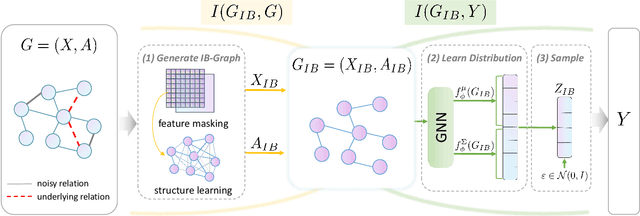

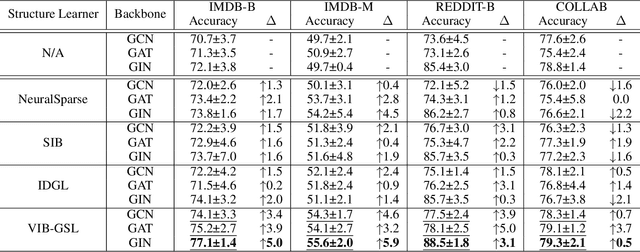

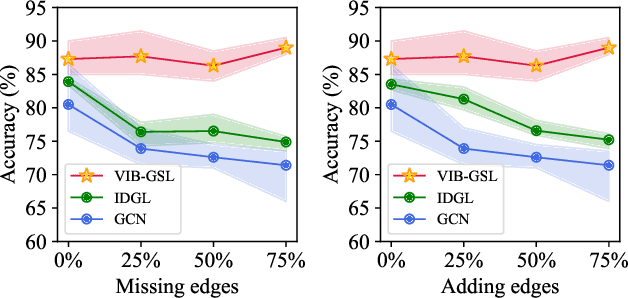

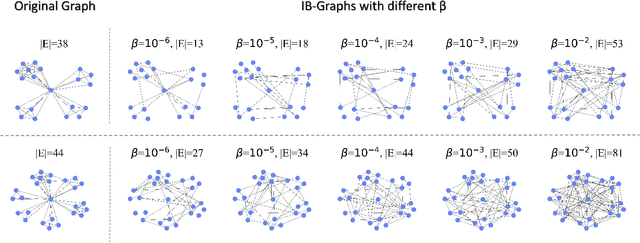

Graph Structure Learning with Variational Information Bottleneck

Dec 16, 2021

Abstract: Graph Neural Networks (GNNs) have shown promising results on a broad spectrum of applications. Most empirical studies of GNNs directly take the observed graph as input, assuming the observed structure perfectly depicts the accurate and complete relations between nodes. However, graphs in the real world are inevitably noisy or incomplete, which can degrade the quality of graph representations. In this work, we propose a novel Variational Information Bottleneck guided Graph Structure Learning framework, namely VIB-GSL, from the perspective of information theory. VIB-GSL advances the Information Bottleneck (IB) principle for graph structure learning, providing a more elegant and universal framework for mining underlying task-relevant relations. VIB-GSL learns an informative and compressive graph structure to distill the actionable information for specific downstream tasks. VIB-GSL deduces a variational approximation for irregular graph data to form a tractable IB objective function, which facilitates training stability. Extensive experimental results demonstrate the superior effectiveness and robustness of VIB-GSL.

ACE-HGNN: Adaptive Curvature Exploration Hyperbolic Graph Neural Network

Oct 15, 2021

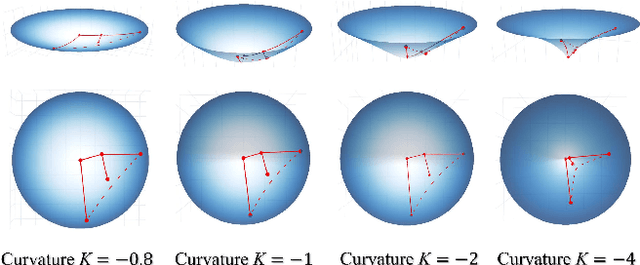

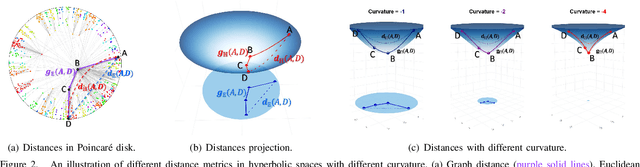

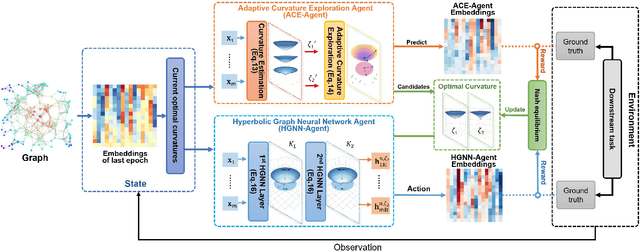

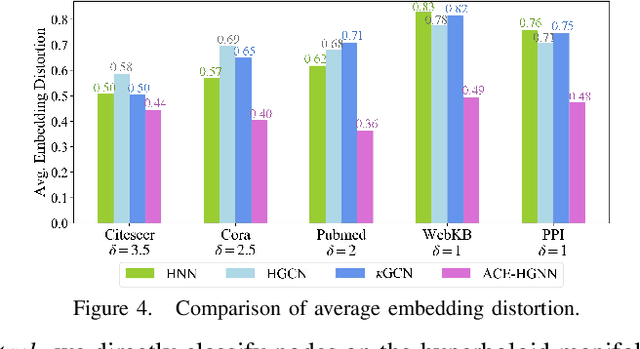

Abstract: Graph Neural Networks (GNNs) have been widely studied in various graph data mining tasks. Most existing GNNs embed graph data into Euclidean space and thus are less effective at capturing the ubiquitous hierarchical structures in real-world networks. Hyperbolic Graph Neural Networks (HGNNs) extend GNNs to hyperbolic space and thus are more effective at capturing the hierarchical structures of graphs in node representation learning. In hyperbolic geometry, the graph hierarchical structure can be reflected by the curvatures of the hyperbolic space, and different curvatures can model different hierarchical structures of a graph. However, most existing HGNNs manually set the curvature to a fixed value for simplicity, which leads to suboptimal graph learning performance due to the complex and diverse hierarchical structures of the graphs. To resolve this problem, we propose an Adaptive Curvature Exploration Hyperbolic Graph Neural Network named ACE-HGNN to adaptively learn the optimal curvature according to the input graph and downstream tasks. Specifically, ACE-HGNN exploits a multi-agent reinforcement learning framework and contains two agents, ACE-Agent and HGNN-Agent, for learning the curvature and node representations, respectively. The two agents are updated collaboratively by a Nash Q-learning algorithm, seeking the optimal hyperbolic space indexed by the curvature. Extensive experiments on multiple real-world graph datasets demonstrate a significant and consistent performance improvement in model quality with competitive performance and good generalization ability.

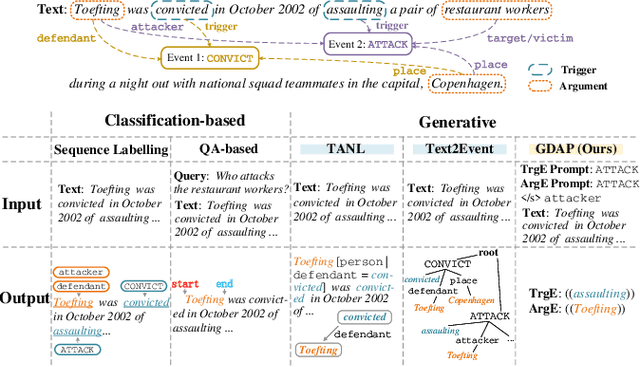

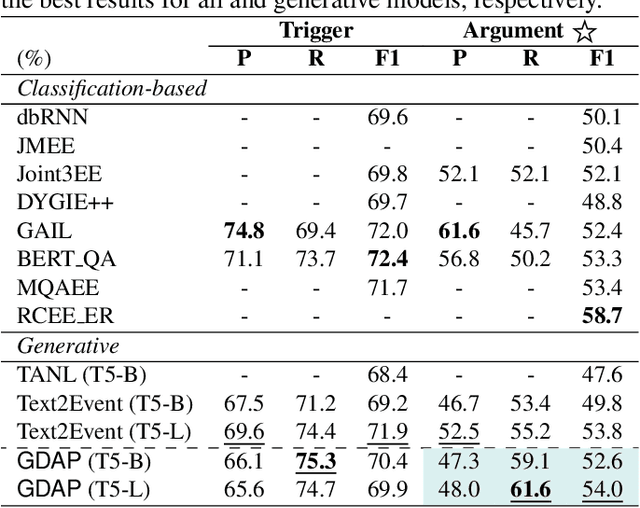

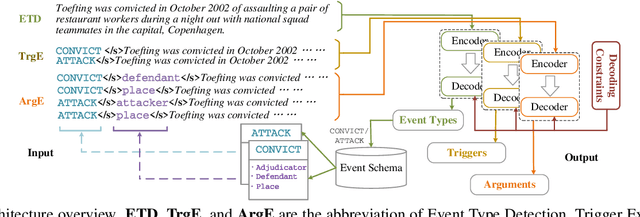

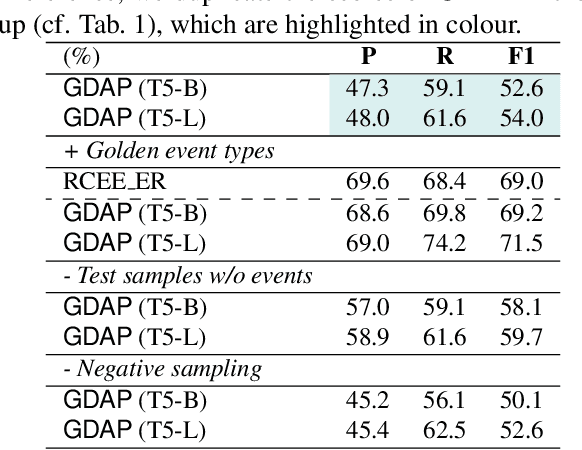

Generating Disentangled Arguments with Prompts: A Simple Event Extraction Framework that Works

Oct 09, 2021

Abstract: Event Extraction bridges the gap between text and event signals. Based on the assumption of trigger-argument dependency, existing approaches have achieved state-of-the-art performance with expert-designed templates or complicated decoding constraints. In this paper, for the first time we introduce the prompt-based learning strategy to the domain of Event Extraction, which empowers the automatic exploitation of label semantics on both input and output sides. To validate the effectiveness of the proposed generative method, we conduct extensive experiments with 11 diverse baselines. Empirical results show that, in terms of F1 score on Argument Extraction, our simple architecture is stronger than any other generative counterpart and even competitive with algorithms that require template engineering. Regarding the measure of recall, it sets new overall records for both Argument and Trigger Extraction. We hereby recommend this framework to the community, with the code publicly available at https://git.io/GDAP.

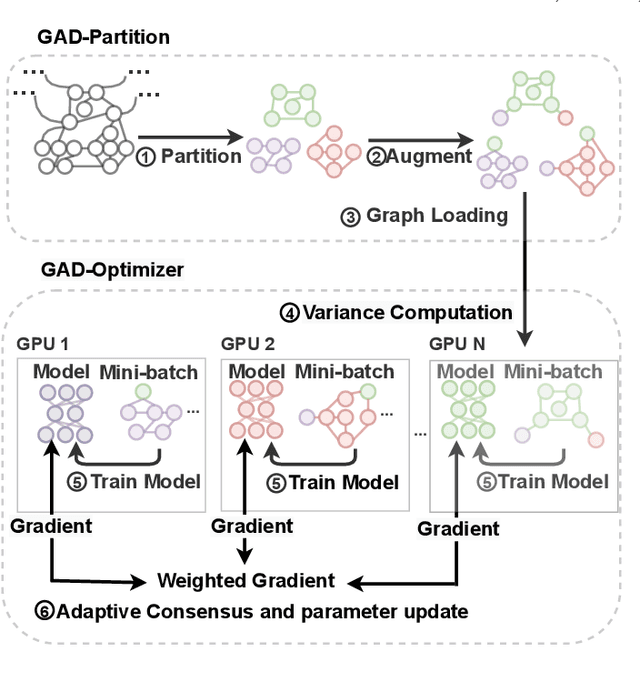

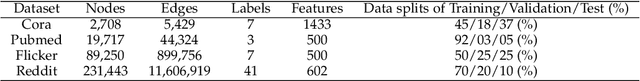

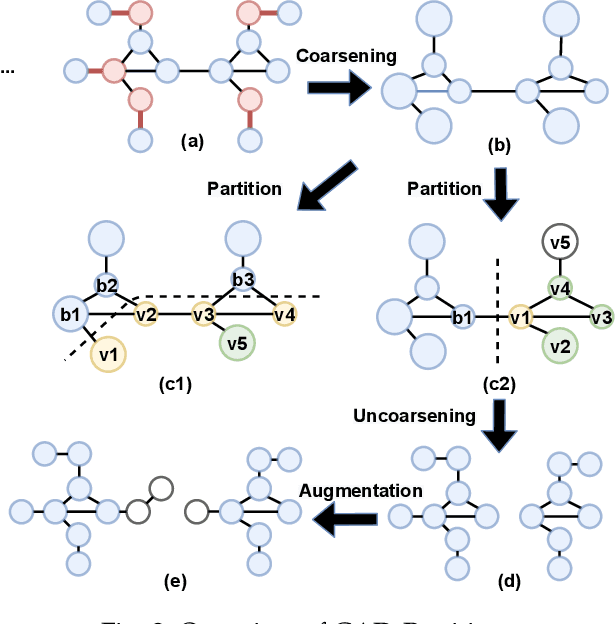

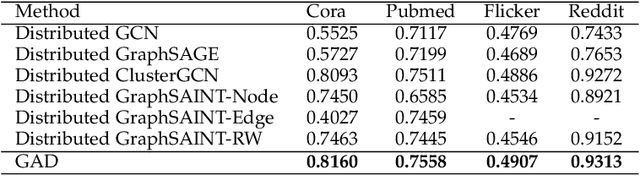

Distributed Optimization of Graph Convolutional Network using Subgraph Variance

Oct 06, 2021

Abstract: In recent years, Graph Convolutional Networks (GCNs) have achieved great success in learning from graph-structured data. With the growing number of graph nodes and edges, GCN training on a single processor cannot meet the demand for time and memory, which has led to a boom in research on distributed GCN training frameworks. However, existing distributed GCN training frameworks incur enormous communication costs between processors, since information about a multitude of dependent nodes and edges must be collected and transmitted from other processors for GCN training. To address this issue, we propose a Graph Augmentation based Distributed GCN framework (GAD). In particular, GAD has two main components, GAD-Partition and GAD-Optimizer. We first propose a graph augmentation-based partition (GAD-Partition) that divides the original graph into augmented subgraphs to reduce communication by selecting and storing as few significant nodes of other processors as possible while guaranteeing the accuracy of the training. In addition, we further design a subgraph variance-based importance calculation formula and propose a novel weighted global consensus method, collectively referred to as GAD-Optimizer. This optimizer adaptively reduces the importance of subgraphs with large variances in order to reduce the effect of the extra variance introduced by GAD-Partition on distributed GCN training. Extensive experiments on four large-scale real-world datasets demonstrate that our framework significantly reduces the communication overhead (50%), improves the convergence speed (2X) of distributed GCN training, and yields a slight gain in accuracy (0.45%) based on minimal redundancy compared to the state-of-the-art methods.
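The idea of down-weighting high-variance subgraphs during global consensus can be sketched as follows. The abstract does not give the importance formula, so the inverse-variance weighting used here, along with the names `consensus_weights` and `weighted_consensus`, is an assumption chosen only to illustrate the general behavior, not the GAD-Optimizer itself.

```python
def consensus_weights(variances, eps=1e-8):
    """Inverse-variance weights normalized to sum to 1 (an assumed scheme)."""
    inverse = [1.0 / (v + eps) for v in variances]
    total = sum(inverse)
    return [w / total for w in inverse]


def weighted_consensus(local_params, variances):
    """Combine per-subgraph parameter vectors into one global vector."""
    weights = consensus_weights(variances)
    dim = len(local_params[0])
    return [
        sum(w * params[i] for w, params in zip(weights, local_params))
        for i in range(dim)
    ]


if __name__ == "__main__":
    # Two hypothetical workers; the second has three times the variance.
    params = [[0.0, 1.0], [4.0, 5.0]]
    print(weighted_consensus(params, variances=[1.0, 3.0]))
```

With these weights, the worker whose subgraph has the larger variance contributes less to the global parameters, which matches the qualitative behavior described in the abstract.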

Representation Learning for Short Text Clustering

Sep 21, 2021

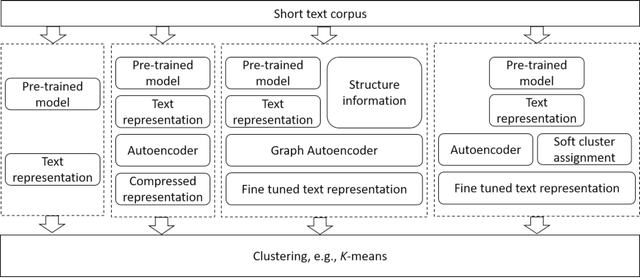

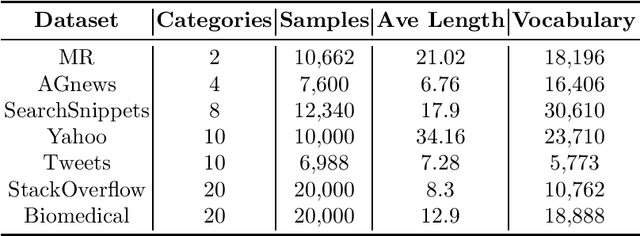

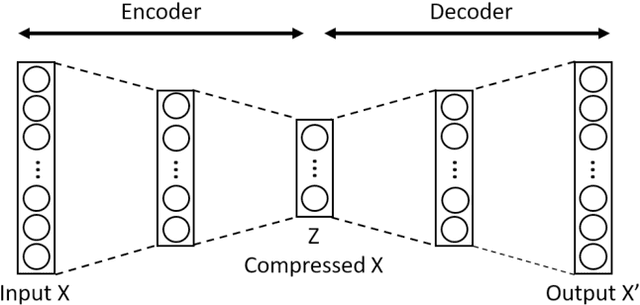

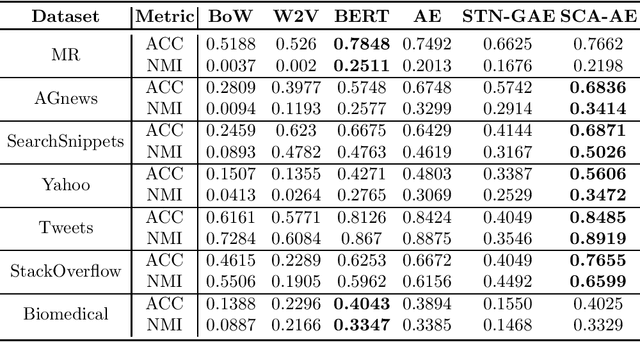

Abstract: Effective representation learning is critical for short text clustering due to the sparse, high-dimensional and noisy attributes of short text corpora. Existing pre-trained models (e.g., Word2vec and BERT) have greatly improved the expressiveness of short text representations with more condensed, low-dimensional and continuous features compared to the traditional Bag-of-Words (BoW) model. However, these models are trained for general purposes and thus are suboptimal for the short text clustering task. In this paper, we propose two methods to exploit the unsupervised autoencoder (AE) framework to further tune the short text representations based on these pre-trained text models for optimal clustering performance. In our first method, Structural Text Network Graph Autoencoder (STN-GAE), we exploit the structural text information among the corpus by constructing a text network, and then adopt a graph convolutional network as the encoder to fuse the structural features with the pre-trained text features for text representation learning. In our second method, Soft Cluster Assignment Autoencoder (SCA-AE), we adopt an extra soft cluster assignment constraint on the latent space of the autoencoder to encourage the learned text representations to be more clustering-friendly. We tested the two methods on seven popular short text datasets, and the experimental results show that when only using the pre-trained model for short text clustering, BERT performs better than BoW and Word2vec. However, once the pre-trained representations are further tuned, a proposed method such as SCA-AE can greatly increase the clustering performance, and the accuracy improvement compared to using BERT alone can reach as much as 14%.

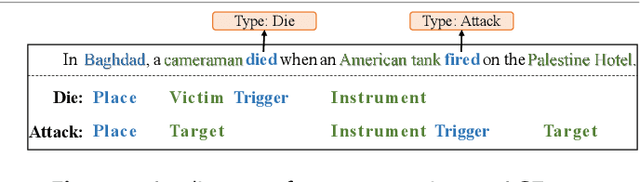

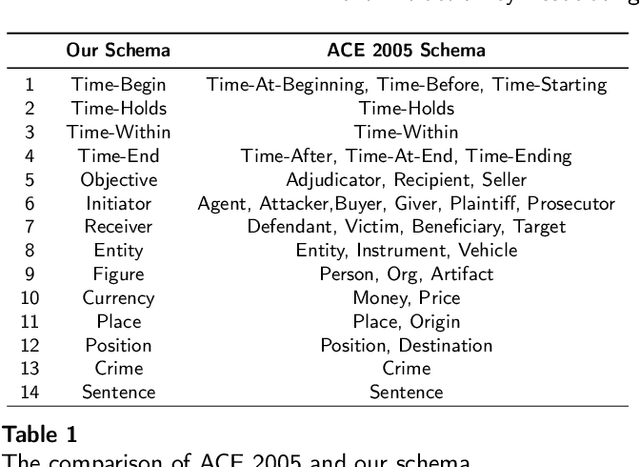

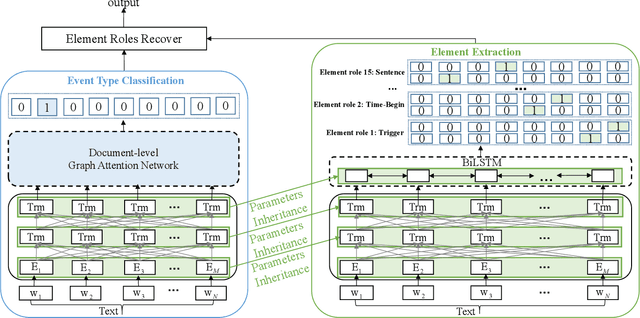

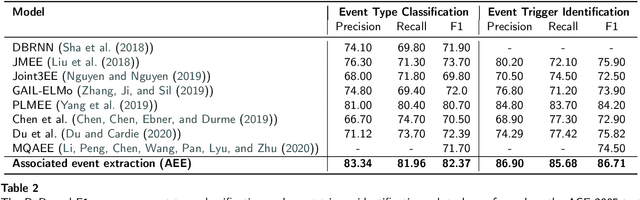

Event Extraction by Associating Event Types and Argument Roles

Aug 23, 2021

Abstract: Event extraction (EE), which acquires structural event knowledge from texts, can be divided into two sub-tasks: event type classification and element extraction (namely identifying triggers and arguments under different role patterns). As different event types have distinct extraction schemas (i.e., role patterns), previous work on EE usually follows an isolated learning paradigm, performing element extraction independently for different event types. It ignores meaningful associations among event types and argument roles, leading to relatively poor performance for less frequent types/roles. This paper proposes a novel neural association framework for the EE task. Given a document, it first performs type classification by constructing a document-level graph to associate sentence nodes of different types and adopting a graph attention network to learn sentence embeddings. Then, element extraction is achieved by building a universal schema of argument roles, with a parameter inheritance mechanism to enhance role preference for extracted elements. As such, our model takes into account type and role associations during EE, enabling implicit information sharing among them. Experimental results show that our approach consistently outperforms most state-of-the-art EE methods in both sub-tasks. In particular, for types/roles with less training data, the performance is superior to the existing methods.
