Ji Wang

Verifying Safety of Neural Networks from Topological Perspectives

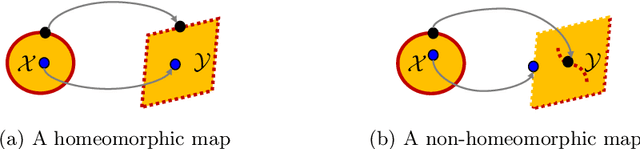

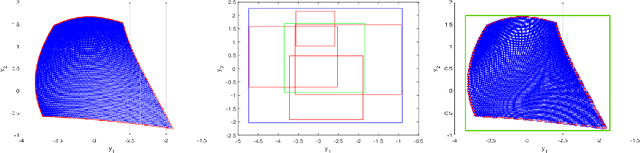

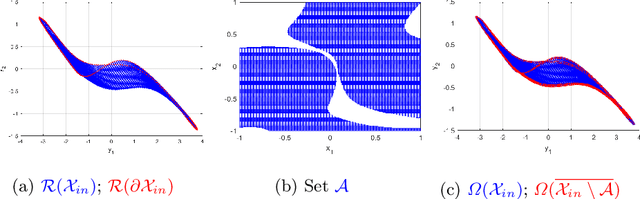

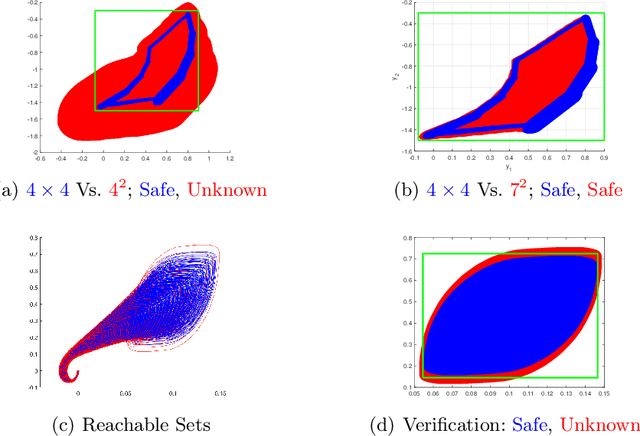

Jun 27, 2023

Abstract:Neural networks (NNs) are increasingly applied in safety-critical systems such as autonomous vehicles. However, they are fragile and are often ill-behaved. Consequently, their behaviors should undergo rigorous guarantees before deployment in practice. In this paper, we propose a set-boundary reachability method to investigate the safety verification problem of NNs from a topological perspective. Given an NN with an input set and a safe set, the safety verification problem is to determine whether all outputs of the NN resulting from the input set fall within the safe set. In our method, the homeomorphism property and the open map property of NNs are mainly exploited, which establish rigorous guarantees between the boundaries of the input set and the boundaries of the output set. The exploitation of these two properties facilitates reachability computations via extracting subsets of the input set rather than the entire input set, thus controlling the wrapping effect in reachability analysis and facilitating the reduction of computation burdens for safety verification. The homeomorphism property exists in some widely used NNs such as invertible residual networks (i-ResNets) and Neural ordinary differential equations (Neural ODEs), and the open map is a less strict property and easier to satisfy compared with the homeomorphism property. For NNs establishing either of these properties, our set-boundary reachability method only needs to perform reachability analysis on the boundary of the input set. Moreover, for NNs that do not feature these properties with respect to the input set, we explore subsets of the input set for establishing the local homeomorphism property and then abandon these subsets for reachability computations. Finally, some examples demonstrate the performance of the proposed method.

Repairing Deep Neural Networks Based on Behavior Imitation

May 05, 2023

Abstract:The increasing use of deep neural networks (DNNs) in safety-critical systems has raised concerns about their potential for exhibiting ill-behaviors. While DNN verification and testing provide post hoc conclusions regarding unexpected behaviors, they do not prevent the erroneous behaviors from occurring. To address this issue, DNN repair/patch aims to eliminate unexpected predictions generated by defective DNNs. Two typical DNN repair paradigms are retraining and fine-tuning. However, existing methods focus on the high-level abstract interpretation or inference of state spaces, ignoring the underlying neurons' outputs. This renders patch processes computationally prohibitive and limited, to a great extent, to piecewise linear (PWL) activation functions. To address these shortcomings, we propose a behavior-imitation based repair framework, BIRDNN, which integrates the two repair paradigms for the first time. BIRDNN corrects incorrect predictions of negative samples by imitating the closest expected behaviors of positive samples during the retraining repair procedure. For the fine-tuning repair process, BIRDNN analyzes the behavior differences of neurons on positive and negative samples to identify the neurons most responsible for the erroneous behaviors. To tackle more challenging domain-wise repair problems (DRPs), we synthesize BIRDNN with a domain behavior characterization technique to repair buggy DNNs in a probably approximated correct style. We also implement a prototype tool based on BIRDNN and evaluate it on ACAS Xu DNNs. Our experimental results show that BIRDNN can successfully repair buggy DNNs with significantly higher efficiency than state-of-the-art repair tools. Additionally, BIRDNN is highly compatible with different activation functions.

AI Empowered Net-RCA for 6G

Dec 05, 2022

Abstract:6G is envisioned to offer higher data rates, improved reliability, ubiquitous AI services, and support for a massive scale of connected devices. As a consequence, 6G will be much more complex than its predecessors. The growth of the system scale and complexity, as well as the coexistence with legacy networks and the diversified service requirements, will inevitably incur huge maintenance costs and efforts for future 6G networks. Network Root Cause Analysis (Net-RCA) plays a critical role in identifying root causes of network faults. In this article, we first give an introduction to the envisioned 6G networks. Next, we discuss the challenges and potential solutions of 6G network operation and management, and comprehensively survey existing RCA methods. Then we propose an artificial intelligence (AI)-empowered Net-RCA framework for 6G. Performance comparisons on both synthetic and real-world network data are carried out to demonstrate that the proposed method outperforms the existing method considerably.

Scalable Spectral Clustering with Group Fairness Constraints

Oct 28, 2022

Abstract:There are synergies of research interests and industrial efforts in modeling fairness and correcting algorithmic bias in machine learning. In this paper, we present a scalable algorithm for spectral clustering (SC) with group fairness constraints. Group fairness is also known as statistical parity, where in each cluster, each protected group is represented with the same proportion as in the entirety. While the FairSC algorithm (Kleindessner et al., 2019) is able to find a fairer clustering, it is compromised by high costs due to the kernels of computing nullspaces and the square roots of dense matrices explicitly. We present a new formulation of the underlying spectral computation by incorporating nullspace projection and Hotelling's deflation such that the resulting algorithm, called s-FairSC, only involves sparse matrix-vector products and is able to fully exploit the sparsity of the fair SC model. The experimental results on the modified stochastic block model demonstrate that s-FairSC is comparable with FairSC in recovering fair clustering. Meanwhile, it is sped up by a factor of 12 for moderate model sizes. s-FairSC is further demonstrated to be scalable in the sense that the computational costs of s-FairSC only increase marginally compared to the SC without fairness constraints.
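The statistical parity notion in this abstract can be made concrete with a small check. The following is a minimal sketch, not part of s-FairSC or FairSC: the function names `group_proportions` and `parity_gap` and the toy labels are assumptions introduced only for illustration. It measures how far each protected group's share inside any cluster deviates from its share in the whole data set.

```python
from collections import Counter


def group_proportions(groups):
    """Return {group: fraction of items carrying that group label}."""
    counts = Counter(groups)
    total = len(groups)
    return {g: c / total for g, c in counts.items()}


def parity_gap(clusters, groups):
    """Largest absolute deviation between a group's share inside any
    cluster and its share overall. 0.0 means exact statistical parity."""
    overall = group_proportions(groups)
    gap = 0.0
    for c in set(clusters):
        members = [g for cl, g in zip(clusters, groups) if cl == c]
        inside = group_proportions(members)
        for g, share in overall.items():
            gap = max(gap, abs(inside.get(g, 0.0) - share))
    return gap


# Hypothetical toy data: two protected groups of equal size.
groups = ["a", "a", "b", "b", "a", "a", "b", "b"]
fair_clusters = [0, 0, 0, 0, 1, 1, 1, 1]    # each cluster is half "a", half "b"
unfair_clusters = [0, 0, 1, 1, 0, 0, 1, 1]  # clusters coincide with the groups

print(parity_gap(fair_clusters, groups))    # 0.0
print(parity_gap(unfair_clusters, groups))  # 0.5
```

A gap of zero corresponds to the group fairness constraint being met exactly; the spectral algorithms described in the abstract enforce this constraint inside the clustering computation rather than checking it afterwards.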

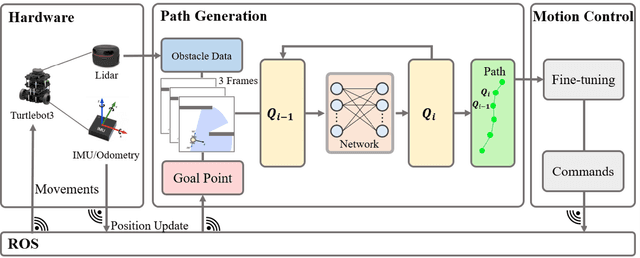

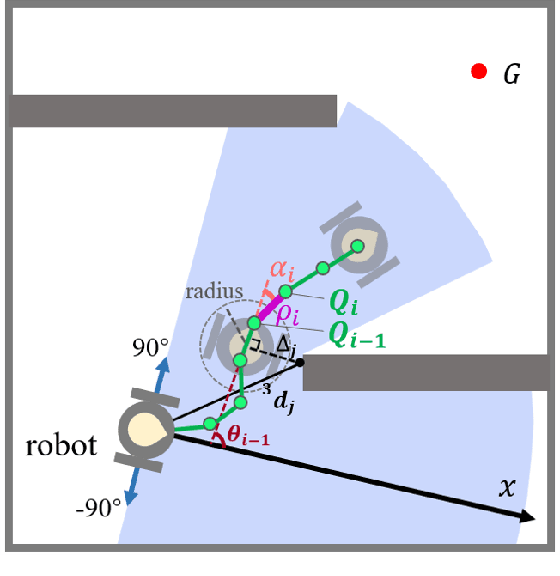

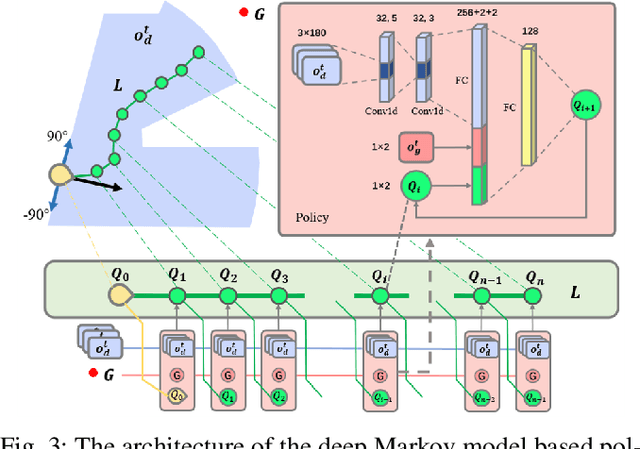

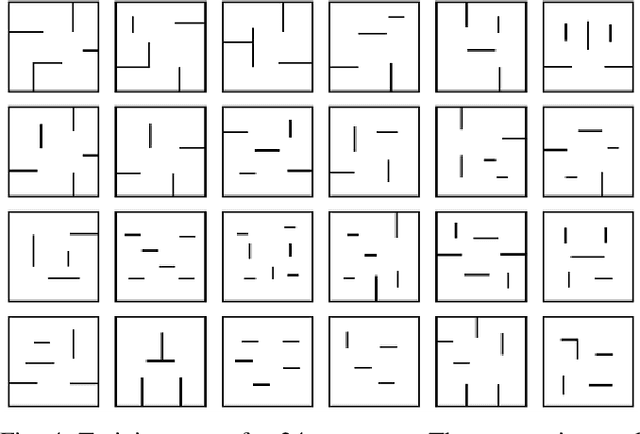

Robot Navigation with Reinforcement Learned Path Generation and Fine-Tuned Motion Control

Oct 19, 2022

Abstract:In this paper, we propose a novel reinforcement learning (RL) based path generation (RL-PG) approach for mobile robot navigation without prior exploration of an unknown environment. Multiple predictive path points are dynamically generated by a deep Markov model, optimized with an RL approach, for the robot to track. To ensure safety when tracking the predictive points, the robot's motion is fine-tuned by a motion fine-tuning module. Such an approach, using the deep Markov model with an RL algorithm for planning, focuses on the relationship between adjacent path points. Our analysis shows that the proposed approach is more effective and achieves a higher success rate than the RL-based approach DWA-RL and the traditional navigation approach APF. We deploy our model on both simulation and physical platforms and demonstrate that it performs robot navigation effectively and safely.

Safety Verification for Neural Networks Based on Set-boundary Analysis

Oct 09, 2022

Abstract:Neural networks (NNs) are increasingly applied in safety-critical systems such as autonomous vehicles. However, they are fragile and are often ill-behaved. Consequently, their behaviors should undergo rigorous guarantees before deployment in practice. In this paper we propose a set-boundary reachability method to investigate the safety verification problem of NNs from a topological perspective. Given an NN with an input set and a safe set, the safety verification problem is to determine whether all outputs of the NN resulting from the input set fall within the safe set. In our method, the homeomorphism property of NNs is mainly exploited, which establishes a relationship mapping boundaries to boundaries. The exploitation of this property facilitates reachability computations via extracting subsets of the input set rather than the entire input set, thus controlling the wrapping effect in reachability analysis and facilitating the reduction of computation burdens for safety verification. The homeomorphism property exists in some widely used NNs such as invertible NNs. Notable representations are invertible residual networks (i-ResNets) and Neural ordinary differential equations (Neural ODEs). For these NNs, our set-boundary reachability method only needs to perform reachability analysis on the boundary of the input set. For NNs which do not feature this property with respect to the input set, we explore subsets of the input set for establishing the local homeomorphism property, and then abandon these subsets for reachability computations. Finally, some examples demonstrate the performance of the proposed method.
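The boundary-to-boundary idea can be illustrated numerically. The sketch below is not the paper's reachability method and is not a sound verifier, since it only samples points. It assumes a hypothetical 2-D residual map `f(x) = x + 0.5 * tanh(W x)` whose residual branch has Lipschitz constant below 1, which makes it invertible in the manner of an i-ResNet block. It then checks that the images of interior points stay inside the bounding box of the images of boundary points.

```python
import numpy as np

# Hypothetical 2-D residual map f(x) = x + 0.5 * tanh(W x).
# W is a rotation (spectral norm 1), so the residual branch has Lipschitz
# constant at most 0.5 < 1, which makes f invertible and a homeomorphism.
W = np.array([[0.6, -0.8],
              [0.8,  0.6]])


def f(points):
    """Apply the residual map to an (N, 2) array of points."""
    return points + 0.5 * np.tanh(points @ W.T)


def box_boundary(lo, hi, per_edge=201):
    """Sample the four edges of the square [lo, hi] x [lo, hi]."""
    t = np.linspace(lo, hi, per_edge)
    lo_col = np.full_like(t, lo)
    hi_col = np.full_like(t, hi)
    edges = [
        np.column_stack([t, lo_col]),  # bottom edge
        np.column_stack([t, hi_col]),  # top edge
        np.column_stack([lo_col, t]),  # left edge
        np.column_stack([hi_col, t]),  # right edge
    ]
    return np.vstack(edges)


def interior_grid(lo, hi, per_axis=21):
    """Regular grid of points inside the square [lo, hi] x [lo, hi]."""
    t = np.linspace(lo, hi, per_axis)
    xx, yy = np.meshgrid(t, t)
    return np.column_stack([xx.ravel(), yy.ravel()])


# Input set: the square [-1, 1]^2. Interior samples are taken from [-0.9, 0.9]^2.
boundary_out = f(box_boundary(-1.0, 1.0))
interior_out = f(interior_grid(-0.9, 0.9))

# Bounding box of the image of the sampled input boundary.
bbox_lo = boundary_out.min(axis=0)
bbox_hi = boundary_out.max(axis=0)

enclosed = bool(np.all((interior_out >= bbox_lo) & (interior_out <= bbox_hi)))
print("boundary-image bounding box:", bbox_lo, bbox_hi)
print("interior images enclosed:", enclosed)
```

A real verifier would replace the point samples with sound set-based over-approximations of the boundary pieces; the sketch only shows why analysing the boundary can be enough when the network is a homeomorphism.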

On the Properties of Kullback-Leibler Divergence Between Gaussians

Feb 24, 2021

Abstract:Kullback-Leibler (KL) divergence is one of the most important divergence measures between probability distributions. In this paper, we investigate the properties of KL divergence between Gaussians. Firstly, for any two $n$-dimensional Gaussians $\mathcal{N}_1$ and $\mathcal{N}_2$, we find the supremum of $KL(\mathcal{N}_1||\mathcal{N}_2)$ when $KL(\mathcal{N}_2||\mathcal{N}_1)\leq \epsilon$ for $\epsilon>0$. This reveals the approximate symmetry of small KL divergence between Gaussians. We also find the infimum of $KL(\mathcal{N}_1||\mathcal{N}_2)$ when $KL(\mathcal{N}_2||\mathcal{N}_1)\geq M$ for $M>0$. Secondly, for any three $n$-dimensional Gaussians $\mathcal{N}_1, \mathcal{N}_2$ and $\mathcal{N}_3$, we find a bound of $KL(\mathcal{N}_1||\mathcal{N}_3)$ if $KL(\mathcal{N}_1||\mathcal{N}_2)$ and $KL(\mathcal{N}_2||\mathcal{N}_3)$ are bounded. This reveals that the KL divergence between Gaussians follows a relaxed triangle inequality. Importantly, all the bounds in the theorems presented in this paper are independent of the dimension $n$.
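For readers who want to experiment with the quantities discussed here, the following is a minimal sketch of the standard closed-form KL divergence between two multivariate Gaussians. The function name `kl_gaussians` and the example parameters are assumptions chosen for illustration; the bounds from the paper are not implemented.

```python
import numpy as np


def kl_gaussians(mu1, cov1, mu2, cov2):
    """KL(N1 || N2) for N1 = N(mu1, cov1), N2 = N(mu2, cov2), using
    0.5 * [tr(cov2^-1 cov1) + (mu2-mu1)^T cov2^-1 (mu2-mu1) - n
           + ln det(cov2) - ln det(cov1)]."""
    mu1 = np.asarray(mu1, dtype=float)
    mu2 = np.asarray(mu2, dtype=float)
    cov1 = np.asarray(cov1, dtype=float)
    cov2 = np.asarray(cov2, dtype=float)
    n = mu1.shape[0]
    diff = mu2 - mu1
    # Solve linear systems instead of forming cov2^-1 explicitly.
    trace_term = np.trace(np.linalg.solve(cov2, cov1))
    maha_term = diff @ np.linalg.solve(cov2, diff)
    _, logdet1 = np.linalg.slogdet(cov1)
    _, logdet2 = np.linalg.slogdet(cov2)
    return 0.5 * (trace_term + maha_term - n + logdet2 - logdet1)


# Hypothetical pair of nearby 2-D Gaussians.
mu_a, cov_a = [0.0, 0.0], np.eye(2)
mu_b, cov_b = [0.1, 0.0], np.diag([1.2, 0.9])

forward = kl_gaussians(mu_a, cov_a, mu_b, cov_b)
reverse = kl_gaussians(mu_b, cov_b, mu_a, cov_a)
print("KL(a || b) =", forward)
print("KL(b || a) =", reverse)
```

The two directions generally differ, which is the asymmetry that the approximate-symmetry result in the abstract quantifies when one direction is small.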

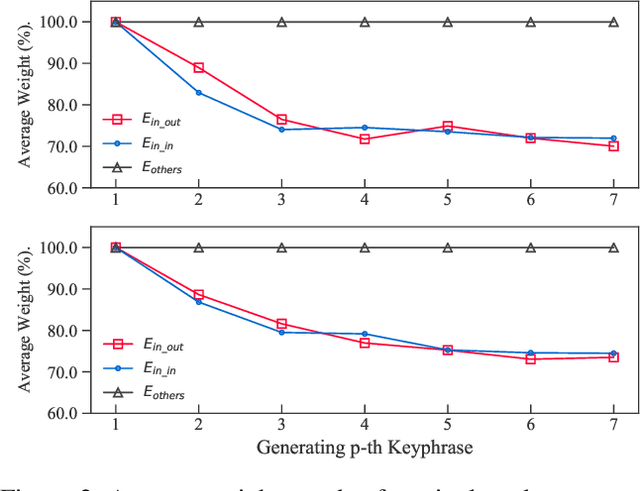

Keyphrase Extraction with Dynamic Graph Convolutional Networks and Diversified Inference

Oct 24, 2020

Abstract:Keyphrase extraction (KE) aims to summarize a set of phrases that accurately express a concept or a topic covered in a given document. Recently, the Sequence-to-Sequence (Seq2Seq) based generative framework has been widely used for the KE task, and it has obtained competitive performance on various benchmarks. The main challenges of Seq2Seq methods lie in acquiring an informative latent document representation and better modeling the compositionality of the target keyphrase set, which directly affect the quality of generated keyphrases. In this paper, we propose to adopt Dynamic Graph Convolutional Networks (DGCN) to solve the above two problems simultaneously. Concretely, we explore integrating dependency trees with GCN for latent representation learning. Moreover, the graph structure in our model is dynamically modified during the learning process according to the generated keyphrases. In this way, our approach is able to explicitly learn the relations within the keyphrase collection and guarantee the information interchange between encoder and decoder in both directions. Extensive experiments on various KE benchmark datasets demonstrate the effectiveness of our approach.

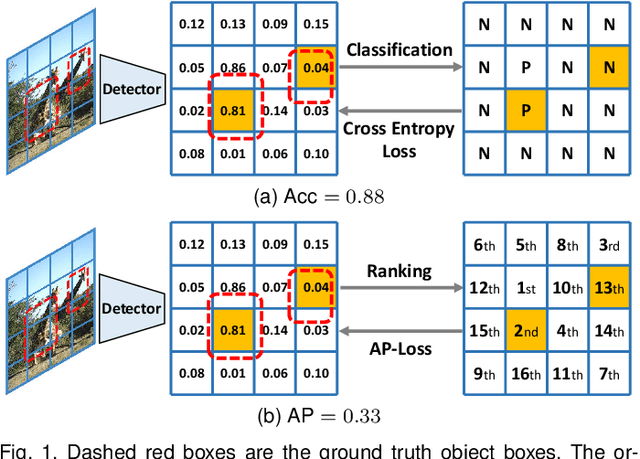

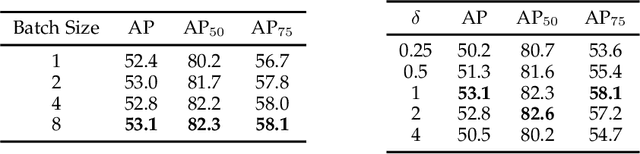

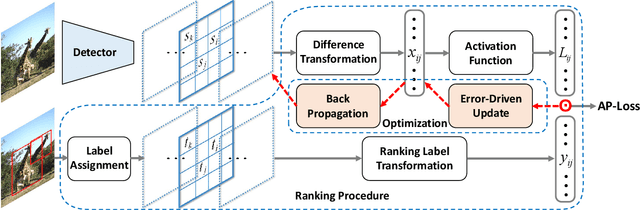

AP-Loss for Accurate One-Stage Object Detection

Aug 17, 2020

Abstract:One-stage object detectors are trained by optimizing classification-loss and localization-loss simultaneously, with the former suffering much from extreme foreground-background class imbalance issue due to the large number of anchors. This paper alleviates this issue by proposing a novel framework to replace the classification task in one-stage detectors with a ranking task, and adopting the Average-Precision loss (AP-loss) for the ranking problem. Due to its non-differentiability and non-convexity, the AP-loss cannot be optimized directly. For this purpose, we develop a novel optimization algorithm, which seamlessly combines the error-driven update scheme in perceptron learning and backpropagation algorithm in deep networks. We provide in-depth analyses on the good convergence property and computational complexity of the proposed algorithm, both theoretically and empirically. Experimental results demonstrate notable improvement in addressing the imbalance issue in object detection over existing AP-based optimization algorithms. An improved state-of-the-art performance is achieved in one-stage detectors based on AP-loss over detectors using classification-losses on various standard benchmarks. The proposed framework is also highly versatile in accommodating different network architectures. Code is available at https://github.com/cccorn/AP-loss .
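To make the ranking objective concrete, here is a minimal sketch that evaluates an AP-style loss on a list of scores. It assumes the loss is one minus average precision, computed from the rank of each positive among all samples and among positives, and that scores are distinct. The function name `ap_loss` and the toy inputs are hypothetical, and the error-driven optimization scheme described in the abstract is not reproduced; the authors' actual implementation is in the linked repository.

```python
import numpy as np


def ap_loss(scores, labels):
    """Return 1 - average precision for binary labels (1 = positive).
    Assumes distinct scores; a higher score means ranked earlier."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    pos_scores = scores[labels]
    if pos_scores.size == 0:
        raise ValueError("need at least one positive sample")
    precisions = []
    for s in pos_scores:
        rank_all = np.sum(scores >= s)      # rank among all samples
        rank_pos = np.sum(pos_scores >= s)  # rank among positives only
        precisions.append(rank_pos / rank_all)
    return 1.0 - float(np.mean(precisions))


# Hypothetical scores: one negative is ranked between the two positives.
print(ap_loss([0.9, 0.8, 0.7], [1, 0, 1]))  # about 0.1667
# Perfect ranking: all positives are scored above the negative.
print(ap_loss([0.9, 0.8, 0.1], [1, 1, 0]))  # 0.0
```

Because the loss depends on scores only through their ordering, it is piecewise constant in the scores, which is why the abstract notes that it cannot be optimized directly by gradient descent.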

Input Validation for Neural Networks via Runtime Local Robustness Verification

Feb 09, 2020

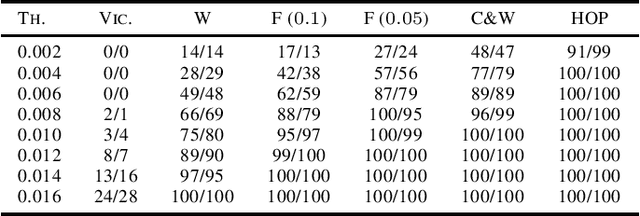

Abstract:Local robustness verification can verify that a neural network is robust with respect to any perturbation to a specific input within a certain distance. We call this distance the robustness radius. We observe that the robustness radii of correctly classified inputs are much larger than those of misclassified inputs, which include adversarial examples, especially those from strong adversarial attacks. Another observation is that the robustness radii of correctly classified inputs often follow a normal distribution. Based on these two observations, we propose to validate inputs for neural networks via runtime local robustness verification. Experiments show that our approach can protect neural networks from adversarial examples and improve their accuracies.
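The validation idea can be sketched on a model whose robustness radius has a closed form. The example below swaps in a linear classifier, for which the L2 distance to the nearest decision boundary is exact, in place of a neural network and a verifier. The function names, the weights, and the threshold value are all assumptions made for illustration, not the paper's setup.

```python
import numpy as np


def linear_robustness_radius(W, b, x):
    """Exact L2 robustness radius of the linear classifier argmax(W x + b)
    at input x: the distance to the nearest pairwise decision boundary.
    Assumes at least two classes and pairwise distinct rows of W."""
    logits = W @ x + b
    pred = int(np.argmax(logits))
    radii = []
    for j in range(W.shape[0]):
        if j == pred:
            continue
        margin = logits[pred] - logits[j]
        radii.append(margin / np.linalg.norm(W[pred] - W[j]))
    return pred, float(min(radii))


def validate_input(W, b, x, threshold):
    """Accept the input only if its robustness radius reaches the threshold."""
    pred, radius = linear_robustness_radius(W, b, x)
    return radius >= threshold, pred, radius


# Hypothetical two-class linear model and an illustrative threshold.
W = np.array([[1.0, 0.0],
              [0.0, 1.0]])
b = np.zeros(2)
threshold = 0.5

confident = validate_input(W, b, np.array([2.0, 0.0]), threshold)
borderline = validate_input(W, b, np.array([1.05, 1.0]), threshold)
print(confident)   # accepted: far from the decision boundary
print(borderline)  # rejected: very close to the decision boundary
```

In the setting of the abstract, the radius would come from a local robustness verifier run at inference time, and the threshold would be chosen from the observed distribution of radii on correctly classified inputs.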
