Helen Hastie

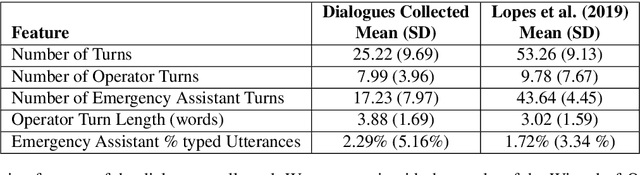

The Lab vs The Crowd: An Investigation into Data Quality for Neural Dialogue Models

Dec 07, 2020

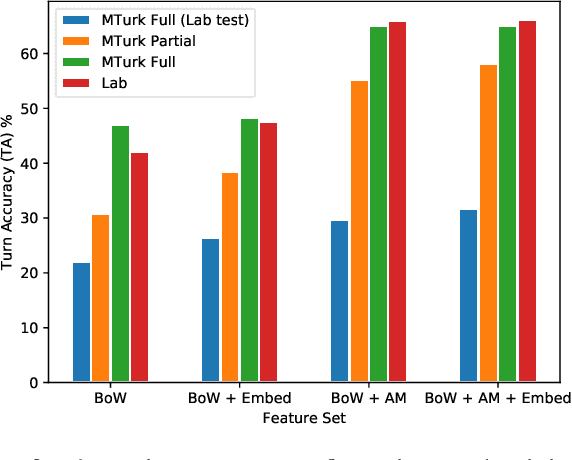

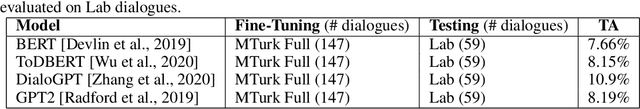

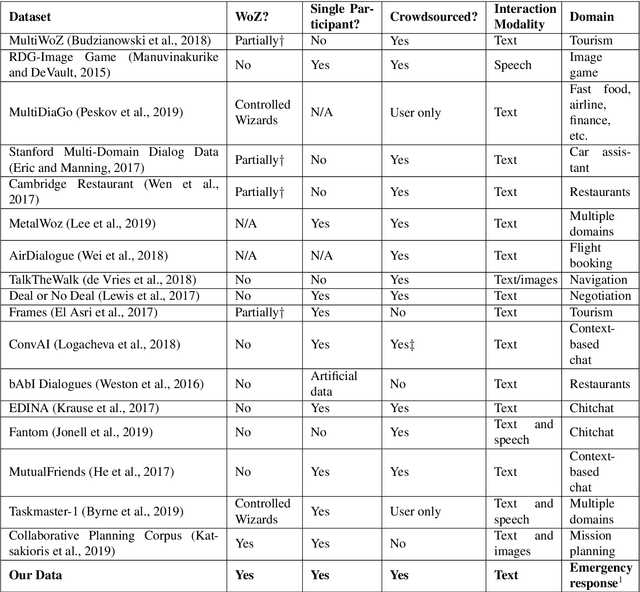

Abstract: Challenges around collecting and processing quality data have hampered progress in data-driven dialogue models. Previous approaches are moving away from costly, resource-intensive lab settings, where collection is slow but where the data is deemed of high quality. The advent of crowd-sourcing platforms, such as Amazon Mechanical Turk, has provided researchers with an alternative cost-effective and rapid way to collect data. However, the collection of fluid, natural spoken or textual interaction can be challenging, particularly between two crowd-sourced workers. In this study, we compare the performance of dialogue models for the same interaction task but collected in two different settings: in the lab vs. crowd-sourced. We find that fewer lab dialogues are needed to reach similar accuracy: less than half as much lab data as crowd-sourced data. We discuss the advantages and disadvantages of each data collection method.

Transfer Learning for British Sign Language Modelling

Jun 03, 2020

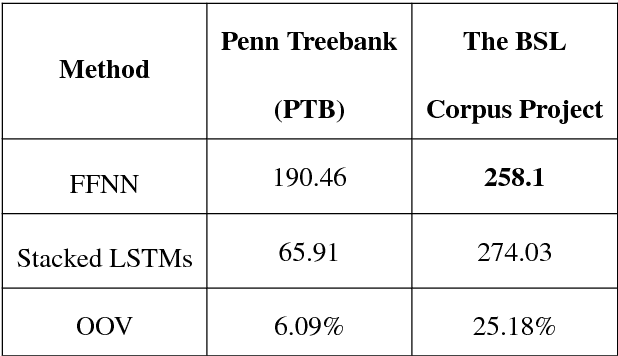

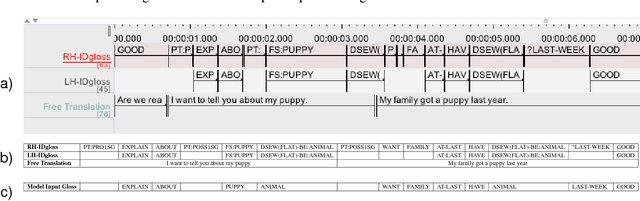

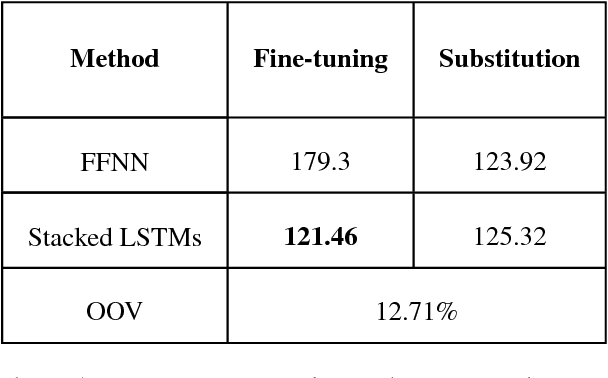

Abstract: Automatic speech recognition and spoken dialogue systems have made great advances through the use of deep machine learning methods. This is partly due to greater computing power but also to the large amount of data available in common languages, such as English. Conversely, research in minority languages, including sign languages, is hampered by the severe lack of data. This has led to work on transfer learning methods, whereby a model developed for one language is reused as the starting point for a model on a second, less-resourced language. In this paper, we examine two transfer learning techniques, fine-tuning and layer substitution, for language modelling of British Sign Language. Our results show improvement in perplexity when using transfer learning with standard stacked LSTM models, trained initially on a large corpus of standard English from the Penn Treebank.

* 10 pages, 3 figures

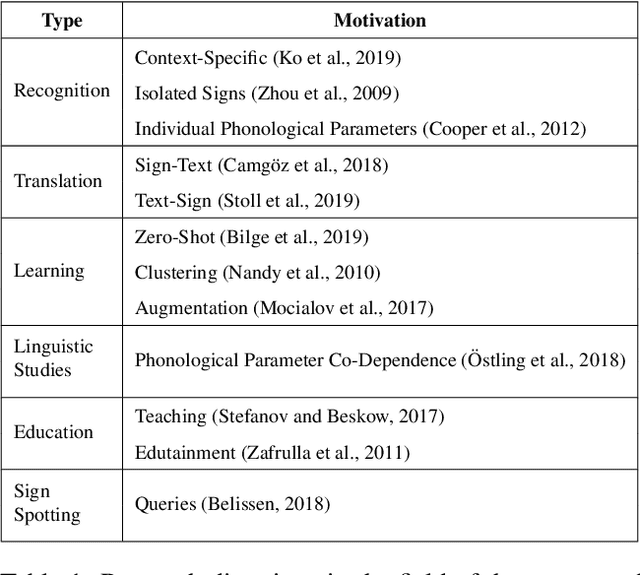

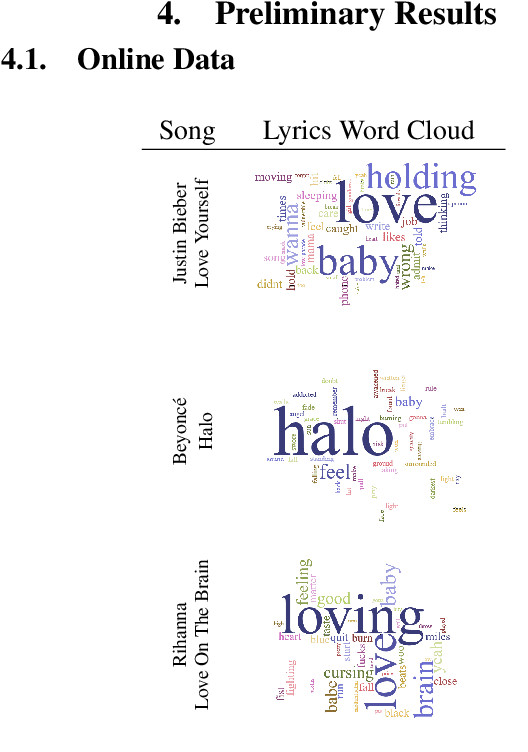

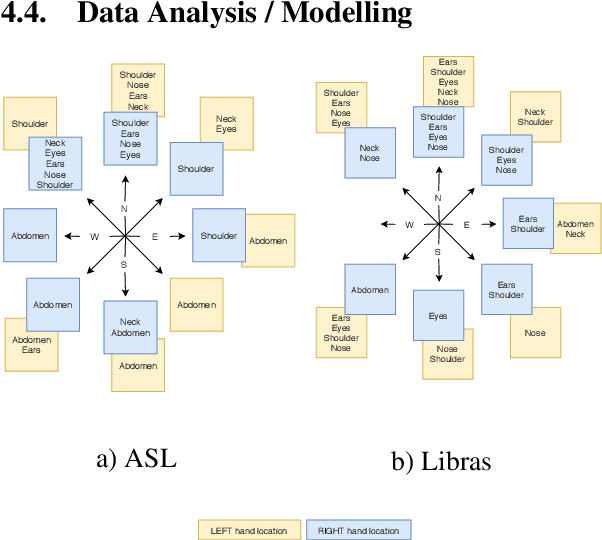

Towards Large-Scale Data Mining for Data-Driven Analysis of Sign Languages

Jun 03, 2020

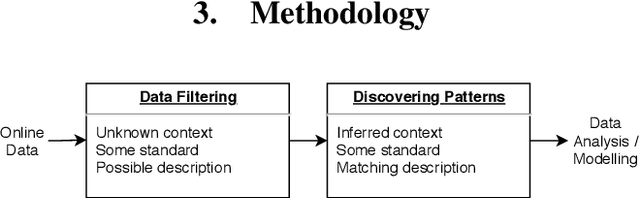

Abstract: Access to sign language data is far from adequate. We show that it is possible to collect the data from social networking services such as TikTok, Instagram, and YouTube by applying data filtering to enforce quality standards and by discovering patterns in the filtered data, making it easier to analyse and model. Using our data collection pipeline, we collect and examine the interpretation of songs in both American Sign Language (ASL) and Brazilian Sign Language (Libras). We explore their differences and similarities by looking at the co-dependence of the orientation and location phonological parameters.

* https://colab.research.google.com/drive/118Sx1ua-NXy9kjqWi94vz-RrRVYKmnDl?usp=sharing

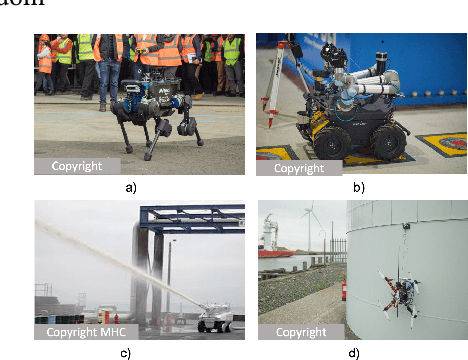

Robots in the Danger Zone: Exploring Public Perception through Engagement

Apr 01, 2020

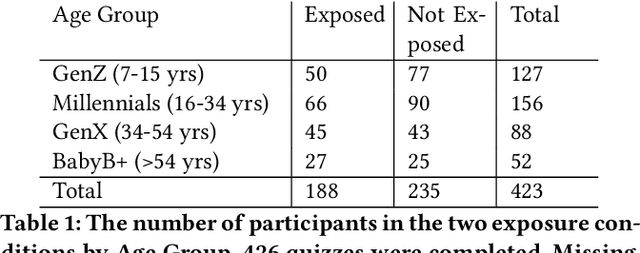

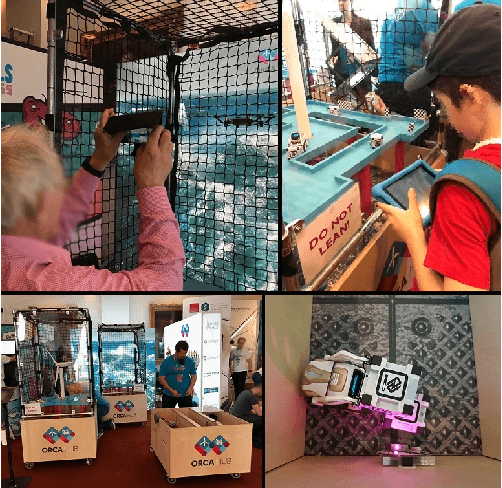

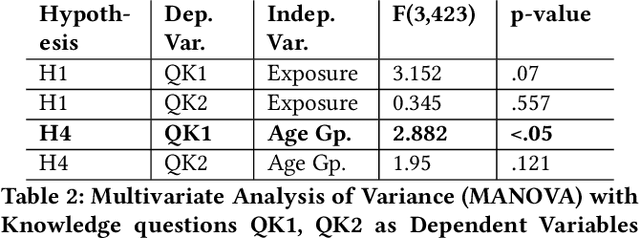

Abstract: Public perceptions of Robotics and Artificial Intelligence (RAI) are important in the acceptance, uptake, government regulation and research funding of this technology. Recent research has shown that the public's understanding of RAI can be negative or inaccurate. We believe effective public engagement can help ensure that public opinion is better informed. In this paper, we describe our first iteration of a high-throughput, in-person public engagement activity. We describe the use of a light-touch, quiz-format survey instrument to integrate in-the-wild research participation into the engagement, allowing us to probe both the effectiveness of our engagement strategy and public perceptions of the future roles of robots and humans working in dangerous settings, such as in the off-shore energy sector. We critique our methods and share interesting results on generational differences within the public's view of the future of Robotics and AI in hazardous environments. These findings include that older people's views about the future of robots in hazardous environments were not swayed by exposure to our exhibit, while the views of younger people were affected by it, leading us to consider carefully how to engage with and inform older people more effectively in future.

* Accepted in HRI 2020, Keywords: Human robot interaction, robotics, artificial intelligence, public engagement, public perceptions of robots, robotics and society

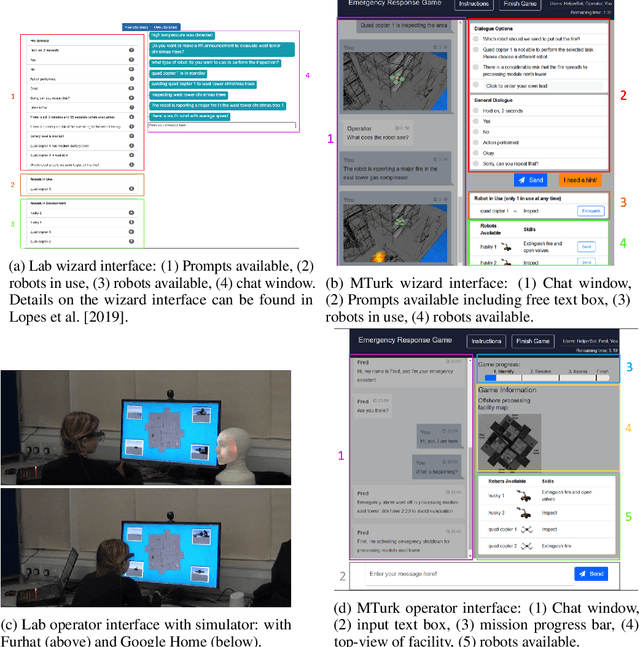

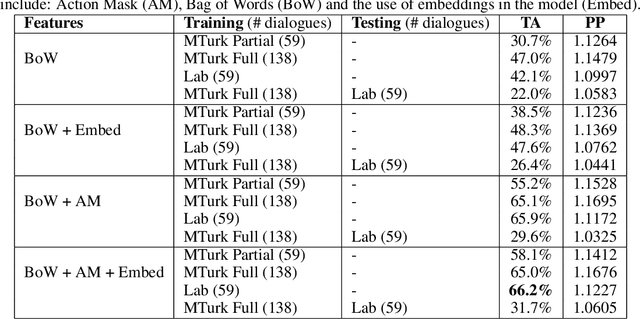

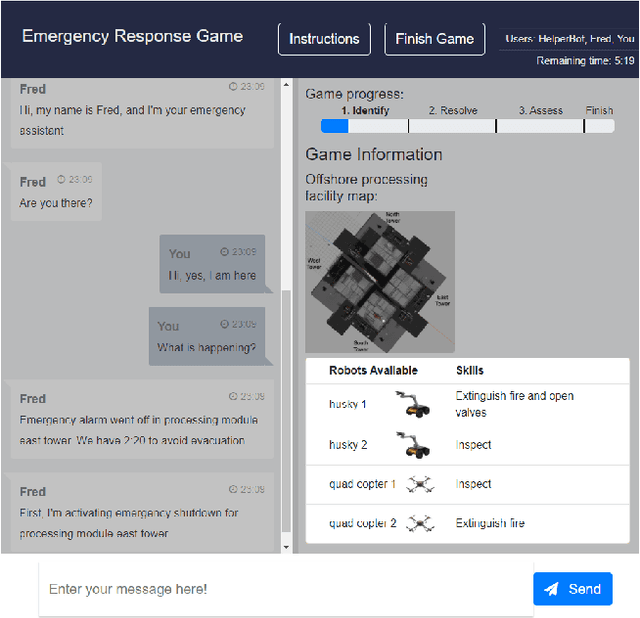

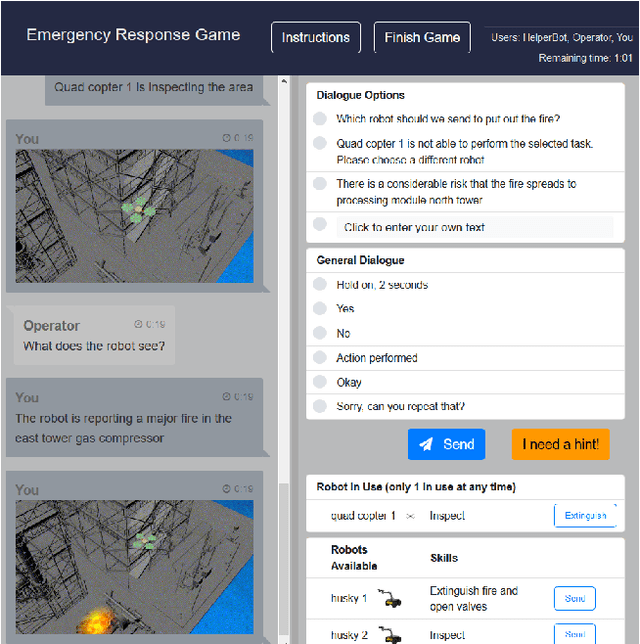

CRWIZ: A Framework for Crowdsourcing Real-Time Wizard-of-Oz Dialogues

Mar 12, 2020

Abstract: Large corpora of task-based and open-domain conversational dialogues are hugely valuable in the field of data-driven dialogue systems. Crowdsourcing platforms, such as Amazon Mechanical Turk, have been an effective method for collecting such large amounts of data. However, difficulties arise when task-based dialogues require expert domain knowledge or rapid access to domain-relevant information, such as databases for tourism. This will become even more prevalent as dialogue systems become increasingly ambitious, expanding into tasks with high levels of complexity that require collaboration and forward planning, such as in our domain of emergency response. In this paper, we propose CRWIZ: a framework for collecting real-time Wizard of Oz dialogues through crowdsourcing for collaborative, complex tasks. This framework uses semi-guided dialogue to avoid interactions that breach procedures and processes only known to experts, while enabling the capture of a wide variety of interactions. The framework is available at https://github.com/JChiyah/crwiz

Natural Language Interaction to Facilitate Mental Models of Remote Robots

Mar 12, 2020

Abstract: Increasingly complex and autonomous robots are being deployed in real-world environments with far-reaching consequences. High-stakes scenarios, such as emergency response or offshore energy platform and nuclear inspections, require robot operators to have clear mental models of what the robots can and can't do. However, operators are often not the original designers of the robots and thus do not necessarily have such clear mental models, especially if they are novice users. This lack of mental model clarity can slow adoption and can negatively impact human-machine teaming. We propose that interaction with a conversational assistant, which acts as a mediator, can help the user understand the functionality of remote robots and increase transparency through natural language explanations, as well as facilitate the evaluation of operators' mental models.

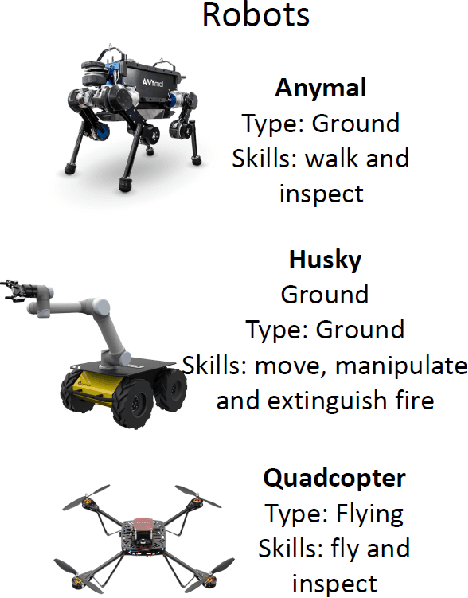

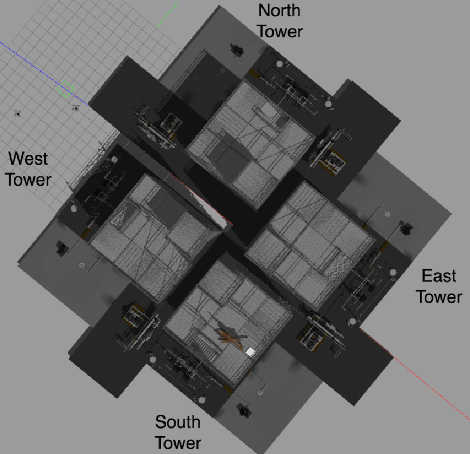

Challenges in Collaborative HRI for Remote Robot Teams

May 17, 2019

Abstract: Collaboration between human supervisors and remote teams of robots is highly challenging, particularly in high-stakes, distant, hazardous locations, such as off-shore energy platforms. In order for these teams of robots to truly be beneficial, they need to be trusted to operate autonomously, performing tasks such as inspection and emergency response, thus reducing the number of personnel placed in harm's way. As remote robots are generally trusted less than robots in close proximity, we present a solution to instil trust in the operator through a 'mediator robot' that can exhibit social skills, alongside sophisticated visualisation techniques. In this position paper, we present general challenges and then take a closer look at one challenge in particular, discussing an initial study that investigates the relationship between the level of control the supervisor hands over to the mediator robot and how this affects their trust. We show that the supervisor is more likely to have higher trust overall if their initial experience involves handing over control of the emergency situation to the robotic assistant. We discuss this result here, as well as other challenges and interaction techniques for human-robot collaboration.

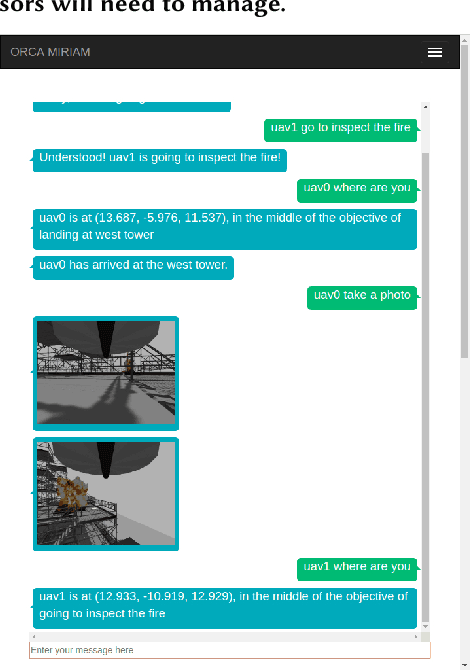

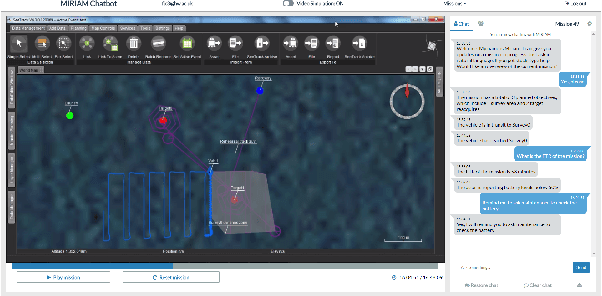

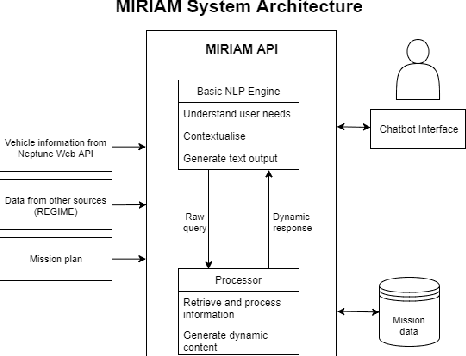

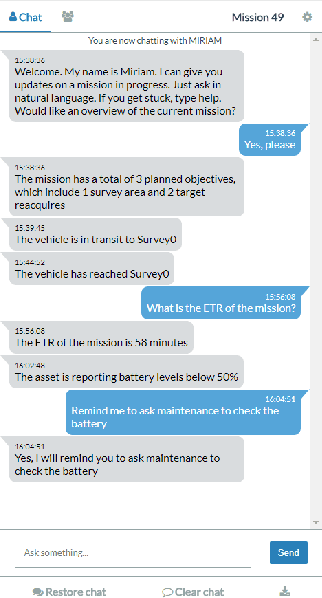

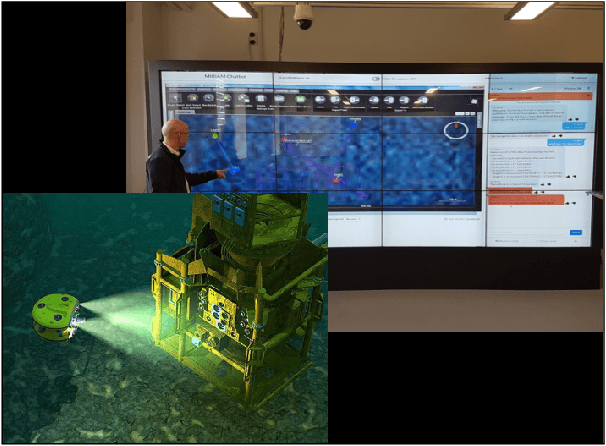

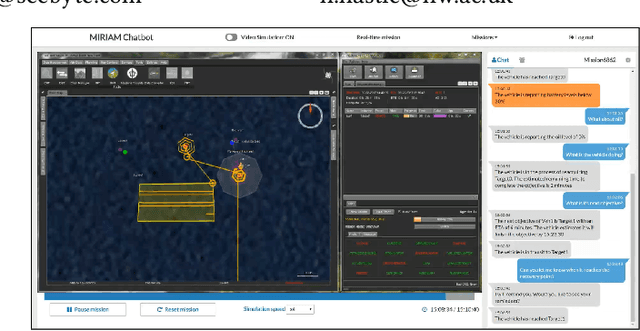

MIRIAM: A Multimodal Chat-Based Interface for Autonomous Systems

Mar 06, 2018

Abstract: We present MIRIAM (Multimodal Intelligent inteRactIon for Autonomous systeMs), a multimodal interface to support situation awareness of autonomous vehicles through chat-based interaction. The user is able to chat about the vehicle's plan, objectives, previous activities and mission progress. The system is mixed initiative in that it pro-actively sends messages about key events, such as fault warnings. We will demonstrate MIRIAM using SeeByte's SeeTrack command and control interface and Neptune autonomy simulator.

The ORCA Hub: Explainable Offshore Robotics through Intelligent Interfaces

Mar 06, 2018

Abstract: We present the UK Robotics and Artificial Intelligence Hub for Offshore Robotics for Certification of Assets (ORCA Hub), a 3.5-year, EPSRC-funded, multi-site project. The ORCA Hub vision is to use teams of robots and autonomous intelligent systems (AIS) to work on offshore energy platforms to enable cheaper, safer and more efficient working practices. The ORCA Hub will research, integrate, validate and deploy remote AIS solutions that can operate with existing and future offshore energy assets and sensors, interacting safely in autonomous or semi-autonomous modes in complex and cluttered environments, co-operating with remote operators. The goal is that, through the use of such robotic systems offshore, the need for personnel will decrease. To enable this, the remote operator will need a high level of situation awareness, and key to this is transparency about what the autonomous systems are doing and why. This increased transparency will facilitate a trusting relationship, which is particularly important in high-stakes, hazardous situations.

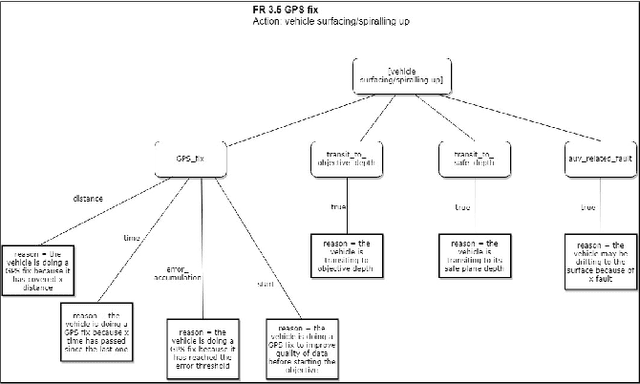

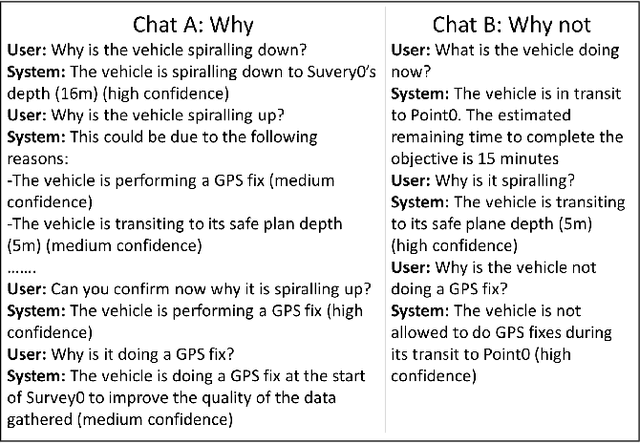

Explain Yourself: A Natural Language Interface for Scrutable Autonomous Robots

Mar 06, 2018

Abstract: Autonomous systems in remote locations have a high degree of autonomy, and there is a need to explain what they are doing and why in order to increase transparency and maintain trust. Here, we describe a natural language chat interface that enables vehicle behaviour to be queried by the user. We obtain an interpretable model of autonomy by having an expert 'speak out-loud' and provide explanations during a mission. This approach is agnostic to the type of autonomy model and, as the expert and operator are from the same user group, we predict that these explanations will align well with the operator's mental model, increase transparency and assist with operator training.
