Ronald P. A. Petrick

Heriot-Watt University

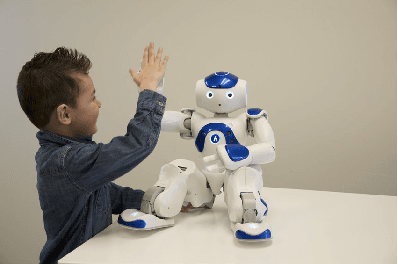

A Socially Assistive Robot using Automated Planning in a Paediatric Clinical Setting

Oct 18, 2022

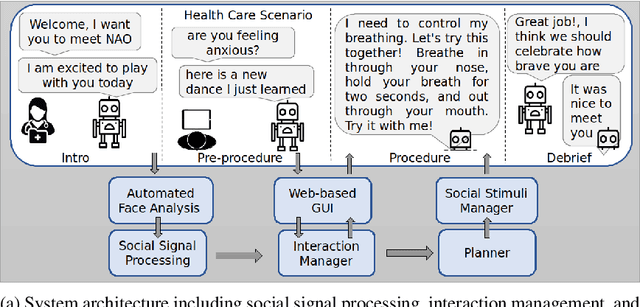

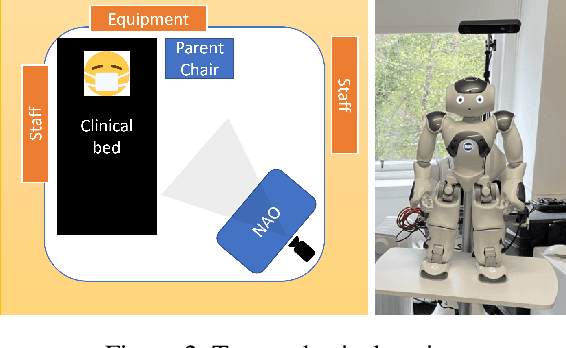

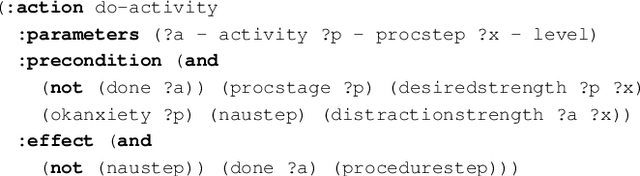

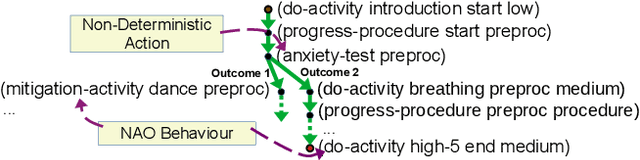

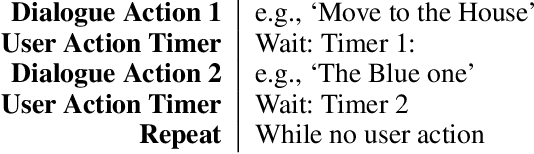

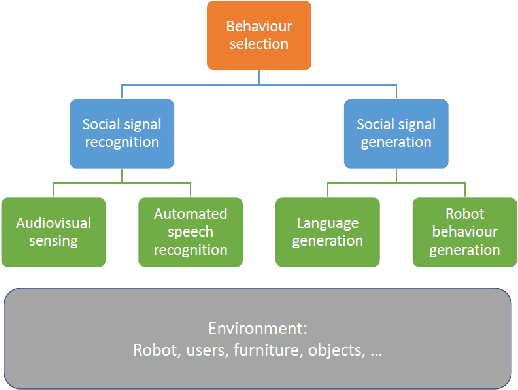

Abstract: We present an ongoing project that aims to develop a social robot to help children cope with painful and distressing medical procedures in a clinical setting. Our approach uses automated planning as a core component for action selection in order to generate plans that include physical, sensory, and social actions for the robot to use when interacting with humans. A key capability of our system is that the robot's behaviour adapts based on the affective state of the child patient. The robot must operate in a challenging physical and social environment where appropriate and safe interaction with children, parents/caregivers, and healthcare professionals is crucial. In this paper, we present our system, examine some of the key challenges of the scenario, and describe how they are addressed by our system.

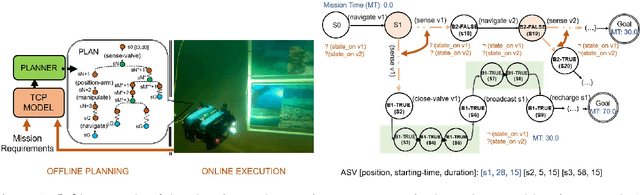

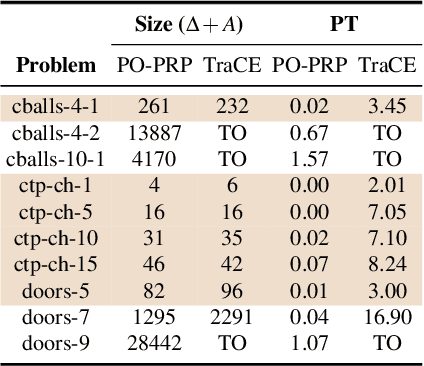

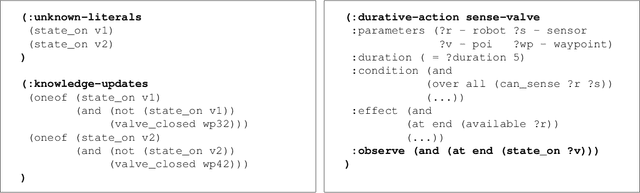

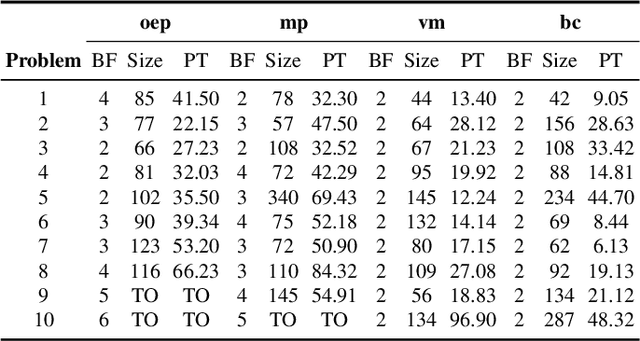

Temporal Planning with Incomplete Knowledge and Perceptual Information

Jul 20, 2022

Abstract: In real-world applications, the ability to reason about incomplete knowledge, sensing, temporal notions, and numeric constraints is vital. While several AI planners are capable of dealing with some of these requirements, they are mostly limited to problems with specific types of constraints. This paper presents a new planning approach that combines contingent plan construction within a temporal planning framework, offering solutions that consider numeric constraints and incomplete knowledge. We propose a small extension to the Planning Domain Definition Language (PDDL) to model (i) incomplete knowledge, (ii) sensing actions that operate over unknown propositions, and (iii) possible outcomes from non-deterministic sensing effects. We also introduce a new set of planning domains to evaluate our solver, which has shown good performance on a variety of problems.

* In Proceedings AREA 2022, arXiv:2207.09058
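
As a rough illustration of what a contingent plan with sensing actions looks like, the following Python sketch represents a plan as a tree that branches on the sensed value of an initially unknown proposition. It is not the paper's solver or its PDDL extension, and it ignores temporal and numeric constraints entirely; the names `Act`, `Sense`, `execute`, and the `door_open` proposition are hypothetical and chosen only for this example.

```python
from dataclasses import dataclass
from typing import Optional, Union


@dataclass
class Act:
    """An ordinary (non-sensing) action followed by the rest of the plan."""
    name: str
    next: Optional["Plan"] = None


@dataclass
class Sense:
    """A sensing action: observe `prop`, then continue along one branch."""
    prop: str
    if_true: Optional["Plan"]
    if_false: Optional["Plan"]


Plan = Union[Act, Sense]


def execute(plan, world):
    """Run a contingent plan against a world that fixes the truth value of
    every sensed proposition; return the names of the actions executed."""
    trace = []
    while plan is not None:
        if isinstance(plan, Sense):
            # The sensed value is unknown at planning time and resolved here.
            plan = plan.if_true if world[plan.prop] else plan.if_false
        else:
            trace.append(plan.name)
            plan = plan.next
    return trace


# Hypothetical plan: sense whether a door is open, and open it only if needed.
plan = Sense(
    "door_open",
    if_true=Act("walk_through"),
    if_false=Act("open_door", Act("walk_through")),
)
```

Calling `execute(plan, {"door_open": False})` follows the second branch. A contingent planner has to produce a branch for every possible sensing outcome, which is what the tree structure makes explicit.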

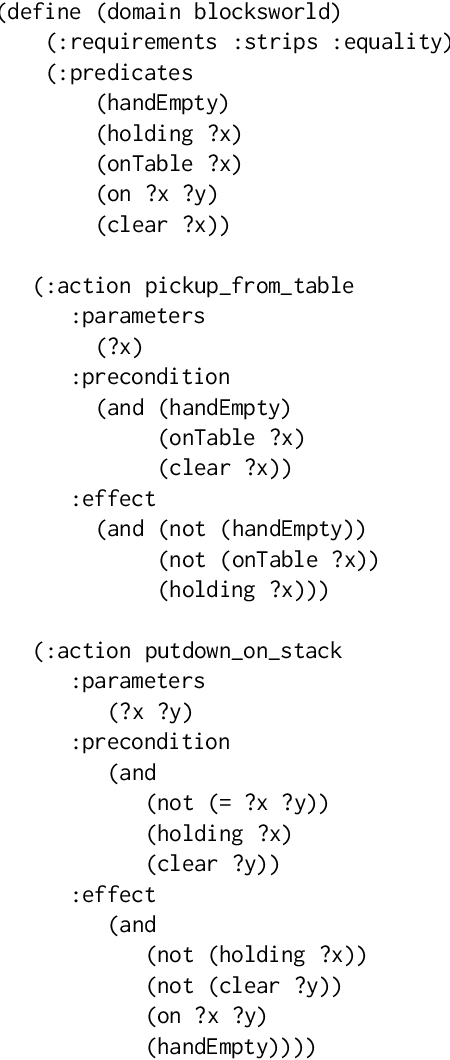

Actions You Can Handle: Dependent Types for AI Plans

May 24, 2021

Abstract: Verification of AI is a challenge that has engineering, algorithmic and programming language components. For example, AI planners are deployed to model actions of autonomous agents. They comprise a number of search algorithms that, given a set of specified properties, find a sequence of actions that satisfies these properties. Although AI planners are mature tools from the algorithmic and engineering points of view, they have limitations as programming languages. Decidable and efficient automated search entails restrictions on the syntax of the language, prohibiting use of higher-order properties or recursion. This paper proposes a methodology for embedding plans produced by AI planners into the dependently typed language Agda, which enables users to reason about and verify more general and abstract properties of plans, and also provides a more holistic programming language infrastructure for modelling plan execution.

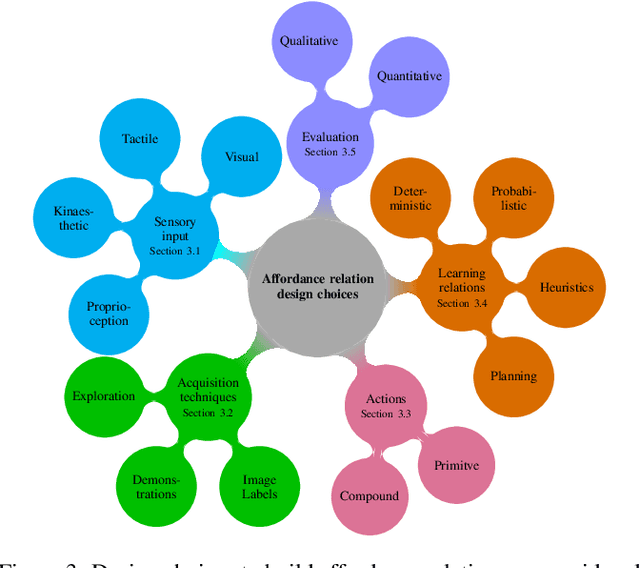

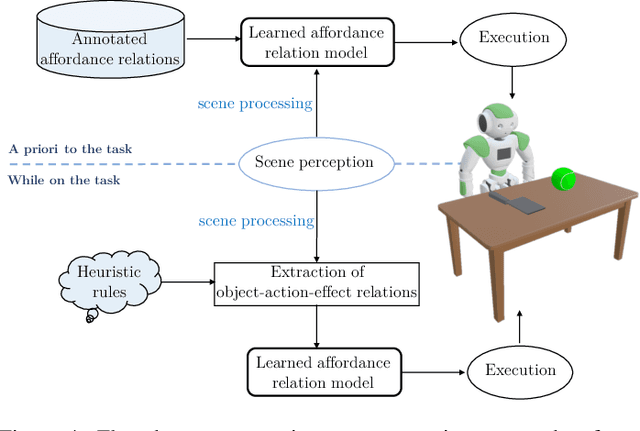

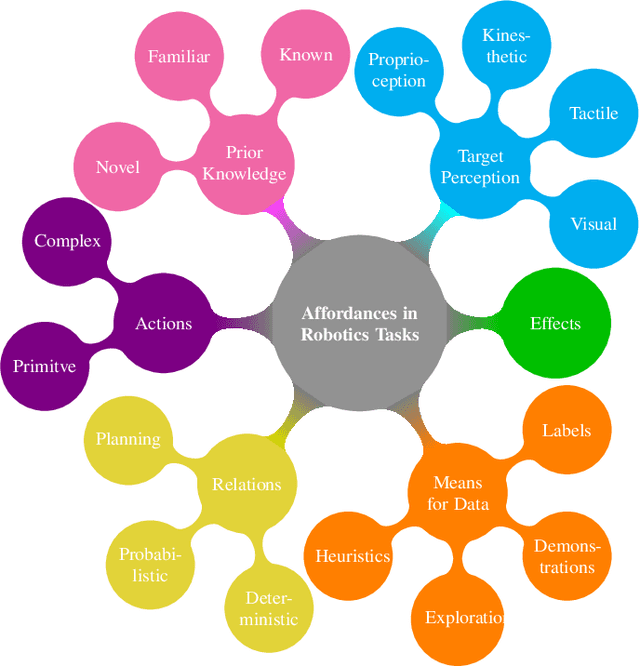

Building Affordance Relations for Robotic Agents - A Review

May 14, 2021

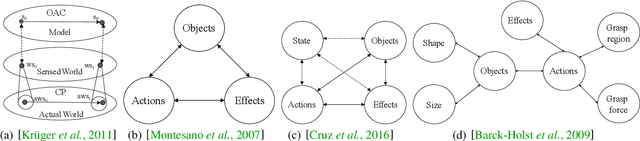

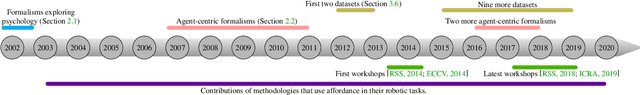

Abstract: Affordances describe the possibilities for an agent to perform actions with an object. While the significance of the affordance concept has been previously studied from varied perspectives, such as psychology and cognitive science, these approaches are not always sufficient to enable direct transfer, in the sense of implementations, to artificial intelligence (AI)-based systems and robotics. However, many efforts have been made to pragmatically employ the concept of affordances, as it represents great potential for AI agents to effectively bridge perception to action. In this survey, we review and find common ground amongst different strategies that use the concept of affordances within robotic tasks, and build on these methods to provide guidance for including affordances as a mechanism to improve autonomy. To this end, we outline common design choices for building representations of affordance relations, and their implications for the generalisation capabilities of an agent when facing previously unseen scenarios. Finally, we identify and discuss a range of interesting research directions involving affordances that have the potential to improve the capabilities of an AI agent.

Investigating Human Response, Behaviour, and Preference in Joint-Task Interaction

Nov 27, 2020

Abstract: Human interaction relies on a wide range of signals, including non-verbal cues. In order to develop effective Explainable Planning (XAIP) agents it is important that we understand the range and utility of these communication channels. Our starting point is existing results from joint task interaction and their study in cognitive science. Our intention is that these lessons can inform the design of interaction agents -- including those using planning techniques -- whose behaviour is conditioned on the user's response, including affective measures of the user (i.e., explicitly incorporating the user's affective state within the planning model). We have identified several concepts at the intersection of plan-based agent behaviour and joint task interaction and have used these to design two agents: one reactive and the other partially predictive. We have designed an experiment in order to examine human behaviour and response as they interact with these agents. In this paper we present the designed study and the key questions that are being investigated. We also present the results from an empirical analysis where we examined the behaviour of the two agents for simulated users.

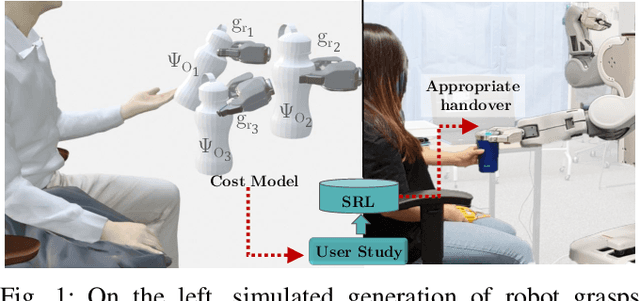

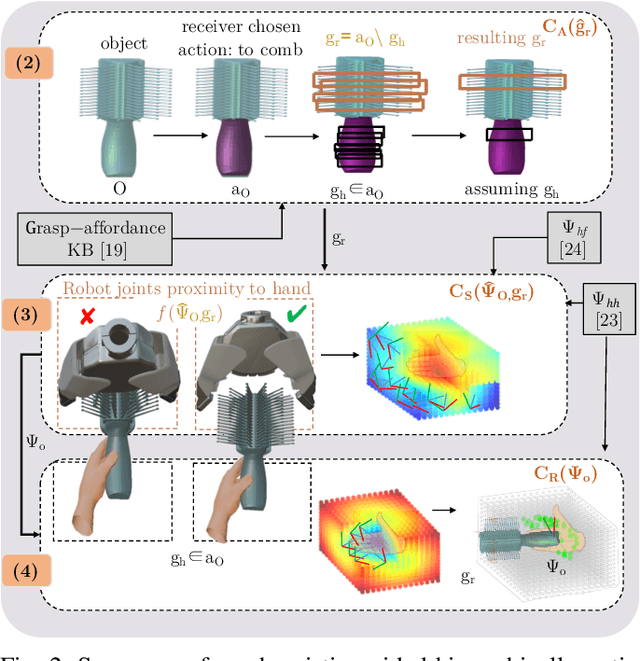

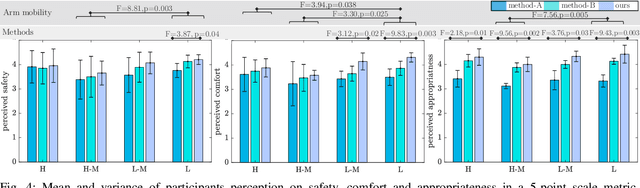

Affordance-Aware Handovers with Human Arm Mobility Constraints

Oct 29, 2020

Abstract: Reasoning about object handover configurations allows an assistive agent to estimate the appropriateness of handover for a receiver with different arm mobility capacities. While there are existing approaches to estimating the effectiveness of handovers, their findings are limited to users without arm mobility impairments and to specific objects. Therefore, current state-of-the-art approaches are unable to hand over novel objects to receivers with different arm mobility capacities. We propose a method that generalises handover behaviours to previously unseen objects, subject to the constraint of a user's arm mobility levels and the task context. We propose a heuristic-guided hierarchically optimised cost whose optimisation adapts object configurations for receivers with low arm mobility. This also ensures that the robot's grasps consider the context of the user's upcoming task, i.e., the usage of the object. To understand preferences over handover configurations, we report on the findings of an online study, wherein we presented different handover methods, including ours, to 259 users with different levels of arm mobility. We encapsulate these preferences in a statistical relational learner (SRL) that is able to reason about the most suitable handover configuration given a receiver's arm mobility and upcoming task. We find that people's preferences over handover methods are correlated with their arm mobility capacities. In experiments with a PR2 robotic platform, we obtained an average handover accuracy of 90.8% when generalising handovers to novel objects.

Towards Social HRI for Improving Children's Healthcare Experiences

Oct 09, 2020

Abstract: This paper describes a new research project that aims to develop a social robot designed to help children cope with painful and distressing medical procedures in a clinical setting. While robots have previously been trialled for this task, with promising initial results, the systems have tended to be teleoperated, limiting their flexibility and robustness. This project will use epistemic planning techniques as a core component for action selection in the robot system, in order to generate plans that include physical, sensory, and social actions for interacting with humans. The robot will operate in a task environment where appropriate and safe interaction with children, parents/caregivers, and healthcare professionals is required. In addition to addressing the core technical challenge of building an autonomous social robot, the project will incorporate co-design techniques involving all participant groups, and the final robot system will be evaluated in a two-site clinical trial.

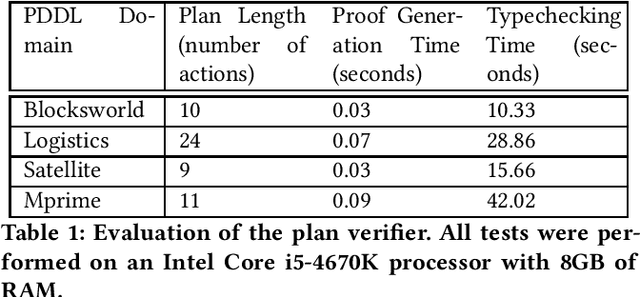

Proof-Carrying Plans: a Resource Logic for AI Planning

Aug 10, 2020

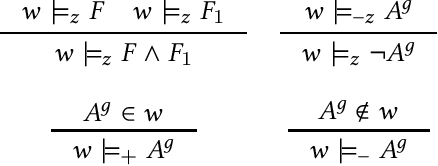

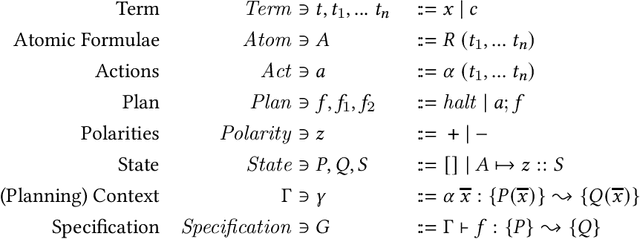

Abstract: Recent trends in AI verification and Explainable AI have raised the question of whether AI planning techniques can be verified. In this paper, we present a novel resource logic, the Proof Carrying Plans (PCP) logic, that can be used to verify plans produced by AI planners. The PCP logic takes inspiration from existing resource logics (such as Linear logic and Separation logic) as well as Hoare logic when it comes to modelling states and resource-aware plan execution. It also capitalises on the Curry-Howard approach to logics, in its treatment of plans as functions and plan pre- and post-conditions as types. This paper presents two main results. From the theoretical perspective, we show that the PCP logic is sound relative to the standard possible world semantics used in AI planning. From the practical perspective, we present a complete Agda formalisation of the PCP logic and of its soundness proof. Moreover, we showcase the Curry-Howard, or functional, value of this implementation by supplementing it with a library that parses AI plans into Agda's proofs automatically. We provide an evaluation of this library and the resulting Agda functions.
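
To make the Hoare-logic reading of plans concrete, the sketch below checks a STRIPS-style plan at run time: each action has a precondition and add/delete effects, and the plan is accepted only if every precondition holds when its action is applied and the goal holds at the end. This is only an informal analogue, not the PCP logic or its Agda formalisation, which establish the same kind of guarantee statically through types. All names here (`Action`, `apply_action`, `verify_plan`, and the blocks-world propositions) are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A STRIPS-style action: precondition plus add and delete effects."""
    name: str
    pre: frozenset
    add: frozenset
    delete: frozenset


def apply_action(state, action):
    """Return the successor state, or raise if the precondition fails."""
    if not action.pre <= state:
        raise ValueError(f"precondition of {action.name!r} does not hold")
    return (state - action.delete) | action.add


def verify_plan(initial, plan, goal):
    """Check the triple {initial} plan {goal} by executing the plan."""
    state = frozenset(initial)
    for action in plan:
        state = apply_action(state, action)
    return frozenset(goal) <= state


# Hypothetical two-block example: pick up block a and stack it on block b.
pickup_a = Action(
    "pickup_a",
    pre=frozenset({"handempty", "ontable_a", "clear_a"}),
    add=frozenset({"holding_a"}),
    delete=frozenset({"handempty", "ontable_a", "clear_a"}),
)
stack_a_b = Action(
    "stack_a_b",
    pre=frozenset({"holding_a", "clear_b"}),
    add=frozenset({"on_a_b", "handempty", "clear_a"}),
    delete=frozenset({"holding_a", "clear_b"}),
)
initial = {"handempty", "ontable_a", "clear_a", "ontable_b", "clear_b"}
```

With these definitions, `verify_plan(initial, [pickup_a, stack_a_b], {"on_a_b"})` succeeds, while applying `stack_a_b` first raises an error because `holding_a` is not yet true. A type-level encoding would reject the second plan before execution rather than during it.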

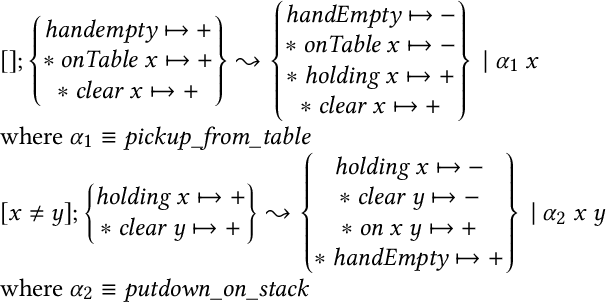

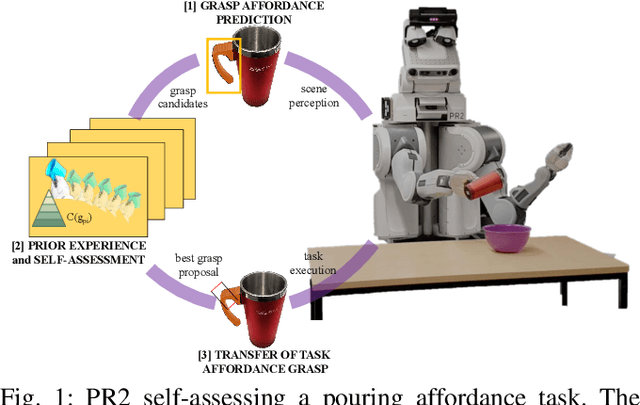

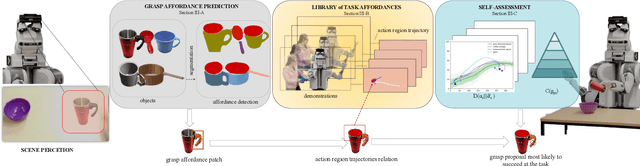

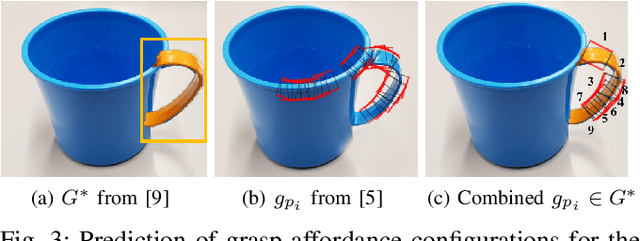

Self-Assessment of Grasp Affordance Transfer

Jul 04, 2020

Abstract: Reasoning about object grasp affordances allows an autonomous agent to estimate the most suitable grasp to execute a task. While current approaches for estimating grasp affordances are effective, their prediction is driven by hypotheses on visual features rather than an indicator of a proposal's suitability for an affordance task. Consequently, these works cannot guarantee any level of performance when executing a task and, in fact, cannot even ensure successful task completion. In this work, we present a pipeline for self-assessment of grasp affordance transfer (SAGAT) based on prior experiences. We visually detect a grasp affordance region to extract multiple grasp affordance configuration candidates. Using these candidates, we forward simulate the outcome of executing the affordance task to analyse the relation between task outcome and grasp candidates. The relations are ranked by performance success with a heuristic confidence function and used to build a library of affordance task experiences. The library is later queried to perform one-shot transfer estimation of the best grasp configuration on new objects. Experimental evaluation shows that our method exhibits a significant performance improvement of up to 11.7% over current state-of-the-art methods on grasp affordance detection. Experiments on a PR2 robotic platform demonstrate our method's highly reliable deployability to deal with real-world task affordance problems.

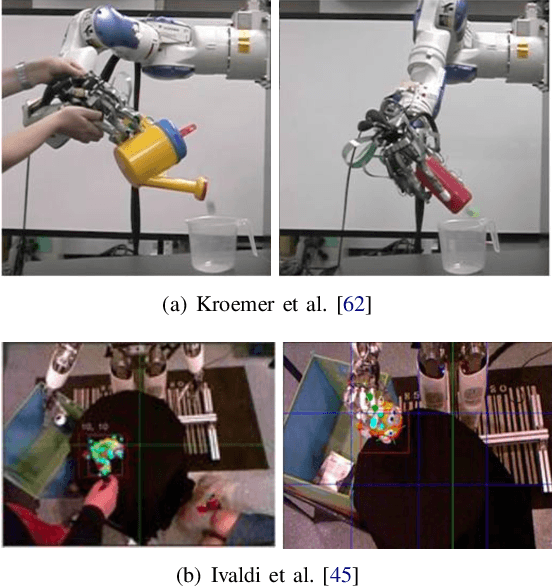

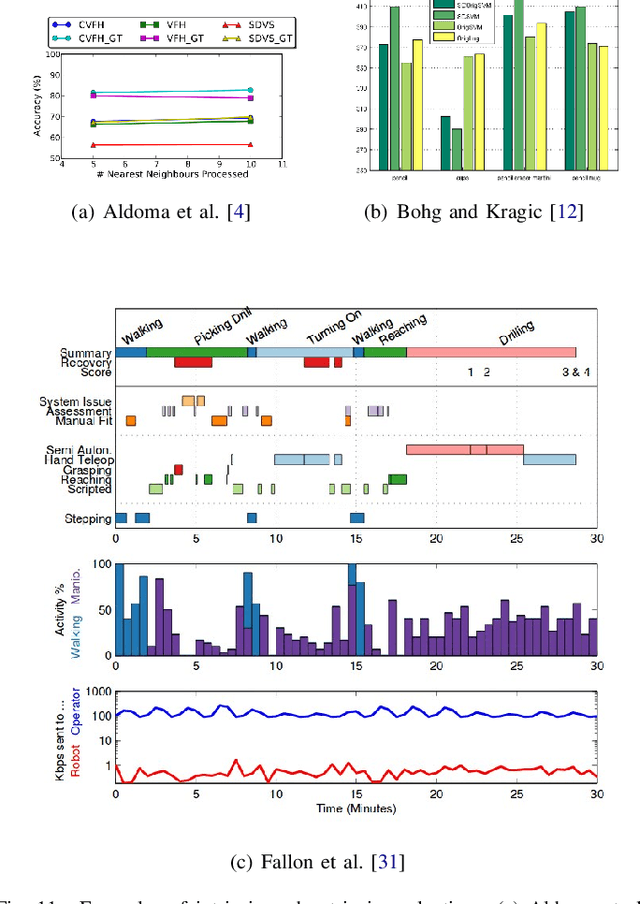

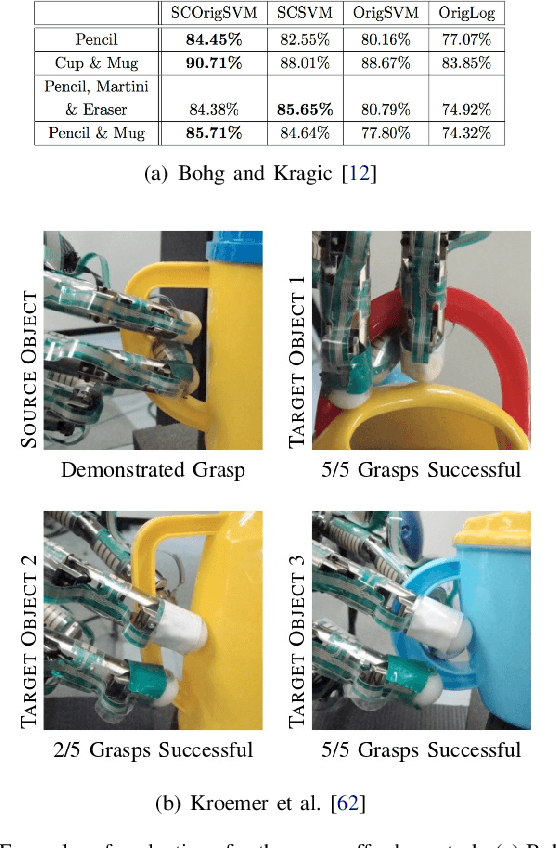

Affordances in Robotic Tasks -- A Survey

Apr 15, 2020

Abstract: Affordances are key attributes of what must be perceived by an autonomous robotic agent in order to effectively interact with novel objects. Historically, the concept derives from the literature in psychology and cognitive science, where affordances are discussed in a way that makes it hard for the definition to be directly transferred to computational specifications useful for robots. This review article is focused specifically on robotics, so we discuss the related literature from this perspective. In this survey, we classify the literature and try to find common ground amongst different approaches with a view to application in robotics. We propose a categorisation based on the level of prior knowledge that is assumed to build the relationship among different affordance components that matter for a particular robotic task. We also identify areas for future improvement and discuss possible directions that are likely to be fruitful in terms of impact on robotics practice.
