Pedro Patron

MIRIAM: A Multimodal Chat-Based Interface for Autonomous Systems

Mar 06, 2018

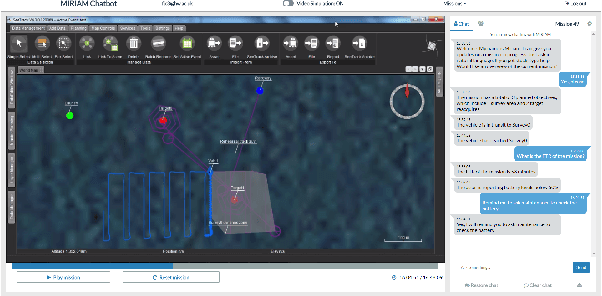

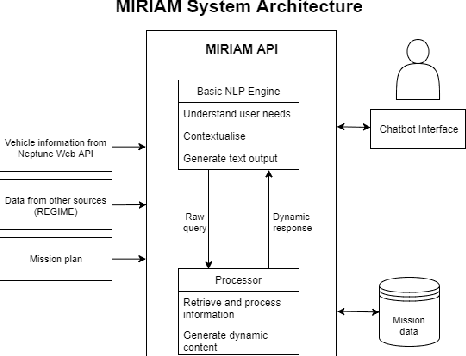

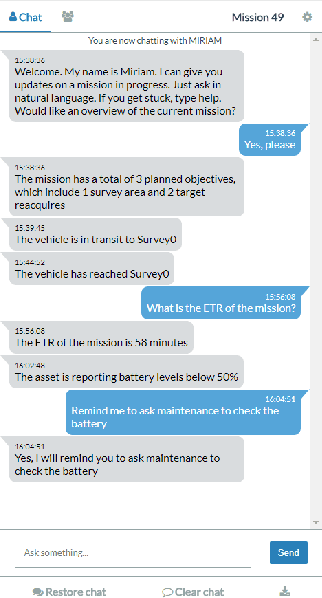

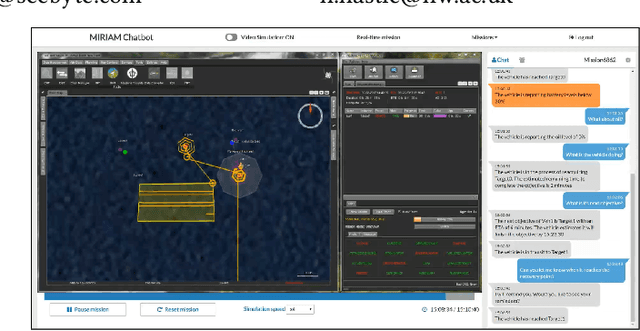

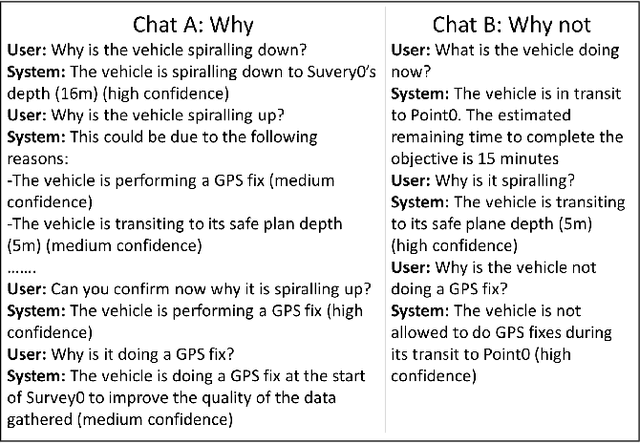

Abstract: We present MIRIAM (Multimodal Intelligent inteRactIon for Autonomous systeMs), a multimodal interface that supports situation awareness of autonomous vehicles through chat-based interaction. The user can chat about the vehicle's plan, objectives, previous activities and mission progress. The system is mixed-initiative in that it proactively sends messages about key events, such as fault warnings. We will demonstrate MIRIAM using SeeByte's SeeTrack command and control interface and Neptune autonomy simulator.

Explain Yourself: A Natural Language Interface for Scrutable Autonomous Robots

Mar 06, 2018

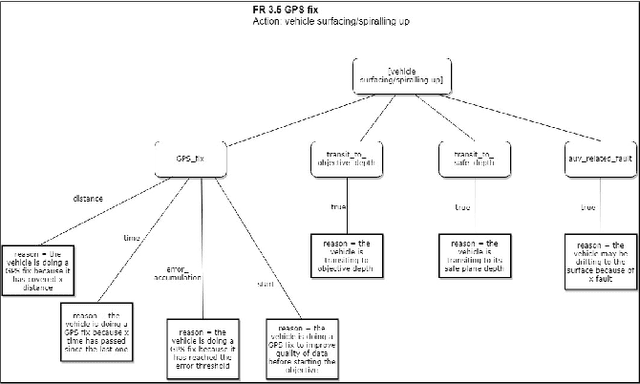

Abstract: Autonomous systems in remote locations have a high degree of autonomy, and there is a need to explain what they are doing and why in order to increase transparency and maintain trust. Here, we describe a natural language chat interface that enables the user to query vehicle behaviour. We obtain an interpretable model of autonomy by having an expert 'speak out loud' and provide explanations during a mission. This approach is agnostic to the type of autonomy model and, because the expert and the operator are from the same user group, we predict that these explanations will align well with the operator's mental model, increase transparency and assist with operator training.
