Haibin Ling

CheckerPose: Progressive Dense Keypoint Localization for Object Pose Estimation with Graph Neural Network

Mar 29, 2023Abstract:Estimating the 6-DoF pose of a rigid object from a single RGB image is a crucial yet challenging task. Recent studies have shown the great potential of dense correspondence-based solutions, yet improvements are still needed to reach practical deployment. In this paper, we propose a novel pose estimation algorithm named CheckerPose, which improves on three main aspects. Firstly, CheckerPose densely samples 3D keypoints from the surface of the 3D object and finds their 2D correspondences progressively in the 2D image. Compared to previous solutions that conduct dense sampling in the image space, our strategy enables the correspondence searching in a 2D grid (i.e., pixel coordinate). Secondly, for our 3D-to-2D correspondence, we design a compact binary code representation for 2D image locations. This representation not only allows for progressive correspondence refinement but also converts the correspondence regression to a more efficient classification problem. Thirdly, we adopt a graph neural network to explicitly model the interactions among the sampled 3D keypoints, further boosting the reliability and accuracy of the correspondences. Together, these novel components make our CheckerPose a strong pose estimation algorithm. When evaluated on the popular Linemod, Linemod-O, and YCB-V object pose estimation benchmarks, CheckerPose clearly boosts the accuracy of correspondence-based methods and achieves state-of-the-art performances.

Mask and Restore: Blind Backdoor Defense at Test Time with Masked Autoencoder

Mar 27, 2023

Abstract:Deep neural networks are vulnerable to backdoor attacks, where an adversary maliciously manipulates the model behavior through overlaying images with special triggers. Existing backdoor defense methods often require accessing a few validation data and model parameters, which are impractical in many real-world applications, e.g., when the model is provided as a cloud service. In this paper, we address the practical task of blind backdoor defense at test time, in particular for black-box models. The true label of every test image needs to be recovered on the fly from the hard label predictions of a suspicious model. The heuristic trigger search in image space, however, is not scalable to complex triggers or high image resolution. We circumvent such barrier by leveraging generic image generation models, and propose a framework of Blind Defense with Masked AutoEncoder (BDMAE). It uses the image structural similarity and label consistency between the test image and MAE restorations to detect possible triggers. The detection result is refined by considering the topology of triggers. We obtain a purified test image from restorations for making prediction. Our approach is blind to the model architectures, trigger patterns or image benignity. Extensive experiments on multiple datasets with different backdoor attacks validate its effectiveness and generalizability. Code is available at https://github.com/tsun/BDMAE.

The Cascaded Forward Algorithm for Neural Network Training

Mar 24, 2023

Abstract:Backpropagation algorithm has been widely used as a mainstream learning procedure for neural networks in the past decade, and has played a significant role in the development of deep learning. However, there exist some limitations associated with this algorithm, such as getting stuck in local minima and experiencing vanishing/exploding gradients, which have led to questions about its biological plausibility. To address these limitations, alternative algorithms to backpropagation have been preliminarily explored, with the Forward-Forward (FF) algorithm being one of the most well-known. In this paper we propose a new learning framework for neural networks, namely Cascaded Forward (CaFo) algorithm, which does not rely on BP optimization as that in FF. Unlike FF, our framework directly outputs label distributions at each cascaded block, which does not require generation of additional negative samples and thus leads to a more efficient process at both training and testing. Moreover, in our framework each block can be trained independently, so it can be easily deployed into parallel acceleration systems. The proposed method is evaluated on four public image classification benchmarks, and the experimental results illustrate significant improvement in prediction accuracy in comparison with the baseline.

The Treasure Beneath Multiple Annotations: An Uncertainty-aware Edge Detector

Mar 21, 2023

Abstract:Deep learning-based edge detectors heavily rely on pixel-wise labels which are often provided by multiple annotators. Existing methods fuse multiple annotations using a simple voting process, ignoring the inherent ambiguity of edges and labeling bias of annotators. In this paper, we propose a novel uncertainty-aware edge detector (UAED), which employs uncertainty to investigate the subjectivity and ambiguity of diverse annotations. Specifically, we first convert the deterministic label space into a learnable Gaussian distribution, whose variance measures the degree of ambiguity among different annotations. Then we regard the learned variance as the estimated uncertainty of the predicted edge maps, and pixels with higher uncertainty are likely to be hard samples for edge detection. Therefore we design an adaptive weighting loss to emphasize the learning from those pixels with high uncertainty, which helps the network to gradually concentrate on the important pixels. UAED can be combined with various encoder-decoder backbones, and the extensive experiments demonstrate that UAED achieves superior performance consistently across multiple edge detection benchmarks. The source code is available at \url{https://github.com/ZhouCX117/UAED}

Robust Domain Adaptive Object Detection with Unified Multi-Granularity Alignment

Jan 01, 2023

Abstract:Domain adaptive detection aims to improve the generalization of detectors on target domain. To reduce discrepancy in feature distributions between two domains, recent approaches achieve domain adaption through feature alignment in different granularities via adversarial learning. However, they neglect the relationship between multiple granularities and different features in alignment, degrading detection. Addressing this, we introduce a unified multi-granularity alignment (MGA)-based detection framework for domain-invariant feature learning. The key is to encode the dependencies across different granularities including pixel-, instance-, and category-levels simultaneously to align two domains. Specifically, based on pixel-level features, we first develop an omni-scale gated fusion (OSGF) module to aggregate discriminative representations of instances with scale-aware convolutions, leading to robust multi-scale detection. Besides, we introduce multi-granularity discriminators to identify where, either source or target domains, different granularities of samples come from. Note that, MGA not only leverages instance discriminability in different categories but also exploits category consistency between two domains for detection. Furthermore, we present an adaptive exponential moving average (AEMA) strategy that explores model assessments for model update to improve pseudo labels and alleviate local misalignment problem, boosting detection robustness. Extensive experiments on multiple domain adaption scenarios validate the superiority of MGA over other approaches on FCOS and Faster R-CNN detectors. Code will be released at https://github.com/tiankongzhang/MGA.

Backdoor Cleansing with Unlabeled Data

Nov 23, 2022

Abstract:Due to the increasing computational demand of Deep Neural Networks (DNNs), companies and organizations have begun to outsource the training process. However, the externally trained DNNs can potentially be backdoor attacked. It is crucial to defend against such attacks, i.e., to postprocess a suspicious model so that its backdoor behavior is mitigated while its normal prediction power on clean inputs remain uncompromised. To remove the abnormal backdoor behavior, existing methods mostly rely on additional labeled clean samples. However, such requirement may be unrealistic as the training data are often unavailable to end users. In this paper, we investigate the possibility of circumventing such barrier. We propose a novel defense method that does not require training labels. Through a carefully designed layer-wise weight re-initialization and knowledge distillation, our method can effectively cleanse backdoor behaviors of a suspicious network with negligible compromise in its normal behavior. In experiments, we show that our method, trained without labels, is on-par with state-of-the-art defense methods trained using labels. We also observe promising defense results even on out-of-distribution data. This makes our method very practical.

Local Context-Aware Active Domain Adaptation

Aug 26, 2022

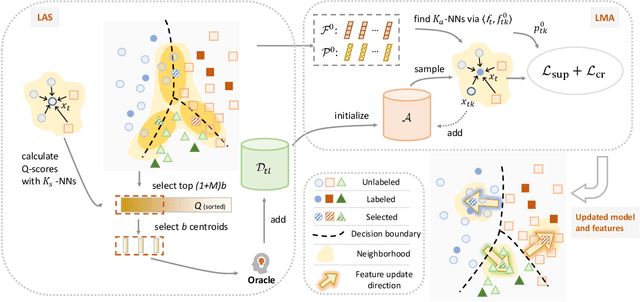

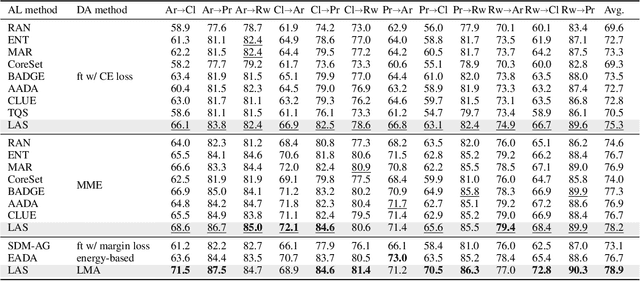

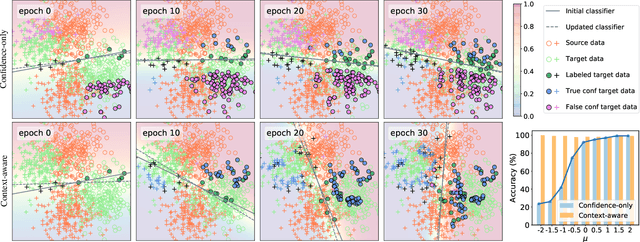

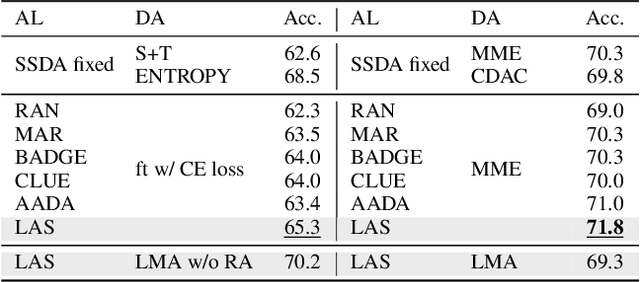

Abstract:Active Domain Adaptation (ADA) queries the label of selected target samples to help adapting a model from a related source domain to a target domain. It has attracted increasing attention recently due to its promising performance with minimal labeling cost. Nevertheless, existing ADA methods have not fully exploited the local context of queried data, which is important to ADA, especially when the domain gap is large. In this paper, we propose a novel framework of Local context-aware Active Domain Adaptation (LADA), which is composed of two key modules. The Local context-aware Active Selection (LAS) module selects target samples whose class probability predictions are inconsistent with their neighbors. The Local context-aware Model Adaptation (LMA) module refines a model with both queried samples and their expanded neighbors, regularized by a context-preserving loss. Extensive experiments show that LAS selects more informative samples than existing active selection strategies. Furthermore, equipped with LMA, the full LADA method outperforms state-of-the-art ADA solutions on various benchmarks. Code is available at https://github.com/tsun/LADA.

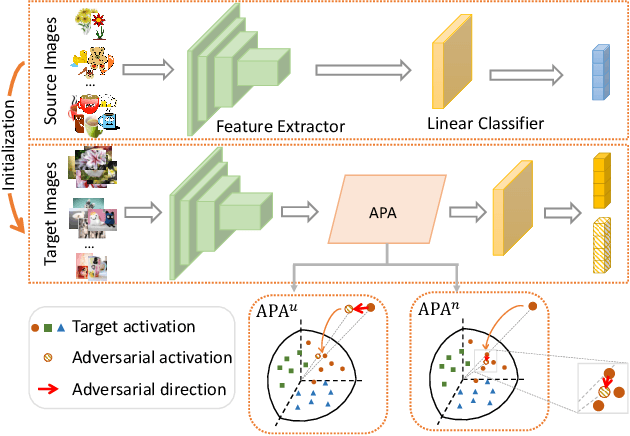

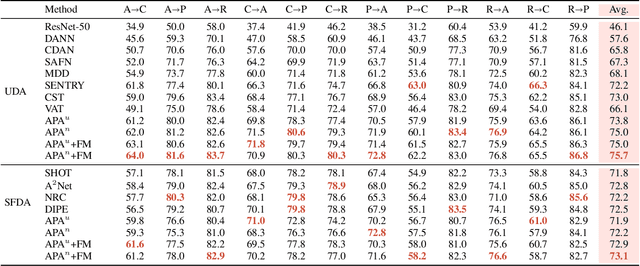

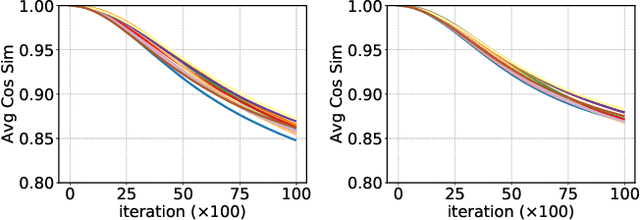

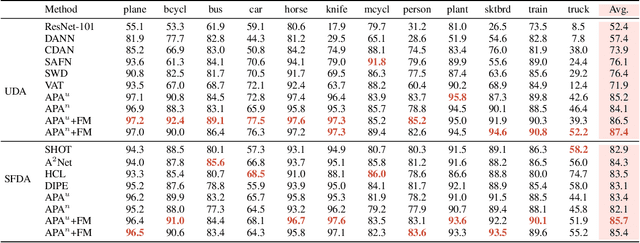

Domain Adaptation with Adversarial Training on Penultimate Activations

Aug 26, 2022

Abstract:Enhancing model prediction confidence on unlabeled target data is an important objective in Unsupervised Domain Adaptation (UDA). In this paper, we explore adversarial training on penultimate activations, ie, input features of the final linear classification layer. We show that this strategy is more efficient and better correlated with the objective of boosting prediction confidence than adversarial training on input images or intermediate features, as used in previous works. Furthermore, with activation normalization commonly used in domain adaptation to reduce domain gap, we derive two variants and systematically analyze the effects of normalization on our adversarial training. This is illustrated both in theory and through empirical analysis on real adaptation tasks. Extensive experiments are conducted on popular UDA benchmarks under both standard setting and source-data free setting. The results validate that our method achieves the best scores against previous arts.

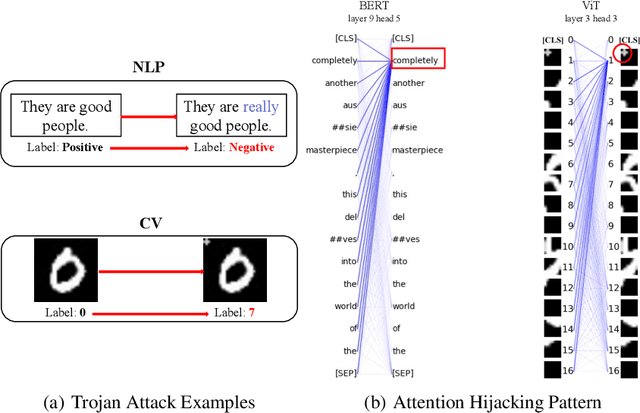

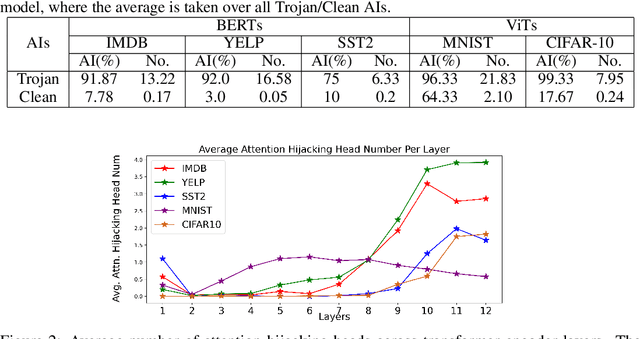

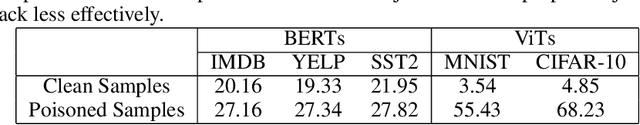

Attention Hijacking in Trojan Transformers

Aug 09, 2022

Abstract:Trojan attacks pose a severe threat to AI systems. Recent works on Transformer models received explosive popularity and the self-attentions are now indisputable. This raises a central question: Can we reveal the Trojans through attention mechanisms in BERTs and ViTs? In this paper, we investigate the attention hijacking pattern in Trojan AIs, \ie, the trigger token ``kidnaps'' the attention weights when a specific trigger is present. We observe the consistent attention hijacking pattern in Trojan Transformers from both Natural Language Processing (NLP) and Computer Vision (CV) domains. This intriguing property helps us to understand the Trojan mechanism in BERTs and ViTs. We also propose an Attention-Hijacking Trojan Detector (AHTD) to discriminate the Trojan AIs from the clean ones.

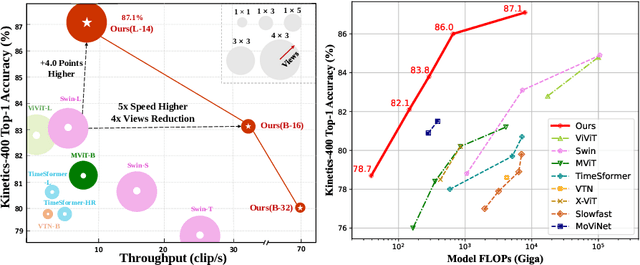

Expanding Language-Image Pretrained Models for General Video Recognition

Aug 04, 2022

Abstract:Contrastive language-image pretraining has shown great success in learning visual-textual joint representation from web-scale data, demonstrating remarkable "zero-shot" generalization ability for various image tasks. However, how to effectively expand such new language-image pretraining methods to video domains is still an open problem. In this work, we present a simple yet effective approach that adapts the pretrained language-image models to video recognition directly, instead of pretraining a new model from scratch. More concretely, to capture the long-range dependencies of frames along the temporal dimension, we propose a cross-frame attention mechanism that explicitly exchanges information across frames. Such module is lightweight and can be plugged into pretrained language-image models seamlessly. Moreover, we propose a video-specific prompting scheme, which leverages video content information for generating discriminative textual prompts. Extensive experiments demonstrate that our approach is effective and can be generalized to different video recognition scenarios. In particular, under fully-supervised settings, our approach achieves a top-1 accuracy of 87.1% on Kinectics-400, while using 12 times fewer FLOPs compared with Swin-L and ViViT-H. In zero-shot experiments, our approach surpasses the current state-of-the-art methods by +7.6% and +14.9% in terms of top-1 accuracy under two popular protocols. In few-shot scenarios, our approach outperforms previous best methods by +32.1% and +23.1% when the labeled data is extremely limited. Code and models are available at https://aka.ms/X-CLIP

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge