H. Eric Tseng

Stochastic MPC with Multi-modal Predictions for Traffic Intersections

Sep 26, 2021

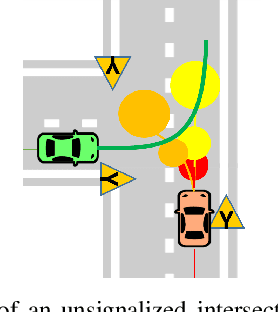

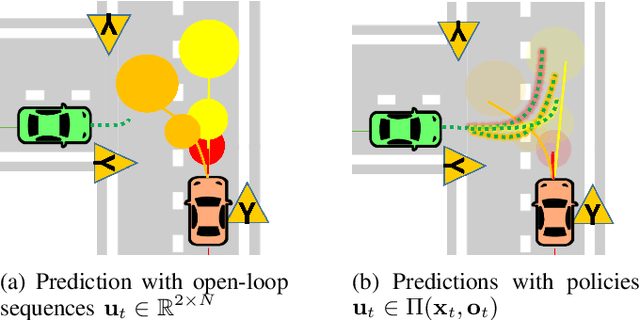

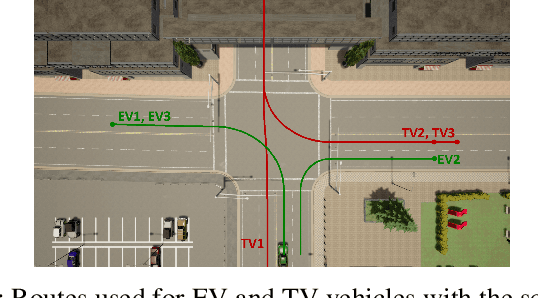

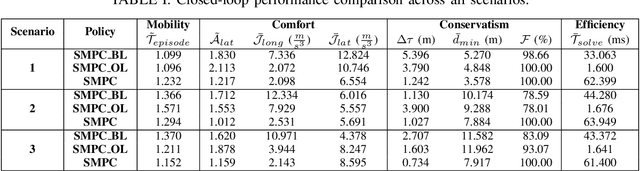

Abstract: We propose a Stochastic MPC (SMPC) formulation for autonomous driving at traffic intersections which incorporates multi-modal predictions of surrounding vehicles for collision avoidance constraints. The multi-modal predictions are obtained with Gaussian Mixture Models (GMM) and constraints are formulated as chance constraints. Our main theoretical contribution is an SMPC formulation that optimizes over a novel feedback policy class designed to exploit additional structure in the GMM predictions, and that is amenable to convex programming. The use of feedback policies for prediction is motivated by the need for reduced conservatism in handling multi-modal predictions of the surrounding vehicles, which are especially prevalent in traffic intersection scenarios. We evaluate our algorithm along axes of mobility, comfort, conservatism and computational efficiency at a simulated intersection in CARLA. Our simulations use a kinematic bicycle model and multi-modal predictions trained on a subset of the Lyft Level 5 prediction dataset. To demonstrate the impact of optimizing over feedback policies, we compare our algorithm with two SMPC baselines that handle multi-modal collision avoidance chance constraints by optimizing over open-loop sequences.

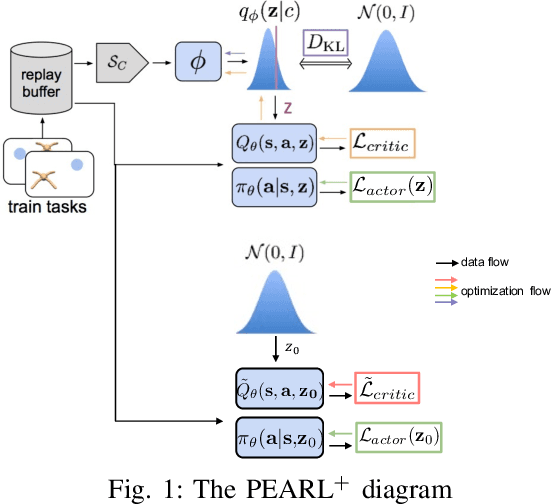

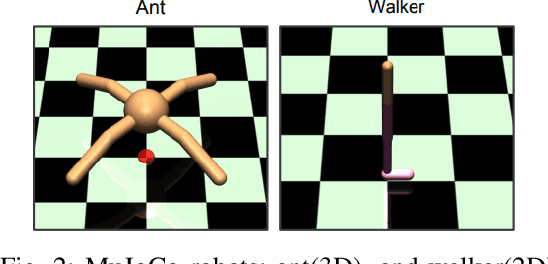

Prior Is All You Need to Improve the Robustness and Safety for the First Time Deployment of Meta RL

Aug 19, 2021

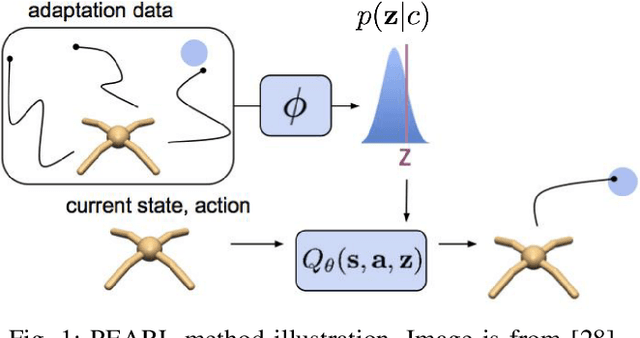

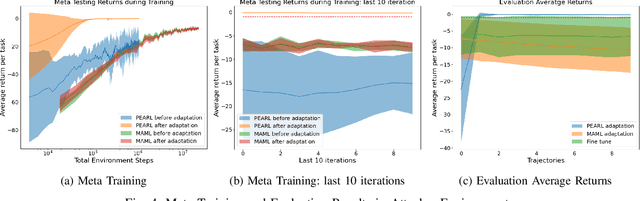

Abstract: The field of Meta Reinforcement Learning (Meta-RL) has seen substantial advancements recently. In particular, off-policy methods were developed to improve the data efficiency of Meta-RL techniques. *Probabilistic embeddings for actor-critic RL* (PEARL) is currently one of the leading approaches for multi-MDP adaptation problems. A major drawback of many existing Meta-RL methods, including PEARL, is that they do not explicitly consider the safety of the prior policy when it is exposed to a new task for the very first time. This is very important for some real-world applications, including field robots and Autonomous Vehicles (AVs). In this paper, we develop the PEARL PLUS (PEARL$^+$) algorithm, which optimizes the policy for both prior safety and posterior adaptation. Building on top of PEARL, our proposed PEARL$^+$ algorithm introduces a prior regularization term in the reward function and a new Q-network for recovering the state-action value under the prior context assumption, to improve the robustness and safety of the trained network when it is exposed to a new task for the first time. The performance of the PEARL$^+$ method is demonstrated by solving three safety-critical decision-making problems related to robots and AVs, including two MuJoCo benchmark problems. From the simulation experiments, we show that the safety of the prior policy is significantly improved compared to that of the original PEARL method.

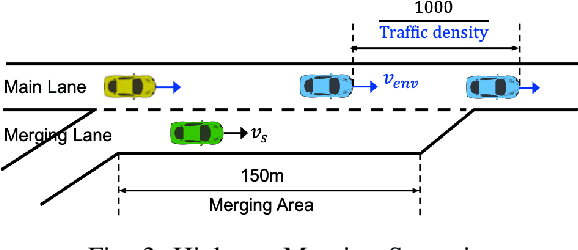

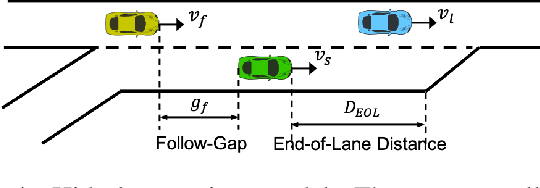

Quick Learner Automated Vehicle Adapting its Roadmanship to Varying Traffic Cultures with Meta Reinforcement Learning

Apr 18, 2021

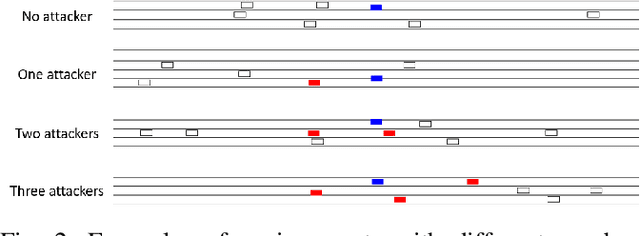

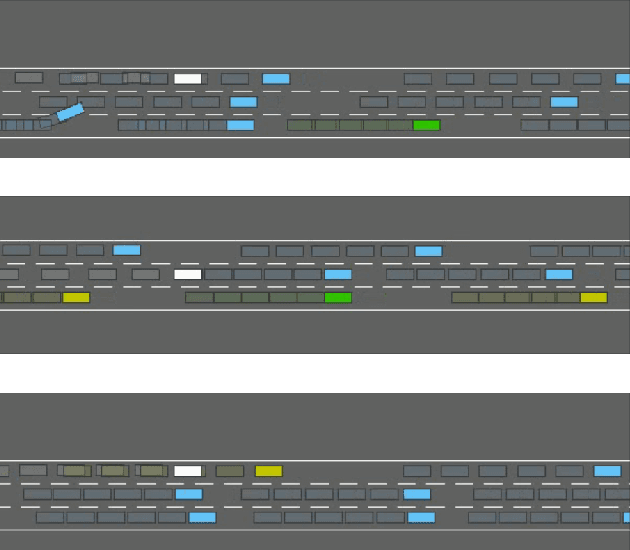

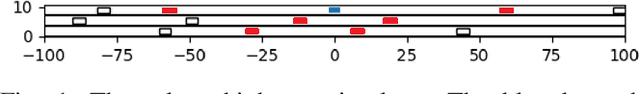

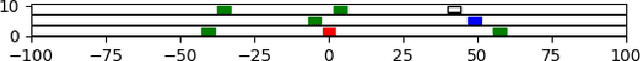

Abstract: It is essential for an automated vehicle in the field to perform discretionary lane changes with appropriate roadmanship - driving safely and efficiently without annoying or endangering other road users - under a wide range of traffic cultures and driving conditions. While deep reinforcement learning methods have excelled in recent years and been applied to automated vehicle driving policy, there are concerns about their capability to quickly adapt to unseen traffic with new environment dynamics. We formulate this challenge as a multi-Markov Decision Process (MDP) adaptation problem and develop Meta Reinforcement Learning (MRL) driving policies to showcase their quick learning capability. Two types of distribution variation in environments were designed and simulated to validate the fast adaptation capability of the resulting MRL driving policies, which significantly outperform a baseline RL policy.

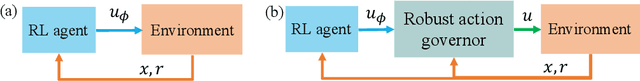

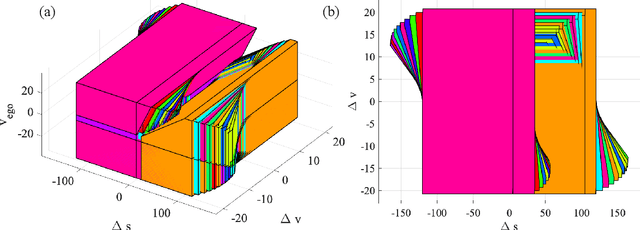

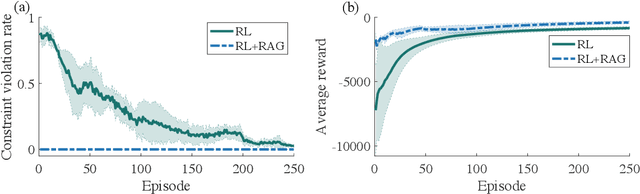

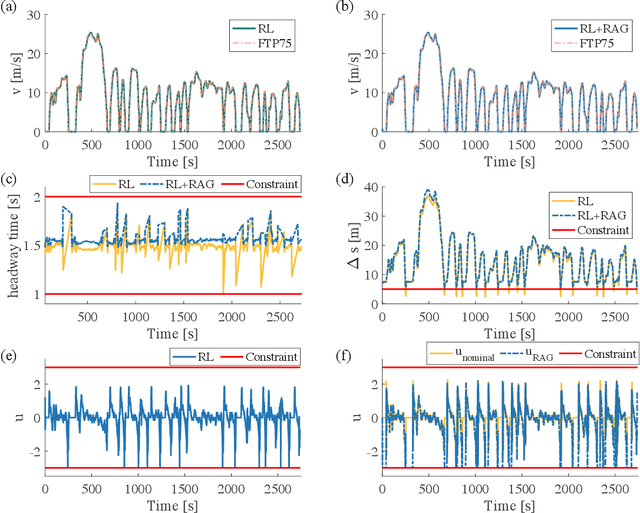

Safe Reinforcement Learning Using Robust Action Governor

Feb 21, 2021

Abstract: Reinforcement Learning (RL) is essentially a trial-and-error learning procedure which may cause unsafe behavior during the exploration-and-exploitation process. This hinders the application of RL to real-world control problems, especially those involving safety-critical systems. In this paper, we introduce a framework for safe RL that is based on the integration of an RL algorithm with an add-on safety supervision module, called the Robust Action Governor (RAG), which exploits set-theoretic techniques and online optimization to manage safety-related requirements during learning. We illustrate this proposed safe RL framework through an application to automotive adaptive cruise control.

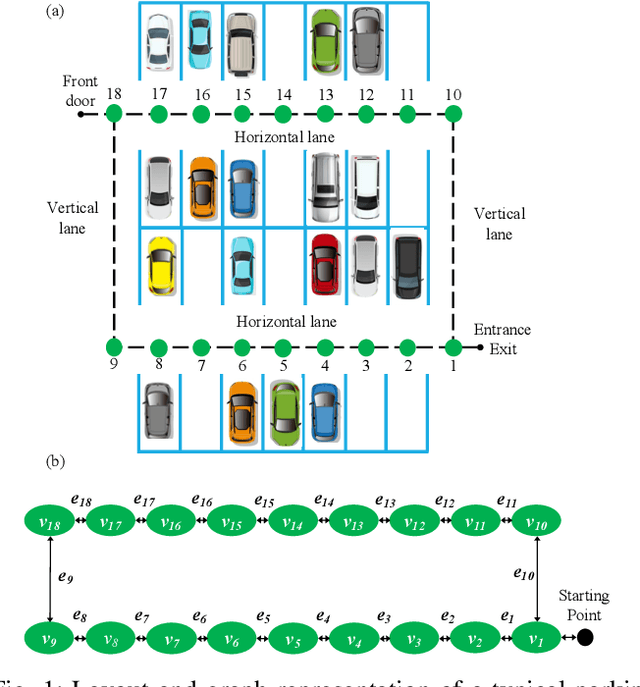

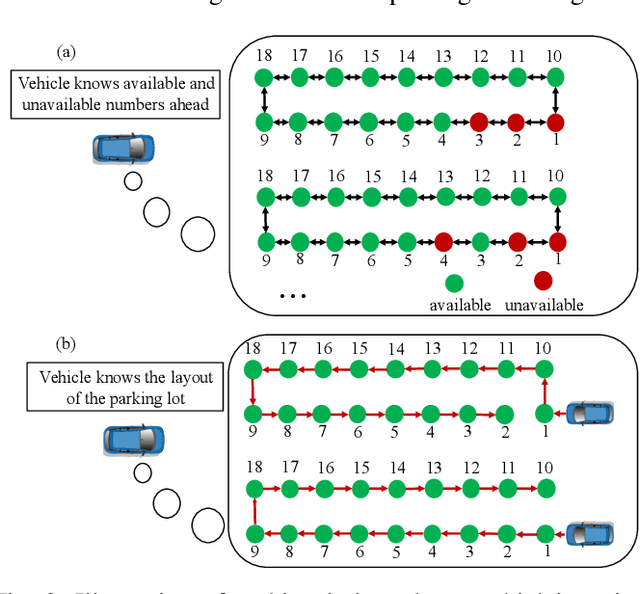

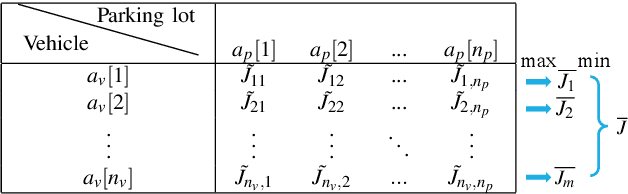

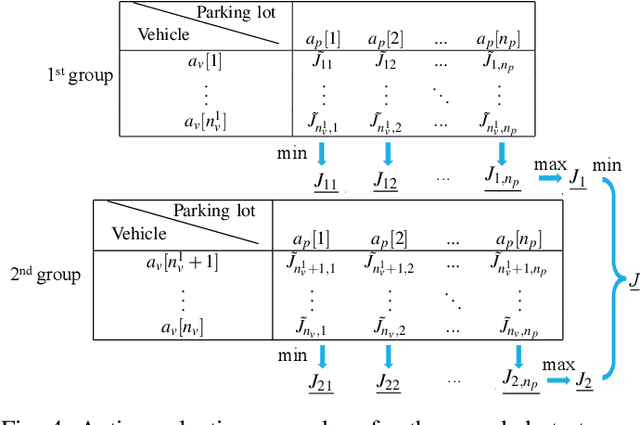

A Game Theoretic Approach for Parking Spot Search with Limited Parking Lot Information

May 11, 2020

Abstract: We propose a game theoretic approach to address the problem of searching for available parking spots in a parking lot and picking the "optimal" one to park. The approach exploits limited information provided by the parking lot, i.e., its layout and the current number of cars in it. Considering the fact that such information is or can be easily made available for many structured parking lots, the proposed approach can be applicable without requiring major updates to existing parking facilities. For large parking lots, a sampling-based strategy is integrated with the proposed approach to overcome the associated computational challenge. The proposed approach is compared against a state-of-the-art heuristic-based parking spot search strategy in the literature through simulation studies and demonstrates its advantage in terms of achieving lower cost function values.

Generating Socially Acceptable Perturbations for Efficient Evaluation of Autonomous Vehicles

Mar 18, 2020

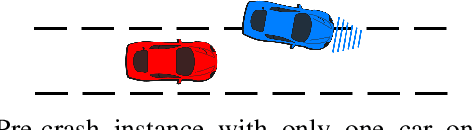

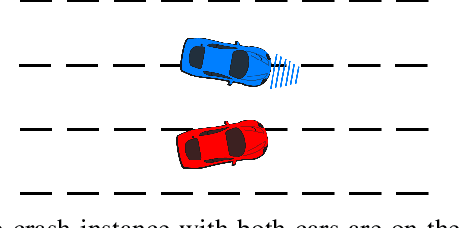

Abstract: Deep reinforcement learning methods have been widely used in recent years for autonomous vehicle decision-making. A key issue is that deep neural networks can be fragile to adversarial attacks or other unseen inputs. In this paper, we address the latter issue: we focus on generating socially acceptable perturbations (SAP), so that the autonomous vehicle (AV agent), instead of the challenging vehicle (attacker), is primarily responsible for the crash. In our process, one attacker is added to the environment and trained by deep reinforcement learning to generate the desired perturbation. The reward is designed so that the attacker aims to make the AV agent fail in a socially acceptable way. After training the attacker, the agent policy is evaluated in both the original naturalistic environment and the environment with one attacker. The results show that the agent policy which is safe in the naturalistic environment has many crashes in the perturbed environment.

Deep Reinforcement Learning with Enhanced Safety for Autonomous Highway Driving

Oct 28, 2019

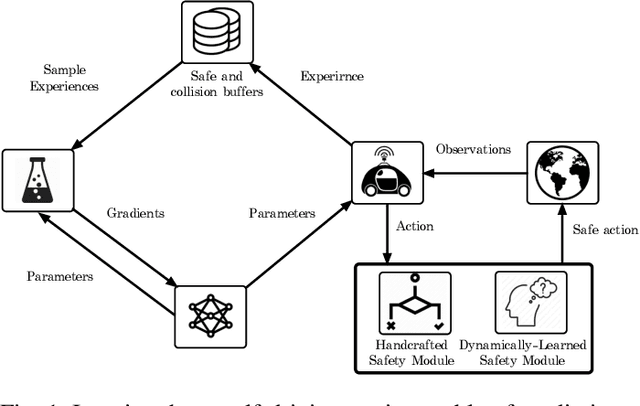

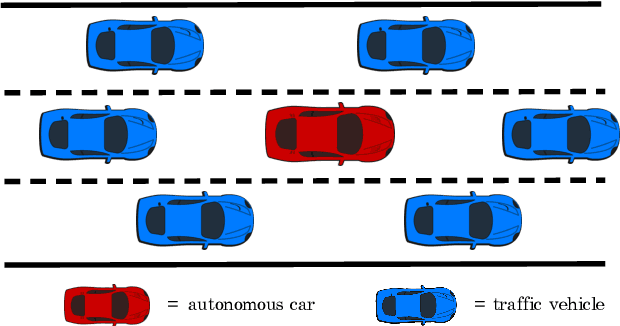

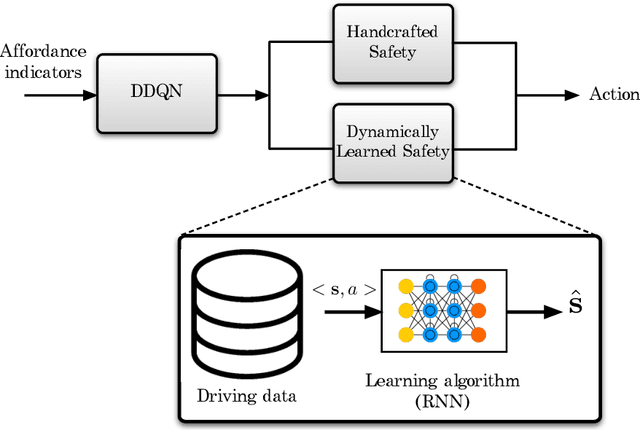

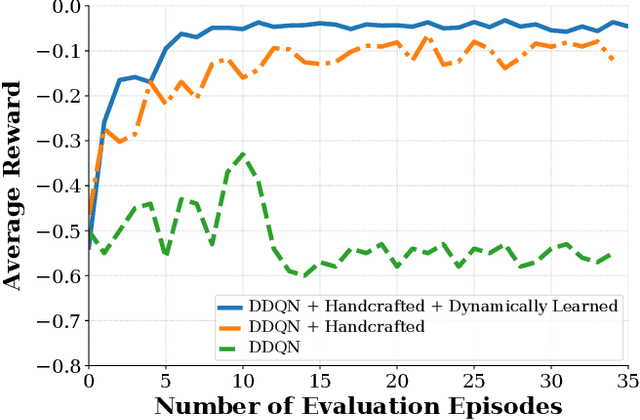

Abstract: In this paper, we present a safe deep reinforcement learning system for automated driving. The proposed framework leverages the merits of both rule-based and learning-based approaches for safety assurance. Our safety system consists of two modules, namely handcrafted safety and dynamically-learned safety. The handcrafted safety module is a heuristic safety rule based on common driving practice that ensures a minimum relative gap to a traffic vehicle. On the other hand, the dynamically-learned safety module is a data-driven safety rule that learns safety patterns from driving data. Specifically, the dynamically-learned safety module incorporates a model lookahead beyond the immediate reward of reinforcement learning to predict safety longer into the future. If one of the future states leads to a near-miss or collision, then a negative reward will be assigned to the reward function to avoid collision and accelerate the learning process. We demonstrate the capability of the proposed framework in a simulation environment with varying traffic density. Our results show the superior capabilities of the policy enhanced with the dynamically-learned safety module.
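
The lookahead rule in this abstract (a negative reward when any predicted future state is a near-miss or collision) can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `shaped_reward`, the use of predicted relative gaps as the safety signal, and the default threshold and penalty values are all assumptions made for illustration.

```python
from typing import Sequence


def shaped_reward(
    base_reward: float,
    predicted_gaps: Sequence[float],
    near_miss_gap: float = 5.0,   # hypothetical threshold, in meters
    penalty: float = -1.0,        # hypothetical negative reward
) -> float:
    """Return the RL reward, penalized if the lookahead predicts an unsafe state.

    predicted_gaps holds the relative gap to the traffic vehicle at each
    future step, as produced by some lookahead model (assumed to exist).
    """
    # A future state counts as unsafe if its gap drops below the threshold.
    unsafe = any(gap < near_miss_gap for gap in predicted_gaps)
    if unsafe:
        return base_reward + penalty
    return base_reward
```

For example, `shaped_reward(1.0, [10.0, 4.0])` applies the penalty because the second predicted gap falls below the assumed 5.0 threshold, while a rollout whose gaps all stay above it leaves the reward unchanged.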
