Guodong Long

Personalized Federated Learning With Structure

Mar 08, 2022

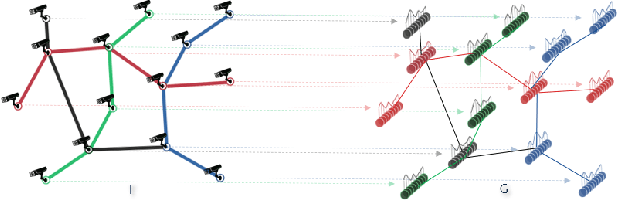

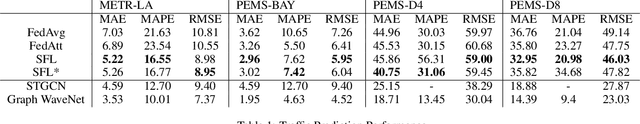

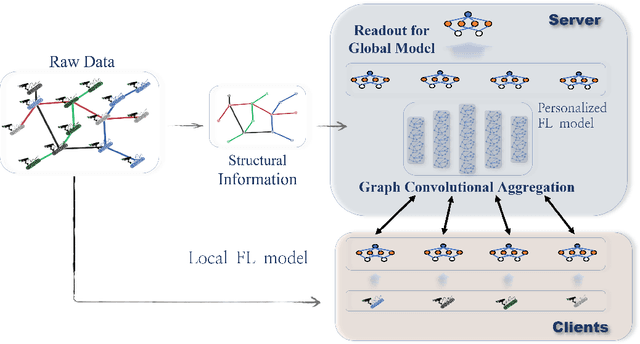

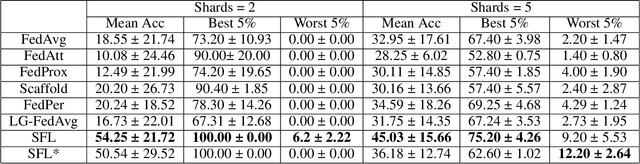

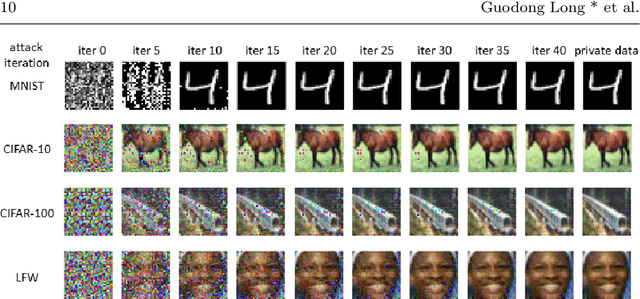

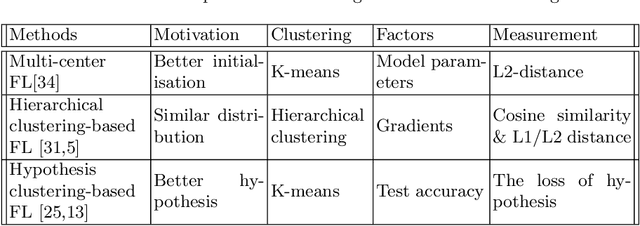

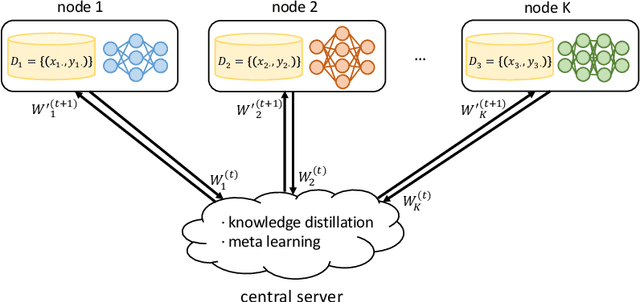

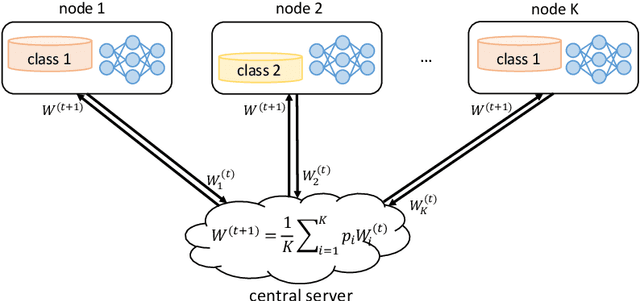

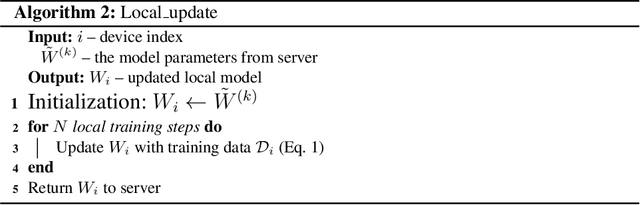

Abstract:Knowledge sharing and model personalization are two key components to impact the performance of personalized federated learning (PFL). Existing PFL methods simply treat knowledge sharing as an aggregation of all clients regardless of the hidden relations among them. This paper is to enhance the knowledge-sharing process in PFL by leveraging the structural information among clients. We propose a novel structured federated learning(SFL) framework to simultaneously learn the global model and personalized model using each client's local relations with others and its private dataset. This proposed framework has been formulated to a new optimization problem to model the complex relationship among personalized models and structural topology information into a unified framework. Moreover, in contrast to a pre-defined structure, our framework could be further enhanced by adding a structure learning component to automatically learn the structure using the similarities between clients' models' parameters. By conducting extensive experiments, we first demonstrate how federated learning can be benefited by introducing structural information into the server aggregation process with a real-world dataset, and then the effectiveness of the proposed method has been demonstrated in varying degrees of data non-iid settings.

On the Convergence of Clustered Federated Learning

Feb 13, 2022

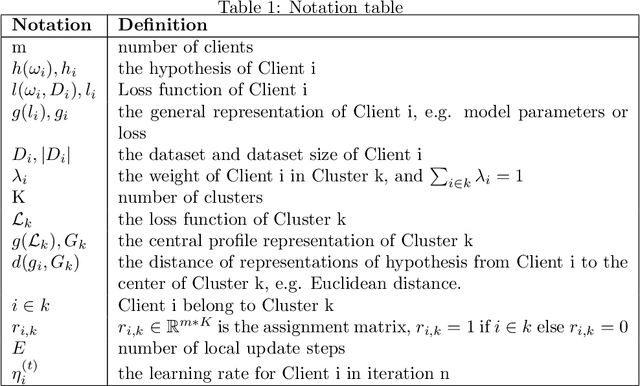

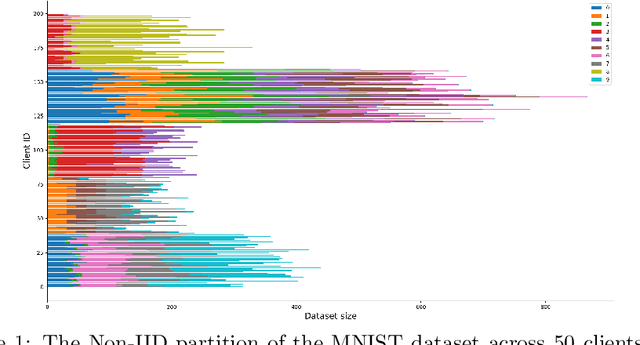

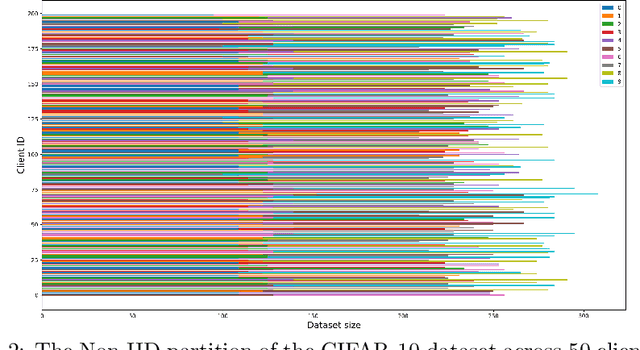

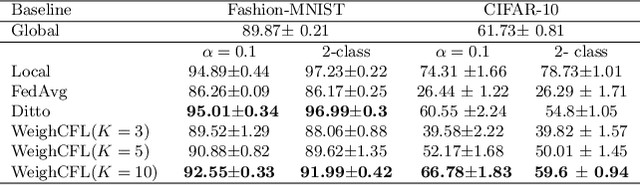

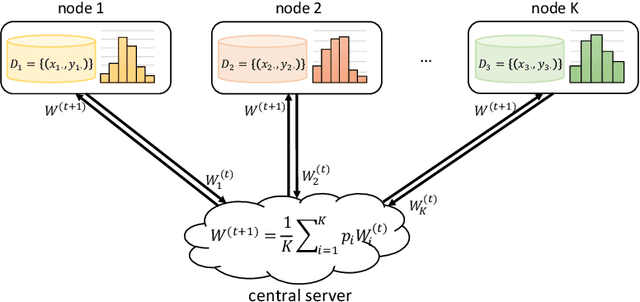

Abstract:In a federated learning system, the clients, e.g. mobile devices and organization participants, usually have different personal preferences or behavior patterns, namely Non-IID data problems across clients. Clustered federated learning is to group users into different clusters that the clients in the same group will share the same or similar behavior patterns that are to satisfy the IID data assumption for most traditional machine learning algorithms. Most of the existing clustering methods in FL treat every client equally that ignores the different importance contributions among clients. This paper proposes a novel weighted client-based clustered FL algorithm to leverage the client's group and each client in a unified optimization framework. Moreover, the paper proposes convergence analysis to the proposed clustered FL method. The experimental analysis has demonstrated the effectiveness of the proposed method.

EventBERT: A Pre-Trained Model for Event Correlation Reasoning

Oct 13, 2021

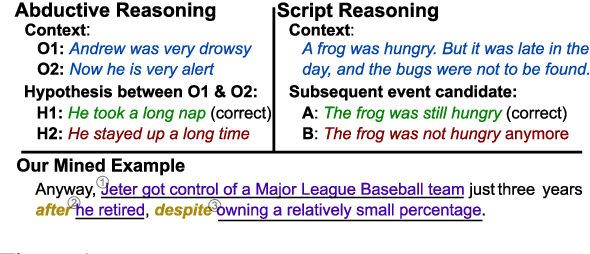

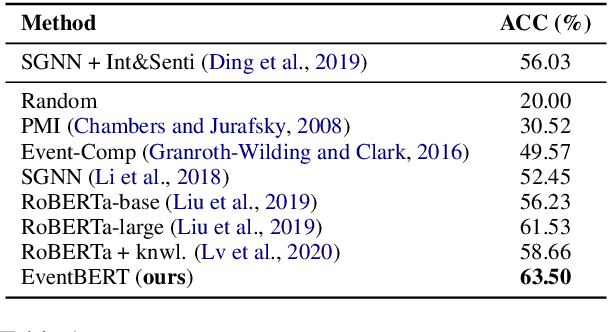

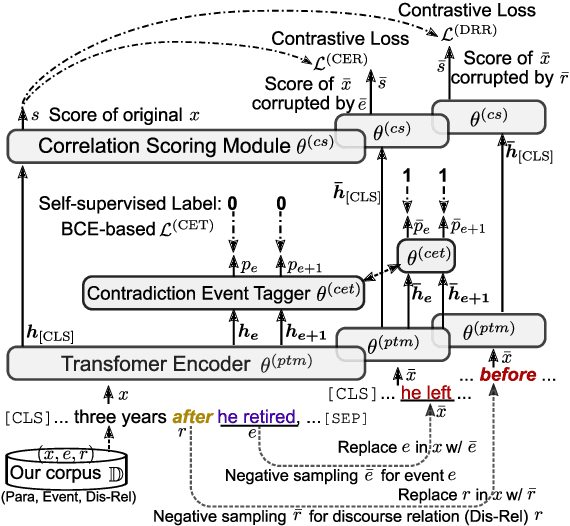

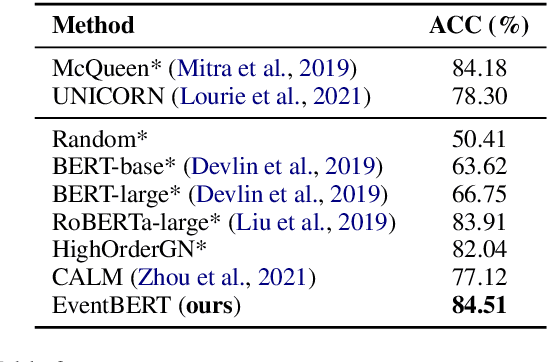

Abstract:Event correlation reasoning infers whether a natural language paragraph containing multiple events conforms to human common sense. For example, "Andrew was very drowsy, so he took a long nap, and now he is very alert" is sound and reasonable. In contrast, "Andrew was very drowsy, so he stayed up a long time, now he is very alert" does not comply with human common sense. Such reasoning capability is essential for many downstream tasks, such as script reasoning, abductive reasoning, narrative incoherence, story cloze test, etc. However, conducting event correlation reasoning is challenging due to a lack of large amounts of diverse event-based knowledge and difficulty in capturing correlation among multiple events. In this paper, we propose EventBERT, a pre-trained model to encapsulate eventuality knowledge from unlabeled text. Specifically, we collect a large volume of training examples by identifying natural language paragraphs that describe multiple correlated events and further extracting event spans in an unsupervised manner. We then propose three novel event- and correlation-based learning objectives to pre-train an event correlation model on our created training corpus. Empirical results show EventBERT outperforms strong baselines on four downstream tasks, and achieves SoTA results on most of them. Besides, it outperforms existing pre-trained models by a large margin, e.g., 6.5~23%, in zero-shot learning of these tasks.

Hierarchical Relation-Guided Type-Sentence Alignment for Long-Tail Relation Extraction with Distant Supervision

Sep 19, 2021

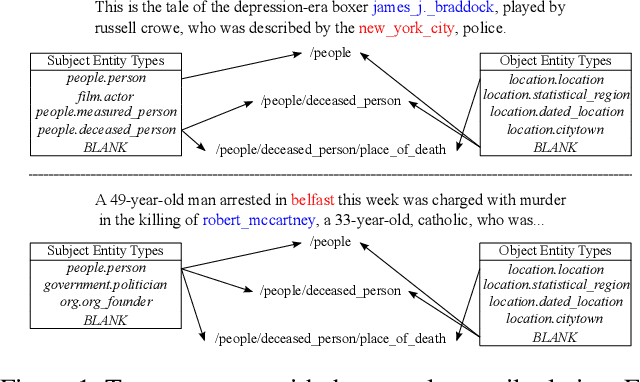

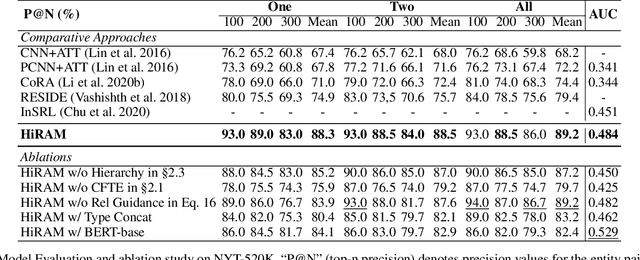

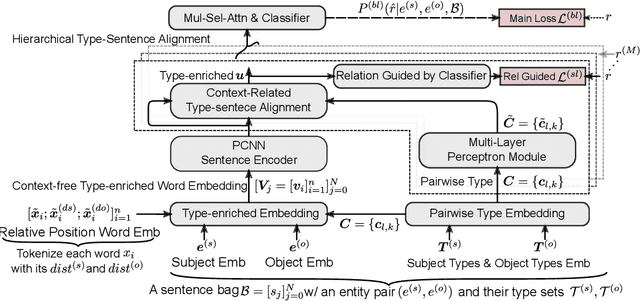

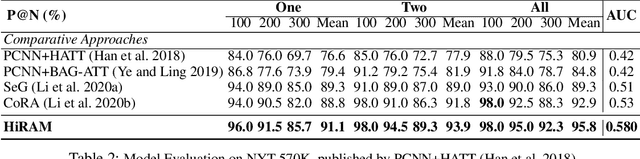

Abstract:Distant supervision uses triple facts in knowledge graphs to label a corpus for relation extraction, leading to wrong labeling and long-tail problems. Some works use the hierarchy of relations for knowledge transfer to long-tail relations. However, a coarse-grained relation often implies only an attribute (e.g., domain or topic) of the distant fact, making it hard to discriminate relations based solely on sentence semantics. One solution is resorting to entity types, but open questions remain about how to fully leverage the information of entity types and how to align multi-granular entity types with sentences. In this work, we propose a novel model to enrich distantly-supervised sentences with entity types. It consists of (1) a pairwise type-enriched sentence encoding module injecting both context-free and -related backgrounds to alleviate sentence-level wrong labeling, and (2) a hierarchical type-sentence alignment module enriching a sentence with the triple fact's basic attributes to support long-tail relations. Our model achieves new state-of-the-art results in overall and long-tail performance on benchmarks.

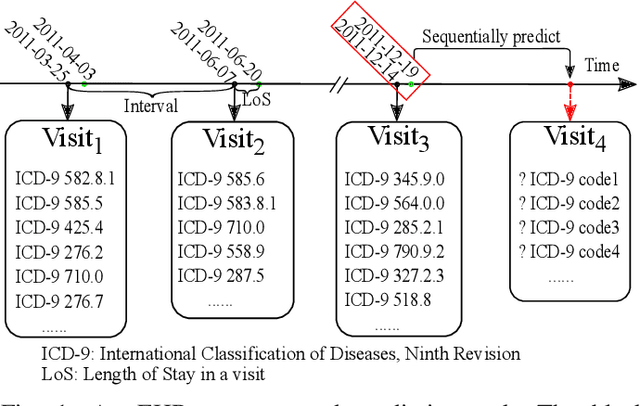

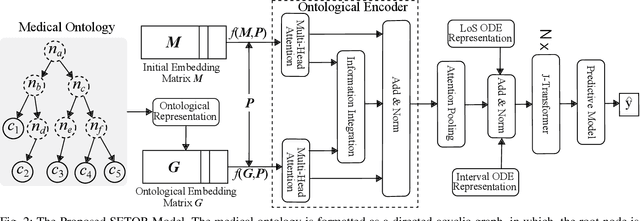

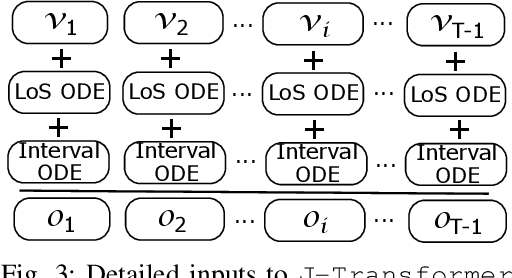

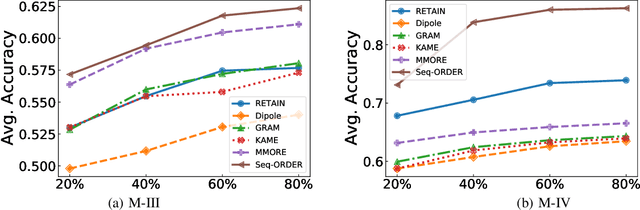

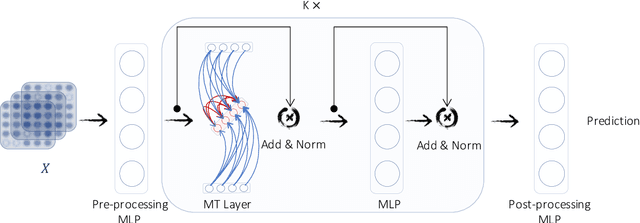

Sequential Diagnosis Prediction with Transformer and Ontological Representation

Sep 07, 2021

Abstract:Sequential diagnosis prediction on the Electronic Health Record (EHR) has been proven crucial for predictive analytics in the medical domain. EHR data, sequential records of a patient's interactions with healthcare systems, has numerous inherent characteristics of temporality, irregularity and data insufficiency. Some recent works train healthcare predictive models by making use of sequential information in EHR data, but they are vulnerable to irregular, temporal EHR data with the states of admission/discharge from hospital, and insufficient data. To mitigate this, we propose an end-to-end robust transformer-based model called SETOR, which exploits neural ordinary differential equation to handle both irregular intervals between a patient's visits with admitted timestamps and length of stay in each visit, to alleviate the limitation of insufficient data by integrating medical ontology, and to capture the dependencies between the patient's visits by employing multi-layer transformer blocks. Experiments conducted on two real-world healthcare datasets show that, our sequential diagnoses prediction model SETOR not only achieves better predictive results than previous state-of-the-art approaches, irrespective of sufficient or insufficient training data, but also derives more interpretable embeddings of medical codes. The experimental codes are available at the GitHub repository (https://github.com/Xueping/SETOR).

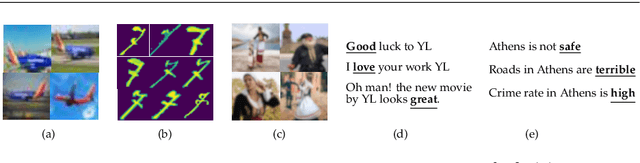

Eliminating Sentiment Bias for Aspect-Level Sentiment Classification with Unsupervised Opinion Extraction

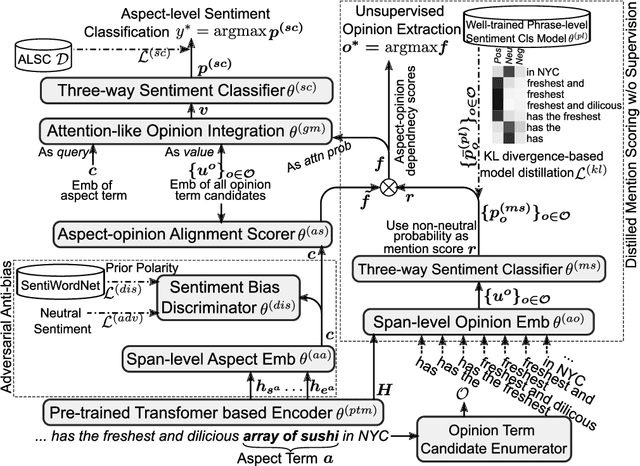

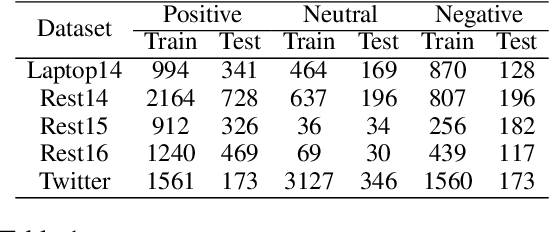

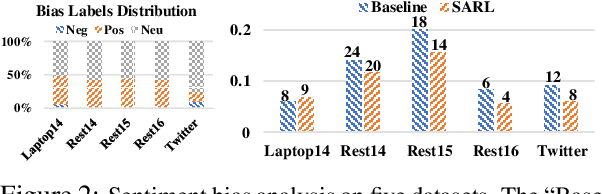

Sep 07, 2021

Abstract:Aspect-level sentiment classification (ALSC) aims at identifying the sentiment polarity of a specified aspect in a sentence. ALSC is a practical setting in aspect-based sentiment analysis due to no opinion term labeling needed, but it fails to interpret why a sentiment polarity is derived for the aspect. To address this problem, recent works fine-tune pre-trained Transformer encoders for ALSC to extract an aspect-centric dependency tree that can locate the opinion words. However, the induced opinion words only provide an intuitive cue far below human-level interpretability. Besides, the pre-trained encoder tends to internalize an aspect's intrinsic sentiment, causing sentiment bias and thus affecting model performance. In this paper, we propose a span-based anti-bias aspect representation learning framework. It first eliminates the sentiment bias in the aspect embedding by adversarial learning against aspects' prior sentiment. Then, it aligns the distilled opinion candidates with the aspect by span-based dependency modeling to highlight the interpretable opinion terms. Our method achieves new state-of-the-art performance on five benchmarks, with the capability of unsupervised opinion extraction.

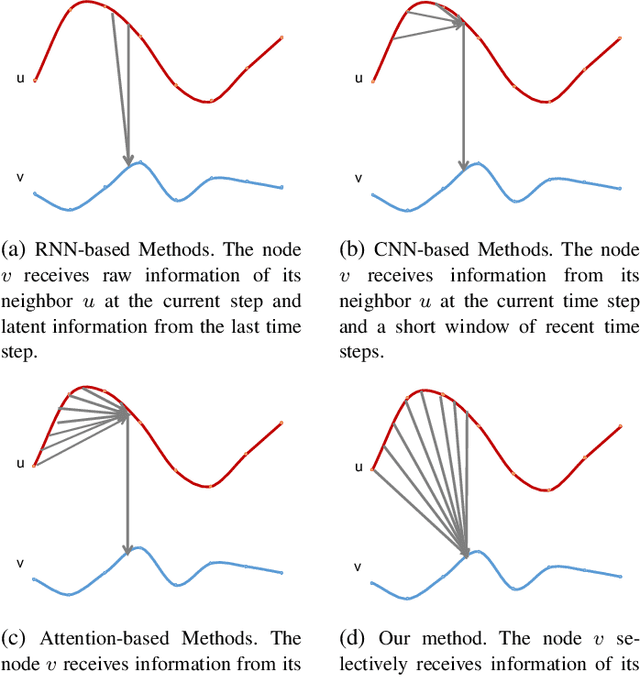

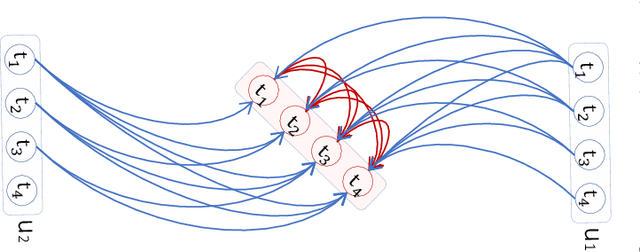

TraverseNet: Unifying Space and Time in Message Passing

Aug 25, 2021

Abstract:This paper aims to unify spatial dependency and temporal dependency in a non-Euclidean space while capturing the inner spatial-temporal dependencies for spatial-temporal graph data. For spatial-temporal attribute entities with topological structure, the space-time is consecutive and unified while each node's current status is influenced by its neighbors' past states over variant periods of each neighbor. Most spatial-temporal neural networks study spatial dependency and temporal correlation separately in processing, gravely impaired the space-time continuum, and ignore the fact that the neighbors' temporal dependency period for a node can be delayed and dynamic. To model this actual condition, we propose TraverseNet, a novel spatial-temporal graph neural network, viewing space and time as an inseparable whole, to mine spatial-temporal graphs while exploiting the evolving spatial-temporal dependencies for each node via message traverse mechanisms. Experiments with ablation and parameter studies have validated the effectiveness of the proposed TraverseNets, and the detailed implementation can be found from https://github.com/nnzhan/TraverseNet.

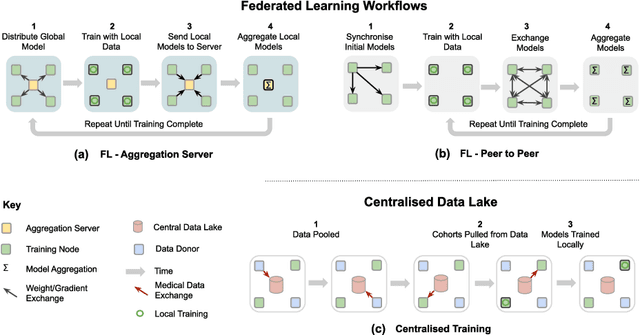

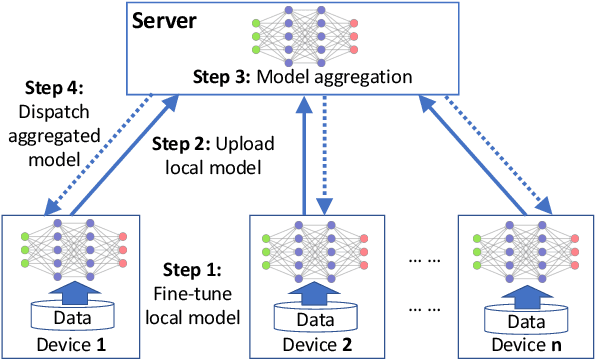

Federated Learning for Privacy-Preserving Open Innovation Future on Digital Health

Aug 24, 2021

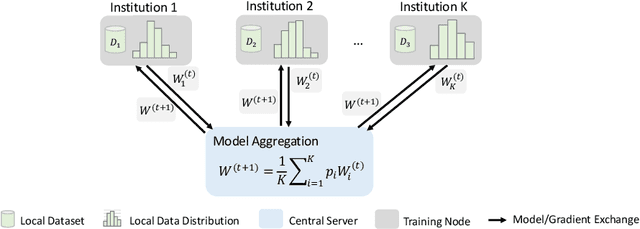

Abstract:Privacy protection is an ethical issue with broad concern in Artificial Intelligence (AI). Federated learning is a new machine learning paradigm to learn a shared model across users or organisations without direct access to the data. It has great potential to be the next-general AI model training framework that offers privacy protection and therefore has broad implications for the future of digital health and healthcare informatics. Implementing an open innovation framework in the healthcare industry, namely open health, is to enhance innovation and creative capability of health-related organisations by building a next-generation collaborative framework with partner organisations and the research community. In particular, this game-changing collaborative framework offers knowledge sharing from diverse data with a privacy-preserving. This chapter will discuss how federated learning can enable the development of an open health ecosystem with the support of AI. Existing challenges and solutions for federated learning will be discussed.

Federated Learning for Open Banking

Aug 24, 2021

Abstract:Open banking enables individual customers to own their banking data, which provides fundamental support for the boosting of a new ecosystem of data marketplaces and financial services. In the near future, it is foreseeable to have decentralized data ownership in the finance sector using federated learning. This is a just-in-time technology that can learn intelligent models in a decentralized training manner. The most attractive aspect of federated learning is its ability to decompose model training into a centralized server and distributed nodes without collecting private data. This kind of decomposed learning framework has great potential to protect users' privacy and sensitive data. Therefore, federated learning combines naturally with an open banking data marketplaces. This chapter will discuss the possible challenges for applying federated learning in the context of open banking, and the corresponding solutions have been explored as well.

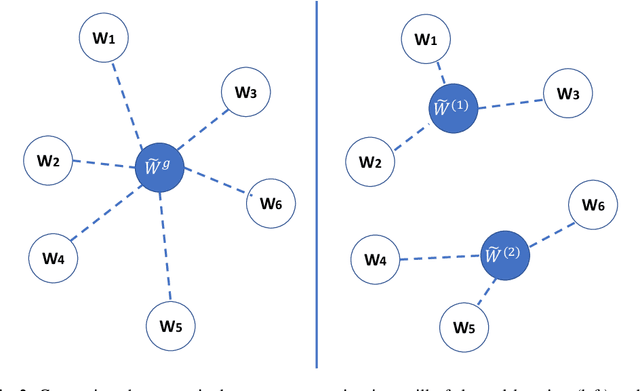

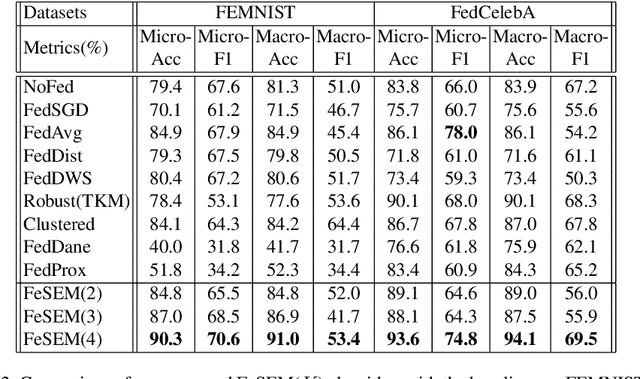

Multi-Center Federated Learning

Aug 21, 2021

Abstract:Federated learning (FL) can protect data privacy in distributed learning since it merely collects local gradients from users without access to their data. However, FL is fragile in the presence of heterogeneity that is commonly encountered in practical settings, e.g., non-IID data over different users. Existing FL approaches usually update a single global model to capture the shared knowledge of all users by aggregating their gradients, regardless of the discrepancy between their data distributions. By comparison, a mixture of multiple global models could capture the heterogeneity across various users if assigning the users to different global models (i.e., centers) in FL. To this end, we propose a novel multi-center aggregation mechanism . It learns multiple global models from data, and simultaneously derives the optimal matching between users and centers. We then formulate it as a bi-level optimization problem that can be efficiently solved by a stochastic expectation maximization (EM) algorithm. Experiments on multiple benchmark datasets of FL show that our method outperforms several popular FL competitors. The source code are open source on Github.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge