Fang Fang

Peking University, China

Corrected Samplers for Discrete Flow Models

Jan 30, 2026

Abstract: Discrete flow models (DFMs) have been proposed to learn the data distribution on a finite state space, offering a flexible framework as an alternative to discrete diffusion models. A line of recent work has studied samplers for discrete diffusion models, such as tau-leaping and the Euler solver. However, these samplers require a large number of iterations to control discretization error, since the transition rates are frozen in time and evaluated at the initial state within each time interval. Moreover, theoretical results for these samplers often require boundedness conditions on the transition rates or focus on a specific type of source distribution. To address these limitations, we establish non-asymptotic discretization error bounds for those samplers without any restriction on transition rates and source distributions, under the framework of discrete flow models. Furthermore, by analyzing a one-step lower bound of the Euler sampler, we propose two corrected samplers, the time-corrected sampler and the location-corrected sampler, which can reduce the discretization error of tau-leaping and the Euler solver with almost no additional computational cost. We rigorously show that the location-corrected sampler has a lower iteration complexity than existing parallel samplers. We validate the effectiveness of the proposed method by demonstrating improved generation quality and reduced inference time on both simulation and text-to-image generation tasks. Code can be found at https://github.com/WanZhengyan/Corrected-Samplers-for-Discrete-Flow-Models.

Time Travel Engine: A Shared Latent Chronological Manifold Enables Historical Navigation in Large Language Models

Jan 10, 2026

Abstract: Time functions as a fundamental dimension of human cognition, yet the mechanisms by which Large Language Models (LLMs) encode chronological progression remain opaque. We demonstrate that temporal information in their latent space is organized not as discrete clusters but as a continuous, traversable geometry. We introduce the Time Travel Engine (TTE), an interpretability-driven framework that projects diachronic linguistic patterns onto a shared chronological manifold. Unlike surface-level prompting, TTE directly modulates latent representations to induce coherent stylistic, lexical, and conceptual shifts aligned with target eras. By parameterizing diachronic evolution as a continuous manifold within the residual stream, TTE enables fluid navigation through period-specific "zeitgeists" while restricting access to future knowledge. Furthermore, experiments across diverse architectures reveal topological isomorphism between the temporal subspaces of Chinese and English, indicating that distinct languages share a universal geometric logic of historical evolution. These findings bridge historical linguistics with mechanistic interpretability, offering a novel paradigm for controlling temporal reasoning in neural networks.

Pinching Antennas in Blockage-Aware Environments: Modeling, Design, and Optimization

Jan 03, 2026

Abstract: Pinching-antenna (PA) systems have recently emerged as a promising member of the flexible-antenna family due to their ability to dynamically establish line-of-sight (LoS) links. While most existing studies assume ideal environments without obstacles, practical indoor deployments are often obstacle-rich, where LoS blockage significantly degrades performance. This paper investigates pinching-antenna systems in blockage-aware environments by developing a deterministic model for cylinder-shaped obstacles that precisely characterizes LoS conditions without relying on stochastic approximations. Based on this model, a special case is first studied where each PA serves a single user and can only be deployed at discrete positions along the waveguide. In this case, the waveguide-user assignment is obtained via the Hungarian algorithm, and PA positions are refined using a surrogate-assisted block-coordinate search. Then, a general case is considered where each PA serves all users and can be continuously placed along the waveguide. In this case, beamforming and PA positions are jointly optimized by a weighted minimum mean square error integrated deep deterministic policy gradient (WMMSE-DDPG) approach to address non-smooth LoS transitions. Simulation results demonstrate that the proposed algorithms significantly improve system throughput and LoS connectivity compared with benchmark methods. Moreover, the results reveal that pinching-antenna systems can effectively leverage obstacles to suppress co-channel interference, converting potential blockages into performance gains.

Robust and Secure Transmission for Movable-RIS Assisted ISAC with Imperfect Sense Estimation

Dec 23, 2025

Abstract: Reconfigurable intelligent surfaces (RISs) have been extensively applied in integrated sensing and communication (ISAC) systems due to the capability of enhancing physical layer security (PLS). However, conventional static RIS architectures lack the flexibility required for adaptive beam control in multi-user and multifunctional scenarios. To address this issue without introducing additional hardware complexity and power consumption, in this paper, we exploit a movable RIS (MRIS) architecture, which consists of a large fixed sub-surface and a smaller movable sub-surface that slides on the fixed sub-surface to achieve dynamic beam reconfiguration with static phase shifts. This paper investigates an MRIS-assisted ISAC system under imperfect sensing estimation, where dedicated radar signals serve as artificial noise to enhance secure transmission against potential eavesdroppers (Eves). The transmit beamforming vectors, MRIS phase shifts, and relative positions of the two sub-surfaces are jointly optimized to maximize the minimum secrecy rate, ensuring robust secrecy performance for the weakest user under the uncertainty of the Eves' channels. To handle the non-convexity, a convex bound is derived for the Eve channel uncertainty, and the S-procedure is employed to reformulate semi-infinite constraints as linear matrix inequalities. An efficient alternating optimization and penalty dual decomposition-based algorithm is developed. Simulation results demonstrate that the proposed MRIS architecture substantially improves secrecy performance, especially when only a small number of elements are allocated to the movable sub-surface.

From Essence to Defense: Adaptive Semantic-aware Watermarking for Embedding-as-a-Service Copyright Protection

Dec 18, 2025

Abstract: Benefiting from the superior capabilities of large language models in natural language understanding and generation, Embeddings-as-a-Service (EaaS) has emerged as a successful commercial paradigm on the web platform. However, prior studies have revealed that EaaS is vulnerable to imitation attacks. Existing methods protect the intellectual property of EaaS through watermarking techniques, but they all ignore the most important property of embeddings, namely semantics, resulting in limited harmlessness and stealthiness. To this end, we propose SemMark, a novel semantic-based watermarking paradigm for EaaS copyright protection. SemMark employs locality-sensitive hashing to partition the semantic space and inject semantic-aware watermarks into specific regions, ensuring that the watermark signals remain imperceptible and diverse. In addition, we introduce an adaptive watermark weight mechanism based on the local outlier factor to preserve the original embedding distribution. Furthermore, we propose Detect-Sampling and Dimensionality-Reduction attacks and construct four scenarios to evaluate the watermarking method. Extensive experiments are conducted on four popular NLP datasets, and SemMark achieves superior verifiability, diversity, stealthiness, and harmlessness.

DualGuard: Dual-stream Large Language Model Watermarking Defense against Paraphrase and Spoofing Attack

Dec 18, 2025

Abstract: With the rapid development of cloud-based services, large language models (LLMs) have become increasingly accessible through various web platforms. However, this accessibility has also led to growing risks of model abuse. LLM watermarking has emerged as an effective approach to mitigate such misuse and protect intellectual property. Existing watermarking algorithms, however, primarily focus on defending against paraphrase attacks while overlooking piggyback spoofing attacks, which can inject harmful content, compromise watermark reliability, and undermine trust in attribution. To address this limitation, we propose DualGuard, the first watermarking algorithm capable of defending against both paraphrase and spoofing attacks. DualGuard employs an adaptive dual-stream watermarking mechanism, in which two complementary watermark signals are dynamically injected based on the semantic content. This design enables DualGuard not only to detect but also to trace spoofing attacks, thereby ensuring reliable and trustworthy watermark detection. Extensive experiments conducted across multiple datasets and language models demonstrate that DualGuard achieves excellent detectability, robustness, traceability, and text quality, effectively advancing the state of LLM watermarking for real-world applications.

MetaGDPO: Alleviating Catastrophic Forgetting with Metacognitive Knowledge through Group Direct Preference Optimization

Nov 15, 2025

Abstract: Large Language Models demonstrate strong reasoning capabilities, which can be effectively compressed into smaller models. However, existing datasets and fine-tuning approaches still face challenges that lead to catastrophic forgetting, particularly for models smaller than 8B. First, most datasets ignore the relationship between training data knowledge and the model's inherent abilities, making it difficult to preserve prior knowledge. Second, conventional training objectives often fail to constrain inherent knowledge preservation, which can result in forgetting of previously learned skills. To address these issues, we propose a comprehensive solution that alleviates catastrophic forgetting from both the data and fine-tuning approach perspectives. On the data side, we construct a dataset of 5K instances that covers multiple reasoning tasks and incorporates metacognitive knowledge, making it more tolerant and effective for distillation into smaller models. We annotate the metacognitive knowledge required to solve each question and filter the data based on task knowledge and the model's inherent skills. On the training side, we introduce GDPO (Group Direct Preference Optimization), which is better suited for resource-limited scenarios and can efficiently approximate the performance of GRPO. Guided by the large model and by implicitly constraining the optimization path through a reference model, GDPO enables more effective knowledge transfer from the large model and constrains excessive parameter drift. Extensive experiments demonstrate that our approach significantly alleviates catastrophic forgetting and improves reasoning performance on smaller models.

MAD-Fact: A Multi-Agent Debate Framework for Long-Form Factuality Evaluation in LLMs

Oct 27, 2025

Abstract: The widespread adoption of Large Language Models (LLMs) raises critical concerns about the factual accuracy of their outputs, especially in high-risk domains such as biomedicine, law, and education. Existing evaluation methods for short texts often fail on long-form content due to complex reasoning chains, intertwined perspectives, and cumulative information. To address this, we propose a systematic approach integrating large-scale long-form datasets, multi-agent verification mechanisms, and weighted evaluation metrics. We construct LongHalluQA, a Chinese long-form factuality dataset, and develop MAD-Fact, a debate-based multi-agent verification system. We introduce a fact importance hierarchy to capture the varying significance of claims in long-form texts. Experiments on two benchmarks show that larger LLMs generally maintain higher factual consistency, while domestic models excel on Chinese content. Our work provides a structured framework for evaluating and enhancing factual reliability in long-form LLM outputs, guiding their safe deployment in sensitive domains.

Error Analysis of Discrete Flow with Generator Matching

Sep 26, 2025

Abstract: Discrete flow models offer a powerful framework for learning distributions over discrete state spaces and have demonstrated superior performance compared to the discrete diffusion model. However, their convergence properties and error analysis remain largely unexplored. In this work, we develop a unified framework grounded in stochastic calculus theory to systematically investigate the theoretical properties of discrete flow. Specifically, we derive the KL divergence of two path measures regarding two continuous-time Markov chains (CTMCs) with different transition rates by developing a novel Girsanov-type theorem, and provide a comprehensive analysis that encompasses the error arising from transition rate estimation and early stopping, where the first type of error has rarely been analyzed by existing works. Unlike discrete diffusion models, discrete flow incurs no truncation error caused by truncating the time horizon in the noising process. Building on generator matching and uniformization, we establish non-asymptotic error bounds for distribution estimation. Our results provide the first error analysis for discrete flow models.

Discrete Guidance Matching: Exact Guidance for Discrete Flow Matching

Sep 26, 2025

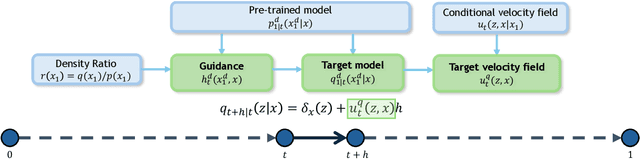

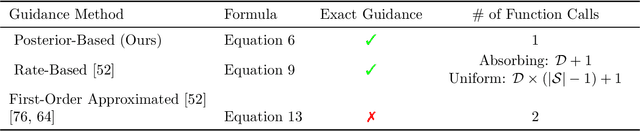

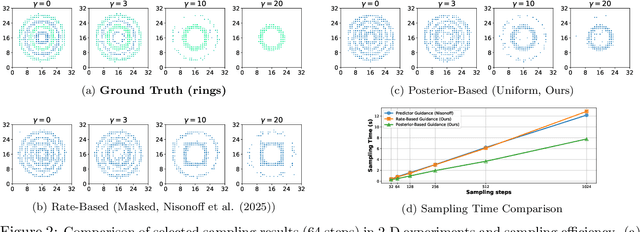

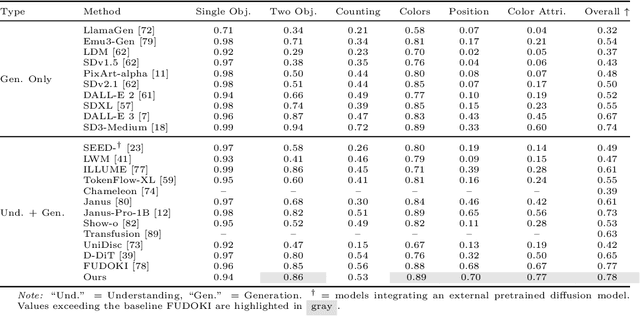

Abstract: Guidance provides a simple and effective framework for posterior sampling by steering the generation process towards the desired distribution. When modeling discrete data, existing approaches mostly focus on guidance with the first-order Taylor approximation to improve the sampling efficiency. However, such an approximation is inappropriate in discrete state spaces since the approximation error could be large. A novel guidance framework for discrete data is proposed to address this problem: we derive the exact transition rate for the desired distribution given a learned discrete flow matching model, leading to guidance that only requires a single forward pass in each sampling step, significantly improving efficiency. This unified framework is general enough to encompass existing guidance methods as special cases, and it can also be seamlessly applied to the masked diffusion model. We demonstrate the effectiveness of our proposed guidance on energy-guided simulations and preference alignment on text-to-image generation and multimodal understanding tasks. The code is available at https://github.com/WanZhengyan/Discrete-Guidance-Matching/tree/main.
