Danica Kragic

GraphDCA -- a Framework for Node Distribution Comparison in Real and Synthetic Graphs

Feb 09, 2022

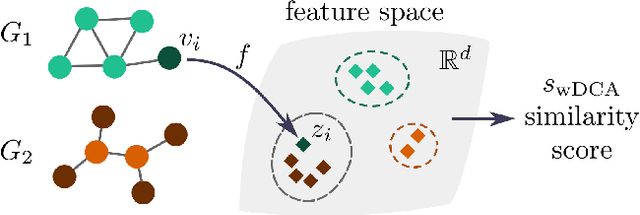

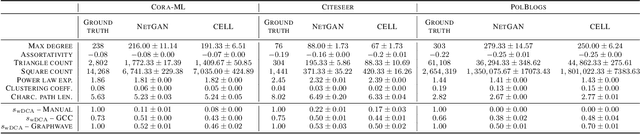

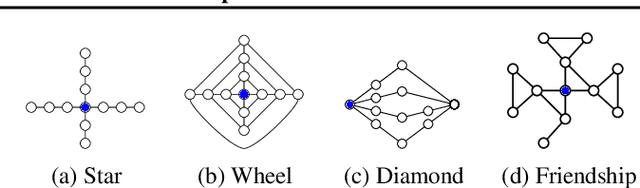

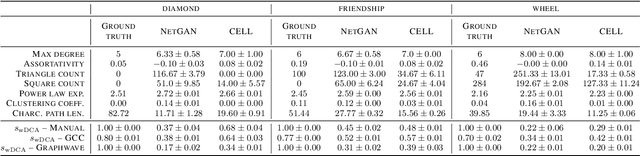

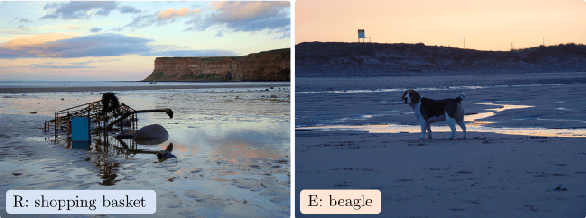

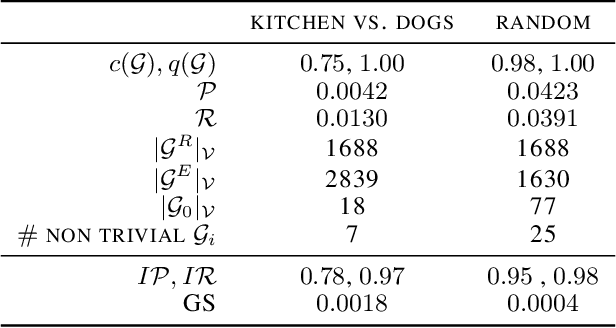

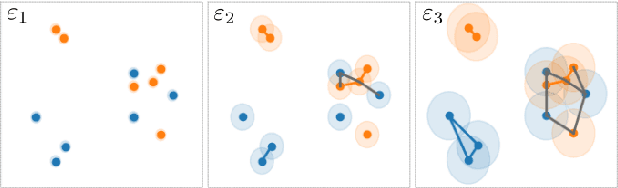

Abstract: We argue that when comparing two graphs, the distribution of node structural features is more informative than global graph statistics, which are often used in practice, especially to evaluate graph generative models. Thus, we present GraphDCA, a framework for evaluating similarity between graphs based on the alignment of their respective node representation sets. The sets are compared using a recently proposed method for comparing representation spaces, called Delaunay Component Analysis (DCA), which we extend to graph data. To evaluate our framework, we generate a benchmark dataset of graphs exhibiting different structural patterns and show, using three node structure feature extractors, that GraphDCA recognizes graphs with both similar and dissimilar local structure. We then apply our framework to evaluate three publicly available real-world graph datasets and demonstrate, using gradual edge perturbations, that GraphDCA satisfactorily captures gradually decreasing similarity, unlike global statistics. Finally, we use GraphDCA to evaluate two state-of-the-art graph generative models, NetGAN and CELL, and conclude that further improvements are needed for these models to adequately reproduce local structural features.
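As a rough illustration of the "gradual edge perturbations" mentioned in the abstract, the sketch below rewires a chosen fraction of edges and computes a degree histogram as a simple per-node structural feature. The abstract does not specify the perturbation scheme or the feature extractors, so the rewiring rule, the function names `perturb_edges` and `degree_histogram`, and the choice of degree as the feature are all assumptions made for illustration. DCA itself is not implemented here.

```python
import random


def perturb_edges(edges, num_nodes, fraction, seed=0):
    """Replace a fraction of the edges with edges absent from the original graph.

    Assumed scheme (not taken from the paper): remove round(fraction * |E|)
    edges uniformly at random, then add the same number of new undirected
    edges. Requires the original graph to have enough non-edges.
    """
    rng = random.Random(seed)
    original = {tuple(sorted(e)) for e in edges}
    n_swap = round(fraction * len(original))
    kept = set(original)
    for e in rng.sample(sorted(original), n_swap):
        kept.remove(e)
    # Add random new edges until the edge count matches the original.
    while len(kept) < len(original):
        u, v = rng.sample(range(num_nodes), 2)
        candidate = (min(u, v), max(u, v))
        if candidate not in original:
            kept.add(candidate)
    return sorted(kept)


def degree_histogram(edges, num_nodes):
    """Count how many nodes have each degree 0..num_nodes-1."""
    degree = [0] * num_nodes
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    hist = [0] * num_nodes
    for d in degree:
        hist[d] += 1
    return hist


# A 10-node ring, perturbed at increasing levels.
ring = [(i, (i + 1) % 10) for i in range(10)]
for frac in (0.0, 0.2, 0.5):
    perturbed = perturb_edges(ring, 10, frac)
    print(frac, degree_histogram(perturbed, 10))
```

Increasing `fraction` moves the node feature distribution further from that of the original graph, which is the kind of gradual change a distribution-level comparison is meant to track.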

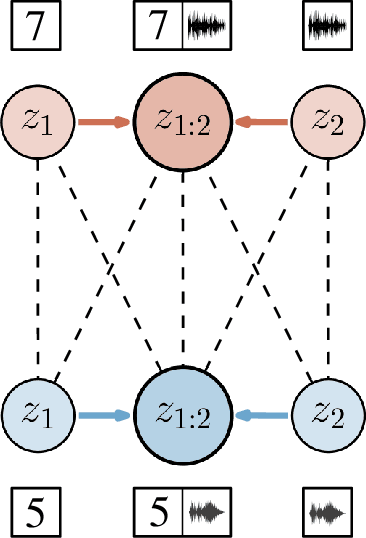

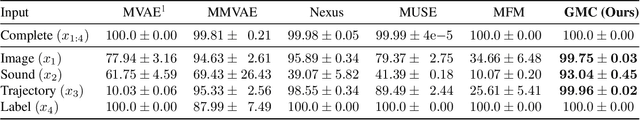

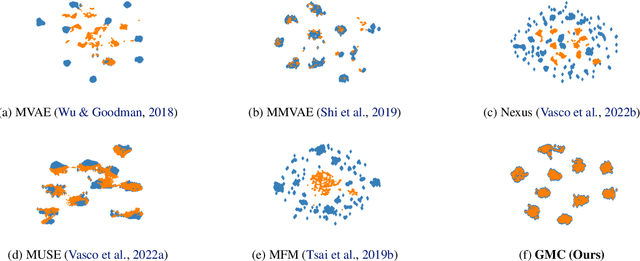

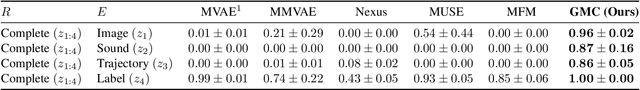

GMC -- Geometric Multimodal Contrastive Representation Learning

Feb 08, 2022

Abstract: Learning representations of multimodal data that are both informative and robust to missing modalities at test time remains a challenging problem due to the inherent heterogeneity of data obtained from different channels. To address it, we present a novel Geometric Multimodal Contrastive (GMC) representation learning method comprising two main components: i) a two-level architecture consisting of modality-specific base encoders, which map an arbitrary number of modalities to intermediate representations of fixed dimensionality, and a shared projection head, which maps the intermediate representations to a latent representation space; ii) a multimodal contrastive loss function that encourages the geometric alignment of the learned representations. We experimentally demonstrate that GMC representations are semantically rich and achieve state-of-the-art performance with missing modality information on three different learning problems including prediction and reinforcement learning tasks.
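To make the idea of a contrastive loss that encourages geometric alignment more concrete, here is a minimal sketch of a generic InfoNCE-style loss between two lists of embeddings, for example the representations of the same samples seen through two different modalities. The abstract does not give the exact GMC loss, so this form, the function name `info_nce`, and the `temperature` parameter are assumptions and should not be read as the authors' formulation.

```python
import math


def cosine(a, b):
    """Cosine similarity of two non-zero vectors given as lists of floats."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def info_nce(anchors, positives, temperature=0.1):
    """Mean InfoNCE loss: anchors[i] should match positives[i], not positives[j != i]."""
    total = 0.0
    for i, anchor in enumerate(anchors):
        logits = [cosine(anchor, p) / temperature for p in positives]
        # Numerically stable log-sum-exp over all candidates.
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_denominator - logits[i]
    return total / len(anchors)


# Hypothetical embeddings of two samples from two modalities.
modality_a = [[1.0, 0.0], [0.0, 1.0]]
modality_b_aligned = [[0.9, 0.1], [0.1, 0.9]]
modality_b_swapped = [[0.1, 0.9], [0.9, 0.1]]

print(info_nce(modality_a, modality_b_aligned))  # small: matching pairs are close
print(info_nce(modality_a, modality_b_swapped))  # large: matching pairs are far apart
```

The loss is low when each sample's embeddings from different modalities point in the same direction and high when they are confused with other samples in the batch.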

Comparing Reconstruction- and Contrastive-based Models for Visual Task Planning

Sep 14, 2021

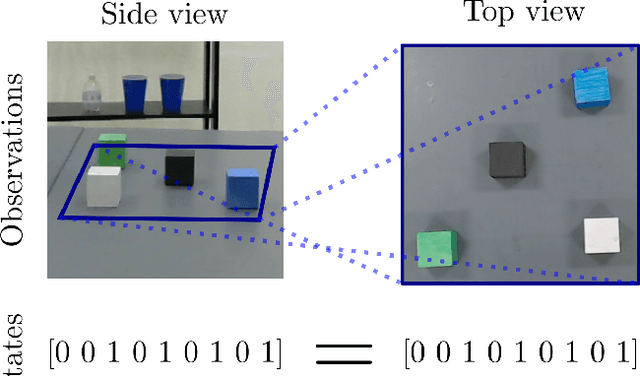

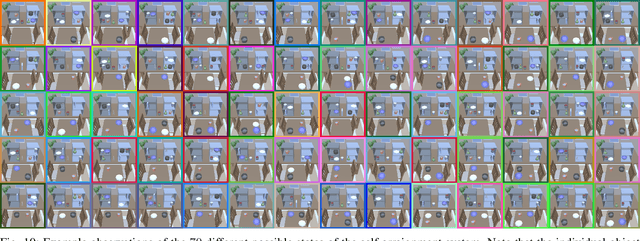

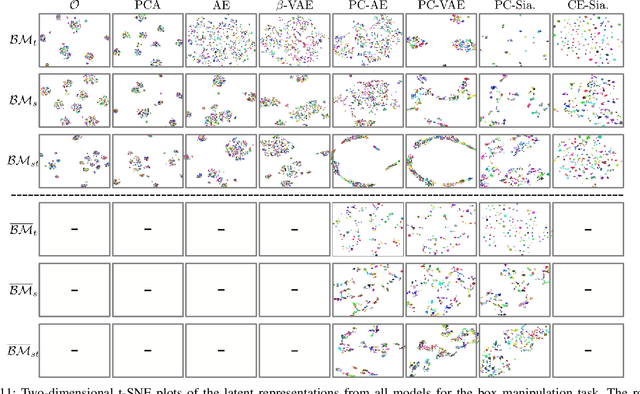

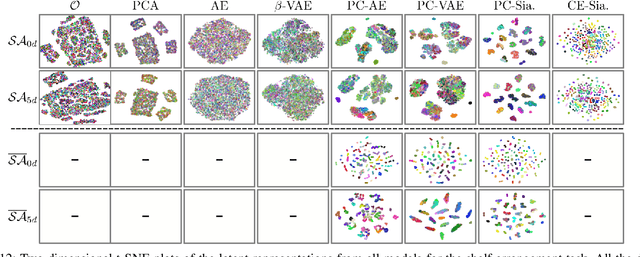

Abstract: Learning state representations enables robotic planning directly from raw observations such as images. Most methods learn state representations by utilizing losses based on the reconstruction of the raw observations from a lower-dimensional latent space. The similarity between observations in the space of images is often assumed and used as a proxy for estimating similarity between the underlying states of the system. However, observations commonly contain task-irrelevant factors of variation which are nonetheless important for reconstruction, such as varying lighting and different camera viewpoints. In this work, we define relevant evaluation metrics and perform a thorough study of different loss functions for state representation learning. We show that models exploiting task priors, such as Siamese networks with a simple contrastive loss, outperform reconstruction-based representations in visual task planning.
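The "simple contrastive loss" for a Siamese network is not spelled out in the abstract. One common choice, shown here purely as an assumed example, is the classic margin-based pairwise loss: latent codes of observations from the same underlying state are pulled together, and codes from different states are pushed at least `margin` apart. The function names and the `margin` value are illustrative.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two latent vectors given as lists of floats."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def pairwise_contrastive_loss(z1, z2, same_state, margin=1.0):
    """Margin-based contrastive loss for one pair of latent codes."""
    d = euclidean(z1, z2)
    if same_state:
        # Same underlying state: penalize any distance.
        return 0.5 * d ** 2
    # Different states: penalize only if closer than the margin.
    return 0.5 * max(0.0, margin - d) ** 2


# Two views of the same state (e.g. different lighting) should map close together.
print(pairwise_contrastive_loss([0.2, 0.1], [0.2, 0.1], same_state=True))   # 0.0
# Different states that are already far apart incur no loss.
print(pairwise_contrastive_loss([0.0, 0.0], [3.0, 4.0], same_state=False))  # 0.0
```

Unlike a reconstruction loss, this objective never asks the latent code to retain lighting or viewpoint information, only to separate states that the task treats as different.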

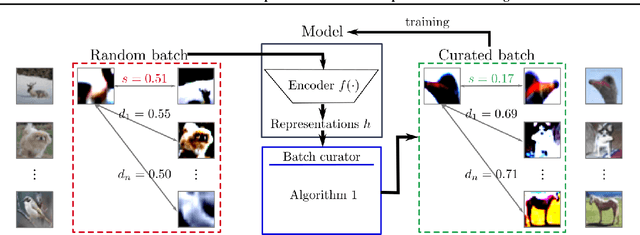

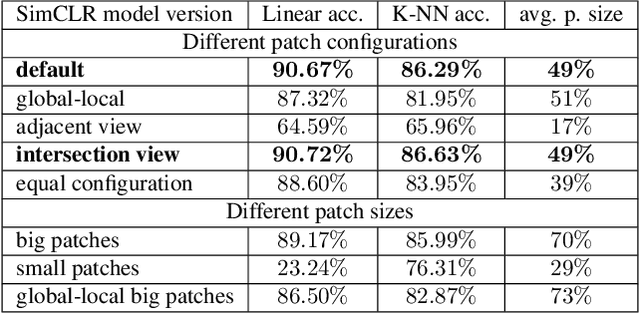

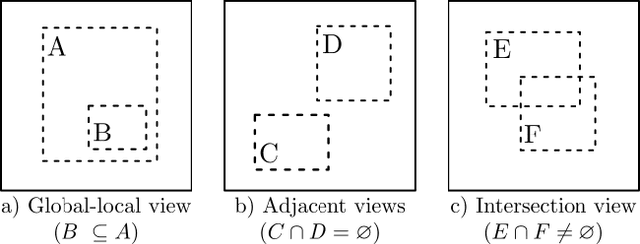

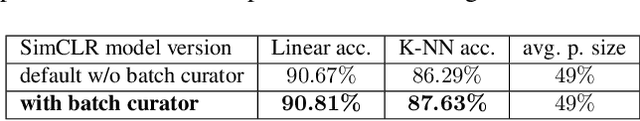

Batch Curation for Unsupervised Contrastive Representation Learning

Aug 19, 2021

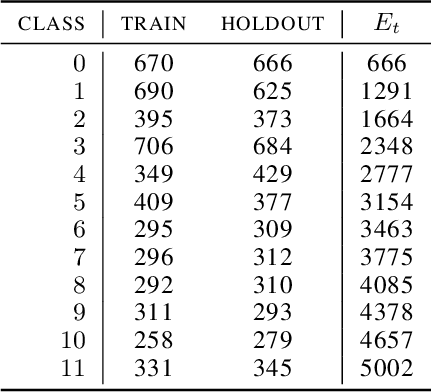

Abstract: The state-of-the-art unsupervised contrastive visual representation learning methods that have emerged recently (SimCLR, MoCo, SwAV) all make use of data augmentations in order to construct a pretext task of instance discrimination consisting of similar and dissimilar pairs of images. Similar pairs are constructed by randomly extracting patches from the same image and applying several other transformations such as color jittering or blurring, while transformed patches from different image instances in a given batch are regarded as dissimilar pairs. We argue that this approach can result in similar pairs that are semantically dissimilar. In this work, we address this problem by introducing a batch curation scheme that selects batches during the training process that are more in line with the underlying contrastive objective. We provide insights into what constitutes beneficial similar and dissimilar pairs and validate batch curation on CIFAR10 by integrating it into the SimCLR model.

GeomCA: Geometric Evaluation of Data Representations

May 26, 2021

Abstract: Evaluating the quality of learned representations without relying on a downstream task remains one of the challenges in representation learning. In this work, we present the Geometric Component Analysis (GeomCA) algorithm, which evaluates representation spaces based on their geometric and topological properties. GeomCA can be applied to representations of any dimension, independently of the model that generated them. We demonstrate its applicability by analyzing representations obtained from a variety of scenarios, such as contrastive learning models, generative models and supervised learning models.

Graph-based Normalizing Flow for Human Motion Generation and Reconstruction

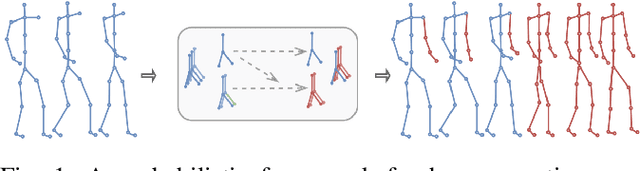

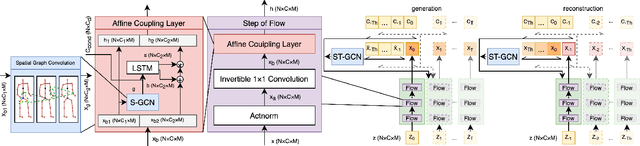

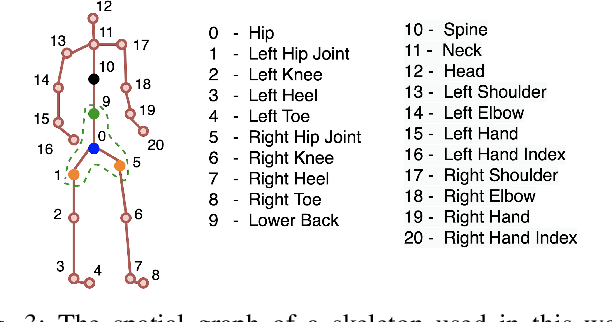

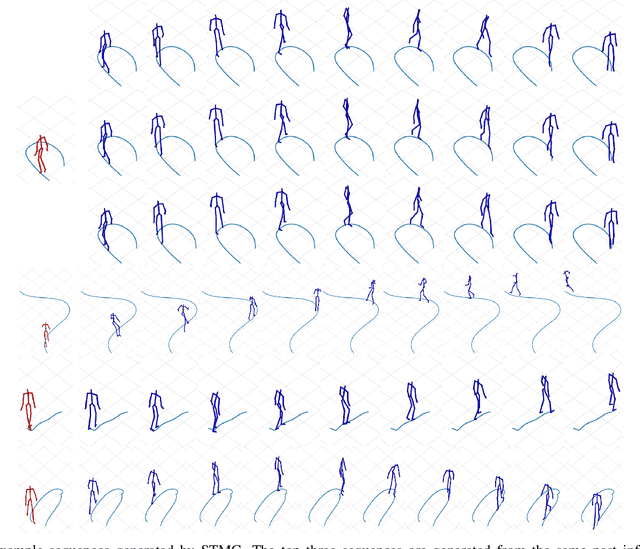

Apr 07, 2021

Abstract: Data-driven approaches for modeling human skeletal motion have found various applications in interactive media and social robotics. Challenges remain in these fields for generating high-fidelity samples and robustly reconstructing motion from imperfect input data, for example due to missed marker detections. In this paper, we propose a probabilistic generative model to synthesize and reconstruct long-horizon motion sequences conditioned on past information and control signals, such as the path along which an individual is moving. Our method adapts the existing work MoGlow by introducing a new graph-based model. The model leverages the spatial-temporal graph convolutional network (ST-GCN) to effectively capture the spatial structure and temporal correlation of skeletal motion data at multiple scales. We evaluate the models on a mixture of motion capture datasets of human locomotion with foot-step and bone-length analysis. The results demonstrate the advantages of our model in reconstructing missing markers and achieving comparable results on generating realistic future poses. When the inputs are imperfect, our model generates motion more robustly.

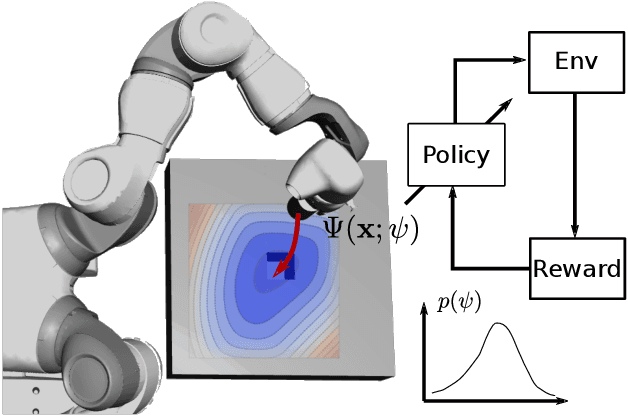

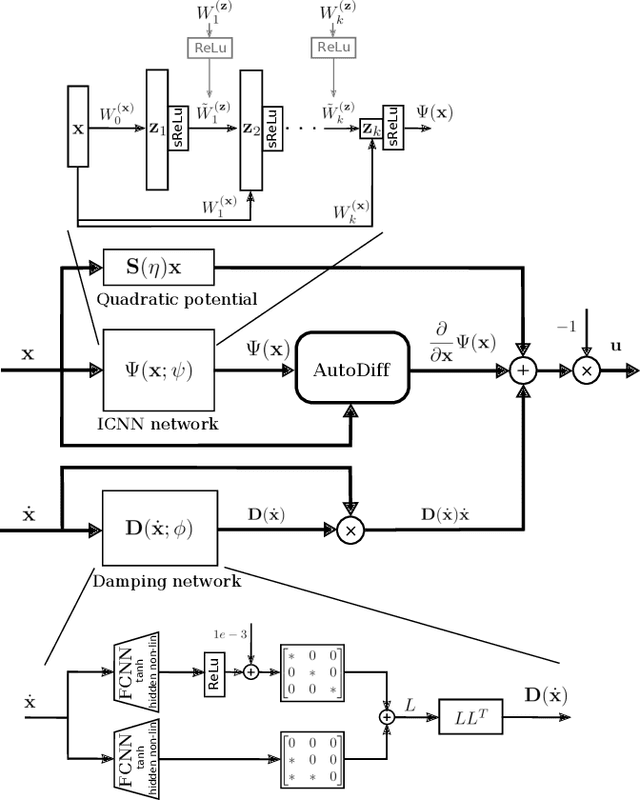

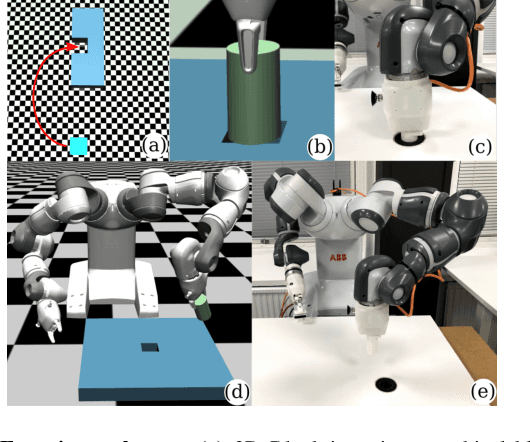

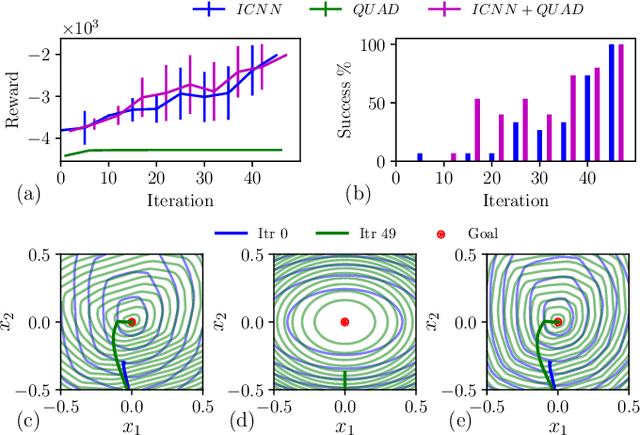

Learning Deep Neural Policies with Stability Guarantees

Mar 30, 2021

Abstract: Reinforcement learning (RL) has been successfully used to solve various robotic control tasks. However, most of the existing works do not address the issue of control stability. This is in sharp contrast to the control theory community, where the well-established norm is to prove stability whenever a control law is synthesized. Three factors make guaranteeing stability during RL difficult: non-interpretable neural network policies, unknown system dynamics and random exploration. We contribute towards solving the stable RL problem in the context of robotic manipulation that may involve physical contact with the environment. Our solution is derived from a physics-based prior that originates from Lagrangian mechanics and does not involve learning any dynamics model. We show how to parameterize the resulting energy shaping policy as a deep neural network that consists of a convex potential function and a velocity-dependent damping component. Our experiments, which include a real-world peg insertion task by a 7-DOF robot, validate the proposed policy structure and demonstrate the benefits of stability in RL.
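The structure of an energy shaping policy can be sketched in one dimension. The example below assumes a unit point mass with no contact, a hand-written quadratic potential in place of a learned convex network, and a simple positive velocity-dependent damping term; none of these specifics come from the paper. The control is the negative potential gradient minus the damping force, so the total energy (potential plus kinetic) cannot increase in continuous time.

```python
def potential_grad(x, goal, stiffness=4.0):
    """Gradient of the convex potential 0.5 * stiffness * (x - goal)**2."""
    return stiffness * (x - goal)


def damping(v, d0=2.0, d1=0.5):
    """Velocity-dependent damping coefficient, strictly positive by construction."""
    return d0 + d1 * abs(v)


def policy(x, v, goal):
    """Energy shaping control: descend the potential and dissipate kinetic energy."""
    return -potential_grad(x, goal) - damping(v) * v


def energy(x, v, goal, stiffness=4.0):
    """Total energy of the unit mass: shaped potential plus kinetic energy."""
    return 0.5 * stiffness * (x - goal) ** 2 + 0.5 * v ** 2


def rollout(x0, v0, goal, dt=0.01, steps=2000):
    """Simulate the unit mass under the policy with semi-implicit Euler steps."""
    x, v = x0, v0
    for _ in range(steps):
        u = policy(x, v, goal)
        v += dt * u
        x += dt * v
    return x, v


x_final, v_final = rollout(x0=1.0, v0=0.0, goal=0.0)
print(x_final, v_final, energy(x_final, v_final, 0.0))
```

Because the potential has a unique minimum at the goal and the damping is always positive, the state settles at the goal regardless of the particular parameter values, which is the property that makes this policy class attractive for stable RL.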

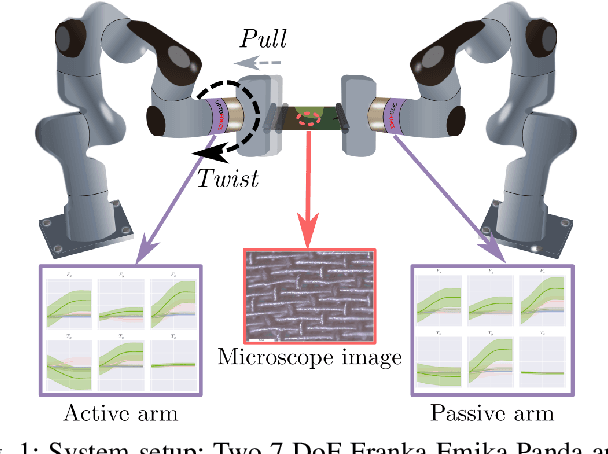

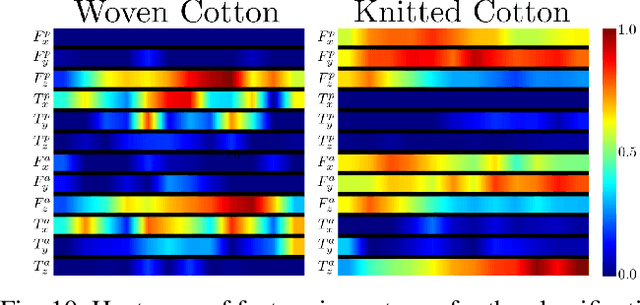

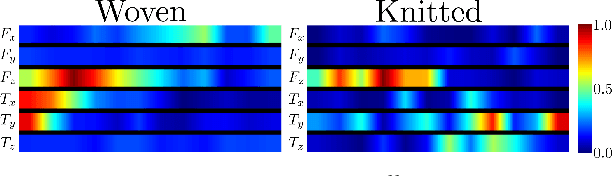

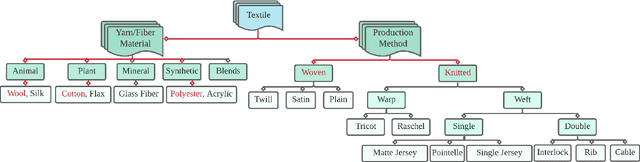

Textile Taxonomy and Classification Using Pulling and Twisting

Mar 17, 2021

Abstract: Identification of textile properties is an important milestone toward advanced robotic manipulation tasks that involve interaction with clothing items, such as assisted dressing, laundry folding, automated sewing, textile recycling and reuse. Despite the abundance of work considering this class of deformable objects, many open problems remain. These relate to the choice and modelling of the sensory feedback as well as the control and planning of the interaction and manipulation strategies. Most importantly, there is no structured approach for studying and assessing different approaches that may bridge the gap between the robotics community and the textile production industry. To this end, we outline a textile taxonomy considering fiber types and production methods commonly used in the textile industry. We devise datasets according to the taxonomy, and study how robotic actions, such as pulling and twisting of the textile samples, can be used for classification. We also provide important insights from the perspective of visualization and interpretability of the gathered data.

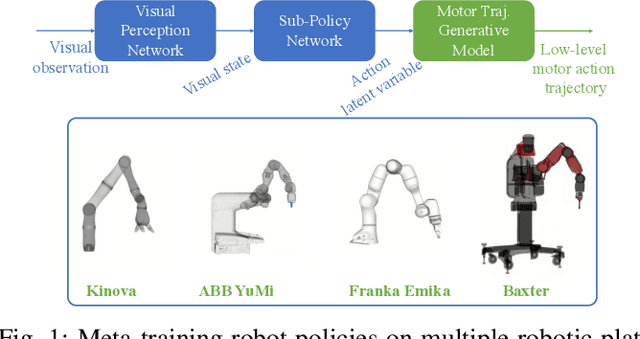

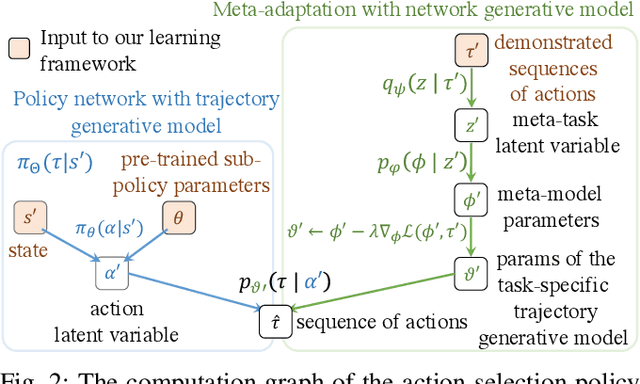

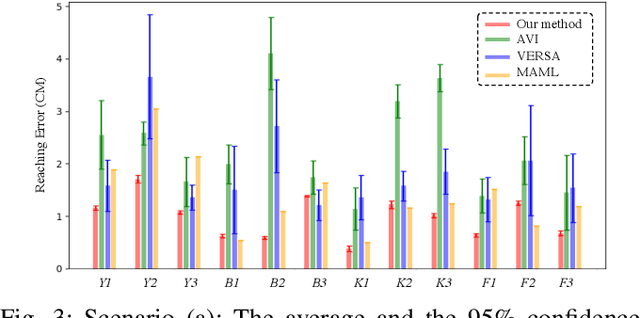

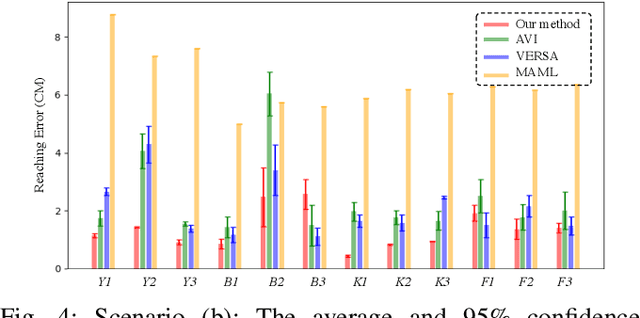

Bayesian Meta-Learning for Few-Shot Policy Adaptation Across Robotic Platforms

Mar 05, 2021

Abstract: Reinforcement learning methods can achieve significant performance but require a large amount of training data collected on the same robotic platform. A policy trained with expensive data is rendered useless after making even a minor change to the robot hardware. In this paper, we address the challenging problem of adapting a policy, trained to perform a task, to a novel robotic hardware platform given only a few demonstrations of robot motion trajectories on the target robot. We formulate it as a few-shot meta-learning problem where the goal is to find a meta-model that captures the common structure shared across different robotic platforms such that data-efficient adaptation can be performed. We achieve such adaptation by introducing a learning framework consisting of a probabilistic gradient-based meta-learning algorithm that models the uncertainty arising from the few-shot setting with a low-dimensional latent variable. We experimentally evaluate our framework on a simulated reaching task and a real-robot picking task using 400 simulated robots generated by varying the physical parameters of an existing set of robotic platforms. Our results show that the proposed method can successfully adapt a trained policy to different robotic platforms with novel physical parameters, and that our meta-learning algorithm is superior to state-of-the-art methods on the introduced few-shot policy adaptation problem.

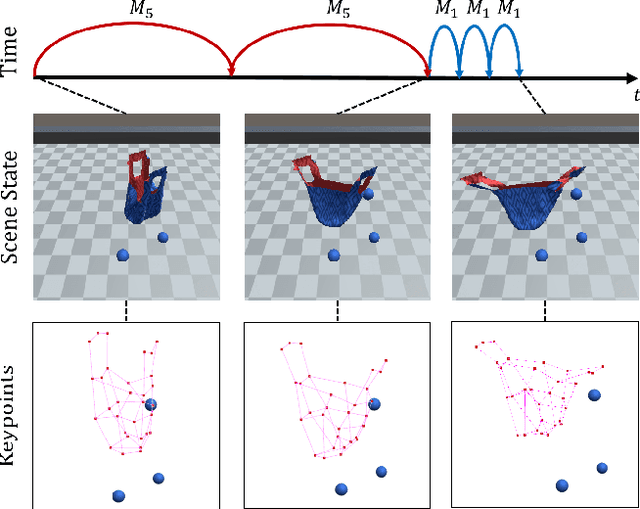

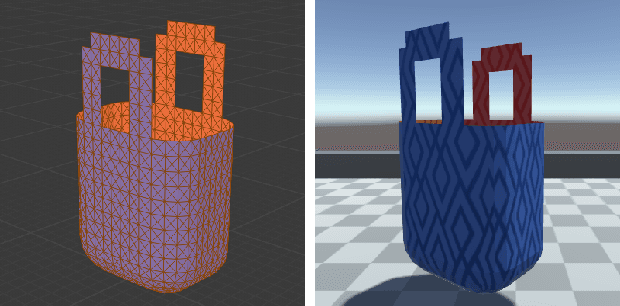

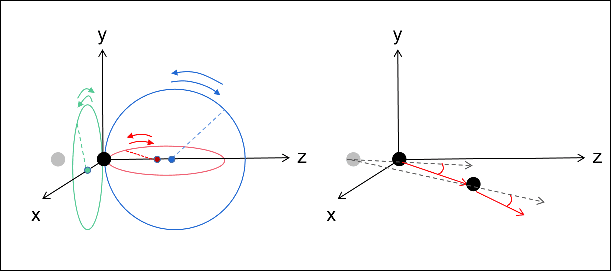

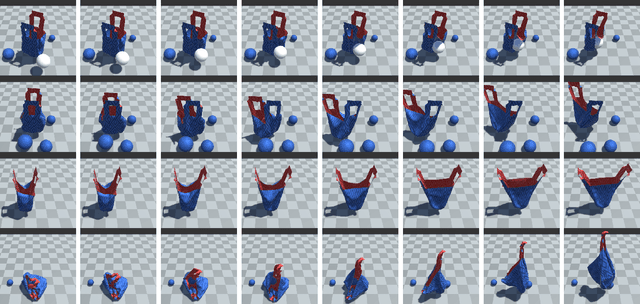

Graph-based Task-specific Prediction Models for Interactions between Deformable and Rigid Objects

Mar 04, 2021

Abstract: Capturing scene dynamics and predicting the future scene state is challenging but essential for robotic manipulation tasks, especially when the scene contains both rigid and deformable objects. In this work, we contribute a simulation environment and generate a novel dataset for task-specific manipulation, involving interactions between rigid objects and a deformable bag. The dataset incorporates a rich variety of scenarios including different object sizes, object numbers and manipulation actions. We approach dynamics learning by proposing an object-centric graph representation and two modules, the Active Prediction Module (APM) and the Position Prediction Module (PPM), based on graph neural networks with an encode-process-decode architecture. At the inference stage, we build a two-stage model based on the learned modules for single time step prediction. We combine modules with different prediction horizons into a mixed-horizon model which addresses long-term prediction. In an ablation study, we show the benefits of the two-stage model for single time step prediction and the effectiveness of the mixed-horizon model for long-term prediction tasks. Supplementary material is available at https://github.com/wengzehang/deformable_rigid_interaction_prediction
