Cheng Zhuo

Unitho: A Unified Multi-Task Framework for Computational Lithography

Nov 14, 2025

Abstract:Reliable, generalizable data foundations are critical for enabling large-scale models in computational lithography. However, essential tasks (mask generation, rule violation detection, and layout optimization) are often handled in isolation, hindered by scarce datasets and limited modeling approaches. To address these challenges, we introduce Unitho, a unified multi-task large vision model built upon the Transformer architecture. Trained on a large-scale industrial lithography simulation dataset with hundreds of thousands of cases, Unitho supports end-to-end mask generation, lithography simulation, and rule violation detection. By enabling agile and high-fidelity lithography simulation, Unitho further facilitates the construction of robust data foundations for intelligent EDA. Experimental results validate its effectiveness and generalizability, with performance substantially surpassing academic baselines.

FactorHD: A Hyperdimensional Computing Model for Multi-Object Multi-Class Representation and Factorization

Jul 16, 2025

Abstract:Neuro-symbolic artificial intelligence (neuro-symbolic AI) excels in logical analysis and reasoning. Hyperdimensional Computing (HDC), a promising brain-inspired computational model, is integral to neuro-symbolic AI. Various HDC models have been proposed to represent class-instance and class-class relations, but when representing the more complex class-subclass relation, where multiple objects are associated with different levels of classes and subclasses, they face challenges in factorization, a crucial task for neuro-symbolic AI systems. In this article, we propose FactorHD, a novel HDC model capable of representing and factorizing the complex class-subclass relation efficiently. FactorHD features a symbolic encoding method that embeds an extra memorization clause, preserving more information for multiple objects. In addition, it employs an efficient factorization algorithm that selectively eliminates redundant classes by identifying the memorization clause of the target class. This model significantly enhances computing efficiency and accuracy in representing and factorizing multiple objects with class-subclass relations, overcoming limitations of existing HDC models such as "superposition catastrophe" and "the problem of 2". Evaluations show that FactorHD achieves approximately 5667x speedup at a representation size of 10^9 compared to existing HDC models. When integrated with the ResNet-18 neural network, FactorHD achieves 92.48% factorization accuracy on the CIFAR-10 dataset.
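For readers unfamiliar with HDC, the sketch below illustrates the generic operations the abstract builds on: binding a class hypervector to an item hypervector, bundling several bound pairs into one representation, and factorizing by unbinding and nearest-neighbor lookup. It is not FactorHD's encoding and it omits the memorization clause entirely; the dimensionality, class names, and item names are all hypothetical.

```python
import random

DIM = 2048  # hypervector dimensionality (illustrative choice, not from the paper)
rng = random.Random(0)

def random_hv():
    """Random bipolar hypervector with entries in {-1, +1}."""
    return [rng.choice((-1, 1)) for _ in range(DIM)]

def bind(a, b):
    """Binding: element-wise multiplication (self-inverse for bipolar vectors)."""
    return [x * y for x, y in zip(a, b)]

def bundle(vectors):
    """Bundling: element-wise majority vote (an odd count avoids ties)."""
    return [1 if sum(col) > 0 else -1 for col in zip(*vectors)]

def similarity(a, b):
    """Normalized dot product in [-1, 1]."""
    return sum(x * y for x, y in zip(a, b)) / DIM

# Hypothetical classes and their item codebooks.
classes = {
    "color": ["red", "green", "blue"],
    "shape": ["circle", "square", "triangle"],
    "size": ["small", "medium", "large"],
}
class_hv = {c: random_hv() for c in classes}
item_hv = {c: {i: random_hv() for i in items} for c, items in classes.items()}

def encode(obj):
    """Represent one object as a bundle of class-item bindings."""
    return bundle([bind(class_hv[c], item_hv[c][i]) for c, i in obj.items()])

def factorize(hv):
    """Unbind each class and pick the most similar item in its codebook."""
    result = {}
    for c in classes:
        noisy = bind(hv, class_hv[c])
        result[c] = max(item_hv[c], key=lambda i: similarity(noisy, item_hv[c][i]))
    return result

obj = {"color": "blue", "shape": "square", "size": "small"}
decoded = factorize(encode(obj))
print(decoded)
```

This plain scheme encodes a single object; the abstract's point is that representing multiple objects with subclass hierarchies requires additional structure beyond it.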

SafeMVDrive: Multi-view Safety-Critical Driving Video Synthesis in the Real World Domain

May 23, 2025

Abstract:Safety-critical scenarios are rare yet pivotal for evaluating and enhancing the robustness of autonomous driving systems. While existing methods generate safety-critical driving trajectories, simulations, or single-view videos, they fall short of meeting the demands of advanced end-to-end autonomous systems (E2E AD), which require real-world, multi-view video data. To bridge this gap, we introduce SafeMVDrive, the first framework designed to generate high-quality, safety-critical, multi-view driving videos grounded in real-world domains. SafeMVDrive strategically integrates a safety-critical trajectory generator with an advanced multi-view video generator. To tackle the challenges inherent in this integration, we first enhance the scene understanding ability of the trajectory generator by incorporating visual context -- which was previously unavailable to such generators -- and leveraging a GRPO-finetuned vision-language model to achieve more realistic and context-aware trajectory generation. Second, recognizing that existing multi-view video generators struggle to render realistic collision events, we introduce a two-stage, controllable trajectory generation mechanism that produces collision-evasion trajectories, ensuring both video quality and safety-critical fidelity. Finally, we employ a diffusion-based multi-view video generator to synthesize high-quality safety-critical driving videos from the generated trajectories. Experiments conducted on an E2E AD planner demonstrate a significant increase in collision rate when tested with our generated data, validating the effectiveness of SafeMVDrive in stress-testing planning modules. Our code, examples, and datasets are publicly available at: https://zhoujiawei3.github.io/SafeMVDrive/.

LiTformer: Efficient Modeling and Analysis of High-Speed Link Transmitters Using Non-Autoregressive Transformer

Nov 18, 2024

Abstract:High-speed serial links are fundamental to energy-efficient and high-performance computing systems such as artificial intelligence, 5G mobile and automotive, enabling low-latency and high-bandwidth communication. Transmitters (TXs) within these links are key to signal quality, while their modeling presents challenges due to nonlinear behavior and dynamic interactions with links. In this paper, we propose LiTformer: a Transformer-based model for high-speed link TXs, with a non-sequential encoder and a Transformer decoder to incorporate link parameters and capture long-range dependencies of output signals. We employ a non-autoregressive mechanism in model training and inference for parallel prediction of the signal sequence. LiTformer achieves precise TX modeling considering link impacts including crosstalk from multiple links, and provides fast prediction for various long-sequence signals with high data rates. Experimental results show that LiTformer achieves 148-456$\times$ speedup for 2-link TXs and 404-944$\times$ speedup for 16-link with mean relative errors of 0.68-1.25%, supporting 4-bit signals at Gbps data rates of single-ended and differential TXs, as well as PAM4 TXs.

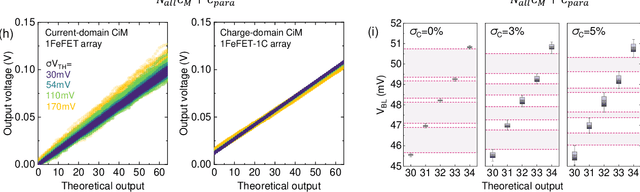

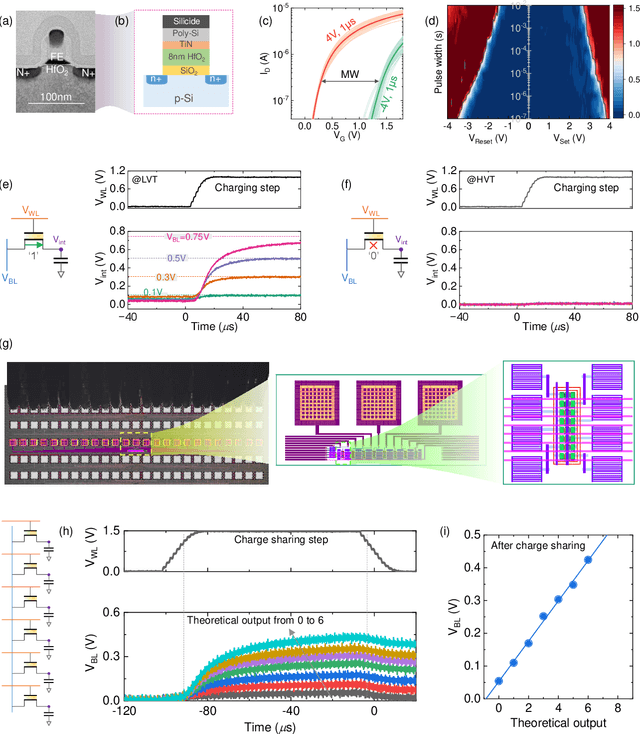

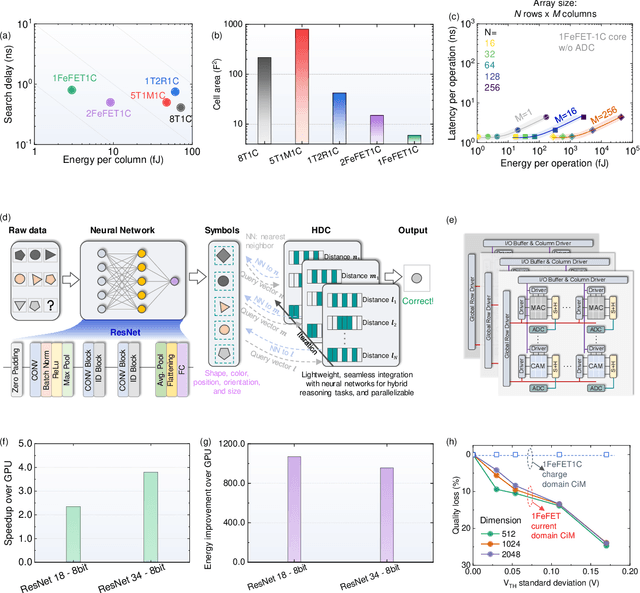

A Remedy to Compute-in-Memory with Dynamic Random Access Memory: 1FeFET-1C Technology for Neuro-Symbolic AI

Oct 20, 2024

Abstract:Neuro-symbolic artificial intelligence (AI) excels at learning from noisy and generalized patterns, conducting logical inferences, and providing interpretable reasoning. Comprising a 'neuro' component for feature extraction and a 'symbolic' component for decision-making, neuro-symbolic AI has yet to fully benefit from efficient hardware accelerators. Additionally, current hardware struggles to accommodate applications requiring dynamic resource allocation between these two components. To address these challenges, and to mitigate the typical data-transfer bottleneck of classical von Neumann architectures, we propose a ferroelectric charge-domain compute-in-memory (CiM) array as the foundational processing element for neuro-symbolic AI. This array seamlessly handles both the critical multiply-accumulate (MAC) operations of the 'neuro' workload and the parallel associative search operations of the 'symbolic' workload. To enable this approach, we introduce an innovative 1FeFET-1C cell, combining a ferroelectric field-effect transistor (FeFET) with a capacitor. This design overcomes the destructive sensing limitations of DRAM in CiM applications while capitalizing on decades of DRAM expertise through a cell structure similar to that of DRAM. It also achieves high immunity against FeFET variation, which is crucial for neuro-symbolic AI, and demonstrates superior energy efficiency. The functionalities of our design have been successfully validated through SPICE simulations and prototype fabrication and testing. Our hardware platform has been benchmarked in executing typical neuro-symbolic AI reasoning tasks, showing over 2x improvement in latency and 1000x improvement in energy efficiency compared to GPU-based implementations.

FabGPT: An Efficient Large Multimodal Model for Complex Wafer Defect Knowledge Queries

Jul 15, 2024

Abstract:Intelligence is key to advancing integrated circuit (IC) fabrication. Recent breakthroughs in Large Multimodal Models (LMMs) have unlocked unparalleled abilities in understanding images and text, fostering intelligent fabrication. Leveraging the power of LMMs, we introduce FabGPT, a customized IC fabrication large multimodal model for wafer defect knowledge query. FabGPT manifests expertise in conducting defect detection in Scanning Electron Microscope (SEM) images, performing root cause analysis, and providing expert question-answering (Q&A) on fabrication processes. FabGPT matches enhanced multimodal features to automatically detect minute defects under complex wafer backgrounds and reduce the subjectivity of manual threshold settings. Besides, the proposed modulation module and interactive corpus training strategy embed wafer defect knowledge into the pre-trained model, effectively balancing Q&A queries related to defect knowledge and original knowledge and mitigating the modality bias issues. Experiments on in-house fab data (SEM-WaD) show that our FabGPT achieves significant performance improvement in wafer defect detection and knowledge querying.

BasisN: Reprogramming-Free RRAM-Based In-Memory-Computing by Basis Combination for Deep Neural Networks

Jul 04, 2024

Abstract:Deep neural networks (DNNs) have made breakthroughs in various fields including image recognition and language processing. DNNs execute hundreds of millions of multiply-and-accumulate (MAC) operations. To efficiently accelerate such computations, analog in-memory-computing platforms have emerged that leverage emerging devices such as resistive RAM (RRAM). However, such accelerators face the hurdle of being required to have sufficient on-chip crossbars to hold all the weights of a DNN. Otherwise, RRAM cells in the crossbars need to be reprogrammed to process further layers, which causes huge time/energy overhead due to the extremely slow writing and verification of the RRAM cells. As a result, it is still not possible to deploy such accelerators to process large-scale DNNs in industry. To address this problem, we propose the BasisN framework to accelerate DNNs on any number of available crossbars without reprogramming. BasisN introduces a novel representation of the kernels in DNN layers as combinations of global basis vectors shared between all layers with quantized coefficients. These basis vectors are written to crossbars only once and used for the computations of all layers with marginal hardware modification. BasisN also provides a novel training approach to enhance computation parallelization with the global basis vectors and optimize the coefficients to construct the kernels. Experimental results demonstrate that cycles per inference and energy-delay product were reduced to below 1% compared with applying reprogramming on crossbars in processing large-scale DNNs such as DenseNet and ResNet on ImageNet and CIFAR100 datasets, while the training and hardware costs are negligible.
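The basis-combination idea rests on linearity: if a kernel is a weighted sum of shared basis vectors, the input only needs to be projected onto the basis once, and each kernel's output is then a small weighted sum of those projections. The sketch below checks this identity with made-up integer values; the basis, coefficients, and layer names are hypothetical, and it models neither the crossbar hardware nor the paper's training procedure.

```python
# Global basis vectors shared by all layers (hypothetical small integers).
basis = [
    [1, 0, -1, 2],
    [0, 1, 1, -1],
    [2, -1, 0, 1],
]

# Quantized integer coefficients: one row per kernel, one entry per basis vector.
layer_coeffs = {
    "layer1": [[1, -2, 0], [3, 1, -1]],
    "layer2": [[0, 2, 2]],
}

def dot(a, b):
    """Plain dot product of two equal-length sequences."""
    return sum(x * y for x, y in zip(a, b))

def reconstruct_kernel(coeffs):
    """Build the explicit kernel as a coefficient-weighted sum of basis vectors."""
    width = len(basis[0])
    return [sum(c * b[k] for c, b in zip(coeffs, basis)) for k in range(width)]

def apply_with_basis(x, coeffs_per_kernel):
    """Project x onto the fixed basis once, then combine per kernel."""
    projections = [dot(x, b) for b in basis]  # stands in for the fixed crossbar step
    return [dot(c, projections) for c in coeffs_per_kernel]

x = [1, 2, 3, 4]
direct = [dot(x, reconstruct_kernel(c)) for c in layer_coeffs["layer1"]]
via_basis = apply_with_basis(x, layer_coeffs["layer1"])
print(direct, via_basis)  # both print [4, 15]
```

Because `basis` never changes between layers, only the small coefficient tables differ per layer, which is the property that lets the stored vectors stay fixed.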

LiveMind: Low-latency Large Language Models with Simultaneous Inference

Jun 20, 2024

Abstract:In this paper, we introduce a novel low-latency inference framework for large language models (LLMs) that enables them to perform inference with incomplete prompts. By reallocating computational processes to the prompt input phase, we achieve a substantial reduction in latency, thereby significantly enhancing the interactive experience for users of LLMs. The framework adeptly manages the visibility of the streaming prompt to the model, allowing it to infer from incomplete prompts or await additional prompts. Compared with traditional inference methods that utilize complete prompts, our approach demonstrates an average reduction of 59% in response latency on the MMLU-Pro dataset, while maintaining comparable accuracy. Additionally, our framework facilitates collaborative inference and output across different models. By employing an LLM for inference and a small language model (SLM) for output, we achieve an average 68% reduction in response latency, alongside a 5.5% improvement in accuracy on the MMLU-Pro dataset compared with the SLM baseline. For long prompts exceeding 20 sentences, the response latency can be reduced by up to 93%.

Reconfigurable Frequency Multipliers Based on Complementary Ferroelectric Transistors

Dec 29, 2023

Abstract:Frequency multipliers, a class of essential electronic components, play a pivotal role in contemporary signal processing and communication systems. They serve as crucial building blocks for generating high-frequency signals by multiplying the frequency of an input signal. However, traditional frequency multipliers that rely on nonlinear devices often require energy- and area-consuming filtering and amplification circuits, and emerging designs based on an ambipolar ferroelectric transistor require costly, non-trivial characteristic tuning or complex technology processes. In this paper, we show that a pair of standard ferroelectric field effect transistors (FeFETs) can be used to build compact frequency multipliers without the aforementioned technology issues. By leveraging the tunable parabolic shape of the 2FeFET structures' transfer characteristics, we propose four reconfigurable frequency multipliers, which can switch between signal transmission and frequency doubling. Furthermore, based on the 2FeFET structures, we propose four frequency multipliers that realize triple and quadruple frequency modes, elucidating a scalable methodology to generate more multiplication harmonics of the input frequency. Performance metrics such as maximum operating frequency and power are evaluated and compared with existing works. We also implement a practical case of a frequency modulation scheme based on the proposed reconfigurable multipliers without additional devices. Our work provides a novel path toward scalable and reconfigurable frequency multiplier designs based on devices that have characteristics similar to FeFETs, and shows that FeFETs are a promising candidate for signal processing and communication systems in terms of maximum operating frequency and power.
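Why a parabolic transfer characteristic doubles the frequency can be seen from a short worked identity. The derivation below assumes an idealized parabola with hypothetical symbols (curvature $a$, vertex voltage $V_0$, offset current $I_0$, input amplitude $A$); none of these are taken from the paper, and a real device deviates from this ideal shape.

$$
I(V) = a\,(V - V_0)^2 + I_0, \qquad V(t) = V_0 + A\cos(\omega t)
$$

$$
I(t) = aA^2\cos^2(\omega t) + I_0 = \frac{aA^2}{2}\bigl(1 + \cos(2\omega t)\bigr) + I_0
$$

Under these assumptions the output contains only a DC term and a component at $2\omega$, with no residue at the input frequency $\omega$, so no filter is needed to isolate the doubled tone.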

Class-Aware Pruning for Efficient Neural Networks

Dec 10, 2023

Abstract:Deep neural networks (DNNs) have demonstrated remarkable success in various fields. However, the large number of floating-point operations (FLOPs) in DNNs poses challenges for their deployment in resource-constrained applications, e.g., edge devices. To address the problem, pruning has been introduced to reduce the computational cost in executing DNNs. Previous pruning strategies are based on weight values, gradient values and activation outputs. Different from previous pruning solutions, in this paper, we propose a class-aware pruning technique to compress DNNs, which provides a novel perspective to reduce the computational cost of DNNs. In each iteration, the neural network training is modified to facilitate the class-aware pruning. Afterwards, the importance of filters with respect to the number of classes is evaluated. The filters that are important for only a small number of classes are removed. The neural network is then retrained to compensate for the incurred accuracy loss. The pruning iterations continue until no more filters can be removed, indicating that the remaining filters are very important for many classes. This pruning technique outperforms previous pruning solutions in terms of accuracy, pruning ratio and the reduction of FLOPs. Experimental results confirm that this class-aware pruning technique can significantly reduce the number of weights and FLOPs, while maintaining a high inference accuracy.
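The selection step described above can be sketched as follows, assuming each filter already has a per-class importance score. The abstract does not say how importance is computed or what cutoffs are used, so the scores, the `threshold`, the `min_classes` limit, and the filter names are all hypothetical; the modified training and the retraining between iterations are omitted.

```python
def count_important_classes(scores, threshold):
    """Number of classes for which a filter's importance exceeds the threshold."""
    return sum(1 for s in scores if s > threshold)

def select_filters_to_prune(importance, threshold=0.5, min_classes=3):
    """Return filters that are important for fewer than `min_classes` classes."""
    return [
        name
        for name, scores in importance.items()
        if count_important_classes(scores, threshold) < min_classes
    ]

# Hypothetical per-class importance scores for four filters over four classes.
importance = {
    "conv1.f0": [0.9, 0.8, 0.7, 0.6],  # important for 4 classes
    "conv1.f1": [0.9, 0.1, 0.0, 0.2],  # important for 1 class
    "conv1.f2": [0.1, 0.2, 0.1, 0.0],  # important for 0 classes
    "conv1.f3": [0.6, 0.7, 0.2, 0.8],  # important for 3 classes
}

pruned = select_filters_to_prune(importance)
kept = [name for name in importance if name not in pruned]
print(pruned)  # ['conv1.f1', 'conv1.f2']
print(kept)    # ['conv1.f0', 'conv1.f3']
```

In an iterative setting this selection would be repeated after each retraining round until it returns an empty list, matching the stopping condition in the abstract.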
