Charles Lu

Data Measurements for Decentralized Data Markets

Jun 06, 2024

Abstract:Decentralized data markets can provide more equitable forms of data acquisition for machine learning. However, to realize practical marketplaces, efficient techniques for seller selection need to be developed. We propose and benchmark federated data measurements to allow a data buyer to find sellers with relevant and diverse datasets. Diversity and relevance measures enable a buyer to make relative comparisons between sellers without requiring intermediate brokers and training task-dependent models.

Data Acquisition via Experimental Design for Decentralized Data Markets

Mar 20, 2024

Abstract:Acquiring high-quality training data is essential for current machine learning models. Data markets provide a way to increase the supply of data, particularly in data-scarce domains such as healthcare, by incentivizing potential data sellers to join the market. A major challenge for a data buyer in such a market is selecting the most valuable data points from a data seller. Unlike prior work in data valuation, which assumes centralized data access, we propose a federated approach to the data selection problem that is inspired by linear experimental design. Our proposed data selection method achieves lower prediction error without requiring labeled validation data and can be optimized in a fast and federated procedure. The key insight of our work is that a method that directly estimates the benefit of acquiring data for test set prediction is particularly compatible with a decentralized market setting.

Federated Conformal Predictors for Distributed Uncertainty Quantification

Jun 01, 2023

Abstract:Conformal prediction is emerging as a popular paradigm for providing rigorous uncertainty quantification in machine learning since it can be easily applied as a post-processing step to already trained models. In this paper, we extend conformal prediction to the federated learning (FL) setting. The main challenge we face is data heterogeneity across clients, which violates the fundamental tenet of exchangeability required for conformal prediction. We propose a weaker notion of partial exchangeability, better suited to the FL setting, and use it to develop the Federated Conformal Prediction (FCP) framework. We show that FCP enjoys rigorous theoretical guarantees and excellent empirical performance on several computer vision and medical imaging datasets. Our results demonstrate a practical approach to incorporating meaningful uncertainty quantification in distributed and heterogeneous environments. The code used in our experiments is available at https://github.com/clu5/federated-conformal.
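For readers unfamiliar with the post-processing step the abstract refers to, the following is a minimal sketch of standard (centralized) split conformal prediction for classification. It is not the FCP method from the paper; the function names and the score choice (one minus the probability of the true class) are assumptions made for illustration.

```python
import math


def conformal_quantile(scores, alpha):
    """Finite-sample conformal quantile of calibration scores.

    Returns the k-th smallest score with k = ceil((n + 1) * (1 - alpha)),
    or infinity when the calibration set is too small for that level.
    """
    n = len(scores)
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        return float("inf")
    return sorted(scores)[k - 1]


def prediction_set(probs, qhat):
    """Keep every label whose score (1 - predicted probability) is <= qhat."""
    return [label for label, p in enumerate(probs) if 1.0 - p <= qhat]


# Hypothetical calibration scores: 1 - p(true label) on held-out data.
calibration_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]
qhat = conformal_quantile(calibration_scores, alpha=0.5)
labels = prediction_set([0.5, 0.25, 0.25], qhat)
```

Under exchangeability of calibration and test points, sets built this way contain the true label with probability at least 1 - alpha; the abstract's point is that client heterogeneity breaks this assumption in the federated setting.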

Estimating Test Performance for AI Medical Devices under Distribution Shift with Conformal Prediction

Jul 12, 2022

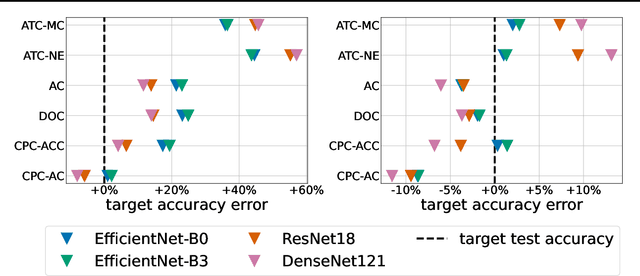

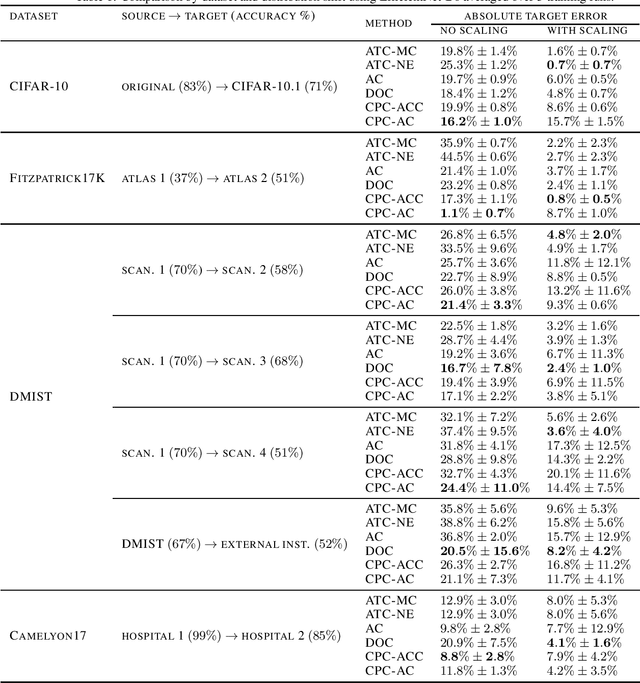

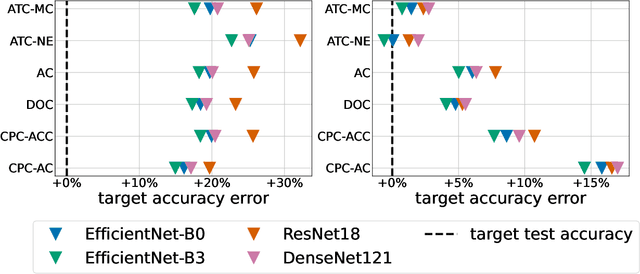

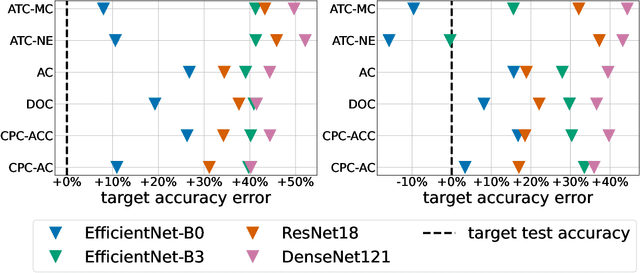

Abstract:Estimating the test performance of software AI-based medical devices under distribution shifts is crucial for evaluating the safety, efficiency, and usability prior to clinical deployment. Due to the nature of regulated medical device software and the difficulty in acquiring large amounts of labeled medical datasets, we consider the task of predicting the test accuracy of an arbitrary black-box model on an unlabeled target domain without modification to the original training process or any distributional assumptions of the original source data (i.e. we treat the model as a "black-box" and only use the predicted output responses). We propose a "black-box" test estimation technique based on conformal prediction and evaluate it against other methods on three medical imaging datasets (mammography, dermatology, and histopathology) under several clinically relevant types of distribution shift (institution, hardware scanner, atlas, hospital). We hope that by promoting practical and effective estimation techniques for black-box models, manufacturers of medical devices will develop more standardized and realistic evaluation procedures to improve the robustness and trustworthiness of clinical AI tools.

Improving Trustworthiness of AI Disease Severity Rating in Medical Imaging with Ordinal Conformal Prediction Sets

Jul 05, 2022

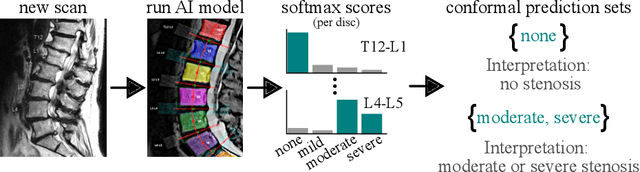

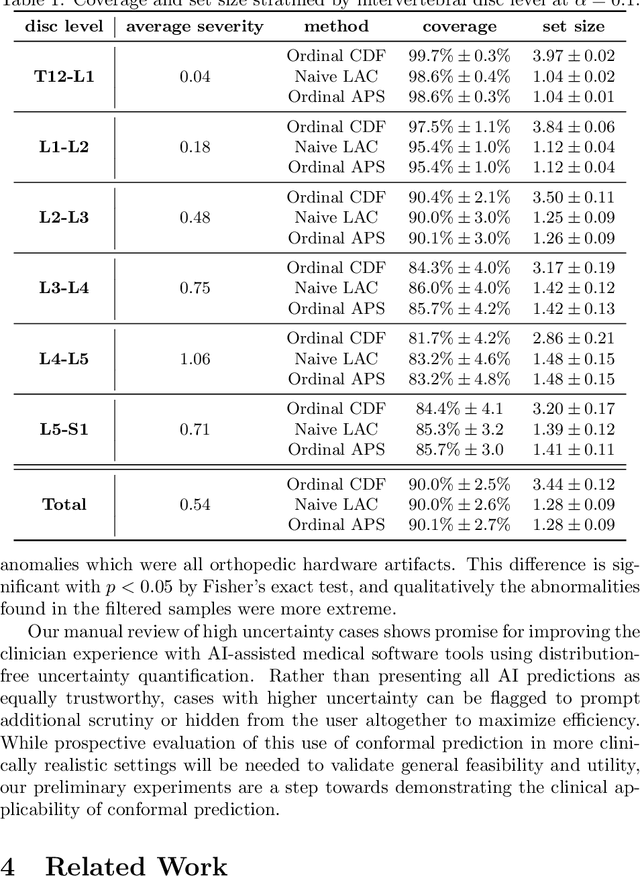

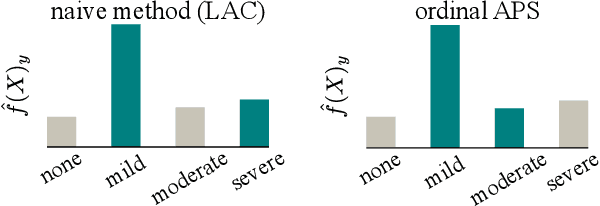

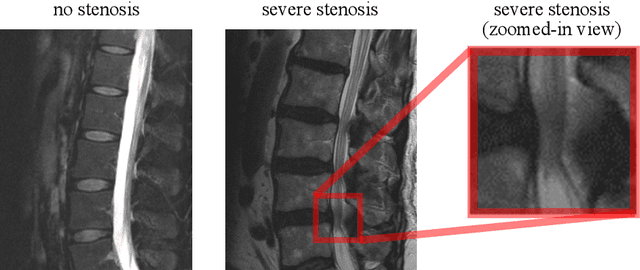

Abstract:The regulatory approval and broad clinical deployment of medical AI have been hampered by the perception that deep learning models fail in unpredictable and possibly catastrophic ways. A lack of statistically rigorous uncertainty quantification is a significant factor undermining trust in AI results. Recent developments in distribution-free uncertainty quantification present practical solutions for these issues by providing reliability guarantees for black-box models on arbitrary data distributions as formally valid finite-sample prediction intervals. Our work applies these new uncertainty quantification methods -- specifically conformal prediction -- to a deep-learning model for grading the severity of spinal stenosis in lumbar spine MRI. We demonstrate a technique for forming ordinal prediction sets that are guaranteed to contain the correct stenosis severity within a user-defined probability (confidence interval). On a dataset of 409 MRI exams processed by the deep-learning model, the conformal method provides tight coverage with small prediction set sizes. Furthermore, we explore the potential clinical applicability of flagging cases with high uncertainty predictions (large prediction sets) by quantifying an increase in the prevalence of significant imaging abnormalities (e.g. motion artifacts, metallic artifacts, and tumors) that could degrade confidence in predictive performance when compared to a random sample of cases.
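One way to form an ordinal prediction set is to grow a contiguous range of severity grades outward from the most likely grade until enough probability mass is covered. The sketch below illustrates that idea under stated assumptions: the function name is hypothetical, the threshold is taken as given (in practice it would be calibrated with conformal prediction), and the exact procedure in the paper may differ.

```python
def ordinal_prediction_set(probs, threshold):
    """Grow a contiguous set of ordinal labels around the argmax.

    At each step the neighbor (left or right) with the larger probability
    is added, until the covered mass reaches `threshold` or every label
    is included.
    """
    last = len(probs) - 1
    lo = hi = max(range(len(probs)), key=probs.__getitem__)
    mass = probs[lo]
    while mass < threshold and (lo > 0 or hi < last):
        left = probs[lo - 1] if lo > 0 else -1.0    # -1.0 marks "no neighbor"
        right = probs[hi + 1] if hi < last else -1.0
        if left >= right:
            lo -= 1
            mass += left
        else:
            hi += 1
            mass += right
    return list(range(lo, hi + 1))


# Hypothetical softmax output over four ordered severity grades.
grades = ordinal_prediction_set([0.125, 0.5, 0.25, 0.125], threshold=0.7)
```

Because the set is always a contiguous range of grades, a large set reads naturally as "somewhere between grade i and grade j", which is what makes flagging large sets for review clinically interpretable.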

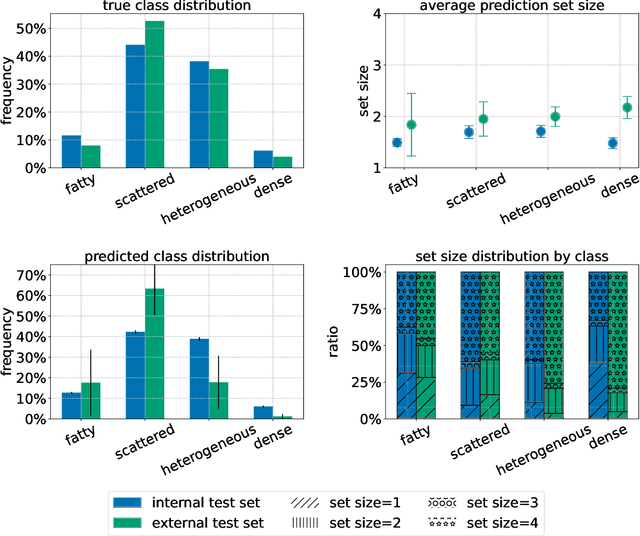

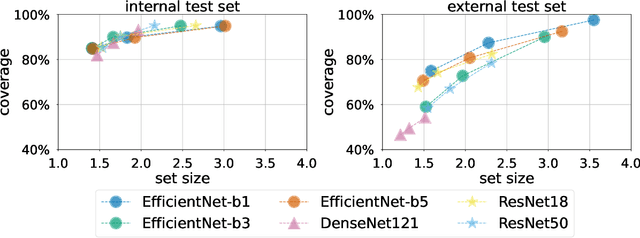

Three Applications of Conformal Prediction for Rating Breast Density in Mammography

Jun 23, 2022

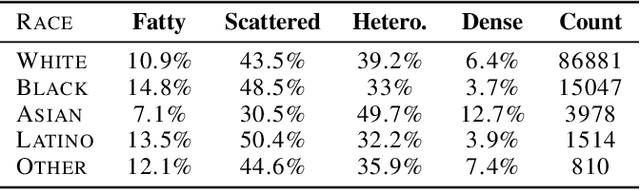

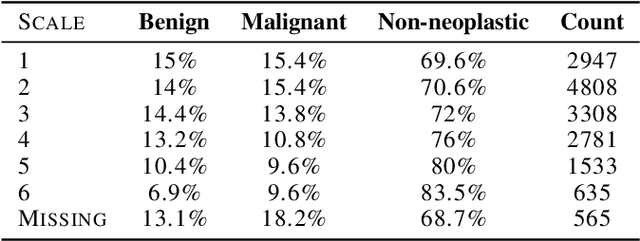

Abstract:Breast cancer is one of the most common cancers, and early detection through mammography screening is crucial for improving patient outcomes. Assessing mammographic breast density is clinically important because denser breasts carry higher risk and are more likely to occlude tumors. Manual assessment by experts is both time-consuming and subject to inter-rater variability. As such, there has been increased interest in the development of deep learning methods for mammographic breast density assessment. Although deep learning has demonstrated impressive performance in several prediction tasks for applications in mammography, clinical deployment of deep learning systems is still relatively rare; historically, mammography Computer-Aided Diagnosis (CAD) systems have over-promised and failed to deliver. This is in part due to the inability to intuitively quantify the uncertainty of the algorithm for the clinician, which would greatly enhance usability. Conformal prediction is well suited to increasing reliability and trust in deep learning tools, but it lacks realistic evaluations on medical datasets. In this paper, we present a detailed analysis of three possible applications of conformal prediction applied to medical imaging tasks: distribution shift characterization, prediction quality improvement, and subgroup fairness analysis. Our results show the potential of distribution-free uncertainty quantification techniques to enhance trust in AI algorithms and expedite their translation to usage.

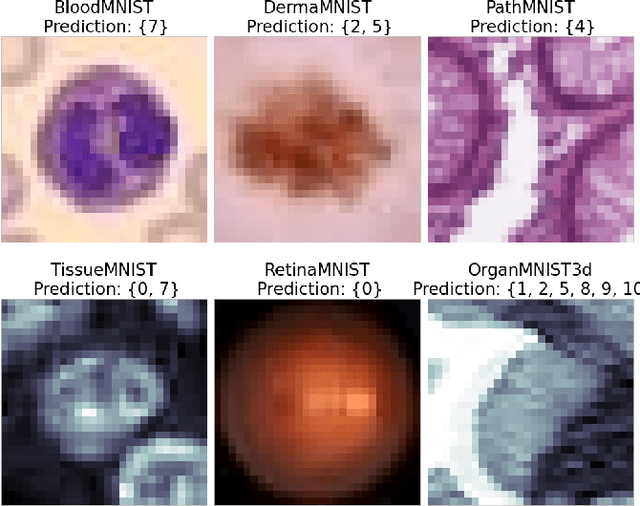

Distribution-Free Federated Learning with Conformal Predictions

Oct 14, 2021

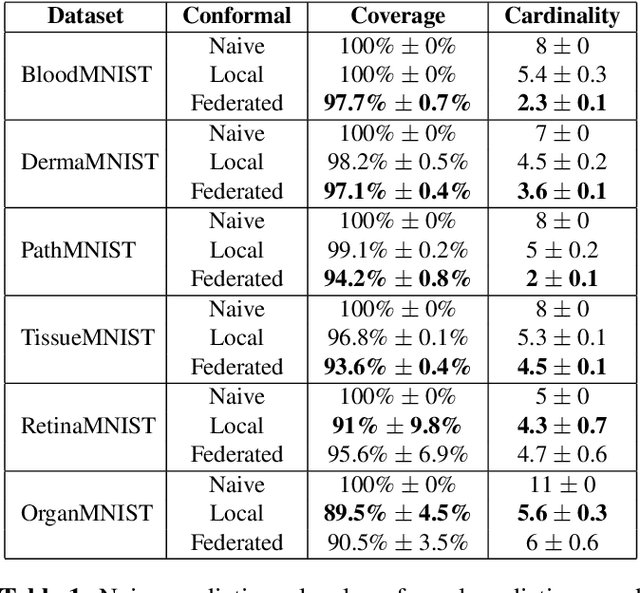

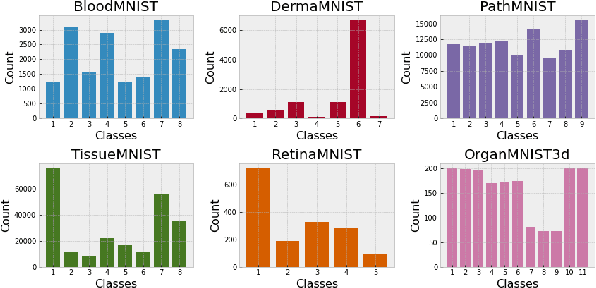

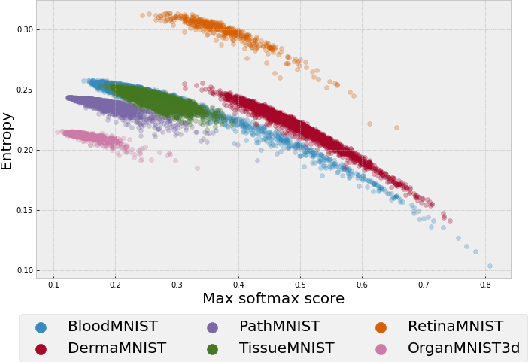

Abstract:Federated learning has attracted considerable interest for collaborative machine learning in healthcare to leverage separate institutional datasets while maintaining patient privacy. However, additional challenges such as poor calibration and lack of interpretability may also hamper widespread deployment of federated models into clinical practice and lead to user distrust or misuse of ML tools in high-stakes clinical decision-making. In this paper, we propose to address these challenges by incorporating an adaptive conformal framework into federated learning to ensure distribution-free prediction sets that provide coverage guarantees and uncertainty estimates without requiring any additional modifications to the model or assumptions. Empirical results on the MedMNIST medical imaging benchmark demonstrate that our federated method provides tighter coverage with lower average cardinality than local conformal predictions on 6 different medical imaging benchmark datasets in 2D and 3D multi-class classification tasks. Further, we correlate class entropy and prediction set size to assess task uncertainty with conformal methods.
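The two evaluation quantities mentioned in the abstract, coverage and average cardinality, can be computed from a list of prediction sets and the true labels. The helper below is an illustrative sketch with a hypothetical name, not code from the paper.

```python
def coverage_and_size(pred_sets, labels):
    """Return (empirical coverage, average prediction-set size).

    Coverage is the fraction of examples whose true label lies in the
    corresponding prediction set; size is the mean number of labels per set.
    """
    covered = sum(1 for s, y in zip(pred_sets, labels) if y in s)
    avg_size = sum(len(s) for s in pred_sets) / len(pred_sets)
    return covered / len(labels), avg_size


# Hypothetical prediction sets for four test examples.
sets = [[0], [0, 1], [2], [1, 2]]
truth = [0, 1, 1, 2]
coverage, avg_size = coverage_and_size(sets, truth)
```

A method is preferable when its coverage stays close to the target level 1 - alpha while its average set size is smaller.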

Deploying clinical machine learning? Consider the following...

Sep 14, 2021

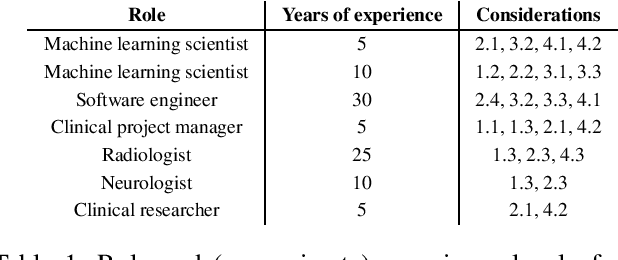

Abstract:Despite the intense attention and investment into clinical machine learning (CML) research, relatively few applications convert to clinical practice. While research is important in advancing the state-of-the-art, translation is equally important in bringing these technologies into a position to ultimately impact patient care and live up to extensive expectations surrounding AI in healthcare. To better characterize a holistic perspective among researchers and practitioners, we survey several participants with experience in developing CML for clinical deployment about their learned experiences. We collate these insights and identify several main categories of barriers and pitfalls in order to better design and develop clinical machine learning applications.

Fair Conformal Predictors for Applications in Medical Imaging

Sep 09, 2021

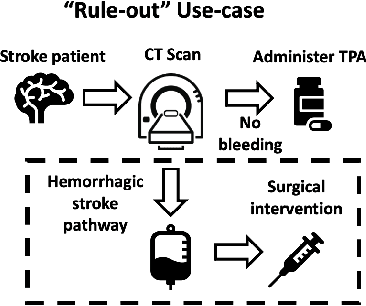

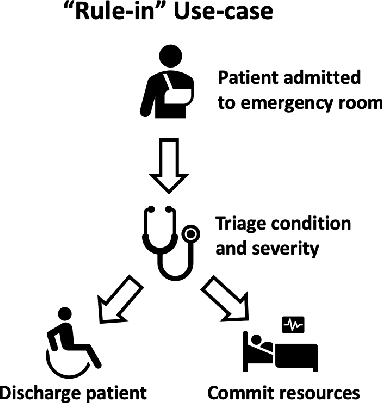

Abstract:Deep learning has the potential to augment many components of the clinical workflow, such as medical image interpretation. However, the translation of these black-box algorithms into clinical practice has been marred by their relative lack of transparency compared to conventional machine learning methods, hindering clinician trust in the systems for critical medical decision-making. Specifically, common deep learning approaches do not have intuitive ways of expressing uncertainty with respect to cases that might require further human review. Furthermore, the possibility of algorithmic bias has caused hesitancy regarding the use of developed algorithms in clinical settings. To these ends, we explore how conformal methods can complement deep learning models by providing a clinically intuitive way (by means of confidence prediction sets) of expressing model uncertainty as well as facilitating model transparency in clinical workflows. In this paper, we conduct a field survey with clinicians to assess clinical use-cases of conformal predictions. Next, we conduct experiments with mammographic breast density and dermatology photography datasets to demonstrate the utility of conformal predictions in "rule-in" and "rule-out" disease scenarios. Further, we show that conformal predictors can be used to equalize coverage with respect to patient demographics such as race and skin tone. We find conformal prediction to be a promising framework with the potential to increase clinical usability and transparency for better collaboration between deep learning algorithms and clinicians.
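A common way to equalize coverage across subgroups is to calibrate a separate conformal threshold per group. The sketch below shows that group-wise calibration under stated assumptions; the function name and the example data are hypothetical, and the paper's exact procedure may differ.

```python
import math
from collections import defaultdict


def groupwise_thresholds(scores, groups, alpha):
    """Compute one conformal threshold per subgroup.

    Each group's threshold is the k-th smallest of its own calibration
    scores, with k = ceil((n_g + 1) * (1 - alpha)); groups with too few
    calibration points get an infinite threshold (the full label set).
    """
    by_group = defaultdict(list)
    for score, group in zip(scores, groups):
        by_group[group].append(score)

    thresholds = {}
    for group, vals in by_group.items():
        k = math.ceil((len(vals) + 1) * (1 - alpha))
        thresholds[group] = sorted(vals)[k - 1] if k <= len(vals) else float("inf")
    return thresholds


# Hypothetical calibration scores for two demographic subgroups.
scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.8]
groups = ["a", "a", "a", "b", "b", "b"]
per_group = groupwise_thresholds(scores, groups, alpha=0.5)
```

At test time, each case would use the threshold of its own subgroup, so a group on which the model is less accurate receives larger prediction sets rather than lower coverage.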

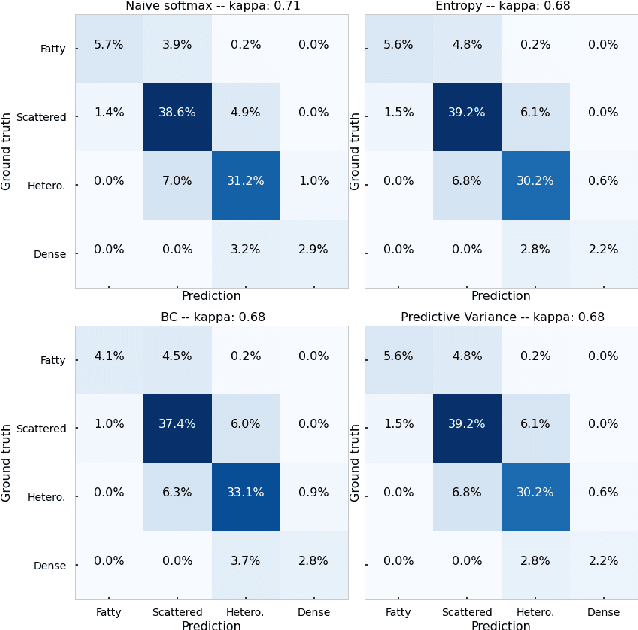

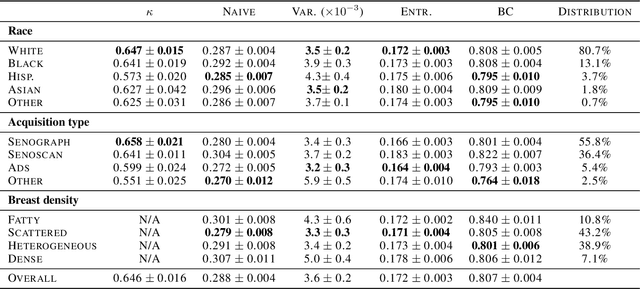

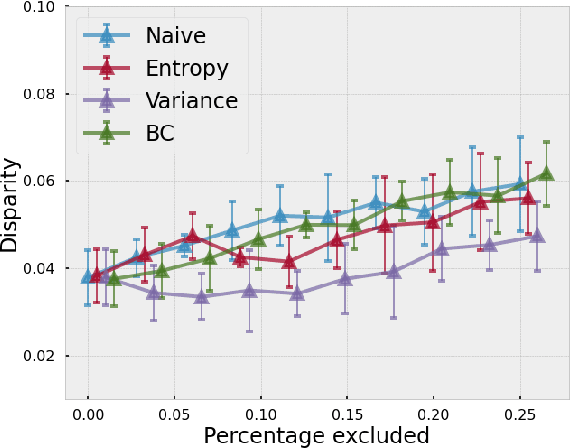

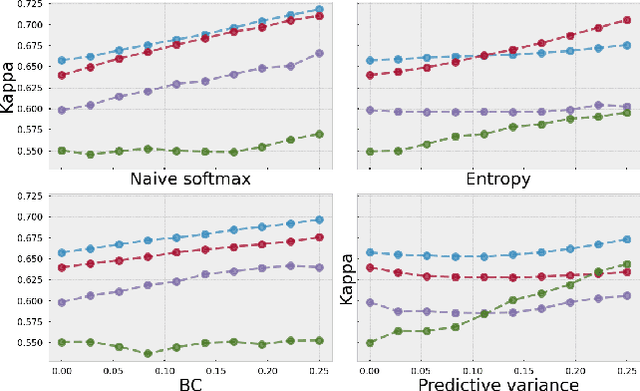

Evaluating subgroup disparity using epistemic uncertainty in mammography

Jul 15, 2021

Abstract:As machine learning (ML) continues to be integrated into healthcare systems that affect clinical decision making, new strategies will need to be incorporated in order to effectively detect and evaluate subgroup disparities to ensure accountability and generalizability in clinical workflows. In this paper, we explore how epistemic uncertainty can be used to evaluate disparity in patient demographics (race) and data acquisition (scanner) subgroups for breast density assessment on a dataset of 108,190 mammograms collected from 33 clinical sites. Our results show that even if aggregate performance is comparable, the choice of uncertainty quantification metric can significantly affect results at the subgroup level. We hope this analysis can promote further work on how uncertainty can be leveraged to increase transparency of machine learning applications for clinical deployment.
