CurveLanes

Papers and Code

Polar R-CNN: End-to-End Lane Detection with Fewer Anchors

Nov 03, 2024

Lane detection is a critical and challenging task in autonomous driving, particularly in real-world scenarios where traffic lanes can be slender, lengthy, and often obscured by other vehicles. Existing anchor-based methods typically rely on prior lane anchors to extract features and subsequently refine the location and shape of lanes. While these methods achieve high performance, manually setting prior anchors is cumbersome, and ensuring sufficient coverage across diverse datasets often requires a large number of dense anchors. Furthermore, the use of Non-Maximum Suppression (NMS) to eliminate redundant predictions complicates real-world deployment and may underperform in complex scenarios. In this paper, we propose Polar R-CNN, an end-to-end anchor-based method for lane detection. By incorporating both local and global polar coordinate systems, Polar R-CNN facilitates flexible anchor proposals and significantly reduces the number of anchors required without compromising performance. Additionally, we introduce a triplet head with a heuristic structure that supports an NMS-free paradigm, enhancing deployment efficiency and performance in scenarios with dense lanes. Our method achieves competitive results on five popular lane detection benchmarks (TuSimple, CULane, LLAMAS, CurveLanes, and DL-Rail) while maintaining a lightweight design and straightforward structure. Our source code is available at https://github.com/ShqWW/PolarRCNN.
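To make the idea of a polar-coordinate anchor concrete, the sketch below describes a straight lane anchor by an angle and a radius relative to a pole, then samples its x-coordinates at fixed image rows. The function name, the pole convention, and the normal-form line equation are assumptions chosen for illustration; this is not the Polar R-CNN implementation.

```python
import math


def sample_polar_anchor(theta, r, pole, ys):
    """Sample x-coordinates of a straight anchor given in polar (normal) form.

    The anchor is the set of points (x, y) satisfying
        (x - px) * cos(theta) + (y - py) * sin(theta) = r
    where (px, py) is the pole. This parameterization is a hypothetical
    choice for illustration only.
    """
    px, py = pole
    c, s = math.cos(theta), math.sin(theta)
    if abs(c) < 1e-9:
        # A horizontal line has no unique x for a given row.
        raise ValueError("anchor is horizontal; x is not a function of y")
    return [px + (r - (y - py) * s) / c for y in ys]


# A vertical anchor 5 pixels to the right of a pole at (10, 0).
xs = sample_polar_anchor(theta=0.0, r=5.0, pole=(10.0, 0.0), ys=[0, 50, 100])
```

Two numbers per anchor (angle and radius) are enough to place a straight line anywhere in the image, which is one way to see why a polar description can keep the anchor set small.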

LaneCorrect: Self-supervised Lane Detection

Apr 23, 2024

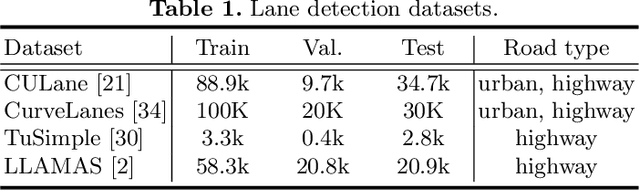

Lane detection has evolved to enable highly functional autonomous driving systems to understand driving scenes even in complex environments. In this paper, we work towards developing a generalized computer vision system able to detect lanes without using any annotation. We make the following contributions: (i) We illustrate how to perform unsupervised 3D lane segmentation by leveraging the distinctive intensity of lanes in the LiDAR point cloud frames, and then obtain noisy lane labels in the 2D plane by projecting the 3D points; (ii) We propose a novel self-supervised training scheme, dubbed LaneCorrect, that automatically corrects the lane labels by learning geometric consistency and instance awareness from adversarial augmentations; (iii) With the self-supervised pre-trained model, we distill to train a student network for detection on an arbitrary target lane dataset (e.g., TuSimple) without any human labels; (iv) We thoroughly evaluate our self-supervised method on four major lane detection benchmarks (TuSimple, CULane, CurveLanes and LLAMAS) and demonstrate excellent performance compared with existing supervised counterparts, while alleviating the domain gap more effectively, e.g., when training on CULane and testing on TuSimple.
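Contribution (i) can be pictured with a small sketch: keep the LiDAR points whose intensity is high, then project them into the image with a pinhole camera model. The fixed intensity threshold, the assumption that points are already in the camera frame, and all names and values below are illustrative assumptions rather than the LaneCorrect pipeline.

```python
def project_lane_points(points, intensity_threshold, fx, fy, cx, cy):
    """Keep high-intensity 3D points and project them with a pinhole camera.

    points: iterable of (x, y, z, intensity), assumed to be expressed in the
    camera frame with z pointing forward (a simplifying assumption).
    Returns a list of (u, v) pixel coordinates that can serve as noisy labels.
    """
    pixels = []
    for x, y, z, intensity in points:
        if intensity < intensity_threshold or z <= 0:
            continue  # skip low-reflectance points and points behind the camera
        pixels.append((fx * x / z + cx, fy * y / z + cy))
    return pixels


# Hypothetical points: only the first is both bright and in front of the camera.
cloud = [(1.0, 2.0, 10.0, 0.9), (0.0, 0.0, 5.0, 0.1), (1.0, 1.0, -1.0, 0.9)]
labels_2d = project_lane_points(cloud, 0.5, fx=100.0, fy=100.0, cx=50.0, cy=40.0)
```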

CLRerNet: Improving Confidence of Lane Detection with LaneIoU

May 15, 2023

Lane marker detection is a crucial component of autonomous driving and driver assistance systems. Modern deep lane detection methods with row-based lane representation exhibit excellent performance on lane detection benchmarks. Through preliminary oracle experiments, we first disentangle the lane representation components to determine the direction of our approach. We show that correct lane positions are already among the predictions of an existing row-based detector, and that confidence scores which accurately represent intersection-over-union (IoU) with ground truths are the most beneficial. Based on this finding, we propose LaneIoU, which correlates better with the metric by taking the local lane angles into consideration. We develop a novel detector, coined CLRerNet, featuring LaneIoU for the target assignment cost and loss functions, aiming at improved quality of confidence scores. Through careful and fair benchmarking, including cross validation, we demonstrate that CLRerNet outperforms the state of the art by a large margin, achieving an F1 score of 81.43% compared with 80.47% for the existing method on CULane, and 86.47% compared with 86.10% on CurveLanes.
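The role of local lane angles can be sketched as follows: a lane of constant perpendicular width covers a wider horizontal span on rows where it is tilted, so a row-wise IoU can widen each row's span accordingly. The code below is a simplified illustration under that assumption, with hypothetical function names and a clamped overlap; it is not the authors' exact LaneIoU formulation.

```python
import math


def half_widths(xs, dy, width):
    """Per-row horizontal half-width of a lane with constant perpendicular width.

    xs holds the lane's x-coordinate on evenly spaced rows (spacing dy).
    Requires at least two rows so a local slope can be estimated.
    """
    n = len(xs)
    out = []
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        dx = (xs[hi] - xs[lo]) / (hi - lo)  # local x change per row
        out.append(0.5 * width * math.hypot(dx, dy) / dy)
    return out


def angle_aware_iou(xs_a, xs_b, dy=1.0, width=2.0):
    """Row-wise IoU of two lanes sampled on the same rows."""
    wa = half_widths(xs_a, dy, width)
    wb = half_widths(xs_b, dy, width)
    inter = union = 0.0
    for xa, xb, ha, hb in zip(xs_a, xs_b, wa, wb):
        left = max(xa - ha, xb - hb)
        right = min(xa + ha, xb + hb)
        overlap = max(0.0, right - left)  # clamped: disjoint rows add nothing
        inter += overlap
        union += 2 * ha + 2 * hb - overlap
    return inter / union
```

With a row spacing of 1, a lane tilted at 45 degrees receives a horizontal half-width larger by a factor of about 1.41 than a vertical lane of the same width, which is the effect the angle term is meant to capture.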

RCLane: Relay Chain Prediction for Lane Detection

Jul 19, 2022

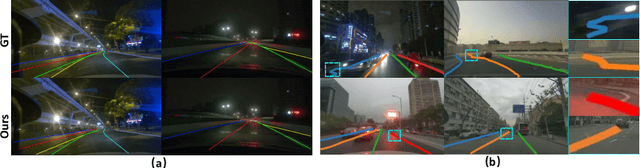

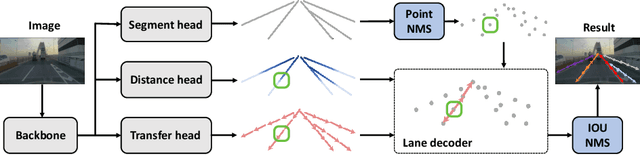

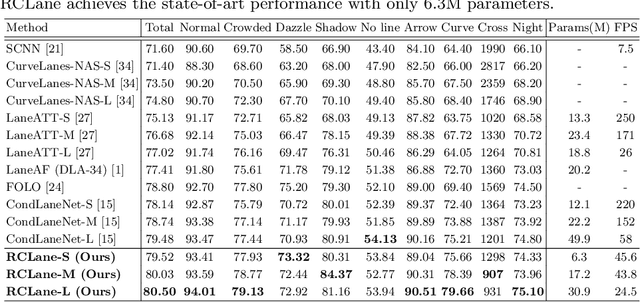

Lane detection is an important component of many real-world autonomous systems. Although a wide variety of lane detection approaches have been proposed, reporting steady benchmark improvements over time, lane detection remains a largely unsolved problem. This is because most existing lane detection methods treat lane detection as either a dense prediction or a detection task; few of them consider the unique topologies (Y-shape, fork-shape, nearly horizontal lanes) of the lane markers, which leads to sub-optimal solutions. In this paper, we present a new method for lane detection based on relay chain prediction. Specifically, our model predicts a segmentation map to classify the foreground and background regions. For each pixel point in the foreground region, we go through the forward branch and backward branch to recover the whole lane. Each branch decodes a transfer map and a distance map, which give the direction to move towards the next point and the number of steps to take, in order to progressively predict a relay station (next point). As such, our model is able to capture the keypoints along the lanes. Despite its simplicity, our strategy allows us to establish a new state of the art on four major benchmarks: TuSimple, CULane, CurveLanes and LLAMAS.
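The relay chain decoding described above can be sketched roughly as follows: starting from a foreground pixel, each branch reads a direction from its transfer map and a step count from its distance map, then walks point by point. The map layouts (nested lists indexed as `[row][col]`), the fixed step length, and the function names are assumptions for illustration, not the RCLane implementation.

```python
def decode_branch(start, transfer_map, distance_map, step=1.0):
    """Follow per-pixel directions from `start` for the predicted number of steps.

    transfer_map[row][col] is a (dx, dy) direction and distance_map[row][col]
    is an integer step count; both layouts are hypothetical.
    """
    height, width = len(transfer_map), len(transfer_map[0])
    x, y = start
    n_steps = distance_map[int(round(y))][int(round(x))]
    points = [(x, y)]
    for _ in range(n_steps):
        dx, dy = transfer_map[int(round(y))][int(round(x))]
        x, y = x + step * dx, y + step * dy
        if not (0 <= round(x) < width and 0 <= round(y) < height):
            break  # the chain left the image
        points.append((x, y))
    return points


def decode_lane(start, forward_maps, backward_maps):
    """Join the backward and forward chains into one ordered lane."""
    forward = decode_branch(start, *forward_maps)
    backward = decode_branch(start, *backward_maps)
    # Reverse the backward chain and drop its copy of the start point.
    return backward[:0:-1] + forward
```

As a usage example, on a 4x4 grid where the forward transfer map always points up `(0, -1)` and the backward one always points down `(0, 1)`, calling `decode_lane` from the pixel `(1, 1)` returns the points of column 1 ordered from the bottom of the image to the top, provided the distance maps allow enough steps.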

CurveLane-NAS: Unifying Lane-Sensitive Architecture Search and Adaptive Point Blending

Jul 23, 2020

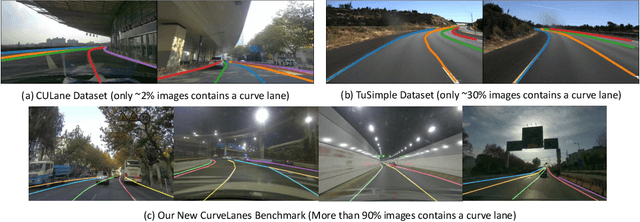

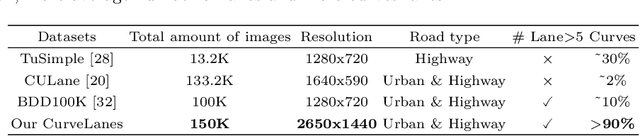

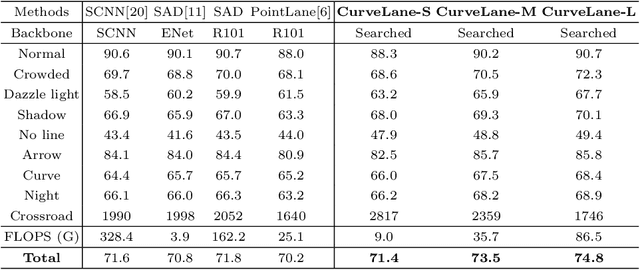

We address the curve lane detection problem, which poses more realistic challenges than conventional lane detection, to better facilitate modern assisted/autonomous driving systems. Current hand-designed lane detection methods are not robust enough to capture curve lanes, especially their remote parts, because they model neither long-range contextual information nor detailed curve trajectories. In this paper, we propose a novel lane-sensitive architecture search framework named CurveLane-NAS to automatically capture both long-range coherent and accurate short-range curve information while unifying architecture search and post-processing on curve lane predictions via point blending. It consists of three search modules: a) a feature fusion search module to find a better fusion of the local and global context for multi-level hierarchy features; b) an elastic backbone search module to explore an efficient feature extractor with good semantics and latency; c) an adaptive point blending module to search a multi-level post-processing refinement strategy to combine multi-scale head predictions. The unified framework ensures lane-sensitive predictions through the mutual guidance between NAS and adaptive point blending. Furthermore, we also release a more challenging benchmark named CurveLanes for addressing the most difficult curve lanes. It consists of 150K images with 680K labels. The new dataset can be downloaded at github.com/xbjxh/CurveLanes (already anonymized for this submission). Experiments on the new CurveLanes show that the SOTA lane detection methods suffer a substantial performance drop while our model can still reach an 80+% F1-score. Extensive experiments on traditional lane benchmarks such as CULane also demonstrate the superiority of our CurveLane-NAS, e.g. achieving a new SOTA 74.8% F1-score on CULane.
