Zhuoyi Lin

DRAGON: LLM-Driven Decomposition and Reconstruction Agents for Large-Scale Combinatorial Optimization

Jan 10, 2026

Abstract: Large Language Models (LLMs) have recently shown promise in addressing combinatorial optimization problems (COPs) through prompt-based strategies. However, their scalability and generalization remain limited, and their effectiveness diminishes as problem size increases, particularly in routing problems involving more than 30 nodes. We propose DRAGON, which stands for Decomposition and Reconstruction Agents Guided OptimizatioN, a novel framework that combines the strengths of metaheuristic design and LLM reasoning. Starting from an initial global solution, DRAGON autonomously identifies regions with high optimization potential and strategically decomposes large-scale COPs into manageable subproblems. Each subproblem is then reformulated as a concise, localized optimization task and solved through targeted LLM prompting guided by accumulated experiences. Finally, the locally optimized solutions are systematically reintegrated into the original global context to yield a significantly improved overall outcome. By continuously interacting with the optimization environment and leveraging an adaptive experience memory, the agents iteratively learn from feedback, effectively coupling symbolic reasoning with heuristic search. Empirical results show that, unlike existing LLM-based solvers limited to small-scale instances, DRAGON consistently produces feasible solutions on TSPLIB, CVRPLIB, and Weibull-5k bin packing benchmarks, and achieves near-optimal results (0.16% gap) on knapsack problems with over 3M variables. This work shows the potential of feedback-driven language agents as a new paradigm for generalizable and interpretable large-scale optimization.
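The decompose, solve locally, and reintegrate loop described in this abstract can be illustrated with a minimal sketch on a small TSP tour. This is not the DRAGON implementation: the function names, the window-selection heuristic (pick the longest contiguous stretch of the tour), and the brute-force local solver that stands in for the LLM prompting step are all assumptions made for illustration, and the experience memory is not modeled.

```python
import itertools
import math


def path_length(points, path):
    """Length of an open path visiting the given node indices in order."""
    return sum(math.dist(points[a], points[b]) for a, b in zip(path, path[1:]))


def tour_length(points, tour):
    """Length of the closed tour (returns to the starting node)."""
    return path_length(points, tour) + math.dist(points[tour[-1]], points[tour[0]])


def pick_window(points, tour, size):
    """Hypothetical 'optimization potential' heuristic: longest contiguous window."""
    best_start, best_len = 0, -1.0
    for start in range(len(tour) - size + 1):
        length = path_length(points, tour[start:start + size])
        if length > best_len:
            best_start, best_len = start, length
    return best_start


def solve_subproblem(points, segment):
    """Stand-in for the LLM call: brute-force the interior, endpoints stay fixed."""
    first, *inner, last = segment
    best = min(
        itertools.permutations(inner),
        key=lambda order: path_length(points, [first, *order, last]),
    )
    return [first, *best, last]


def improve(points, tour, size=6, rounds=10):
    """Repeatedly decompose, solve the subproblem, and splice the result back."""
    tour = list(tour)
    for _ in range(rounds):
        start = pick_window(points, tour, size)
        segment = tour[start:start + size]
        candidate = solve_subproblem(points, segment)
        if path_length(points, candidate) < path_length(points, segment) - 1e-12:
            tour[start:start + size] = candidate  # reintegrate into the global tour
        else:
            break  # the selected region could not be improved further
    return tour


# Eight points on a 4x2 grid and a deliberately scrambled initial tour.
points = [(0, 0), (1, 0), (2, 0), (3, 0), (3, 1), (2, 1), (1, 1), (0, 1)]
initial = [0, 2, 1, 3, 4, 6, 5, 7]
improved = improve(points, initial, size=4)
print(tour_length(points, initial), tour_length(points, improved))
```

Because the endpoints of each window are held fixed, a shorter path inside the window always yields a shorter or equal global tour, so the spliced solution stays feasible without re-checking the whole tour.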

Do Reviews Matter for Recommendations in the Era of Large Language Models?

Dec 15, 2025

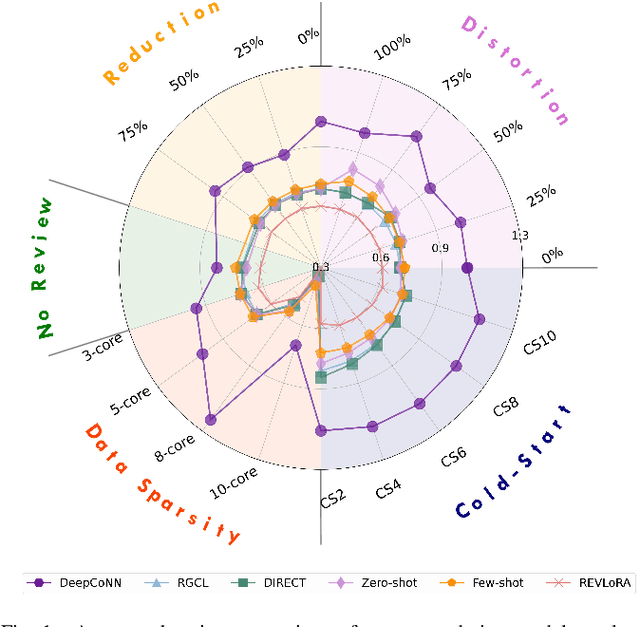

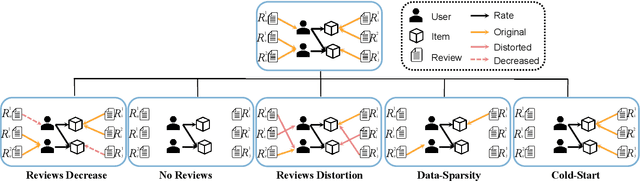

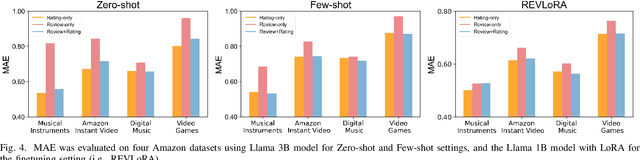

Abstract: With the advent of large language models (LLMs), the landscape of recommender systems is undergoing a significant transformation. Traditionally, user reviews have served as a critical source of rich, contextual information for enhancing recommendation quality. However, as LLMs demonstrate an unprecedented ability to understand and generate human-like text, this raises the question of whether explicit user reviews remain essential in the era of LLMs. In this paper, we provide a systematic investigation of the evolving role of text reviews in recommendation by comparing deep learning methods and LLM approaches. Particularly, we conduct extensive experiments on eight public datasets with LLMs and evaluate their performance in zero-shot, few-shot, and fine-tuning scenarios. We further introduce a benchmarking evaluation framework for review-aware recommender systems, RAREval, to comprehensively assess the contribution of textual reviews to the recommendation performance of review-aware recommender systems. Our framework examines various scenarios, including the removal of some or all textual reviews, random distortion, as well as recommendation performance in data sparsity and cold-start user settings. Our findings demonstrate that LLMs are capable of functioning as effective review-aware recommendation engines, generally outperforming traditional deep learning approaches, particularly in scenarios characterized by data sparsity and cold-start conditions. In addition, the removal of some or all textual reviews and random distortion do not necessarily lead to declines in recommendation accuracy. These findings motivate a rethinking of how user preference from text reviews can be more effectively leveraged. All code and supplementary materials are available at: https://github.com/zhytk/RAREval-data-processing.

Bridging Synthetic and Real Routing Problems via LLM-Guided Instance Generation and Progressive Adaptation

Nov 13, 2025

Abstract: Recent advances in Neural Combinatorial Optimization (NCO) methods have significantly improved the capability of neural solvers to handle synthetic routing instances. Nonetheless, existing neural solvers typically struggle to generalize effectively from synthetic, uniformly-distributed training data to real-world VRP scenarios, including widely recognized benchmark instances from TSPLib and CVRPLib. To bridge this generalization gap, we present Evolutionary Realistic Instance Synthesis (EvoReal), which leverages an evolutionary module guided by large language models (LLMs) to generate synthetic instances characterized by diverse and realistic structural patterns. Specifically, the evolutionary module produces synthetic instances whose structural attributes statistically mimic those observed in authentic real-world instances. Subsequently, pre-trained NCO models are progressively refined, first aligning them with these structurally enriched synthetic distributions and then further adapting them through direct fine-tuning on actual benchmark instances. Extensive experimental evaluations demonstrate that EvoReal markedly improves the generalization capabilities of state-of-the-art neural solvers, yielding a notably reduced performance gap compared to the optimal solutions on the TSPLib (1.05%) and CVRPLib (2.71%) benchmarks across a broad spectrum of problem scales.

A Spatial-Physics Informed Model for 3D Spiral Sample Scanned by SQUID Microscopy

Jul 16, 2025

Abstract: The development of advanced packaging is essential in the semiconductor manufacturing industry. However, non-destructive testing (NDT) of advanced packaging becomes increasingly challenging due to the depth and complexity of the layers involved. In such a scenario, magnetic field imaging (MFI) enables the imaging of magnetic fields generated by currents. For MFI to be effective in NDT, the magnetic fields must be converted into current density. This conversion has typically relied solely on a Fast Fourier Transform (FFT) for magnetic field inversion; however, the existing approach does not consider eddy current effects or image misalignment in the test setup. In this paper, we present a spatial-physics informed model (SPIM) designed for a 3D spiral sample scanned using Superconducting QUantum Interference Device (SQUID) microscopy. The SPIM encompasses three key components: (i) magnetic image enhancement by aligning all the "sharp" wire field signals to mitigate the eddy current effect using both in-phase (I-channel) and quadrature-phase (Q-channel) images; (ii) magnetic image alignment that addresses skew effects caused by any misalignment of the scanning SQUID microscope relative to the wire segments; and (iii) an inversion method for converting magnetic fields to magnetic currents by integrating the Biot-Savart Law with FFT. The results show that the SPIM improves I-channel sharpness by 0.3% and reduces Q-channel sharpness by 25%. Also, we were able to remove rotational and skew misalignments of 0.30 in a real image. Overall, SPIM highlights the potential of combining spatial analysis with physics-driven models in practical applications.

* copyright 2025 IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future media, including reprinting/republishing this material for advertising or promotional purposes, creating new collective works, for resale or redistribution to servers or lists, or reuse of any copyrighted component of this work in other works

Cross-Problem Learning for Solving Vehicle Routing Problems

Apr 17, 2024

Abstract: Existing neural heuristics often train a deep architecture from scratch for each specific vehicle routing problem (VRP), ignoring the transferable knowledge across different VRP variants. This paper proposes cross-problem learning to assist heuristics training for different downstream VRP variants. Particularly, we modularize neural architectures for complex VRPs into 1) the backbone Transformer for tackling the travelling salesman problem (TSP), and 2) the additional lightweight modules for processing problem-specific features in complex VRPs. Accordingly, we propose to pre-train the backbone Transformer for TSP, and then apply it in the process of fine-tuning the Transformer models for each target VRP variant. On the one hand, we fully fine-tune the trained backbone Transformer and problem-specific modules simultaneously. On the other hand, we only fine-tune small adapter networks along with the modules, keeping the backbone Transformer frozen. Extensive experiments on typical VRPs substantiate that 1) the full fine-tuning achieves significantly better performance than the one trained from scratch, and 2) the adapter-based fine-tuning also delivers comparable performance while being notably parameter-efficient. Furthermore, we empirically demonstrate the favorable effect of our method in terms of cross-distribution application and versatility.

Attention over Self-attention: Intention-aware Re-ranking with Dynamic Transformer Encoders for Recommendation

Jan 14, 2022

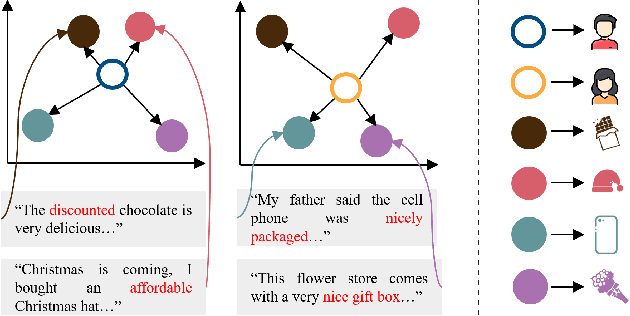

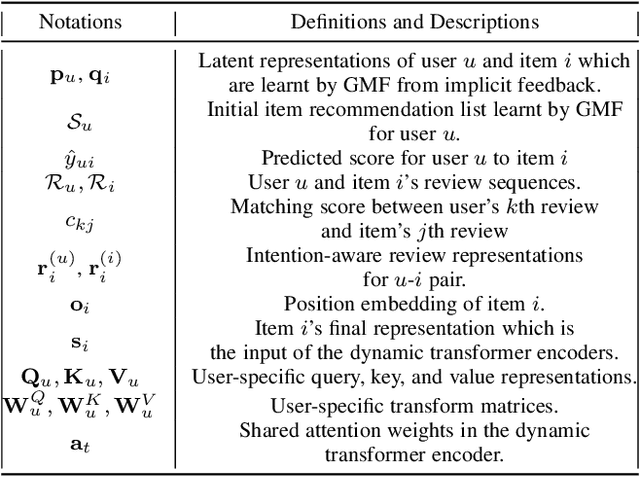

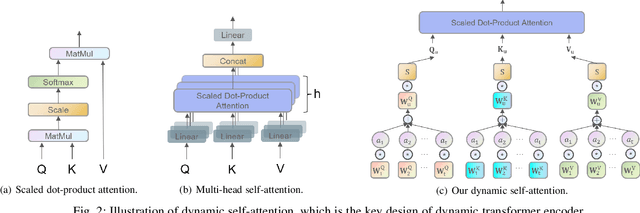

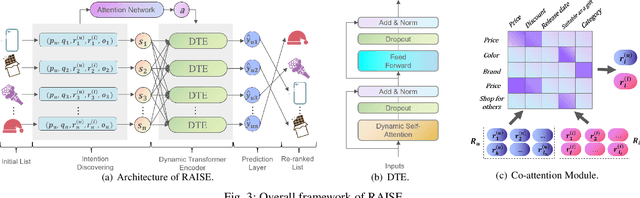

Abstract: Re-ranking models refine the item recommendation list generated by the prior global ranking model with intra-item relationships. However, most existing re-ranking solutions refine the recommendation list based on the implicit feedback with a shared re-ranking model, which regrettably ignores the intra-item relationships under diverse user intentions. In this paper, we propose a novel Intention-aware Re-ranking Model with Dynamic Transformer Encoder (RAISE), aiming to perform user-specific prediction for each target user based on her intentions. Specifically, we first propose to mine latent user intentions from text reviews with an intention discovering module (IDM). By differentiating the importance of review information with a co-attention network, the latent user intention can be explicitly modeled for each user-item pair. We then introduce a dynamic transformer encoder (DTE) to capture user-specific intra-item relationships among item candidates by seamlessly accommodating the learnt latent user intentions via IDM. As such, RAISE is able to perform user-specific prediction without increasing the depth (number of blocks) and width (number of heads) of the prediction model. An empirical study on four public datasets shows the superiority of our proposed RAISE, with up to 13.95%, 12.30%, and 13.03% relative improvements evaluated by Precision, MAP, and NDCG respectively.

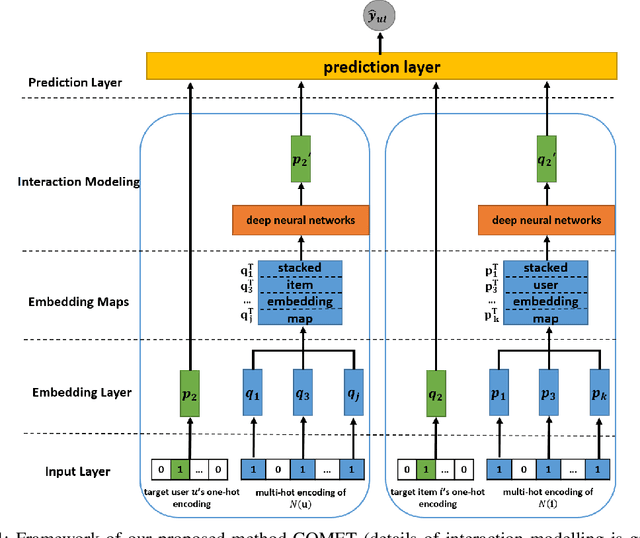

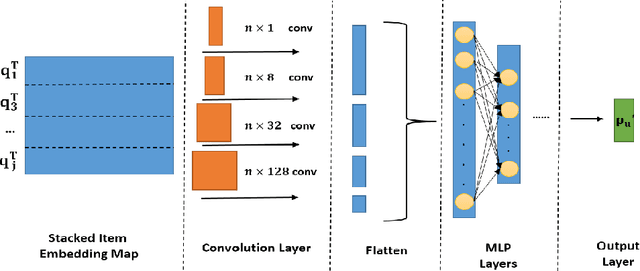

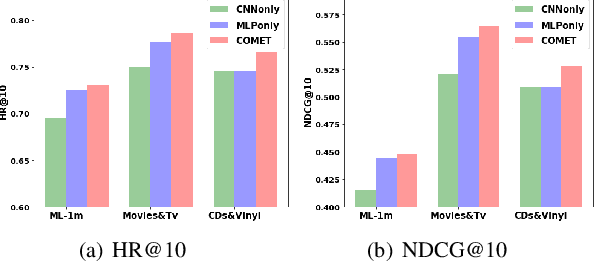

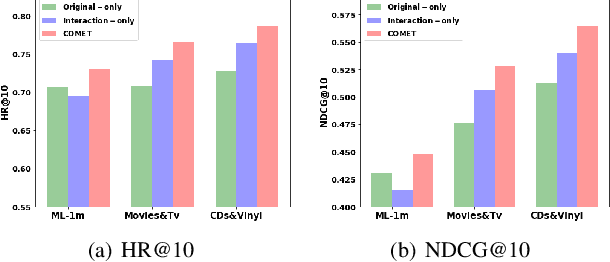

COMET: Convolutional Dimension Interaction for Deep Matrix Factorization

Aug 18, 2020

Abstract: Latent factor models play a dominant role among recommendation techniques. However, most of the existing latent factor models assume embedding dimensions are independent of each other, and thus regrettably ignore the interaction information across different embedding dimensions. In this paper, we propose a novel latent factor model called COMET (COnvolutional diMEnsion inTeraction), which provides the first attempt to model higher-order interaction signals among all latent dimensions in an explicit manner. To be specific, COMET stacks the embeddings of historical interactions horizontally, which results in two "embedding maps" that encode the original dimension information. In this way, users' and items' internal interactions can be exploited by convolutional neural networks with kernels of different sizes and a fully-connected multi-layer perceptron. Furthermore, the representations of users and items are enriched by the learnt interaction vectors, which can further be used to produce the final prediction. Extensive experiments and ablation studies on various public implicit feedback datasets clearly demonstrate the effectiveness and the rationality of our proposed method.

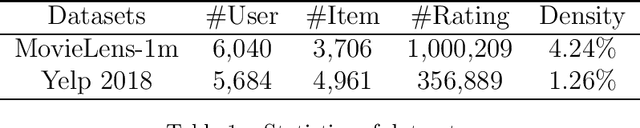

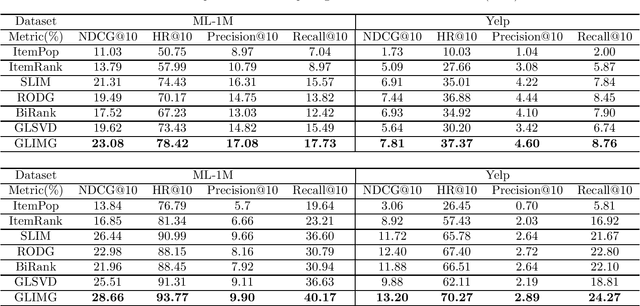

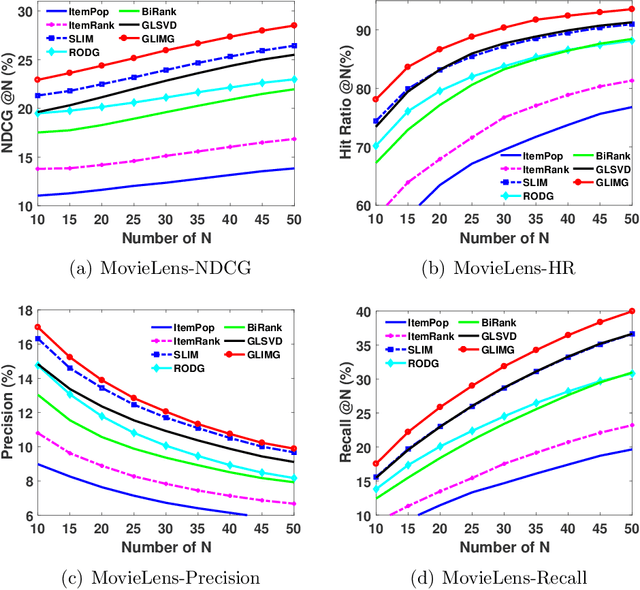

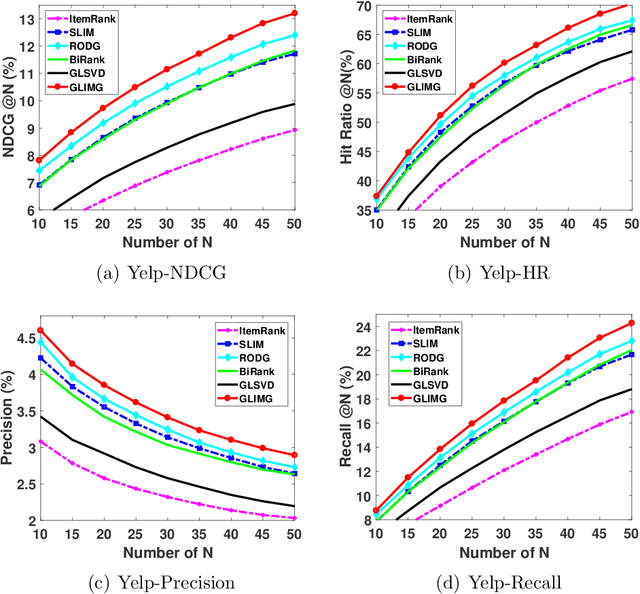

GLIMG: Global and Local Item Graphs for Top-N Recommender Systems

Aug 18, 2020

Abstract: Graph-based recommendation models work well for top-N recommender systems due to their capability to capture the potential relationships between entities. However, most of the existing methods only construct a single global item graph shared by all the users and regrettably ignore the diverse tastes between different user groups. Inspired by the success of local models for recommendation, this paper provides the first attempt to investigate multiple local item graphs along with a global item graph for graph-based recommendation models. We argue that recommendation on global and local graphs outperforms that on a single global graph or multiple local graphs. Specifically, we propose a novel graph-based recommendation model named GLIMG (Global and Local IteM Graphs), which simultaneously captures both the global and local user tastes. By integrating the global and local graphs into an adapted semi-supervised learning model, users' preferences on items are propagated globally and locally. Extensive experimental results on real-world datasets show that our proposed method consistently outperforms the state-of-the-art counterparts on the top-N recommendation task.
