Yue Wen

Helen

UI-Venus-1.5 Technical Report

Feb 09, 2026

Abstract:GUI agents have emerged as a powerful paradigm for automating interactions in digital environments, yet achieving both broad generality and consistently strong task performance remains challenging. In this report, we present UI-Venus-1.5, a unified, end-to-end GUI Agent designed for robust real-world applications. The proposed model family comprises two dense variants (2B and 8B) and one mixture-of-experts variant (30B-A3B) to meet various downstream application scenarios. Compared to our previous version, UI-Venus-1.5 introduces three key technical advances: (1) a comprehensive Mid-Training stage leveraging 10 billion tokens across 30+ datasets to establish foundational GUI semantics; (2) Online Reinforcement Learning with full-trajectory rollouts, aligning training objectives with long-horizon, dynamic navigation in large-scale environments; and (3) a single unified GUI Agent constructed via Model Merging, which synthesizes domain-specific models (grounding, web, and mobile) into one cohesive checkpoint. Extensive evaluations demonstrate that UI-Venus-1.5 establishes new state-of-the-art performance on benchmarks such as ScreenSpot-Pro (69.6%), VenusBench-GD (75.0%), and AndroidWorld (77.6%), significantly outperforming previous strong baselines. In addition, UI-Venus-1.5 demonstrates robust navigation capabilities across a variety of Chinese mobile apps, effectively executing user instructions in real-world scenarios. Code: https://github.com/inclusionAI/UI-Venus; Model: https://huggingface.co/collections/inclusionAI/ui-venus
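The abstract does not say which Model Merging technique is used. As a rough illustration only, the sketch below shows the simplest possible form, a weighted average of parameters across checkpoints that share the same architecture. The `Checkpoint` type, the `merge_checkpoints` function, and the toy parameter names are all hypothetical and are not taken from the UI-Venus code base.

```python
from typing import Dict, List, Sequence

# Hypothetical checkpoint format: parameter name -> flat list of weights.
Checkpoint = Dict[str, List[float]]


def merge_checkpoints(
    checkpoints: Sequence[Checkpoint], weights: Sequence[float]
) -> Checkpoint:
    """Linearly average parameters of same-architecture checkpoints.

    This is plain weighted averaging, used here as an assumed stand-in
    for whatever merging method the report actually applies.
    """
    if not checkpoints:
        raise ValueError("need at least one checkpoint")
    if len(checkpoints) != len(weights):
        raise ValueError("one weight per checkpoint is required")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    normalized = [w / total for w in weights]

    names = checkpoints[0].keys()
    for ckpt in checkpoints[1:]:
        if ckpt.keys() != names:
            raise ValueError("checkpoints must share parameter names")

    merged: Checkpoint = {}
    for name in names:
        size = len(checkpoints[0][name])
        merged[name] = [
            sum(w * ckpt[name][i] for w, ckpt in zip(normalized, checkpoints))
            for i in range(size)
        ]
    return merged


if __name__ == "__main__":
    # Toy stand-ins for the grounding, web, and mobile models.
    grounding = {"layer.w": [1.0, 2.0]}
    web = {"layer.w": [3.0, 4.0]}
    mobile = {"layer.w": [5.0, 6.0]}
    print(merge_checkpoints([grounding, web, mobile], [1.0, 2.0, 1.0]))
```

The per-model weights are a free design choice in this sketch; a real merge would typically tune them against held-out grounding, web, and mobile benchmarks.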

SplatBright: Generalizable Low-Light Scene Reconstruction from Sparse Views via Physically-Guided Gaussian Enhancement

Dec 21, 2025

Abstract:Low-light 3D reconstruction from sparse views remains challenging due to exposure imbalance and degraded color fidelity. While existing methods struggle with view inconsistency and require per-scene training, we propose SplatBright, which is, to our knowledge, the first generalizable 3D Gaussian framework for joint low-light enhancement and reconstruction from sparse sRGB inputs. Our key idea is to integrate physically guided illumination modeling with geometry-appearance decoupling for consistent low-light reconstruction. Specifically, we adopt a dual-branch predictor that provides stable geometric initialization of 3D Gaussian parameters. On the appearance side, illumination consistency leverages frequency priors to enable controllable and cross-view coherent lighting, while an appearance refinement module further separates illumination, material, and view-dependent cues to recover fine texture. To tackle the lack of large-scale geometrically consistent paired data, we synthesize dark views via a physics-based camera model for training. Extensive experiments on public and self-collected datasets demonstrate that SplatBright achieves superior novel view synthesis, cross-view consistency, and better generalization to unseen low-light scenes compared with both 2D and 3D methods.

Hyperspectral Super-Resolution with Inter-Image Variability via Degradation-based Low-Rank and Residual Fusion Method

Nov 19, 2025

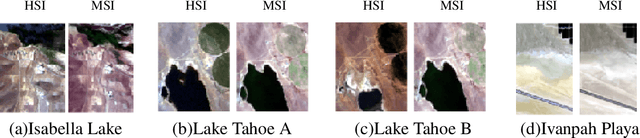

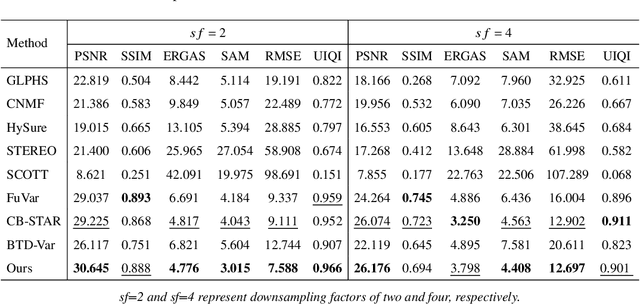

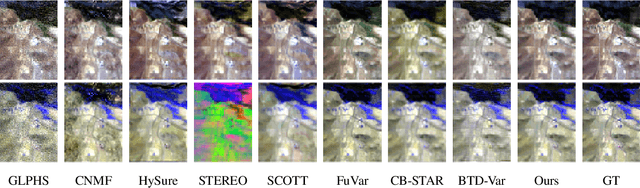

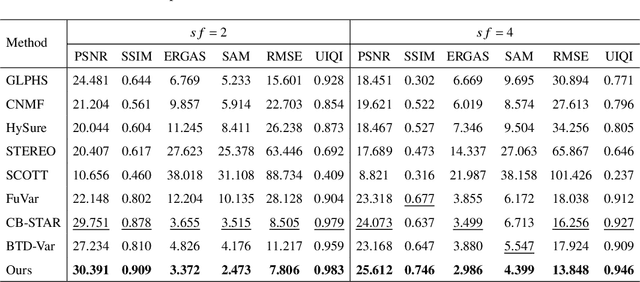

Abstract:The fusion of a hyperspectral image (HSI) with a multispectral image (MSI) provides an effective way to enhance the spatial resolution of the HSI. However, due to different acquisition conditions, there may exist spectral variability and spatially localized changes between the HSI and the MSI, referred to as inter-image variability, which can significantly affect fusion performance. Existing methods typically handle inter-image variability by applying direct transformations to the images themselves, which can exacerbate the ill-posedness of the fusion model. To address this challenge, we propose a Degradation-based Low-Rank and Residual Fusion (DLRRF) model. First, we model the spectral variability as a change in the spectral degradation operator. Second, to recover the spatial details lost to spatially localized changes, we decompose the target HSI into low-rank and residual components, where the latter is used to capture the lost details. By exploiting the spectral correlation within the images, we perform dimensionality reduction on both components. Additionally, we introduce an implicit regularizer to utilize the spatial prior information from the images. The proposed DLRRF model is solved using the Proximal Alternating Optimization (PAO) algorithm within a Plug-and-Play (PnP) framework, where the subproblem involving the implicit regularizer is addressed by an external denoiser. We further provide a comprehensive convergence analysis of the algorithm. Finally, extensive numerical experiments demonstrate that DLRRF achieves superior performance in fusing HSI and MSI with inter-image variability.

FreeDriveRF: Monocular RGB Dynamic NeRF without Poses for Autonomous Driving via Point-Level Dynamic-Static Decoupling

May 14, 2025

Abstract:Dynamic scene reconstruction for autonomous driving enables vehicles to perceive and interpret complex scene changes more precisely. Dynamic Neural Radiance Fields (NeRFs) have recently shown promising capability in scene modeling. However, many existing methods rely heavily on accurate pose inputs and multi-sensor data, leading to increased system complexity. To address this, we propose FreeDriveRF, which reconstructs dynamic driving scenes using only sequential RGB images without requiring pose inputs. We decouple dynamic and static parts at the early sampling level using semantic supervision, mitigating image blurring and artifacts. To overcome the challenges posed by object motion and occlusion with a monocular camera, we introduce a warped ray-guided dynamic object rendering consistency loss, utilizing optical flow to better constrain the dynamic modeling process. Additionally, we incorporate estimated dynamic flow to constrain the pose optimization process, improving the stability and accuracy of unbounded scene reconstruction. Extensive experiments conducted on the KITTI and Waymo datasets demonstrate the superior performance of our method in dynamic scene modeling for autonomous driving.

Exploring Embodied Emotional Communication: A Human-oriented Review of Mediated Social Touch

Feb 19, 2025

Abstract:This paper offers a structured understanding of mediated social touch (MST) using a human-oriented approach, through an extensive review of literature spanning tactile interfaces, emotional information, mapping mechanisms, and the dynamics of human-human and human-robot interactions. By investigating the existing and exploratory mapping strategies of the 37 selected MST cases, we established an emotional expression space for MSTs that accommodates a diverse spectrum of emotions by integrating the categorical and valence-arousal models, showcasing how emotional cues can be translated into tactile signals. Based on the expressive capacity of MSTs, a practical design space was structured, encompassing factors such as body locations, device form, tactile modalities, and parameters. We also proposed various design strategies for MSTs, including workflow, evaluation methods, and ethical and cultural considerations, as well as several future research directions. MSTs' potential is reflected not only in conveying emotional information but also in fostering empathy, comfort, and connection in both human-human and human-robot interactions. This paper aims to serve as a comprehensive reference for design researchers and practitioners, helping to expand the scope of emotional communication through MSTs, facilitate the exploration of diverse applications of affective haptics, and enhance the naturalness and sociability of haptic interaction.

KN-LIO: Geometric Kinematics and Neural Field Coupled LiDAR-Inertial Odometry

Jan 08, 2025

Abstract:Recent advancements in LiDAR-Inertial Odometry (LIO) have enabled a large number of applications. However, traditional LIO systems tend to focus more on localization than on mapping, with maps consisting mostly of sparse geometric elements, which is not ideal for downstream tasks. Emerging neural field technology has great potential in dense mapping, but pure LiDAR mapping struggles on highly dynamic vehicles. To mitigate this challenge, we present a new solution that tightly couples geometric kinematics with neural fields to enhance simultaneous state estimation and dense mapping capabilities. We propose both semi-coupled and tightly coupled Kinematic-Neural LIO (KN-LIO) systems that leverage online SDF decoding and iterated error-state Kalman filtering to fuse laser and inertial data. Our KN-LIO minimizes information loss and improves accuracy in state estimation, while also accommodating asynchronous multi-LiDAR inputs. Evaluations on diverse high-dynamic datasets demonstrate that our KN-LIO achieves performance on par with or superior to existing state-of-the-art solutions in pose estimation and offers improved dense mapping accuracy over pure LiDAR-based methods. The relevant code and datasets will be made available at https://**.

Virtual Physical Coupling of Two Lower-Limb Exoskeletons

Jul 20, 2023

Abstract:Physical interaction between individuals plays an important role in human motor learning and performance during shared tasks. Using robotic devices, researchers have studied the effects of dyadic haptic interaction, mostly focusing on the upper limb. Developing infrastructure that enables physical interactions between multiple individuals' lower limbs can extend the previous work and facilitate investigation of new dyadic lower-limb rehabilitation schemes. We designed a system to render haptic interactions between two users while they walk in multi-joint lower-limb exoskeletons. Specifically, we developed an infrastructure where desired interaction torques are commanded to the individual lower-limb exoskeletons based on the users' kinematics and the properties of the virtual coupling. In this pilot study, we demonstrated the capacity of the platform to render different haptic properties (e.g., soft and hard), different haptic connection types (e.g., bidirectional and unidirectional), and connections expressed in joint space and in task space. With haptic connection, dyads generated synchronized movement, and the difference between joint angles decreased as the virtual stiffness increased. This is the first study where multi-joint dyadic haptic interactions are created between lower-limb exoskeletons. This platform will be used to investigate the effects of haptic interaction on motor learning and task performance during walking, a complex and meaningful task for gait rehabilitation.
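The abstract does not give the coupling law. One common way to turn two users' kinematics into desired interaction torques is a virtual spring-damper between corresponding joints, sketched below in joint space for a single joint. The `VirtualCoupling` class, the `coupling_torques` function, the gain values, and the interpretation of "unidirectional" (only user A feels the connection) are assumptions made for illustration, not details from the paper.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class VirtualCoupling:
    """Hypothetical joint-space coupling parameters (not from the paper)."""

    stiffness: float            # virtual spring gain, N*m/rad
    damping: float              # virtual damper gain, N*m*s/rad
    bidirectional: bool = True  # False: only user A feels the connection


def coupling_torques(
    coupling: VirtualCoupling,
    q_a: float,
    dq_a: float,
    q_b: float,
    dq_b: float,
) -> Tuple[float, float]:
    """Return desired interaction torques (tau_a, tau_b) for one joint.

    The spring-damper pulls user A's joint toward user B's. In the
    bidirectional case user B receives the equal and opposite torque.
    """
    tau_a = coupling.stiffness * (q_b - q_a) + coupling.damping * (dq_b - dq_a)
    tau_b = -tau_a if coupling.bidirectional else 0.0
    return tau_a, tau_b


if __name__ == "__main__":
    soft = VirtualCoupling(stiffness=10.0, damping=1.0)
    hard = VirtualCoupling(stiffness=50.0, damping=1.0)
    # Same joint-angle mismatch, different virtual stiffness.
    print(coupling_torques(soft, q_a=0.1, dq_a=0.0, q_b=0.3, dq_b=0.0))
    print(coupling_torques(hard, q_a=0.1, dq_a=0.0, q_b=0.3, dq_b=0.0))
```

Under this assumed law, a larger stiffness produces a larger restoring torque for the same angle mismatch, which is consistent with the reported decrease in joint-angle difference as virtual stiffness increases.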

Haptic Transparency and Interaction Force Control for a Lower-Limb Exoskeleton

Jan 16, 2023

Abstract:It is an open problem to control the interaction forces of lower-limb exoskeletons designed for unrestricted overground walking, i.e., floating base exoskeletons with feet that contact the ground. For these types of exoskeletons, it is challenging to measure interaction forces as it is not feasible to implement force/torque sensors at every contact between the user and the exoskeleton. Moreover, it is important to compensate for the exoskeleton's whole-body gravitational and dynamical forces. Previous works either simplified the dynamic model by treating the legs as independent double pendulums, or they did not close the loop with interaction force feedback. This paper presents a novel method to calculate interaction torques during the complete gait cycle by using whole-body dynamics and joint torque measurements on a hip-knee exoskeleton. Furthermore, we propose a constrained optimization scheme combined with a virtual model controller to track desired interaction torques in a closed loop while respecting physical limits and safety considerations. Together, we call this approach whole-exoskeleton closed-loop compensation (WECC) control. We evaluated the haptic transparency and spring-damper rendering performance of WECC control on three subjects. We also compared the performance of WECC with a controller based on a simplified dynamic model and a passive version of the exoskeleton with disassembled drives. The WECC controller resulted in consistent interaction torque tracking during the whole gait cycle for both zero and nonzero desired interaction torques. In contrast, the simplified controller failed to track desired interaction torques during the stance phase. The proposed interaction force control method is especially beneficial for heavy lower-limb exoskeletons where the dynamics of the entire exoskeleton should be compensated.

Reinforcement Learning Control of Robotic Knee with Human in the Loop by Flexible Policy Iteration

Jun 16, 2020

Abstract:This study is motivated by a new class of challenging control problems: the automatic tuning of robotic knee control parameters with a human in the loop. In addition to the inter-person and intra-person variances inherent in such human-robot systems, human user safety and stability, as well as data and time efficiency, should also be taken into design consideration. Here, by data and time efficiency we mean that learning and adaptation of device configurations take place within countable gait cycles or within minutes. As solutions to this problem are not readily available, we propose a new policy iteration based adaptive dynamic programming algorithm, namely flexible policy iteration (FPI). We show that FPI solves for the control parameters via (weighted) least-squares while it incorporates data flexibly and utilizes prior knowledge. We provide analyses on stable control policies, non-increasing value functions that converge to Bellman optimality, and error bounds on the iterative value functions subject to approximation errors. We extensively evaluated the performance of FPI in a well-established locomotion simulator, OpenSim, under realistic conditions. By comparing FPI with three other comparable algorithms, we demonstrate FPI as a feasible data- and time-efficient design approach for adapting the control parameters of the prosthetic knee to co-adapt with the human user, who also exerts control over the prosthesis. As the proposed FPI algorithm does not require stringent constraints or peculiar assumptions, we expect that this reinforcement learning controller can potentially be applied to other challenging adaptive optimal control problems.
