Yanye Lu

Multi-level Asymmetric Contrastive Learning for Medical Image Segmentation Pre-training

Sep 21, 2023

Abstract:Contrastive learning, a powerful technique for learning image-level representations from unlabeled data, offers a promising way to resolve the tension between large-scale pre-training and limited labeled data. However, most existing contrastive learning strategies are designed mainly for downstream tasks on natural images, so they are sub-optimal, and can even be worse than learning from scratch, when directly applied to medical images, whose downstream tasks are usually segmentation. In this work, we propose a novel asymmetric contrastive learning framework named JCL for medical image segmentation with self-supervised pre-training. Specifically, (1) a novel asymmetric contrastive learning strategy is proposed to pre-train both the encoder and the decoder simultaneously in one stage, providing better initialization for segmentation models. (2) A multi-level contrastive loss is designed to take into account the correspondence among feature-level, image-level and pixel-level projections, ensuring that multi-level representations are learned by the encoder and decoder during pre-training. (3) Experiments on multiple medical image datasets indicate that our JCL framework outperforms existing SOTA contrastive learning strategies.
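For readers unfamiliar with contrastive objectives, the following is a minimal sketch of the generic InfoNCE loss on which many contrastive methods build. It is not the JCL multi-level loss, whose exact form the abstract does not give. The function names, the cosine similarity choice, and the temperature value are all assumptions made for illustration.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors given as lists."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(anchor, positive, negatives, temperature=0.1):
    """Generic InfoNCE loss for one anchor (illustrative, not the JCL loss).

    The loss is the negative log-probability of picking the positive
    among the positive and all negatives, using scaled cosine similarity.
    """
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    # Log-sum-exp with the maximum subtracted for numerical stability.
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_sum - logits[0]


# The loss is small when the positive matches the anchor and large otherwise.
aligned = info_nce([1.0, 0.0], [1.0, 0.0], [[0.0, 1.0], [-1.0, 0.0]])
misaligned = info_nce([1.0, 0.0], [0.0, 1.0], [[1.0, 0.0]])
```

A multi-level variant would presumably apply a loss of this kind to projections at several levels (feature, image, pixel) and combine them, but how JCL does so is not specified here.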

Branches Mutual Promotion for End-to-End Weakly Supervised Semantic Segmentation

Aug 09, 2023

Abstract:End-to-end weakly supervised semantic segmentation aims to optimize a segmentation model in a single-stage training process using only image-level annotations. Existing methods adopt an online-trained classification branch to provide pseudo annotations for supervising the segmentation branch. However, this strategy lets the classification branch dominate the whole concurrent training process, preventing the two branches from assisting each other. In our work, we treat the two branches equally by viewing them as different ways to generate the segmentation map, and add interactions on both their supervision and their operation to achieve mutual promotion. For this purpose, a bidirectional supervision mechanism is designed to enforce consistency between the outputs of the two branches. Thus, the segmentation branch can also give feedback to the classification branch to improve the quality of localization seeds. Moreover, our method designs interaction operations between the two branches so that they exchange knowledge and assist each other. Experiments indicate that our work outperforms existing end-to-end weakly supervised segmentation methods.

One-Pot Multi-Frame Denoising

Feb 18, 2023

Abstract:The performance of learning-based denoising largely depends on clean supervision. However, it is difficult to obtain clean images in many scenes. In contrast, capturing multiple noisy frames of the same field of view is feasible and often natural in real life. Therefore, it is necessary to avoid the restriction of clean labels and make full use of noisy data for model training. We therefore propose an unsupervised learning strategy named one-pot denoising (OPD) for multi-frame images. OPD is the first proposed unsupervised multi-frame denoising (MFD) method. Unlike traditional supervision schemes, including both supervised Noise2Clean (N2C) and unsupervised Noise2Noise (N2N), OPD performs mutual supervision among all of the frames, which gives learning more diverse supervision and allows models to mine the correlation among frames more deeply. N2N is also shown to be a simplified case of the proposed OPD. From the perspectives of data allocation and loss function, two specific implementations, random coupling (RC) and alienation loss (AL), are provided to accomplish OPD during model training. In practice, our experiments demonstrate that OPD achieves SOTA performance among unsupervised denoising methods and is comparable to supervised N2C methods for synthetic Gaussian and Poisson noise and for real-world optical coherence tomography (OCT) speckle noise.
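The idea of mutual supervision among frames can be illustrated with a small sketch. This is only one plausible reading of "random coupling", namely drawing a random pair of distinct noisy frames to act as network input and training target. The abstract does not give the actual RC procedure, and the function names below are hypothetical.

```python
import random


def random_coupling(num_frames, rng):
    """Draw indices of two distinct noisy frames: (input, target).

    Assumed reading of random coupling, not the published procedure.
    """
    i, j = rng.sample(range(num_frames), 2)
    return i, j


def all_couplings(num_frames):
    """Every ordered pair of distinct frames that could supervise each other."""
    return [(i, j)
            for i in range(num_frames)
            for j in range(num_frames)
            if i != j]


rng = random.Random(0)
pair = random_coupling(4, rng)      # one training pair out of four frames
four_frame_pairs = all_couplings(4)  # 12 ordered pairs
two_frame_pairs = all_couplings(2)   # [(0, 1), (1, 0)]
```

With only two frames the set of pairs reduces to each frame supervising the other, which matches the usual Noise2Noise pairing and is consistent with the statement that N2N is a simplified case of OPD.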

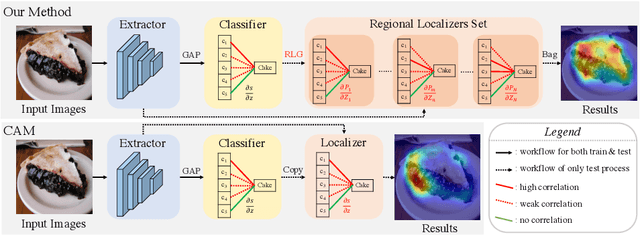

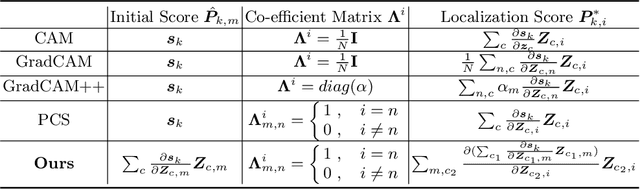

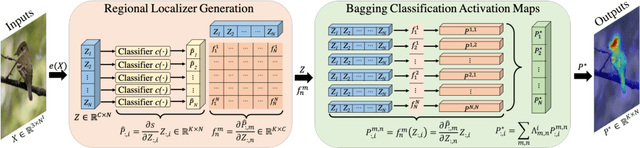

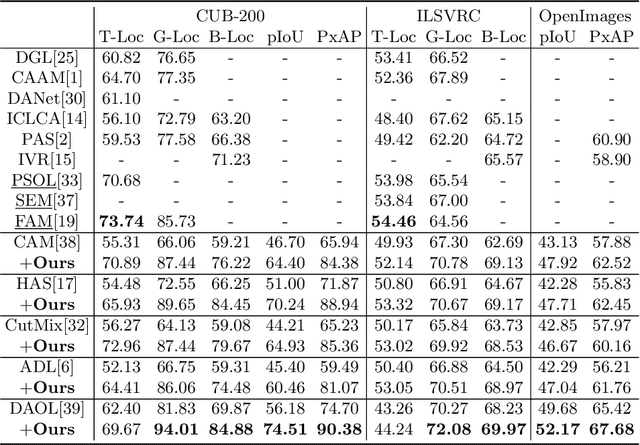

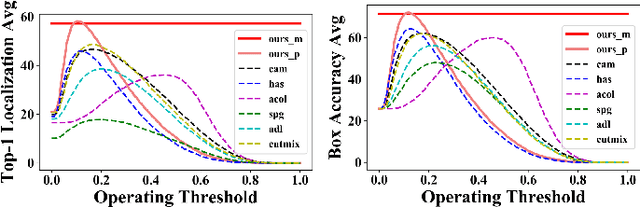

Bagging Regional Classification Activation Maps for Weakly Supervised Object Localization

Jul 16, 2022

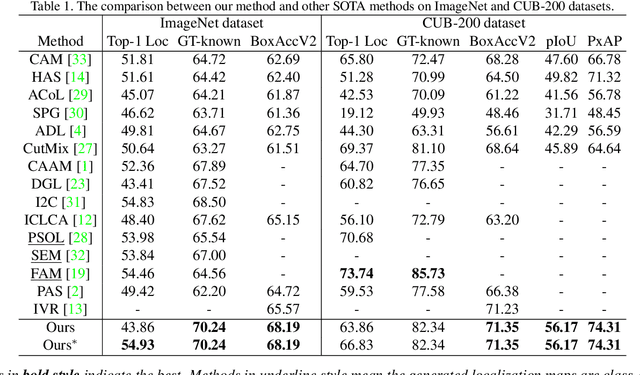

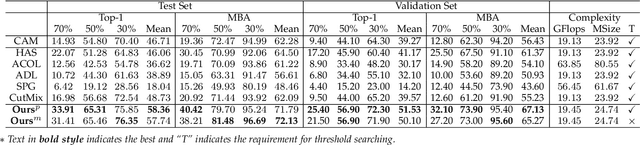

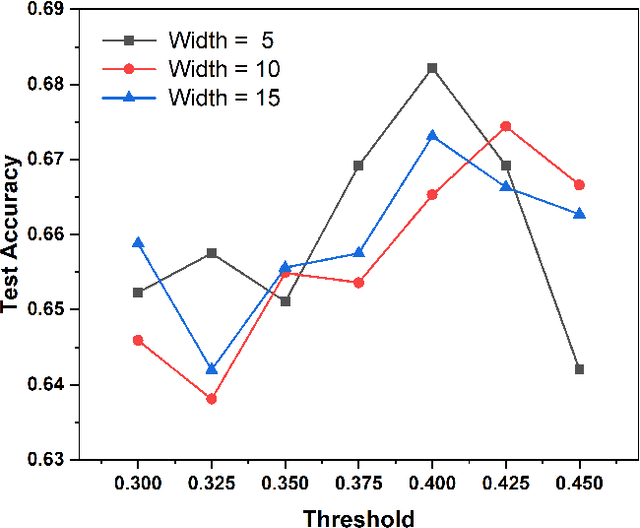

Abstract:Classification activation map (CAM), which uses the classification structure to generate pixel-wise localization maps, is a crucial mechanism for weakly supervised object localization (WSOL). However, CAM directly uses the classifier trained on image-level features to locate objects, making it prefer to discern global discriminative factors rather than regional object cues. Thus only the discriminative locations are activated when pixel-level features are fed into this classifier. To solve this issue, this paper develops a plug-and-play mechanism called BagCAMs to better project a well-trained classifier for the localization task without refining or re-training the baseline structure. BagCAMs adopts a proposed regional localizer generation (RLG) strategy to define a set of regional localizers and then derive them from a well-trained classifier. These regional localizers can be viewed as base learners that only discern region-wise object factors for localization tasks, and their results can be effectively weighted by BagCAMs to form the final localization map. Experiments indicate that adopting the proposed BagCAMs improves the performance of baseline WSOL methods to a great extent and obtains state-of-the-art performance on three WSOL benchmarks. Code is released at https://github.com/zh460045050/BagCAMs.
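The baseline CAM computation that the abstract criticizes can be sketched as follows. This is the standard CAM formulation (a linear classifier on globally average-pooled features, reused per pixel), not the BagCAMs method. The tiny feature tensor and the weights are made-up values for illustration.

```python
def global_average_pool(features):
    """Average each channel over space. features: C channels of H x W grids."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
            for ch in features]


def class_score(features, weights):
    """Image-level score: linear classifier applied to the pooled feature."""
    pooled = global_average_pool(features)
    return sum(w * p for w, p in zip(weights, pooled))


def class_activation_map(features, weights):
    """Pixel-level map: the same classifier weights applied at every location."""
    height, width = len(features[0]), len(features[0][0])
    return [[sum(w * ch[y][x] for w, ch in zip(weights, features))
             for x in range(width)]
            for y in range(height)]


# Two channels on a 2 x 2 grid, with hypothetical weights for one class.
features = [[[1.0, 0.0], [0.0, 0.0]],
            [[0.0, 0.0], [0.0, 2.0]]]
weights = [1.0, 0.5]
cam = class_activation_map(features, weights)
score = class_score(features, weights)
cam_mean = sum(sum(row) for row in cam) / 4
```

Because the classifier is linear, the spatial mean of the map equals the image-level score. The classifier was only ever trained to get that mean right, which is one way to see why individual pixel scores favor globally discriminative cues.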

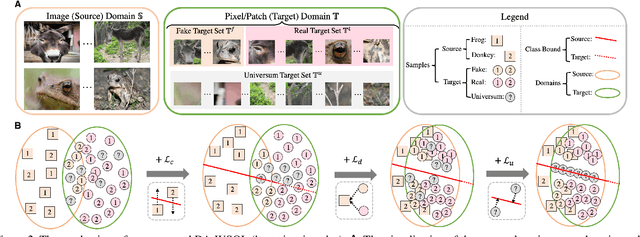

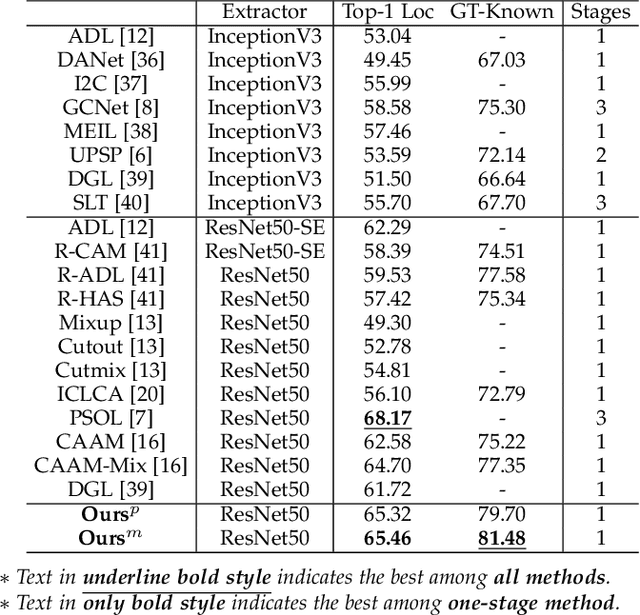

Weakly Supervised Object Localization as Domain Adaption

Mar 25, 2022

Abstract:Weakly supervised object localization (WSOL) focuses on localizing objects only with the supervision of image-level classification masks. Most previous WSOL methods follow the classification activation map (CAM), which localizes objects based on the classification structure with the multi-instance learning (MIL) mechanism. However, the MIL mechanism makes CAM activate only discriminative object parts rather than the whole object, weakening its localization performance. To avoid this problem, this work provides a novel perspective that models WSOL as a domain adaption (DA) task, where the score estimator trained on the source/image domain is tested on the target/pixel domain to locate objects. Under this perspective, a DA-WSOL pipeline is designed to better integrate DA approaches into WSOL and enhance localization performance. It uses a proposed target sampling strategy to select different types of target samples. Based on these types of target samples, a domain adaption localization (DAL) loss is developed. It aligns the feature distribution between the two domains by DA and makes the estimator perceive target domain cues by Universum regularization. Experiments show that our pipeline outperforms SOTA methods on multiple benchmarks. Code is released at https://github.com/zh460045050/DA-WSOL_CVPR2022.

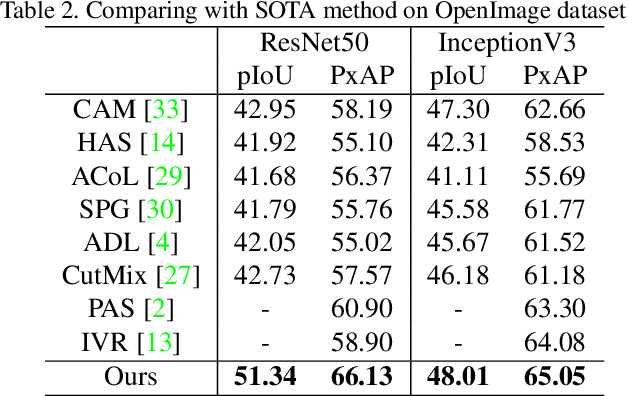

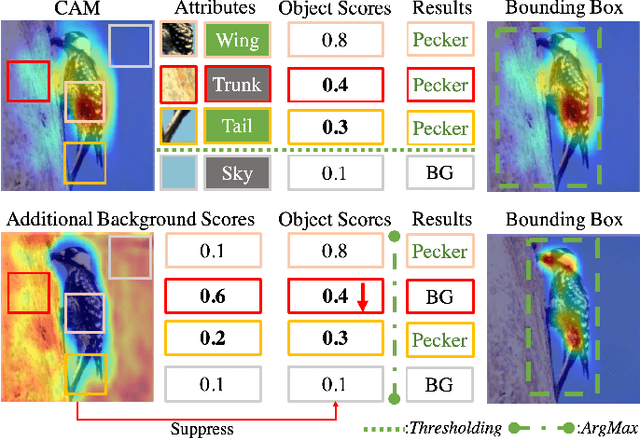

Background-aware Classification Activation Map for Weakly Supervised Object Localization

Dec 29, 2021

Abstract:Weakly supervised object localization (WSOL) relaxes the requirement of dense annotations for object localization by using image-level classification masks to supervise its learning process. However, current WSOL methods suffer from excessive activation of background locations and need post-processing to obtain the localization mask. This paper attributes these issues to the unawareness of background cues, and proposes the background-aware classification activation map (B-CAM) to simultaneously learn localization scores of both object and background with only image-level labels. In B-CAM, two image-level features, aggregated from pixel-level features of potential background and object locations, are used to purify the object feature from the object-related background and to represent the feature of the pure-background sample, respectively. Based on these two features, both the object classifier and the background classifier are learned to determine the binary object localization mask. B-CAM can be trained in an end-to-end manner based on a proposed stagger classification loss, which not only improves object localization but also suppresses background activation. Experiments show that B-CAM outperforms one-stage WSOL methods on the CUB-200, OpenImages and VOC2012 datasets.

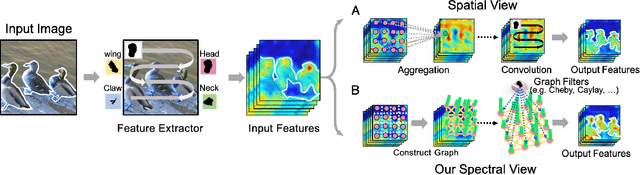

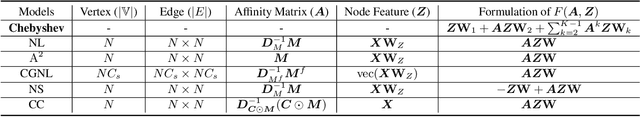

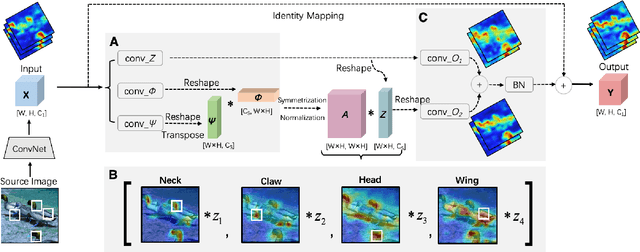

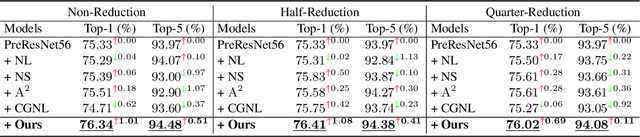

Unifying Nonlocal Blocks for Neural Networks

Aug 17, 2021

Abstract:Nonlocal-based blocks are designed to capture long-range spatial-temporal dependencies in computer vision tasks. Although they have shown excellent performance, they still lack a mechanism to encode the rich, structured information among elements in an image or video. In this paper, to theoretically analyze the properties of these nonlocal-based blocks, we provide a new perspective that interprets them as a set of graph filters generated on a fully-connected graph. Specifically, when the Chebyshev graph filter is chosen, a unified formulation can be derived for explaining and analyzing the existing nonlocal-based blocks (e.g., nonlocal block, nonlocal stage, double attention block). Furthermore, by considering spectral properties, we propose an efficient and robust spectral nonlocal block, which is more robust and flexible in capturing long-range dependencies when inserted into deep neural networks than the existing nonlocal blocks. Experimental results demonstrate the clear-cut improvements and practical applicability of our method on image classification, action recognition, semantic segmentation, and person re-identification tasks.
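The graph-filter view can be made concrete with a small sketch: treat each position as a node of a fully-connected graph, build a row-normalized affinity matrix A from pairwise feature similarity, and apply a first-order polynomial filter. This is a simplification for illustration, not the spectral nonlocal block from the paper. The softmax dot-product affinity and the scalar coefficients `theta0` and `theta1` (standing in for learned weight matrices) are assumptions.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def affinity(x):
    """Row-normalized affinity on a fully-connected graph. x: N nodes x C features."""
    return [softmax([sum(a * b for a, b in zip(xi, xj)) for xj in x])
            for xi in x]


def nonlocal_filter(x, theta0=1.0, theta1=1.0):
    """First-order graph filter: theta0 * X + theta1 * A X (illustrative only)."""
    a = affinity(x)
    n, c = len(x), len(x[0])
    out = []
    for i in range(n):
        # Aggregate features from every node, weighted by affinity to node i.
        agg = [sum(a[i][j] * x[j][k] for j in range(n)) for k in range(c)]
        out.append([theta0 * x[i][k] + theta1 * agg[k] for k in range(c)])
    return out


x = [[1.0, 0.0], [1.0, 0.0]]  # two identical nodes
a = affinity(x)
y = nonlocal_filter(x)
identity = nonlocal_filter(x, theta1=0.0)
```

With `theta0 = theta1 = 1` the filter has the residual-plus-aggregation shape of a basic nonlocal operation. Higher-order terms in A would give richer polynomial filters, which is the direction the Chebyshev formulation in the abstract points to.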

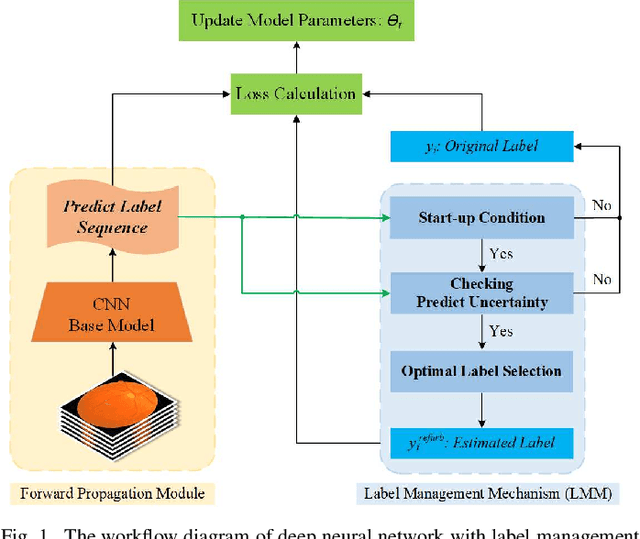

A Label Management Mechanism for Retinal Fundus Image Classification of Diabetic Retinopathy

Jun 23, 2021

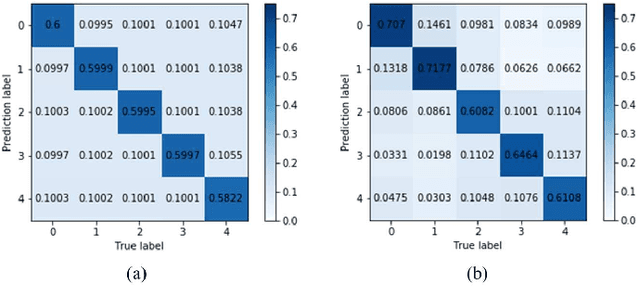

Abstract:Diabetic retinopathy (DR) remains the most prevalent cause of vision impairment and irreversible blindness in working-age adults. Due to the renaissance of deep learning (DL), DL-based DR diagnosis has become a promising tool for the early screening and severity grading of DR. However, training deep neural networks (DNNs) requires an enormous amount of carefully labeled data. Noisy labels may be introduced when labeling large amounts of data, degrading model performance. In this work, we propose a novel label management mechanism (LMM) for DNNs to overcome overfitting on noisy data. LMM uses the maximum a posteriori probability (MAP) from Bayesian statistics and a time-weighted technique to selectively correct the labels of unclean data, which gradually purifies the training data and improves classification performance. Comprehensive experiments on both synthetic noise data (Messidor & our collected DR dataset) and real-world noise data (ANIMAL-10N) demonstrate that LMM boosts model performance and is superior to three state-of-the-art methods.

Learning the Superpixel in a Non-iterative and Lifelong Manner

Mar 19, 2021

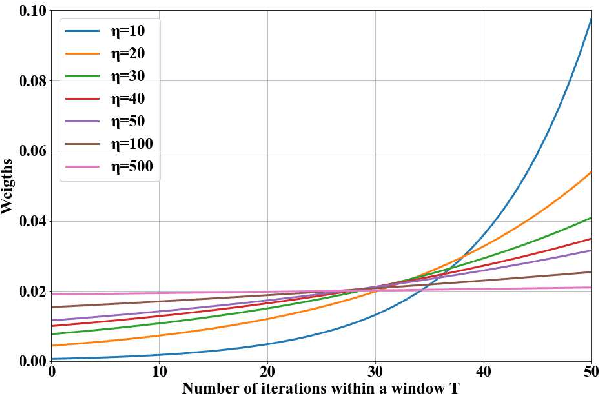

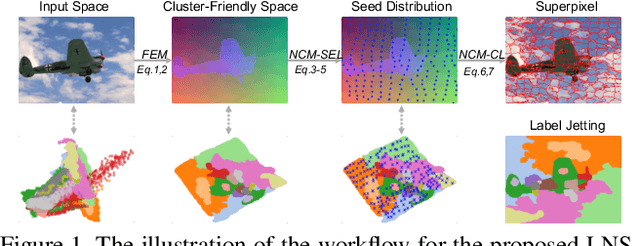

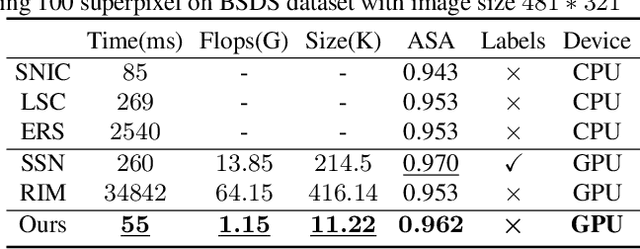

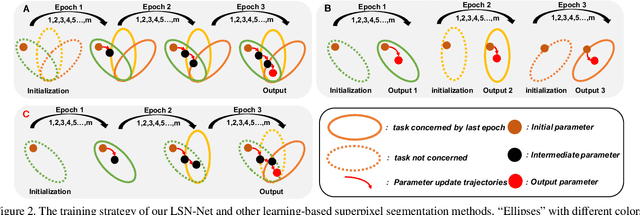

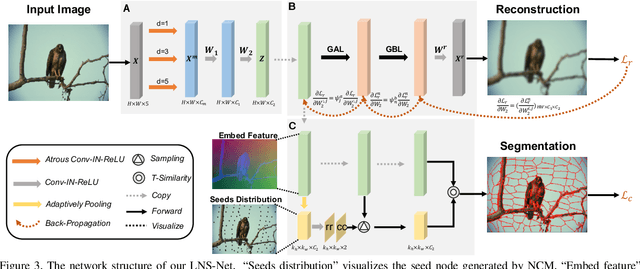

Abstract:Superpixels are generated by automatically clustering the pixels of an image into hundreds of compact partitions and are widely used to perceive object contours thanks to their excellent contour adherence. Although some works use Convolutional Neural Networks (CNNs) to generate high-quality superpixels, we challenge the design principles of these networks, specifically their dependence on manual labels and excessive computational resources, which limits their flexibility compared with traditional unsupervised segmentation methods. We aim to redefine CNN-based superpixel segmentation as a lifelong clustering task and propose an unsupervised CNN-based method called LNS-Net. LNS-Net can learn superpixels in a non-iterative and lifelong manner without any manual labels. Specifically, a lightweight feature embedder is proposed for LNS-Net to efficiently generate cluster-friendly features. With those features, seed nodes can be automatically assigned to cluster pixels in a non-iterative way. Additionally, LNS-Net can adapt to sequential lifelong learning by rescaling the gradient of each weight based on both channel and spatial context to avoid overfitting. Experiments show that the proposed LNS-Net achieves significantly better performance on three benchmarks with nearly ten times lower complexity compared with other state-of-the-art methods.