Xiangfeng Wang

Decentralized Entropic Optimal Transport for Privacy-preserving Distributed Distribution Comparison

Jan 28, 2023

Abstract: Privacy-preserving distributed distribution comparison measures the distance between the distributions whose data are scattered across different agents in a distributed system and cannot be shared among the agents. In this study, we propose a novel decentralized entropic optimal transport (EOT) method, which provides a privacy-preserving and communication-efficient solution to this problem with theoretical guarantees. In particular, we design a mini-batch randomized block-coordinate descent (MRBCD) scheme to optimize the decentralized EOT distance in its dual form. The dual variables are scattered across different agents and updated locally and iteratively with limited communications among partial agents. The kernel matrix involved in the gradients of the dual variables is estimated by a distributed kernel approximation method, and each agent only needs to approximate and store a sub-kernel matrix by one-shot communication and without sharing raw data. We analyze our method's communication complexity and provide a theoretical bound for the approximation error caused by the convergence error, the approximated kernel, and the mismatch between the storage and communication protocols. Experiments on synthetic data and real-world distributed domain adaptation tasks demonstrate the effectiveness of our method.
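To make the entropic optimal transport objective mentioned in this abstract concrete, here is a minimal sketch of the standard centralized Sinkhorn iterations, which perform alternating (block-coordinate) updates on the EOT dual. This is not the paper's decentralized MRBCD scheme: there is no mini-batching, no kernel approximation, and no communication protocol. The function name `sinkhorn`, the regularization weight `eps`, and the toy inputs are assumptions made for illustration.

```python
import math

def sinkhorn(a, b, cost, eps=0.1, n_iters=500):
    """Centralized Sinkhorn iterations for entropic OT (illustrative sketch only)."""
    n, m = len(a), len(b)
    # Gibbs kernel K_ij = exp(-C_ij / eps); the paper approximates this matrix
    # in a distributed way, whereas here it is simply built in full.
    K = [[math.exp(-cost[i][j] / eps) for j in range(m)] for i in range(n)]
    u = [1.0] * n
    v = [1.0] * m
    for _ in range(n_iters):
        # Alternating scaling updates: each one exactly maximizes the dual
        # objective over one block of dual variables.
        u = [a[i] / sum(K[i][j] * v[j] for j in range(m)) for i in range(n)]
        v = [b[j] / sum(K[i][j] * u[i] for i in range(n)) for j in range(m)]
    # Recover the transport plan and its (unregularized) transport cost.
    plan = [[u[i] * K[i][j] * v[j] for j in range(m)] for i in range(n)]
    transport_cost = sum(plan[i][j] * cost[i][j] for i in range(n) for j in range(m))
    return plan, transport_cost

# Toy example (hypothetical data): two identical two-point distributions.
a = [0.5, 0.5]
b = [0.5, 0.5]
cost = [[0.0, 1.0], [1.0, 0.0]]
plan, transport_cost = sinkhorn(a, b, cost)
```

Because the two toy distributions coincide, almost all mass stays on the zero-cost diagonal and the resulting transport cost is close to zero.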

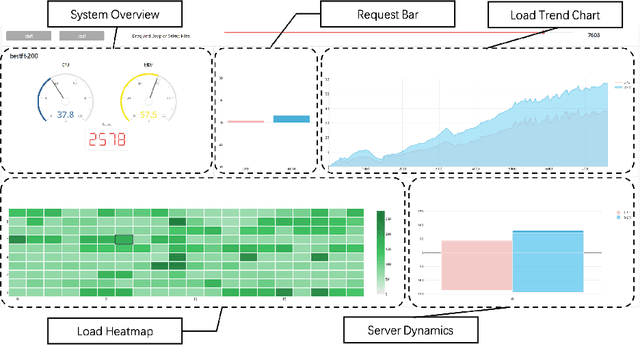

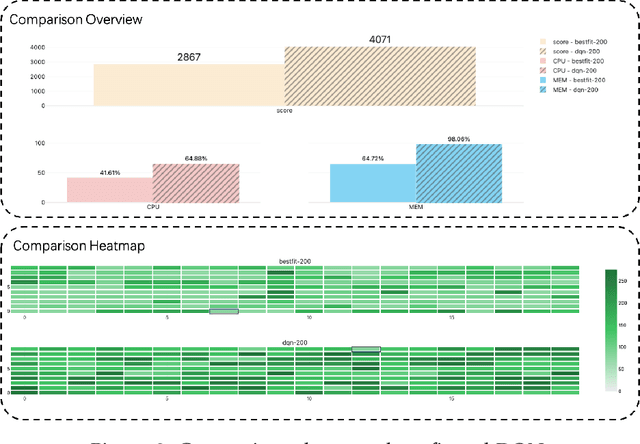

ReAssigner: A Plug-and-Play Virtual Machine Scheduling Intensifier for Heterogeneous Requests

Nov 29, 2022

Abstract: With the rapid development of cloud computing, virtual machine scheduling has become one of the most important but challenging issues for the cloud computing community, especially for practical heterogeneous request sequences. An analysis of the impact of request heterogeneity on some popular heuristic schedulers shows that existing scheduling algorithms cannot handle request heterogeneity properly and efficiently. In this paper, a plug-and-play virtual machine scheduling intensifier, called Resource Assigner (ReAssigner), is proposed to enhance the scheduling efficiency of any given scheduler for heterogeneous requests. The key idea of ReAssigner is to pre-assign roles to physical resources and let resources of the same role form a virtual cluster to handle homogeneous requests. ReAssigner can cooperate with arbitrary schedulers by restricting their scheduling space to virtual clusters. In evaluations on a real dataset from Huawei Cloud, the proposed ReAssigner achieves significant scheduling performance improvement compared with some state-of-the-art scheduling methods.
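The key idea described in this abstract (grouping hosts by a pre-assigned role and restricting any base scheduler to the matching virtual cluster) can be sketched as follows. Everything here is hypothetical: the role labels, the `first_fit` base scheduler, the CPU-only capacity model, and all function names are assumptions for illustration, and the abstract does not say how ReAssigner actually chooses roles.

```python
from collections import defaultdict

def build_virtual_clusters(host_roles):
    """Group hosts that share a pre-assigned role into one virtual cluster."""
    clusters = defaultdict(list)
    for host, role in host_roles.items():
        clusters[role].append(host)
    return dict(clusters)

def first_fit(request_cpu, candidates, free_cpu):
    """A stand-in base scheduler: place the request on the first host that fits."""
    for host in candidates:
        if free_cpu[host] >= request_cpu:
            free_cpu[host] -= request_cpu
            return host
    return None  # no candidate host has enough free CPU

def schedule(request, clusters, free_cpu, base_scheduler=first_fit):
    """Restrict the base scheduler's search space to the request's virtual cluster."""
    candidates = clusters.get(request["role"], [])
    return base_scheduler(request["cpu"], candidates, free_cpu)

# Hypothetical hosts, roles, and requests.
host_roles = {"h1": "small", "h2": "small", "h3": "large"}
free_cpu = {"h1": 4, "h2": 4, "h3": 32}
clusters = build_virtual_clusters(host_roles)
requests = [
    {"role": "small", "cpu": 2},
    {"role": "large", "cpu": 16},
    {"role": "small", "cpu": 4},
]
placements = [schedule(r, clusters, free_cpu) for r in requests]
```

Since `schedule` only narrows the candidate list, any scheduler with the same call shape could be passed as `base_scheduler`, which is the sense in which such a wrapper is plug-and-play.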

Learning Cooperative Oversubscription for Cloud by Chance-Constrained Multi-Agent Reinforcement Learning

Nov 21, 2022

Abstract: Oversubscription is a common practice for improving cloud resource utilization. It allows the cloud service provider to sell more resources than the physical limit, assuming not all users would fully utilize the resources simultaneously. However, how to design an oversubscription policy that improves utilization while satisfying some safety constraints remains an open problem. Existing methods and industrial practices are over-conservative, ignoring the coordination of diverse resource usage patterns and probabilistic constraints. To address these two limitations, this paper formulates oversubscription for cloud as a chance-constrained optimization problem and proposes an effective Chance Constrained Multi-Agent Reinforcement Learning (C2MARL) method to solve this problem. Specifically, C2MARL reduces the number of constraints by considering their upper bounds and leverages a multi-agent reinforcement learning paradigm to learn a safe and optimal coordination policy. We evaluate our C2MARL on an internal cloud platform and public cloud datasets. Experiments show that our C2MARL outperforms existing methods in improving utilization ($20\%\sim 86\%$) under different levels of safety constraints.
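As a rough illustration of what a chance constraint means in this setting, the sketch below estimates the probability that total resource usage exceeds physical capacity from sampled usage traces and compares it with a tolerance. It does not implement C2MARL or its upper-bound reduction. The function names, the tolerance `epsilon`, and the sample data are assumptions for illustration.

```python
def violation_probability(usage_samples, capacity):
    """Empirical probability that total usage exceeds the physical capacity."""
    totals = [sum(step) for step in usage_samples]
    violations = sum(1 for total in totals if total > capacity)
    return violations / len(totals)

def satisfies_chance_constraint(usage_samples, capacity, epsilon):
    """Check P(total usage > capacity) <= epsilon on the given samples."""
    return violation_probability(usage_samples, capacity) <= epsilon

# Hypothetical trace: two users, each sold 5 units (10 in total) on a host
# with only 8 physical units; each row is one time step of actual usage.
usage_samples = [[3, 2], [4, 4], [1, 2], [5, 4], [2, 2]]
capacity = 8
p = violation_probability(usage_samples, capacity)
```

In this toy trace only one of the five time steps exceeds capacity, so the oversubscribed allocation would be acceptable under a tolerance of 0.25 but not under 0.1.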

Multi-Agent Path Finding with Prioritized Communication Learning

Feb 10, 2022

Abstract: Multi-agent pathfinding (MAPF) has been widely used to solve large-scale real-world problems, e.g., automated warehouses. Learning-based, fully decentralized frameworks have been introduced to alleviate real-time problems while pursuing an optimal planning policy. However, existing methods might generate significantly more vertex conflicts (or collisions), which leads to a low success rate or a longer makespan. In this paper, we propose a PrIoritized COmmunication learning method (PICO), which incorporates the *implicit* planning priorities into the communication topology within the decentralized multi-agent reinforcement learning framework. Combined with the classic coupled planners, the implicit priority learning module can be utilized to form the dynamic communication topology, which also builds an effective collision-avoiding mechanism. PICO performs significantly better in large-scale MAPF tasks in success rates and collision rates than state-of-the-art learning-based planners.

Obtaining Dyadic Fairness by Optimal Transport

Feb 09, 2022

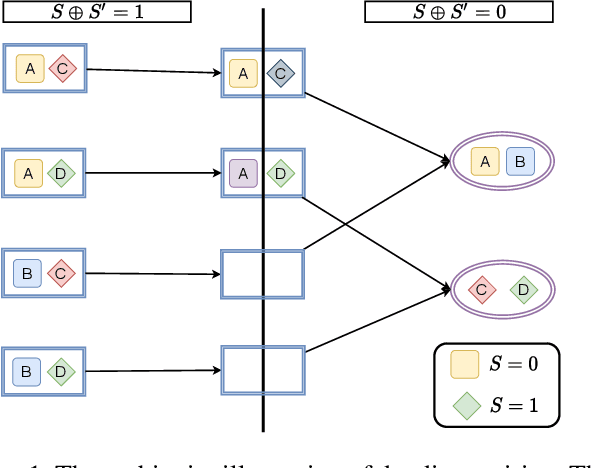

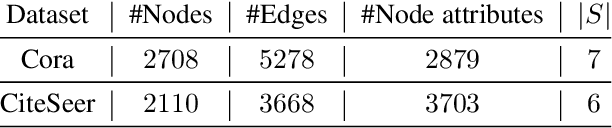

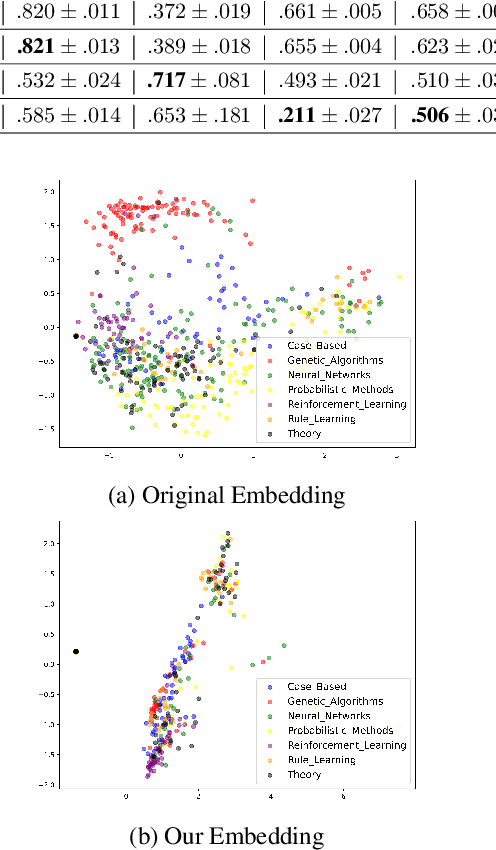

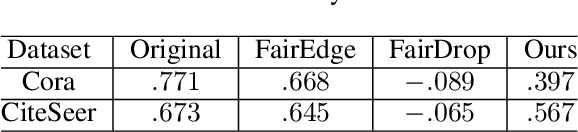

Abstract: Fairness has become a critical metric for machine learning models, and many works study how to obtain fairness for different tasks. This paper considers obtaining fairness for link prediction tasks, which can be measured by dyadic fairness. We propose a pre-processing methodology to obtain dyadic fairness through data repairing and optimal transport. To obtain dyadic fairness while satisfying flexibility and unambiguity requirements, we transform dyadic repairing into a conditional distribution alignment problem based on optimal transport and obtain theoretical results on the connection between the proposed alignment and dyadic fairness. An optimal transport-based dyadic fairness algorithm is proposed for graph link prediction. Our proposed algorithm shows superior results in obtaining fairness compared with other pre-processing methods on two benchmark graph datasets.

VMAgent: Scheduling Simulator for Reinforcement Learning

Dec 09, 2021

Abstract: A novel simulator called VMAgent is introduced to help RL researchers better explore new methods, especially for virtual machine scheduling. VMAgent is inspired by practical virtual machine (VM) scheduling tasks and provides an efficient simulation platform that can reflect the real situations of cloud computing. Three scenarios (fading, recovering, and expansion) are drawn from practical cloud computing and correspond to many reinforcement learning challenges (high dimensional state and action spaces, high non-stationarity, and life-long demand). VMAgent provides flexible configurations for RL researchers to design their customized scheduling environments considering different problem features. From the VM scheduling perspective, VMAgent also helps to explore better learning-based scheduling solutions.

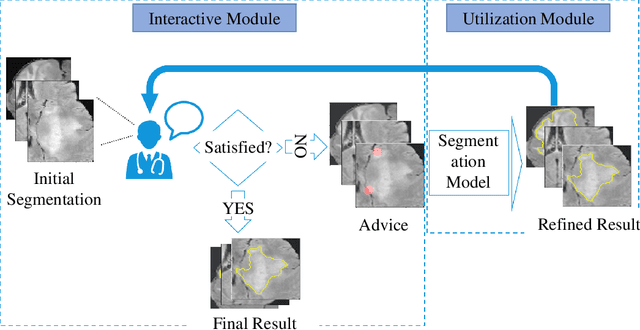

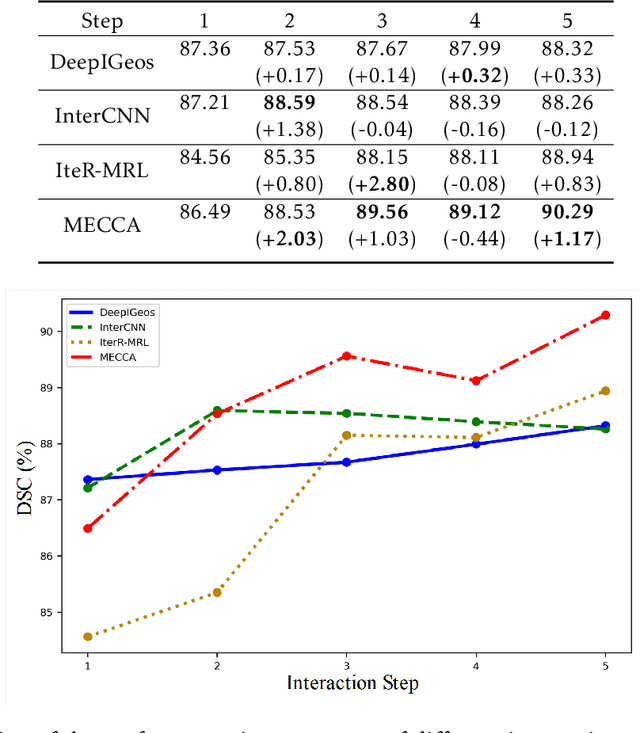

Interactive Medical Image Segmentation with Self-Adaptive Confidence Calibration

Nov 15, 2021

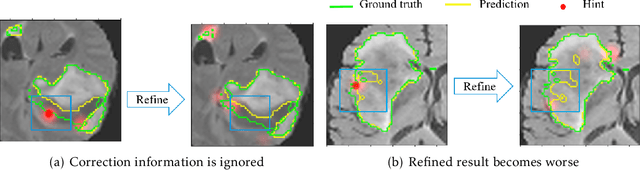

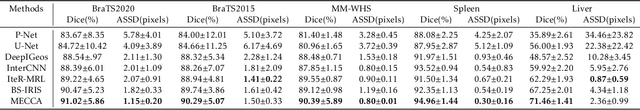

Abstract: Medical image segmentation is one of the fundamental problems for artificial intelligence-based clinical decision systems. Current automatic medical image segmentation methods often fail to meet clinical requirements. As such, a series of interactive segmentation algorithms have been proposed to utilize expert correction information. However, existing methods suffer from segmentation refinement failures after long-term interactions and from the cost of expert annotation, both of which hinder clinical applications. This paper proposes an interactive segmentation framework, called interactive MEdical segmentation with self-adaptive Confidence CAlibration (MECCA), by introducing corrective action evaluation, which combines action-based confidence learning and multi-agent reinforcement learning (MARL). The evaluation is established through a novel action-based confidence network, and the corrective actions are obtained from MARL. Based on the confidence information, a self-adaptive reward function is designed to provide more detailed feedback, and a simulated label generation mechanism is proposed on unsupervised data to reduce over-reliance on labeled data. Experimental results on various medical image datasets show the strong performance of the proposed algorithm.

Dealing with Non-Stationarity in Multi-Agent Reinforcement Learning via Trust Region Decomposition

Feb 21, 2021

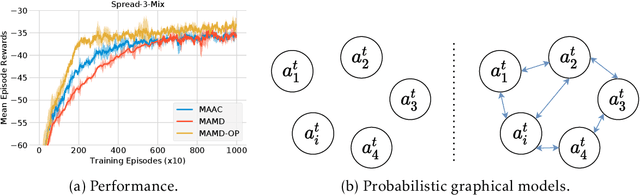

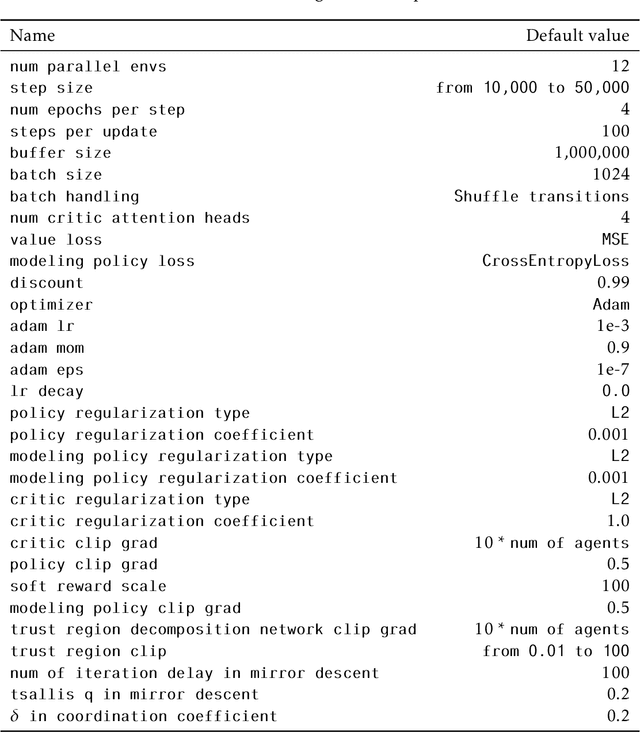

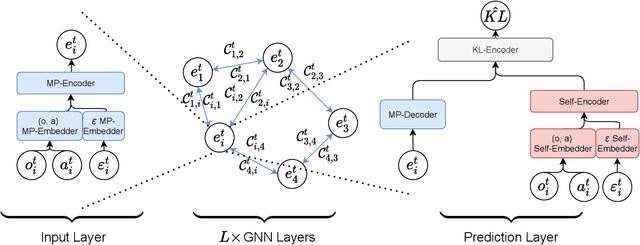

Abstract: Non-stationarity is a thorny issue in multi-agent reinforcement learning, which is caused by the policy changes of agents during the learning procedure. Current approaches to this problem, such as centralized critic and decentralized actor (CCDA), population-based self-play, and modeling of others, have their own limitations in effectiveness and scalability. In this paper, we introduce a novel $\delta$-stationarity measurement to explicitly model the stationarity of a policy sequence, which is theoretically proved to be proportional to the joint policy divergence. However, simple policy factorization like mean-field approximation can lead to larger policy divergence, which can be considered as the trust region decomposition dilemma. We model the joint policy as a general Markov random field and propose a trust region decomposition network based on message passing to estimate the joint policy divergence more accurately. The Multi-Agent Mirror descent policy algorithm with Trust region decomposition, called MAMT, is established to satisfy $\delta$-stationarity. MAMT can adjust the trust region of the local policies adaptively in an end-to-end manner, thereby approximately constraining the divergence of the joint policy to alleviate the non-stationarity problem. Our method brings noticeable and stable performance improvement compared with baselines in coordination tasks of different complexity.

Structured Diversification Emergence via Reinforced Organization Control and Hierarchical Consensus Learning

Feb 09, 2021

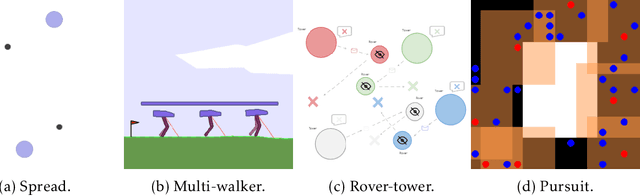

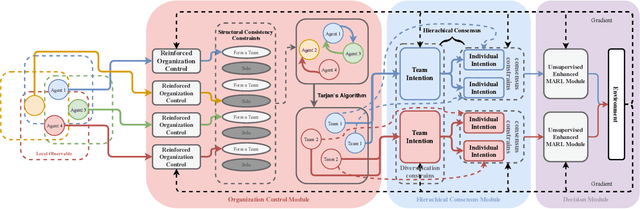

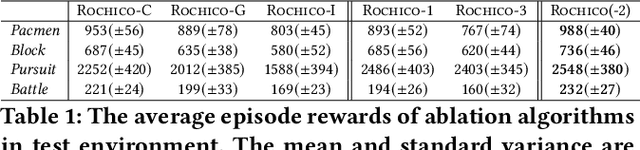

Abstract: When solving a complex task, humans will spontaneously form teams to complete different parts of the whole task. Meanwhile, the cooperation between teammates will improve efficiency. However, for current cooperative MARL methods, the cooperation team is constructed through either heuristics or end-to-end blackbox optimization. In order to improve the efficiency of cooperation and exploration, we propose a structured diversification emergence MARL framework named Rochico based on reinforced organization control and hierarchical consensus learning. Rochico first learns an adaptive grouping policy through the organization control module, which is established by independent multi-agent reinforcement learning. Further, the hierarchical consensus module based on the hierarchical intentions with consensus constraint is introduced after team formation. Simultaneously, utilizing the hierarchical consensus module and a self-supervised intrinsic reward enhanced decision module, the proposed cooperative MARL algorithm Rochico can output the final diversified multi-agent cooperative policy. All three modules are organically combined to promote the structured diversification emergence. Comparative experiments on four large-scale cooperation tasks show that Rochico is significantly better than the current SOTA algorithms in terms of exploration efficiency and cooperation strength.

FDA3: Federated Defense Against Adversarial Attacks for Cloud-Based IIoT Applications

Jun 28, 2020

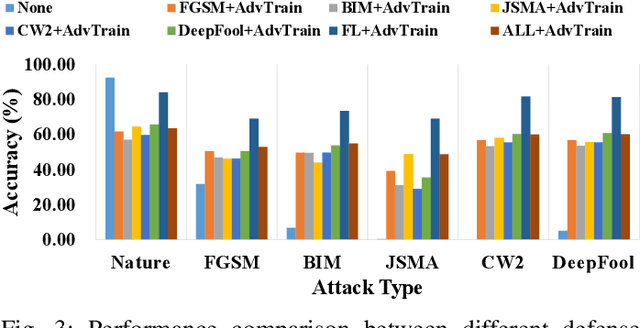

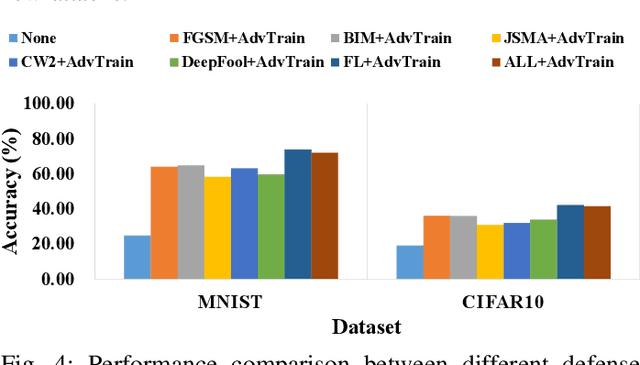

Abstract: Along with the proliferation of Artificial Intelligence (AI) and Internet of Things (IoT) techniques, various kinds of adversarial attacks are increasingly emerging to fool Deep Neural Networks (DNNs) used by Industrial IoT (IIoT) applications. Due to biased training data or vulnerable underlying models, imperceptible modifications on inputs made by adversarial attacks may result in devastating consequences. Although existing methods are promising in defending against such malicious attacks, most of them can only deal with limited existing attack types, which makes the deployment of large-scale IIoT devices a great challenge. To address this problem, we present an effective federated defense approach named FDA3 that can aggregate defense knowledge against adversarial examples from different sources. Inspired by federated learning, our proposed cloud-based architecture enables the sharing of defense capabilities against different attacks among IIoT devices. Comprehensive experimental results show that the DNNs generated by our approach not only resist more malicious attacks than existing attack-specific adversarial training methods, but also protect IIoT applications from new attacks.
