Soon-Jo Chung

MonoTher-Depth: Enhancing Thermal Depth Estimation via Confidence-Aware Distillation

Apr 21, 2025

Abstract:Monocular depth estimation (MDE) from thermal images is a crucial technology for robotic systems operating in challenging conditions such as fog, smoke, and low light. The limited availability of labeled thermal data constrains the generalization capabilities of thermal MDE models compared to foundational RGB MDE models, which benefit from datasets of millions of images across diverse scenarios. To address this challenge, we introduce a novel pipeline that enhances thermal MDE through knowledge distillation from a versatile RGB MDE model. Our approach features a confidence-aware distillation method that utilizes the predicted confidence of the RGB MDE to selectively strengthen the thermal MDE model, capitalizing on the strengths of the RGB model while mitigating its weaknesses. Our method significantly improves the accuracy of the thermal MDE, independent of the availability of labeled depth supervision, and greatly expands its applicability to new scenarios. In our experiments on new scenarios without labeled depth, the proposed confidence-aware distillation method reduces the absolute relative error of thermal MDE by 22.88% compared to the baseline without distillation.

* 8 Pages; The code will be available at https://github.com/ZuoJiaxing/monother_depth
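The abstract above reports its result as absolute relative error but does not define the metric. As a minimal sketch, the commonly used definition is the mean of |predicted − true| / true over pixels with valid ground truth. The function names `abs_rel_error` and `relative_reduction`, the `gt > 0` validity mask, and the reading of "reduces by 22.88%" as a relative reduction are assumptions for illustration, not details taken from the paper.

```python
import numpy as np


def abs_rel_error(pred, gt):
    """Mean absolute relative depth error over pixels with valid ground truth.

    Assumption: a pixel is valid when its ground-truth depth is positive.
    """
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    valid = gt > 0
    return float(np.mean(np.abs(pred[valid] - gt[valid]) / gt[valid]))


def relative_reduction(baseline, improved):
    """Percentage by which `improved` lowers an error relative to `baseline`."""
    return 100.0 * (baseline - improved) / baseline


if __name__ == "__main__":
    # Toy depth values in meters; the zero marks a pixel without ground truth.
    gt = [0.0, 2.0, 4.0]
    pred = [1.0, 3.0, 4.0]
    print(abs_rel_error(pred, gt))
```

Under this reading, a baseline error of 0.2 that drops to 0.1 would count as a 50% reduction.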

Monte Carlo Tree Search with Spectral Expansion for Planning with Dynamical Systems

Dec 15, 2024

Abstract:The ability of a robot to plan complex behaviors with real-time computation, rather than adhering to predesigned or offline-learned routines, alleviates the need for specialized algorithms or training for each problem instance. Monte Carlo Tree Search is a powerful planning algorithm that strategically explores simulated future possibilities, but it requires a discrete problem representation that is irreconcilable with the continuous dynamics of the physical world. We present Spectral Expansion Tree Search (SETS), a real-time, tree-based planner that uses the spectrum of the locally linearized system to construct a low-complexity and approximately equivalent discrete representation of the continuous world. We prove SETS converges to a bound of the globally optimal solution for continuous, deterministic and differentiable Markov Decision Processes, a broad class of problems that includes underactuated nonlinear dynamics, non-convex reward functions, and unstructured environments. We experimentally validate SETS on drone, spacecraft, and ground vehicle robots and one numerical experiment, each of which is not directly solvable with existing methods. We successfully show SETS automatically discovers a diverse set of optimal behaviors and motion trajectories in real time.

* The first two authors contributed equally to this article

Motor Imagery Teleoperation of a Mobile Robot Using a Low-Cost Brain-Computer Interface for Multi-Day Validation

Dec 12, 2024

Abstract:Brain-computer interfaces (BCI) have the potential to provide transformative control in prosthetics, assistive technologies (wheelchairs), robotics, and human-computer interfaces. While Motor Imagery (MI) offers an intuitive approach to BCI control, its practical implementation is often limited by the requirement for expensive devices, extensive training data, and complex algorithms, leading to user fatigue and reduced accessibility. In this paper, we demonstrate that effective MI-BCI control of a mobile robot in real-world settings can be achieved using a fine-tuned Deep Neural Network (DNN) with a sliding window, eliminating the need for complex feature extractions for real-time robot control. The fine-tuning process optimizes the convolutional and attention layers of the DNN to adapt to each user's daily MI data streams, reducing training data by 70% and minimizing user fatigue from extended data collection. Using a low-cost (~$3k), 16-channel, non-invasive, open-source electroencephalogram (EEG) device, four users teleoperated a quadruped robot over three days. The system achieved 78% accuracy on a single-day validation dataset and maintained a 75% validation accuracy over three days without extensive retraining from day-to-day. For real-world robot command classification, we achieved an average of 62% accuracy. By providing empirical evidence that MI-BCI systems can maintain performance over multiple days with reduced training data to DNN and a low-cost EEG device, our work enhances the practicality and accessibility of BCI technology. This advancement makes BCI applications more feasible for real-world scenarios, particularly in controlling robotic systems.
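The abstract above mentions feeding a sliding window of EEG data to the network but gives no window parameters. The following is a minimal sketch of how overlapping windows might be cut from a multi-channel recording; the function name `sliding_windows`, the `(channels, samples)` array layout, and the window and stride values are hypothetical and not taken from the paper.

```python
import numpy as np


def sliding_windows(stream, window, stride):
    """Yield overlapping (channels, window) slices from a (channels, samples) array.

    A trailing remainder shorter than `window` is dropped.
    """
    n_samples = stream.shape[1]
    for start in range(0, n_samples - window + 1, stride):
        yield stream[:, start:start + window]


if __name__ == "__main__":
    # Hypothetical 16-channel recording with 10 samples per channel.
    eeg = np.arange(16 * 10).reshape(16, 10)
    for segment in sliding_windows(eeg, window=4, stride=2):
        # Each segment would be passed to a classifier in a real pipeline.
        print(segment.shape)
```

A stride smaller than the window length yields overlapping segments, so a classifier can emit predictions more often than once per window.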

Model Predictive Trees: Sample-Efficient Receding Horizon Planning with Reusable Tree Search

Nov 23, 2024

Abstract:We present Model Predictive Trees (MPT), a receding horizon tree search algorithm that improves its performance by reusing information efficiently. Whereas existing solvers reuse only the highest-quality trajectory from the previous iteration as a "hotstart", our method reuses the entire optimal subtree, enabling the search to be simultaneously guided away from the low-quality areas and towards the high-quality areas. We characterize the restrictions on tree reuse by analyzing the induced tracking error under time-varying dynamics, revealing a tradeoff between the search depth and the timescale of the changing dynamics. In numerical studies, our algorithm outperforms state-of-the-art sampling-based cross-entropy methods with hotstarting. We demonstrate our planner on an autonomous vehicle testbed performing a nonprehensile manipulation task: pushing a target object through an obstacle field. Code associated with this work will be made available at https://github.com/jplathrop/mpt.

Meta-Learning Augmented MPC for Disturbance-Aware Motion Planning and Control of Quadrotors

Oct 08, 2024

Abstract:A major challenge in autonomous flights is unknown disturbances, which can jeopardize safety and lead to collisions, especially in obstacle-rich environments. This paper presents a disturbance-aware motion planning and control framework designed for autonomous aerial flights. The framework is composed of two key components: a disturbance-aware motion planner and a tracking controller. The disturbance-aware motion planner consists of a predictive control scheme and a learned model of disturbances that is adapted online. The tracking controller is designed using contraction control methods to provide safety bounds on the quadrotor behaviour in the vicinity of the obstacles with respect to the disturbance-aware motion plan. Finally, the algorithm is tested in simulation scenarios with a quadrotor facing strong crosswind and ground-induced disturbances.

Vision-Based Detection of Uncooperative Targets and Components on Small Satellites

Aug 22, 2024

Abstract:Space debris and inactive satellites pose a threat to the safety and integrity of operational spacecraft and motivate the need for space situational awareness techniques. These uncooperative targets create a challenging tracking and detection problem due to a lack of prior knowledge of their features, trajectories, or even existence. Recent advancements in computer vision models can be used to improve upon existing methods for tracking such uncooperative targets to make them more robust and reliable to the wide-ranging nature of the target. This paper introduces an autonomous detection model designed to identify and monitor these objects using learning and computer vision. The autonomous detection method aims to identify and accurately track the uncooperative targets in varied circumstances, including different camera spectral sensitivities, lighting, and backgrounds. Our method adapts to the relative distance between the observing spacecraft and the target, and different detection strategies are adjusted based on distance. At larger distances, we utilize You Only Look Once (YOLOv8), a multitask Convolutional Neural Network (CNN), for zero-shot and domain-specific single-shot real time detection of the target. At shorter distances, we use knowledge distillation to combine visual foundation models with a lightweight fast segmentation CNN (Fast-SCNN) to segment the spacecraft components with low storage requirements and fast inference times, and to enable weight updates from earth and possible onboard training. Lastly, we test our method on a custom dataset simulating the unique conditions encountered in space, as well as a publicly-available dataset.

MAGIC-VFM: Meta-learning Adaptation for Ground Interaction Control with Visual Foundation Models

Jul 17, 2024

Abstract:Control of off-road vehicles is challenging due to the complex dynamic interactions with the terrain. Accurate modeling of these interactions is important to optimize driving performance, but the relevant physical phenomena are too complex to model from first principles. Therefore, we present an offline meta-learning algorithm to construct a rapidly-tunable model of residual dynamics and disturbances. Our model processes terrain images into features using a visual foundation model (VFM), then maps these features and the vehicle state to an estimate of the current actuation matrix using a deep neural network (DNN). We then combine this model with composite adaptive control to modify the last layer of the DNN in real time, accounting for the remaining terrain interactions not captured during offline training. We provide mathematical guarantees of stability and robustness for our controller and demonstrate the effectiveness of our method through simulations and hardware experiments with a tracked vehicle and a car-like robot. We evaluate our method outdoors on different slopes with varying slippage and actuator degradation disturbances, and compare against an adaptive controller that does not use the VFM terrain features. We show significant improvement over the baseline in both hardware experimentation and simulation.

Semantics from Space: Satellite-Guided Thermal Semantic Segmentation Annotation for Aerial Field Robots

Mar 21, 2024

Abstract:We present a new method to automatically generate semantic segmentation annotations for thermal imagery captured from an aerial vehicle by utilizing satellite-derived data products alongside onboard global positioning and attitude estimates. This new capability overcomes the challenge of developing thermal semantic perception algorithms for field robots due to the lack of annotated thermal field datasets and the time and costs of manual annotation, enabling precise and rapid annotation of thermal data from field collection efforts at a massively-parallelizable scale. By incorporating a thermal-conditioned refinement step with visual foundation models, our approach can produce highly-precise semantic segmentation labels using low-resolution satellite land cover data for little-to-no cost. It achieves 98.5% of the performance from using costly high-resolution options and demonstrates between 70-160% improvement over popular zero-shot semantic segmentation methods based on large vision-language models currently used for generating annotations for RGB imagery. Code will be available at: https://github.com/connorlee77/aerial-auto-segment.

CART: Caltech Aerial RGB-Thermal Dataset in the Wild

Mar 13, 2024

Abstract:We present the first publicly available RGB-thermal dataset designed for aerial robotics operating in natural environments. Our dataset captures a variety of terrains across the continental United States, including rivers, lakes, coastlines, deserts, and forests, and consists of synchronized RGB, long-wave thermal, global positioning, and inertial data. Furthermore, we provide semantic segmentation annotations for 10 classes commonly encountered in natural settings in order to facilitate the development of perception algorithms robust to adverse weather and nighttime conditions. Using this dataset, we propose new and challenging benchmarks for thermal and RGB-thermal semantic segmentation, RGB-to-thermal image translation, and visual-inertial odometry. We present extensive results using state-of-the-art methods and highlight the challenges posed by temporal and geographical domain shifts in our data. Dataset and accompanying code will be provided at https://github.com/aerorobotics/caltech-aerial-rgbt-dataset

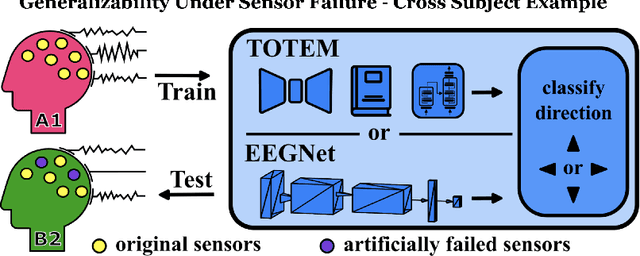

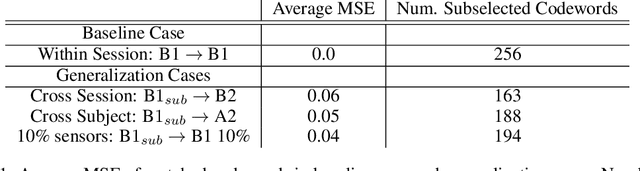

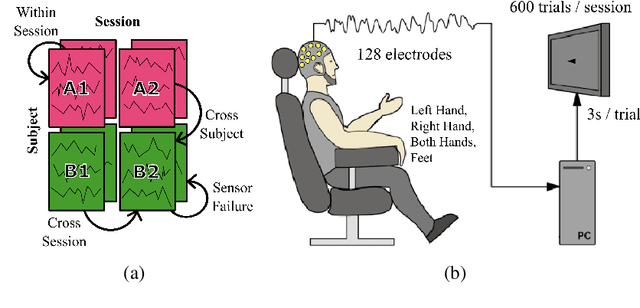

Generalizability Under Sensor Failure: Tokenization + Transformers Enable More Robust Latent Spaces

Feb 29, 2024

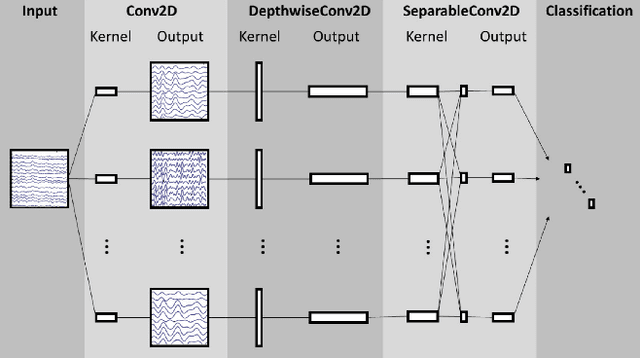

Abstract:A major goal in neuroscience is to discover neural data representations that generalize. This goal is challenged by variability along recording sessions (e.g. environment), subjects (e.g. varying neural structures), and sensors (e.g. sensor noise), among others. Recent work has begun to address generalization across sessions and subjects, but few study robustness to sensor failure which is highly prevalent in neuroscience experiments. In order to address these generalizability dimensions we first collect our own electroencephalography dataset with numerous sessions, subjects, and sensors, then study two time series models: EEGNet (Lawhern et al., 2018) and TOTEM (Talukder et al., 2024). EEGNet is a widely used convolutional neural network, while TOTEM is a discrete time series tokenizer and transformer model. We find that TOTEM outperforms or matches EEGNet across all generalizability cases. Finally through analysis of TOTEM's latent codebook we observe that tokenization enables generalization
