Yujin An

Rotational ultrasound and photoacoustic tomography of the human body

Apr 22, 2025

Abstract: Imaging the human body's morphological and angiographic information is essential for diagnosing, monitoring, and treating medical conditions. Ultrasonography performs the morphological assessment of the soft tissue based on acoustic impedance variations, whereas photoacoustic tomography (PAT) can visualize blood vessels based on intrinsic hemoglobin absorption. Three-dimensional (3D) panoramic imaging of the vasculature is generally not practical in conventional ultrasonography with limited field-of-view (FOV) probes, and PAT does not provide sufficient scattering-based soft tissue morphological contrast. Complementing each other, fast panoramic rotational ultrasound tomography (RUST) and PAT are integrated for hybrid rotational ultrasound and photoacoustic tomography (RUS-PAT), which obtains 3D ultrasound structural and PAT angiographic images of the human body quasi-simultaneously. The RUST functionality is achieved in a cost-effective manner using a single-element ultrasonic transducer for ultrasound transmission and rotating arc-shaped arrays for 3D panoramic detection. RUST is superior to conventional ultrasonography, which either has a limited FOV with a linear array or is high-cost with a hemispherical array that requires both transmission and receiving. By switching the acoustic source to a light source, the system is conveniently converted to PAT mode to acquire angiographic images in the same region. Using RUS-PAT, we have successfully imaged the human head, breast, hand, and foot with a 10 cm diameter FOV, submillimeter isotropic resolution, and 10 s imaging time for each modality. The 3D RUS-PAT is a powerful tool for high-speed, 3D, dual-contrast imaging of the human body with potential for rapid clinical translation.

Motor Imagery Teleoperation of a Mobile Robot Using a Low-Cost Brain-Computer Interface for Multi-Day Validation

Dec 12, 2024

Abstract: Brain-computer interfaces (BCIs) have the potential to provide transformative control in prosthetics, assistive technologies (e.g., wheelchairs), robotics, and human-computer interfaces. While Motor Imagery (MI) offers an intuitive approach to BCI control, its practical implementation is often limited by the requirement for expensive devices, extensive training data, and complex algorithms, leading to user fatigue and reduced accessibility. In this paper, we demonstrate that effective MI-BCI control of a mobile robot in real-world settings can be achieved using a fine-tuned Deep Neural Network (DNN) with a sliding window, eliminating the need for complex feature extraction in real-time robot control. The fine-tuning process optimizes the convolutional and attention layers of the DNN to adapt to each user's daily MI data streams, reducing training data by 70% and minimizing user fatigue from extended data collection. Using a low-cost (~$3k), 16-channel, non-invasive, open-source electroencephalogram (EEG) device, four users teleoperated a quadruped robot over three days. The system achieved 78% accuracy on a single-day validation dataset and maintained a 75% validation accuracy over three days without extensive retraining from day to day. For real-world robot command classification, we achieved an average of 62% accuracy. By providing empirical evidence that MI-BCI systems can maintain performance over multiple days with reduced DNN training data and a low-cost EEG device, our work enhances the practicality and accessibility of BCI technology. This advancement makes BCI applications more feasible for real-world scenarios, particularly in controlling robotic systems.
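The sliding-window step mentioned in this abstract can be illustrated with a minimal sketch. The abstract does not give the window length, stride, or sampling rate, so the values below are hypothetical, as is the function name `sliding_windows`; only the 16-channel count comes from the abstract. Random numbers stand in for real EEG.

```python
import numpy as np

def sliding_windows(eeg, window, stride):
    """Split a (channels, samples) array into overlapping windows.

    Returns an array of shape (n_windows, channels, window).
    """
    n_channels, n_samples = eeg.shape
    if window > n_samples:
        # Not enough samples for even one window.
        return np.empty((0, n_channels, window), dtype=eeg.dtype)
    starts = range(0, n_samples - window + 1, stride)
    return np.stack([eeg[:, s:s + window] for s in starts])

# Assumed (not from the abstract): 125 Hz sampling, 4 s of data,
# 1 s windows advanced by roughly 0.25 s.
fs = 125
eeg = np.random.default_rng(0).standard_normal((16, 4 * fs))
windows = sliding_windows(eeg, window=fs, stride=fs // 4)
print(windows.shape)
```

In a pipeline of this kind, each window would be passed to the classifier in turn, so that a new command prediction is available every stride rather than once per full trial.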

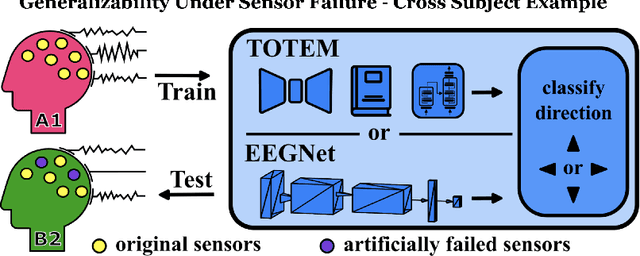

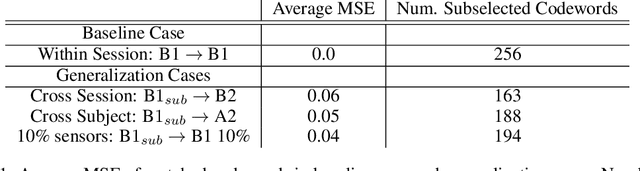

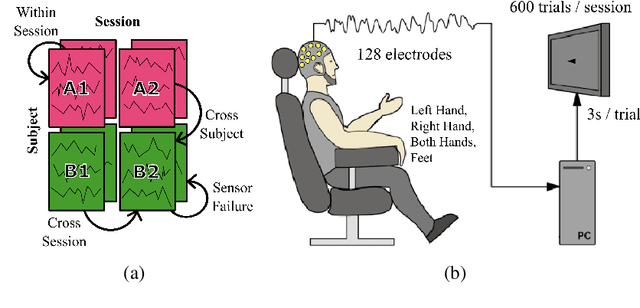

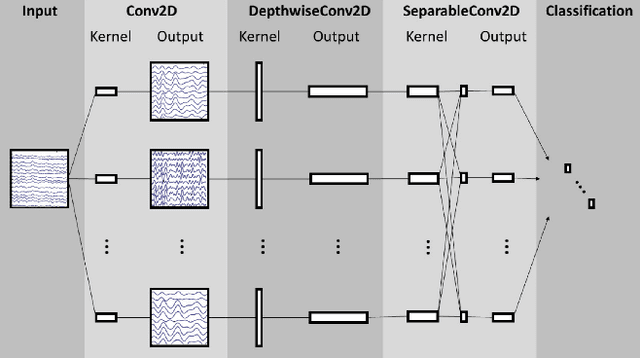

Generalizability Under Sensor Failure: Tokenization + Transformers Enable More Robust Latent Spaces

Feb 29, 2024

Abstract: A major goal in neuroscience is to discover neural data representations that generalize. This goal is challenged by variability across recording sessions (e.g., environment), subjects (e.g., varying neural structures), and sensors (e.g., sensor noise), among others. Recent work has begun to address generalization across sessions and subjects, but few studies examine robustness to sensor failure, which is highly prevalent in neuroscience experiments. To address these generalizability dimensions, we first collect our own electroencephalography dataset with numerous sessions, subjects, and sensors, then study two time series models: EEGNet (Lawhern et al., 2018) and TOTEM (Talukder et al., 2024). EEGNet is a widely used convolutional neural network, while TOTEM is a discrete time series tokenizer and transformer model. We find that TOTEM outperforms or matches EEGNet across all generalizability cases. Finally, through analysis of TOTEM's latent codebook, we observe that tokenization enables generalization
