Sipeng Zhang

A Tale of LLMs and Induced Small Proxies: Scalable Agents for Knowledge Mining

Oct 01, 2025

Abstract:At the core of Deep Research is knowledge mining, the task of extracting structured information from massive unstructured text in response to user instructions. Large language models (LLMs) excel at interpreting such instructions but are prohibitively expensive to deploy at scale, while traditional pipelines of classifiers and extractors remain efficient yet brittle and unable to generalize to new tasks. We introduce Falconer, a collaborative framework that combines the agentic reasoning of LLMs with lightweight proxy models for scalable knowledge mining. In Falconer, LLMs act as planners, decomposing user instructions into executable pipelines, and as annotators, generating supervision to train small proxies. The framework unifies classification and extraction into two atomic operations, get label and get span, enabling a single instruction-following model to replace multiple task-specific components. To evaluate the consistency between proxy models incubated by Falconer and annotations provided by humans and large models, we construct new benchmarks covering both planning and end-to-end execution. Experiments show that Falconer closely matches state-of-the-art LLMs in instruction-following accuracy while reducing inference cost by up to 90% and accelerating large-scale knowledge mining by more than 20x, offering an efficient and scalable foundation for Deep Research.
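The idea of composing a pipeline from two atomic operations can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the function names `get_label`, `get_span`, and `run_plan`, the tuple-based plan format, and the keyword and regex stand-ins for the proxy model are hypothetical and are not taken from the Falconer code.

```python
import re
from typing import Dict, List, Optional, Tuple

def get_label(text: str, keyword: str) -> bool:
    """Toy stand-in for a classification proxy: keyword match (assumption)."""
    return keyword.lower() in text.lower()

def get_span(text: str, pattern: str) -> Optional[str]:
    """Toy stand-in for an extraction proxy: first regex match (assumption)."""
    match = re.search(pattern, text)
    return match.group(0) if match else None

# A plan is a list of (operation, field name, instruction) steps.
# In the abstract's framing an LLM planner would produce it; here it is hand-written.
Plan = List[Tuple[str, str, str]]

def run_plan(plan: Plan, documents: List[str]) -> List[Dict[str, Optional[str]]]:
    """Apply each step in order; a failed get_label step filters the document out."""
    results = []
    for doc in documents:
        record: Dict[str, Optional[str]] = {"text": doc}
        keep = True
        for op, field, instruction in plan:
            if op == "get_label":
                if not get_label(doc, instruction):
                    keep = False
                    break
            elif op == "get_span":
                record[field] = get_span(doc, instruction)
            else:
                raise ValueError(f"unknown operation: {op}")
        if keep:
            results.append(record)
    return results

docs = [
    "Acme acquired Beta Corp in 2021.",
    "The weather was mild in 2020.",
]
plan: Plan = [
    ("get_label", "is_acquisition", "acquired"),
    ("get_span", "year", r"\b(?:19|20)\d{2}\b"),
]
mined = run_plan(plan, docs)
```

In this toy run only the first document passes the label step, and the span step then extracts its year. A trained proxy model would replace both stand-in functions.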

OccTransformer: Improving BEVFormer for 3D camera-only occupancy prediction

Feb 28, 2024

Abstract:This technical report presents our solution, "occTransformer", for the 3D occupancy prediction track in the autonomous driving challenge at CVPR 2023. Our method builds upon the strong baseline BEVFormer and improves its performance through several simple yet effective techniques. Firstly, we employed data augmentation to increase the diversity of the training data and improve the model's generalization ability. Secondly, we used a strong image backbone to extract more informative features from the input data. Thirdly, we incorporated a 3D U-Net head to better capture the spatial information of the scene. Fourthly, we added more loss functions to better optimize the model. Additionally, we used an ensemble approach with the occupancy models BEVDet and SurroundOcc to further improve the performance. Most importantly, we integrated the 3D detection model StreamPETR to enhance the model's ability to detect objects in the scene. Using these methods, our solution achieved 49.23 mIoU on the 3D occupancy prediction track in the autonomous driving challenge.
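For readers unfamiliar with the metric quoted above, mean intersection-over-union (mIoU) averages the per-class IoU between predicted and ground-truth voxel labels. The sketch below is a generic illustration on flat label lists. The function name `mean_iou` is hypothetical, and the challenge's actual evaluation protocol (for example, which voxels or classes are ignored) may differ.

```python
from typing import List, Sequence

def mean_iou(pred: Sequence[int], target: Sequence[int], num_classes: int) -> float:
    """Average per-class IoU, skipping classes absent from both pred and target."""
    ious: List[float] = []
    for c in range(num_classes):
        intersection = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union > 0:
            ious.append(intersection / union)
    return sum(ious) / len(ious) if ious else 0.0

# Six voxels, three classes: per-class IoU is 1/3, 2/3, and 1/2.
pred = [0, 0, 1, 1, 2, 2]
target = [0, 1, 1, 1, 2, 0]
score = mean_iou(pred, target, num_classes=3)  # 0.5, i.e. 50.0 on the percent scale
```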

Calibrating Cross-modal Feature for Text-Based Person Searching

Apr 05, 2023

Abstract:We present a novel and effective method for calibrating cross-modal features for text-based person search. Our method is cost-effective and can easily retrieve specific persons with textual captions. Specifically, its architecture consists only of a dual-encoder and a detachable cross-modal decoder. Without extra multi-level branches or complex interaction modules as the neck following the backbone, our model performs high-speed inference based only on the dual-encoder. Besides, our method includes two novel losses to provide fine-grained cross-modal features. A Sew loss takes the quality of textual captions as guidance and aligns features between image and text modalities. A Masking Caption Modeling (MCM) loss uses a masked caption prediction task to establish detailed and generic relationships between textual and visual parts. We show the top results on three popular benchmarks, including CUHK-PEDES, ICFG-PEDES, and RSTPReID. In particular, our method achieves 73.81% Rank@1, 74.25% Rank@1 and 57.35% Rank@1 on them, respectively. In addition, we also validate each component of our method with extensive experiments. We hope our powerful and scalable paradigm will serve as a solid baseline and help ease future research in text-based person search.
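Dual-encoder retrieval and the Rank@1 metric reported above can be sketched as follows: text and image embeddings are compared by cosine similarity, and a query counts as a hit if an image with the matching identity appears among the top k results. This is a generic illustration with made-up 2-D embeddings. The names `cosine` and `rank_at_k` are hypothetical, and the paper's encoders and evaluation code are not reproduced here.

```python
import math
from typing import List, Sequence

def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def rank_at_k(text_embs: List[List[float]], text_ids: List[str],
              image_embs: List[List[float]], image_ids: List[str],
              k: int = 1) -> float:
    """Fraction of text queries whose identity appears in the top-k images."""
    hits = 0
    for query, query_id in zip(text_embs, text_ids):
        ranked = sorted(range(len(image_embs)),
                        key=lambda i: cosine(query, image_embs[i]),
                        reverse=True)
        if any(image_ids[i] == query_id for i in ranked[:k]):
            hits += 1
    return hits / len(text_embs)

# Toy gallery of three images and three caption queries (illustrative values).
image_embs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
image_ids = ["a", "b", "c"]
text_embs = [[0.9, 0.1], [0.2, 0.8], [1.0, 0.0]]
text_ids = ["a", "b", "c"]

rank1 = rank_at_k(text_embs, text_ids, image_embs, image_ids, k=1)
rank2 = rank_at_k(text_embs, text_ids, image_embs, image_ids, k=2)
```

Here the third caption is closest to image "a" rather than its true match "c", so it counts as a miss at k=1 and a hit at k=2.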

Symmetric Network with Spatial Relationship Modeling for Natural Language-based Vehicle Retrieval

Jun 22, 2022

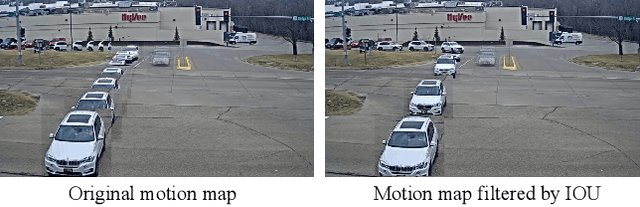

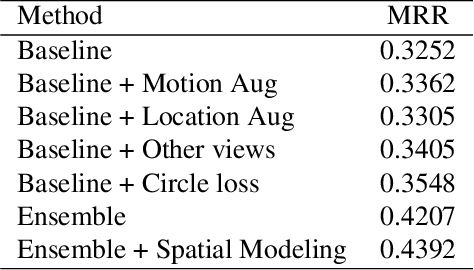

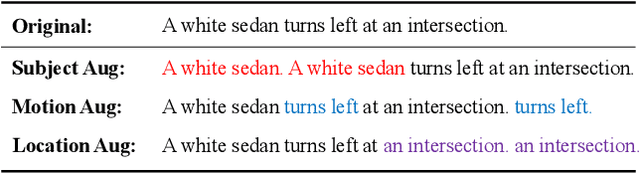

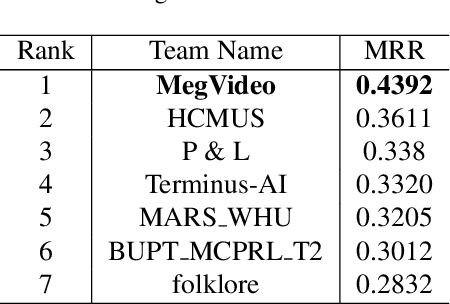

Abstract:Natural language (NL) based vehicle retrieval aims to search for a specific vehicle given a text description. Unlike image-based vehicle retrieval, NL-based vehicle retrieval requires considering not only vehicle appearance, but also the surrounding environment and temporal relations. In this paper, we propose a Symmetric Network with Spatial Relationship Modeling (SSM) method for NL-based vehicle retrieval. Specifically, we design a symmetric network to learn the unified cross-modal representations between text descriptions and vehicle images, where vehicle appearance details and vehicle trajectory global information are preserved. Besides, to make better use of location information, we propose a spatial relationship modeling method that takes the surrounding environment and the mutual relationships between vehicles into consideration. The qualitative and quantitative experiments verify the effectiveness of the proposed method. We achieve 43.92% MRR accuracy on the test set of the 6th AI City Challenge on the natural language-based vehicle retrieval track, yielding the 1st place among all valid submissions on the public leaderboard. The code is available at https://github.com/hbchen121/AICITY2022_Track2_SSM.

* 8 pages, 3 figures, published at CVPRW
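The MRR figure quoted in the abstract above is a mean reciprocal rank: for each text query, the score is 1 divided by the position of the correct vehicle in the ranked results, averaged over all queries. The sketch below is a generic illustration. The function name `mean_reciprocal_rank` and the toy rankings are assumptions, and the challenge's official scoring script may handle edge cases differently.

```python
from typing import List

def mean_reciprocal_rank(rankings: List[List[str]], targets: List[str]) -> float:
    """Average of 1/rank of the target in each ranking; 0 if the target is missing."""
    total = 0.0
    for ranked, target in zip(rankings, targets):
        if target in ranked:
            total += 1.0 / (ranked.index(target) + 1)
    return total / len(rankings)

# Three queries whose correct vehicle "v1" is ranked 1st, 2nd, and 3rd.
rankings = [
    ["v1", "v2", "v3"],
    ["v2", "v1", "v3"],
    ["v3", "v2", "v1"],
]
targets = ["v1", "v1", "v1"]
mrr = mean_reciprocal_rank(rankings, targets)  # (1 + 1/2 + 1/3) / 3
```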
