Shuangquan Wang

UniHands: Unifying Various Wild-Collected Keypoints for Personalized Hand Reconstruction

Nov 18, 2024

Abstract: Accurate hand motion capture and standardized 3D representation are essential for various hand-related tasks. Collecting keypoints-only data, while efficient and cost-effective, results in low-fidelity representations and lacks surface information. Furthermore, data inconsistencies across sources challenge their integration and use. We present UniHands, a novel method for creating standardized yet personalized hand models from wild-collected keypoints from diverse sources. Unlike existing neural implicit representation methods, UniHands uses the widely-adopted parametric models MANO and NIMBLE, providing a more scalable and versatile solution. It also derives unified hand joints from the meshes, which facilitates seamless integration into various hand-related tasks. Experiments on the FreiHAND and InterHand2.6M datasets demonstrate its ability to precisely reconstruct hand mesh vertices and keypoints, effectively capturing high-degree articulation motions. Empirical studies involving nine participants show a clear preference for our unified joints over existing configurations for accuracy and naturalism (p-value 0.016).

DVIO: Depth aided visual inertial odometry for RGBD sensors

Oct 20, 2021

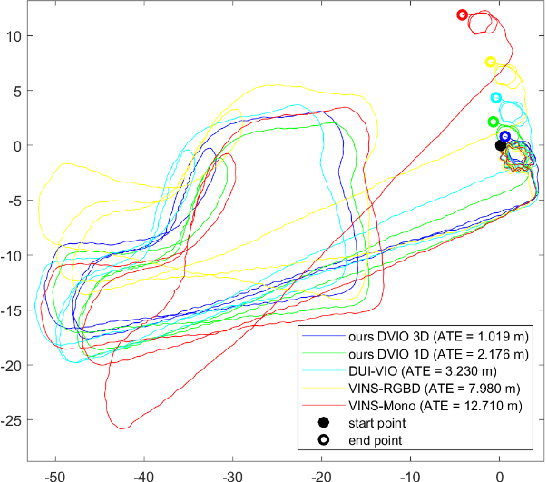

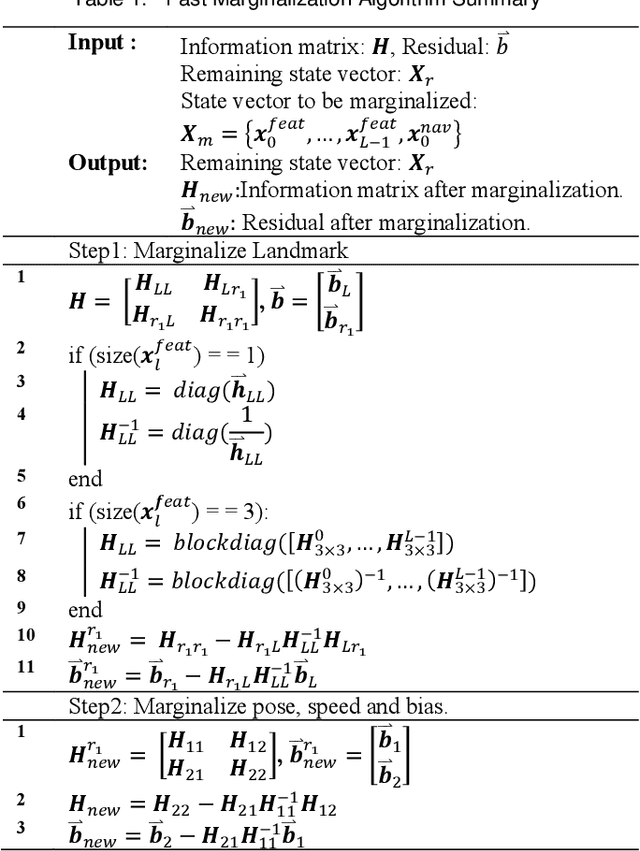

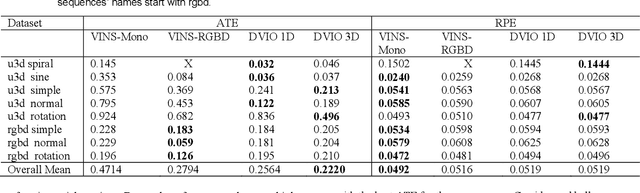

Abstract: In the past few years, we have observed an increase in the usage of RGBD sensors in mobile devices. These sensors provide a good estimate of the depth map for the camera frame, which can be used in numerous augmented reality applications. This paper presents a new visual inertial odometry (VIO) system, which uses measurements from an RGBD sensor and an inertial measurement unit (IMU) sensor to estimate the motion state of the mobile device. The resulting system is called the depth-aided VIO (DVIO) system. In this system, we add the depth measurement as part of the nonlinear optimization process. Specifically, we propose methods to use the depth measurement with one-dimensional (1D) feature parameterization as well as three-dimensional (3D) feature parameterization. In addition, we propose to utilize the depth measurement for estimating the time offset between the unsynchronized IMU and RGBD sensors. Last but not least, we propose a novel block-based marginalization approach to speed up the marginalization process and maintain the real-time performance of the overall system. Experimental results validate that the proposed DVIO system outperforms other state-of-the-art VIO systems in terms of trajectory accuracy as well as processing time.
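For readers unfamiliar with the marginalization step mentioned in the abstract, the sketch below shows the generic Schur-complement marginalization used in sliding-window nonlinear optimization. It is not the paper's block-based approach, whose details the abstract does not give. The function name `marginalize`, the variable ordering (marginalized states first), and the use of NumPy are assumptions made for illustration.

```python
import numpy as np


def marginalize(H, b, m):
    """Marginalize the first m variables of the linear system H @ dx = b.

    Generic Schur-complement sketch (hypothetical helper, not the DVIO
    block-based method). H is assumed symmetric with an invertible
    top-left m x m block.
    """
    H_mm, H_mr = H[:m, :m], H[:m, m:]
    H_rm, H_rr = H[m:, :m], H[m:, m:]
    b_m, b_r = b[:m], b[m:]

    # Solve H_mm @ X = [H_mr | b_m] once instead of forming an explicit inverse.
    X = np.linalg.solve(H_mm, np.column_stack([H_mr, b_m]))

    # Schur complement: the prior left on the remaining variables.
    H_prior = H_rr - H_rm @ X[:, :-1]
    b_prior = b_r - H_rm @ X[:, -1]
    return H_prior, b_prior
```

Solving the reduced system `H_prior @ dx_r = b_prior` yields the same update for the remaining variables as solving the full system, which is why the marginalized states can be dropped from the window without discarding their information.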

NTIRE 2020 Challenge on Real Image Denoising: Dataset, Methods and Results

May 08, 2020

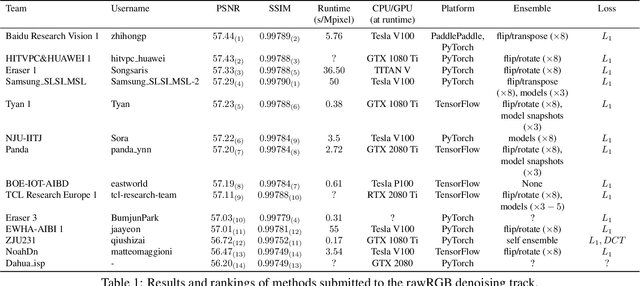

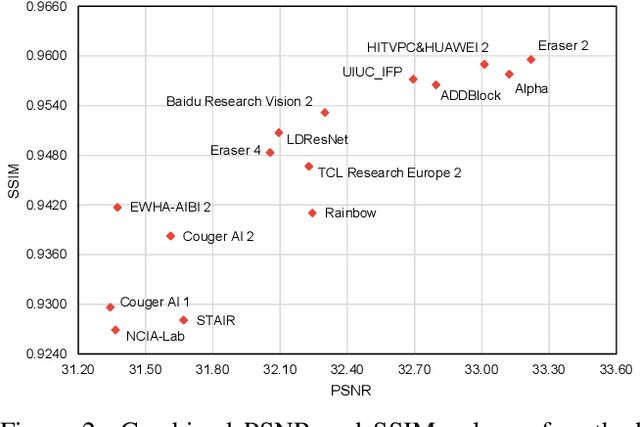

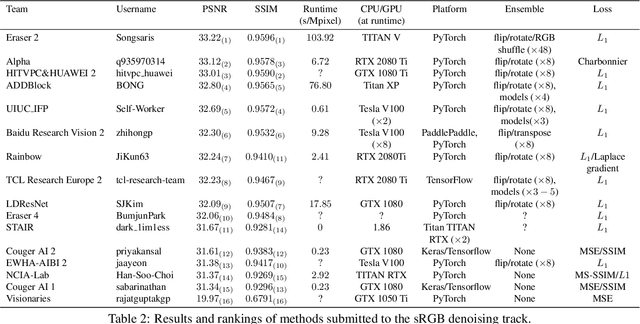

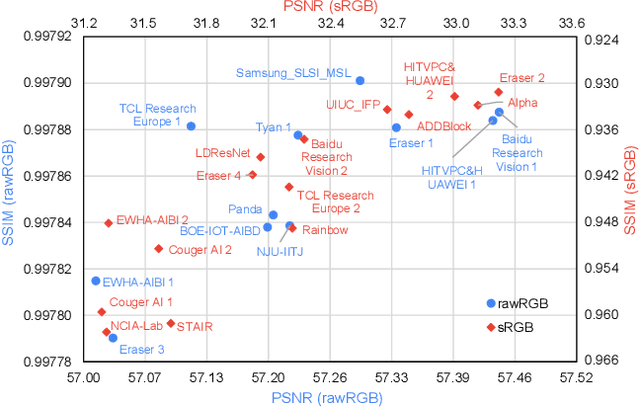

Abstract: This paper reviews the NTIRE 2020 challenge on real image denoising, with a focus on the newly introduced dataset, the proposed methods, and their results. The challenge is a new version of the previous NTIRE 2019 challenge on real image denoising, which was based on the SIDD benchmark. This challenge is based on newly collected validation and testing image datasets and is hence named SIDD+. The challenge has two tracks for quantitatively evaluating image denoising performance in (1) the Bayer-pattern rawRGB and (2) the standard RGB (sRGB) color spaces. Each track had ~250 registered participants. A total of 22 teams, proposing 24 methods, competed in the final phase of the challenge. The methods proposed by the participating teams represent the current state-of-the-art performance in image denoising targeting real noisy images. The newly collected SIDD+ datasets are publicly available at: https://bit.ly/siddplus_data.
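The abstract does not name the metric behind the quantitative evaluation. As an illustration only, the sketch below computes peak signal-to-noise ratio (PSNR), a standard full-reference metric for denoising benchmarks. The function name `psnr`, the flat-list image representation, and the default `peak=1.0` (intensities normalized to [0, 1]) are assumptions, not details taken from the challenge.

```python
import math


def psnr(reference, estimate, peak=1.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel sequences.

    Hypothetical helper: images are assumed flattened to sequences of floats
    whose maximum possible value is `peak`.
    """
    if len(reference) != len(estimate) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")

    # Mean squared error between the clean reference and the denoised estimate.
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)
```

A higher PSNR means the denoised estimate is closer to the ground-truth image. The value depends on `peak`, so scores are only comparable when the same intensity range is used.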

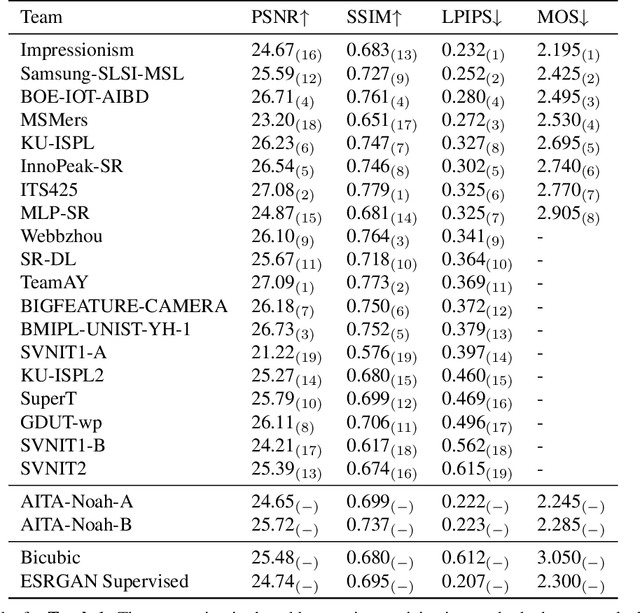

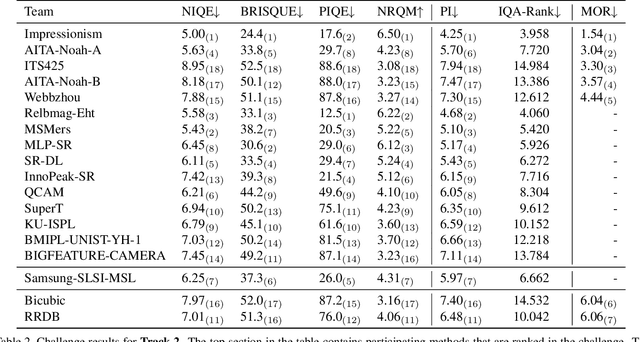

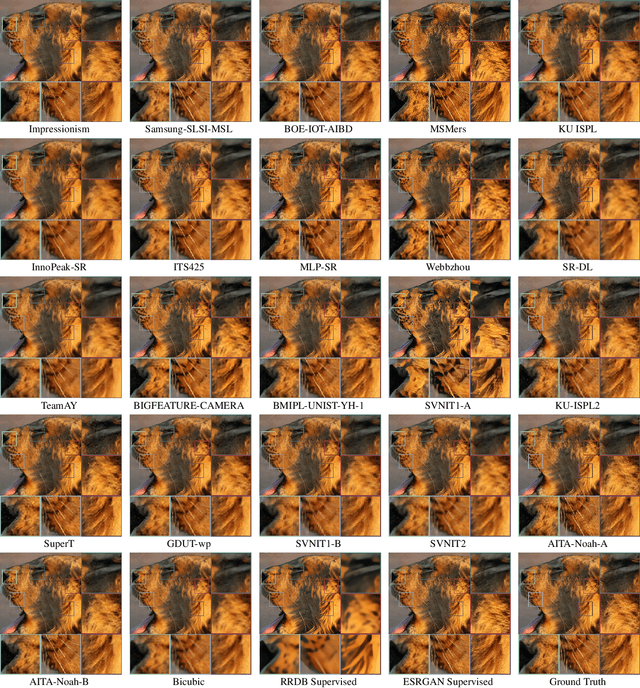

NTIRE 2020 Challenge on Real-World Image Super-Resolution: Methods and Results

May 05, 2020

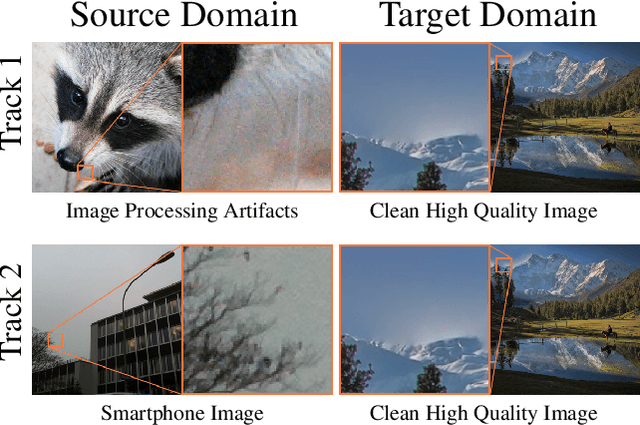

Abstract: This paper reviews the NTIRE 2020 challenge on real-world super-resolution. It focuses on the participating methods and final results. The challenge addresses the real-world setting, where paired true high- and low-resolution images are unavailable. For training, only one set of source input images is therefore provided, along with a set of unpaired high-quality target images. In Track 1: Image Processing Artifacts, the aim is to super-resolve images with synthetically generated image processing artifacts. This allows for quantitative benchmarking of the approaches with respect to a ground-truth image. In Track 2: Smartphone Images, real low-quality smartphone images have to be super-resolved. In both tracks, the ultimate goal is to achieve the best perceptual quality, evaluated using a human study. This is the second challenge on the subject, following AIM 2019, and aims to advance the state of the art in super-resolution. To measure performance, we use the benchmark protocol from AIM 2019. In total, 22 teams competed in the final testing phase, demonstrating new and innovative solutions to the problem.
