Qiang Wang

Adaptive Target-Condition Neural Network: DNN-Aided Load Balancing for Hybrid LiFi and WiFi Networks

Aug 09, 2022

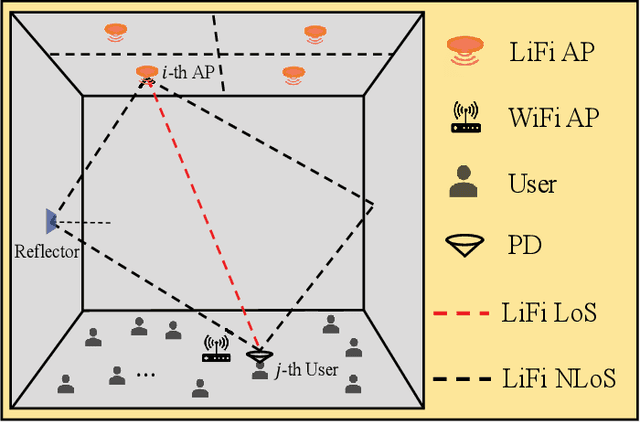

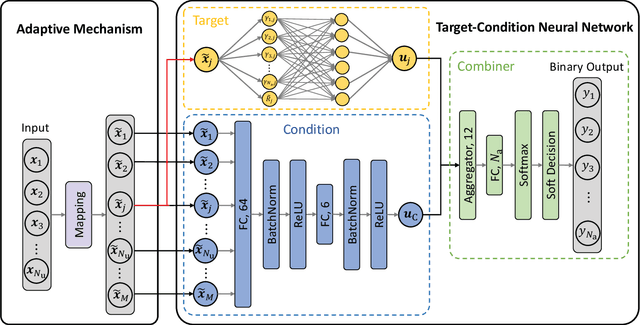

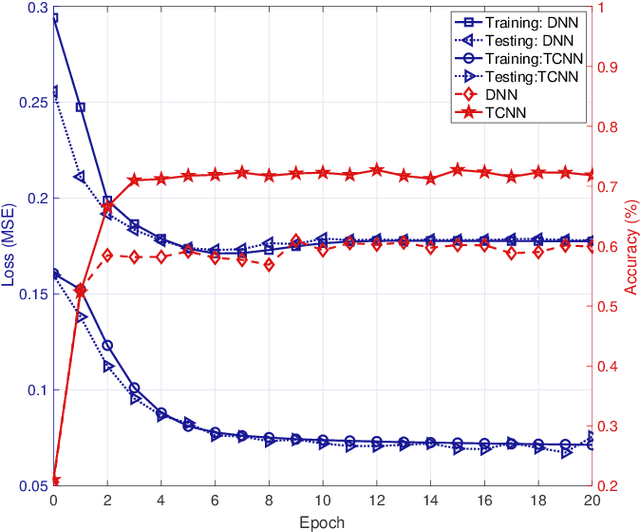

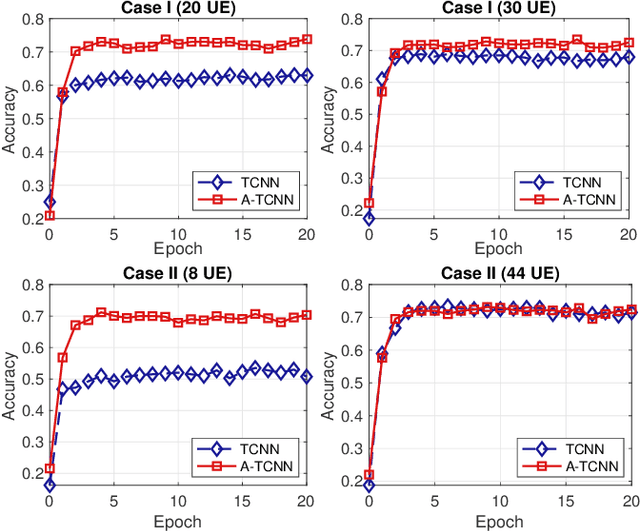

Abstract:Load balancing (LB) is a challenging issue in the hybrid light fidelity (LiFi) and wireless fidelity (WiFi) networks (HLWNets), due to the nature of heterogeneous access points (APs). Machine learning has the potential to provide a complexity-friendly LB solution with near-optimal network performance, at the cost of a training process. The state-of-the-art (SOTA) learning-aided LB methods, however, need retraining when the network environment (especially the number of users) changes, significantly limiting their practicability. In this paper, a novel deep neural network (DNN) structure named adaptive target-condition neural network (A-TCNN) is proposed, which conducts AP selection for one target user upon the condition of other users. Also, an adaptive mechanism is developed to map a smaller number of users to a larger number through splitting their data rate requirements, without affecting the AP selection result for the target user. This enables the proposed method to handle different numbers of users without the need for retraining. Results show that A-TCNN achieves a network throughput very close to that of the testing dataset, with a gap less than 3%. It is also proven that A-TCNN can obtain a network throughput comparable to two SOTA benchmarks, while reducing the runtime by up to three orders of magnitude.
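The abstract does not state how data rate requirements are split, so the following is only a minimal sketch of the general idea of mapping fewer users onto a fixed, larger number of input slots. The function name `pad_users_by_splitting`, the "halve the largest requirement" rule, and the slot count are assumptions made for illustration, not the paper's mechanism.

```python
from typing import List


def pad_users_by_splitting(rates: List[float], n_slots: int) -> List[float]:
    """Grow a list of (non-target) users' rate requirements to n_slots entries.

    Hypothetical rule: repeatedly replace the largest requirement with two
    virtual users that each ask for half of it, so total demand is unchanged.
    """
    if not rates or len(rates) > n_slots:
        raise ValueError("need between 1 and n_slots users")
    out = list(rates)
    while len(out) < n_slots:
        i = max(range(len(out)), key=out.__getitem__)  # index of largest requirement
        half = out.pop(i) / 2.0
        out.extend([half, half])
    return out


padded = pad_users_by_splitting([10.0, 4.0], 4)
```

In this toy run two users become four virtual users while the total requested rate stays at 14. Whether such a split leaves the target user's AP selection unchanged is the paper's claim about its own mechanism and is not demonstrated by this sketch.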

An Efficient Framework for Few-shot Skeleton-based Temporal Action Segmentation

Jul 20, 2022

Abstract:Temporal action segmentation (TAS) aims to classify and locate actions in the long untrimmed action sequence. With the success of deep learning, many deep models for action segmentation have emerged. However, few-shot TAS is still a challenging problem. This study proposes an efficient framework for the few-shot skeleton-based TAS, including a data augmentation method and an improved model. The data augmentation approach based on motion interpolation is presented here to solve the problem of insufficient data, and can increase the number of samples significantly by synthesizing action sequences. Besides, we concatenate a Connectionist Temporal Classification (CTC) layer with a network designed for skeleton-based TAS to obtain an optimized model. Leveraging CTC can enhance the temporal alignment between prediction and ground truth and further improve the segment-wise metrics of segmentation results. Extensive experiments on both public and self-constructed datasets, including two small-scale datasets and one large-scale dataset, show the effectiveness of two proposed methods in improving the performance of the few-shot skeleton-based TAS task.
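As a rough illustration of augmentation by motion interpolation, the sketch below resamples a skeleton sequence to a different length by linearly interpolating joint coordinates between neighbouring frames. The data layout (`Frame` as a list of joints) and the helper names are assumptions; the paper's actual synthesis procedure may differ.

```python
from typing import List

Frame = List[List[float]]  # one frame: a list of joints, each a list of coordinates


def interpolate_frames(a: Frame, b: Frame, t: float) -> Frame:
    """Linearly blend two skeleton frames; t=0 returns a, t=1 returns b."""
    return [[(1.0 - t) * pa + t * pb for pa, pb in zip(ja, jb)]
            for ja, jb in zip(a, b)]


def resample_sequence(seq: List[Frame], new_len: int) -> List[Frame]:
    """Resample a skeleton sequence to new_len frames by interpolating in time."""
    if new_len < 2 or len(seq) < 2:
        raise ValueError("need at least two frames in and out")
    out = []
    for i in range(new_len):
        pos = i * (len(seq) - 1) / (new_len - 1)  # fractional source index
        lo = min(int(pos), len(seq) - 2)          # left neighbour frame
        out.append(interpolate_frames(seq[lo], seq[lo + 1], pos - lo))
    return out


# One joint moving from (0, 0) to (2, 4), stretched from 2 frames to 3.
stretched = resample_sequence([[[0.0, 0.0]], [[2.0, 4.0]]], 3)
```

For a segmentation task the per-frame action labels would have to be resampled alongside the joints; that step is omitted here.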

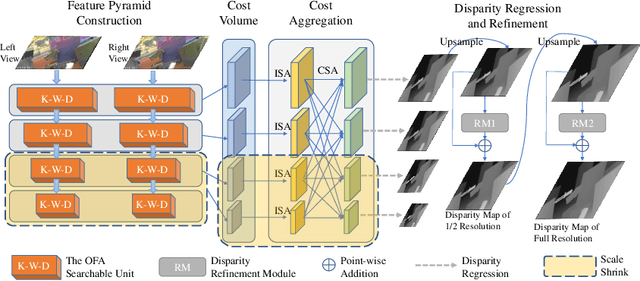

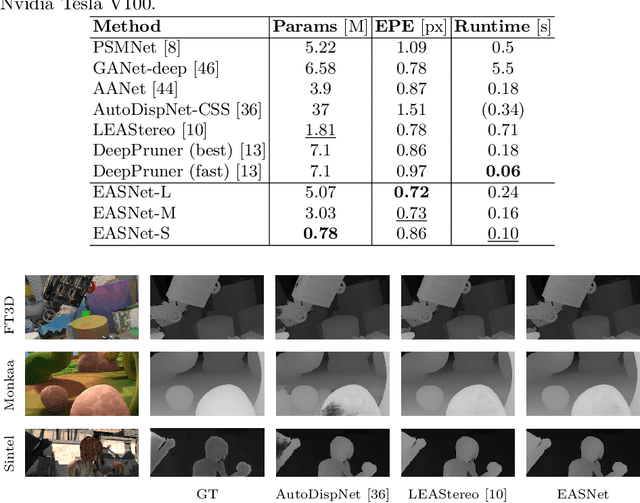

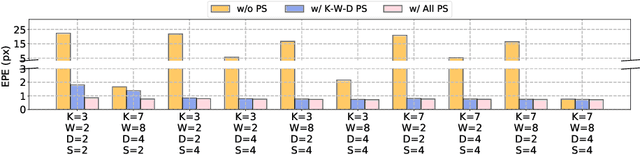

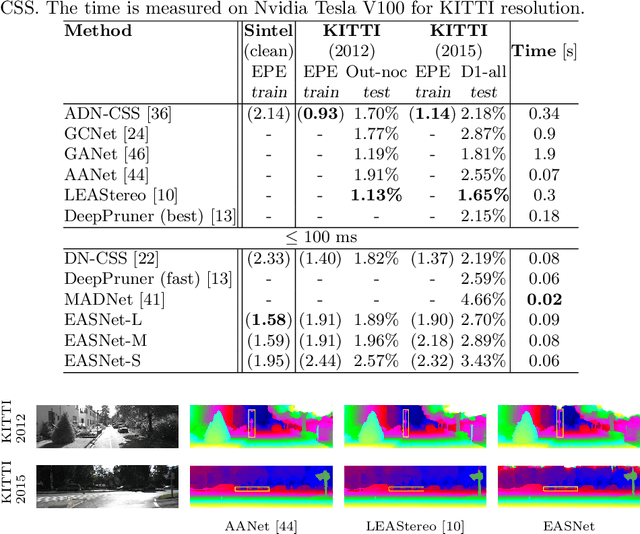

EASNet: Searching Elastic and Accurate Network Architecture for Stereo Matching

Jul 20, 2022

Abstract:Recent advanced studies have spent considerable human efforts on optimizing network architectures for stereo matching but hardly achieved both high accuracy and fast inference speed. To ease the workload in network design, neural architecture search (NAS) has been applied with great success to various sparse prediction tasks, such as image classification and object detection. However, existing NAS studies on the dense prediction task, especially stereo matching, still cannot be efficiently and effectively deployed on devices of different computing capabilities. To this end, we propose to train an elastic and accurate network for stereo matching (EASNet) that supports various 3D architectural settings on devices with different computing capabilities. Given the deployment latency constraint on the target device, we can quickly extract a sub-network from the full EASNet without additional training while the accuracy of the sub-network can still be maintained. Extensive experiments show that our EASNet outperforms both state-of-the-art human-designed and NAS-based architectures on Scene Flow and MPI Sintel datasets in terms of model accuracy and inference speed. Particularly, deployed on an inference GPU, EASNet achieves a new SOTA 0.73 EPE on the Scene Flow dataset with 100 ms, which is 4.5× faster than LEAStereo with a better quality model.

Automatic dataset generation for specific object detection

Jul 16, 2022

Abstract:Over the past decade, object detection tasks have been defined mostly by large public datasets. However, building object detection datasets is not scalable due to inefficient image collecting and labeling. Furthermore, most labels are still in the form of bounding boxes, which provide much less information than the real human visual system. In this paper, we present a method to synthesize object-in-scene images, which can preserve the objects' detailed features without bringing irrelevant information. In brief, given a set of images containing a target object, our algorithm first trains a model to find an approximate center of the object as an anchor, then makes an outline regression to estimate its boundary, and finally blends the object into a new scene. Our result shows that in the synthesized image, the boundaries of objects blend very well with the background. Experiments also show that SOTA segmentation models work well with our synthesized data.

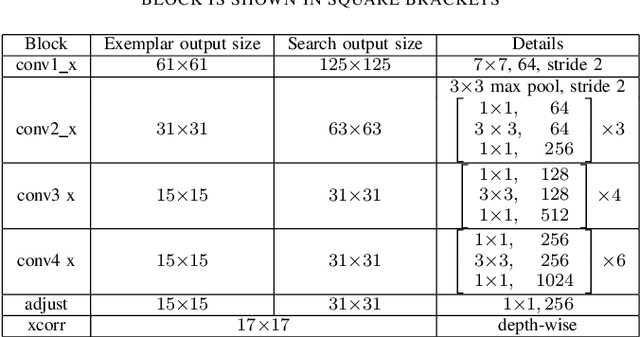

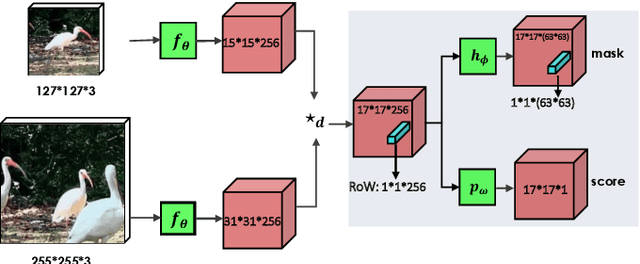

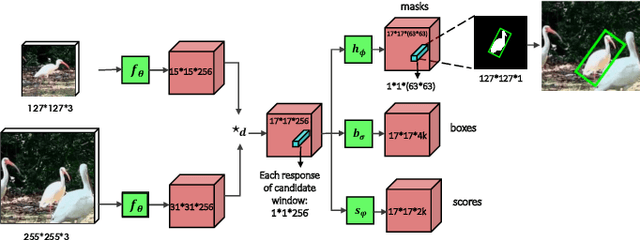

SiamMask: A Framework for Fast Online Object Tracking and Segmentation

Jul 05, 2022

Abstract:In this paper we introduce SiamMask, a framework to perform both visual object tracking and video object segmentation, in real-time, with the same simple method. We improve the offline training procedure of popular fully-convolutional Siamese approaches by augmenting their losses with a binary segmentation task. Once the offline training is completed, SiamMask only requires a single bounding box for initialization and can simultaneously carry out visual object tracking and segmentation at high frame-rates. Moreover, we show that it is possible to extend the framework to handle multiple object tracking and segmentation by simply re-using the multi-task model in a cascaded fashion. Experimental results show that our approach has high processing efficiency, at around 55 frames per second. It yields real-time state-of-the-art results on visual-object tracking benchmarks, while at the same time demonstrating competitive performance at a high speed for video object segmentation benchmarks.

* 17 pages, Accepted by TPAMI 2022. arXiv admin note: substantial text overlap with arXiv:1812.05050

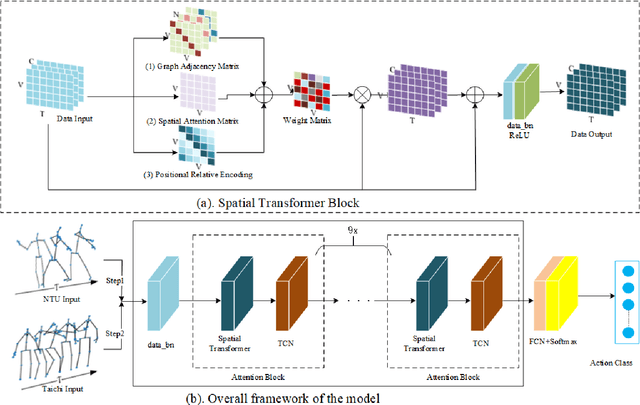

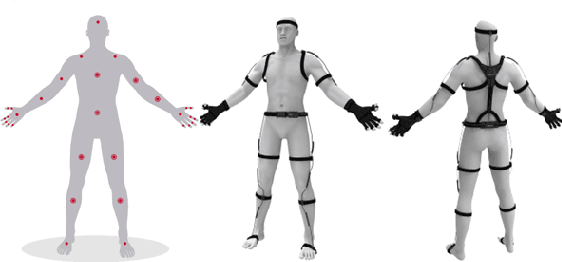

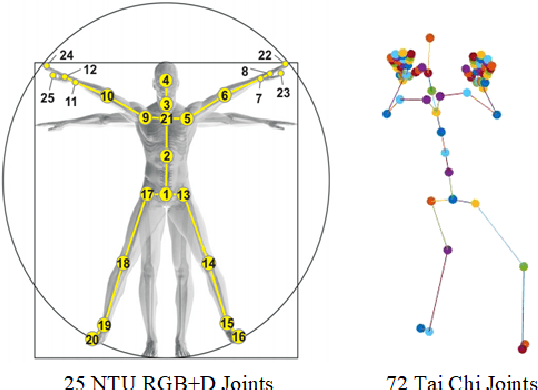

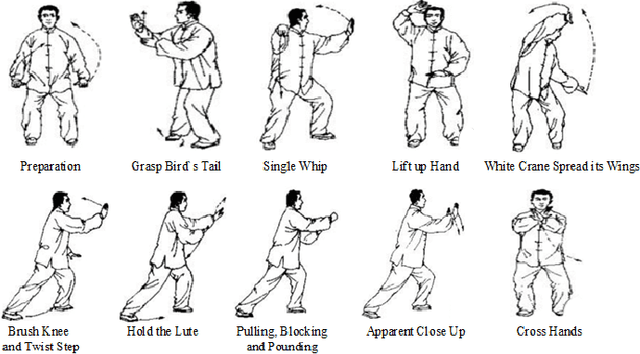

Spatial Transformer Network with Transfer Learning for Small-scale Fine-grained Skeleton-based Tai Chi Action Recognition

Jun 30, 2022

Abstract:Human action recognition is a widely investigated area where most remarkable action recognition networks usually use large-scale coarse-grained action datasets of daily human actions as inputs to demonstrate the superiority of their networks. We intend to recognize our small-scale fine-grained Tai Chi action dataset using neural networks and propose a transfer-learning method using the NTU RGB+D dataset to pre-train our network. More specifically, the proposed method first uses the large-scale NTU RGB+D dataset to pre-train the Transformer-based network for action recognition to extract common features among human motion. Then we freeze the network weights except for the fully connected (FC) layer and take our Tai Chi actions as inputs only to train the initialized FC weights. Experimental results show that our general model pipeline can reach a high accuracy of small-scale fine-grained Tai Chi action recognition even with few inputs and demonstrate that our method achieves the state-of-the-art performance compared with previous Tai Chi action recognition methods.

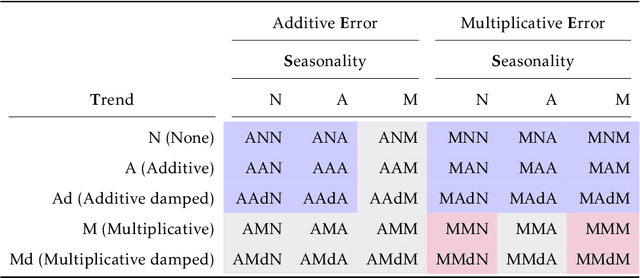

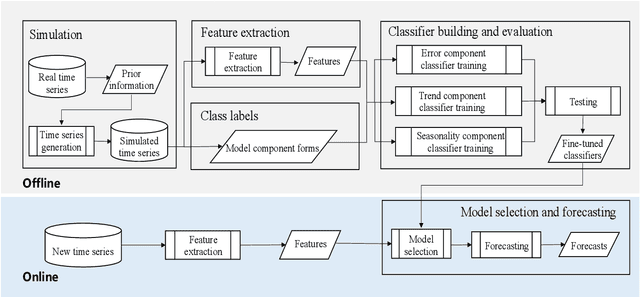

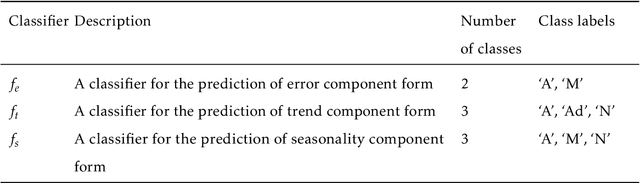

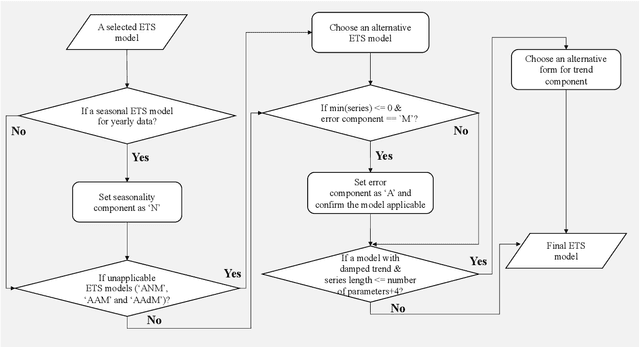

fETSmcs: Feature-based ETS model component selection

Jun 26, 2022

Abstract:The well-developed ETS (ExponenTial Smoothing or Error, Trend, Seasonality) method incorporating a family of exponential smoothing models in state space representation has been widely used for automatic forecasting. The existing ETS method uses information criteria for model selection by choosing an optimal model with the smallest information criterion among all models fitted to a given time series. The ETS method under such a model selection scheme suffers from computational complexity when applied to large-scale time series data. To tackle this issue, we propose an efficient approach for ETS model selection by training classifiers on simulated data to predict appropriate model component forms for a given time series. We provide a simulation study to show the model selection ability of the proposed approach on simulated data. We evaluate our approach on the widely used forecasting competition data set M4, in terms of both point forecasts and prediction intervals. To demonstrate the practical value of our method, we showcase the performance improvements from our approach on a monthly hospital data set.
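For context, the conventional selection scheme that the abstract describes (fit every candidate model, keep the one with the smallest information criterion) can be sketched as below using the Akaike information criterion, AIC = 2k - 2 ln L. The log-likelihoods and parameter counts are made-up placeholder values, and the sketch shows the baseline scheme rather than the proposed classifier-based approach.

```python
from typing import Dict, Tuple


def aic(log_likelihood: float, n_params: int) -> float:
    """Akaike information criterion: 2k - 2 ln(L)."""
    return 2 * n_params - 2 * log_likelihood


def select_by_aic(fits: Dict[str, Tuple[float, int]]) -> str:
    """Return the name of the candidate with the smallest AIC."""
    return min(fits, key=lambda name: aic(*fits[name]))


# Hypothetical fits: model name -> (maximised log-likelihood, number of parameters).
fits = {
    "ETS(A,N,N)": (-120.0, 3),
    "ETS(A,A,N)": (-118.5, 5),
    "ETS(A,A,A)": (-117.9, 17),
}
best = select_by_aic(fits)
```

The cost the abstract refers to comes from having to fit every candidate to every series before this comparison can be made; a classifier that predicts the component forms directly would skip most of those fits.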

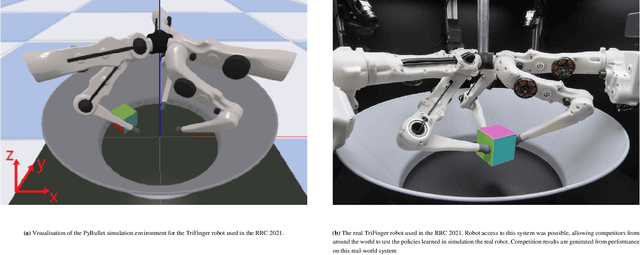

Dexterous Robotic Manipulation using Deep Reinforcement Learning and Knowledge Transfer for Complex Sparse Reward-based Tasks

May 19, 2022

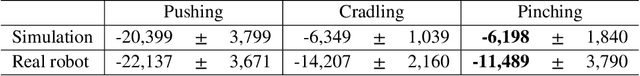

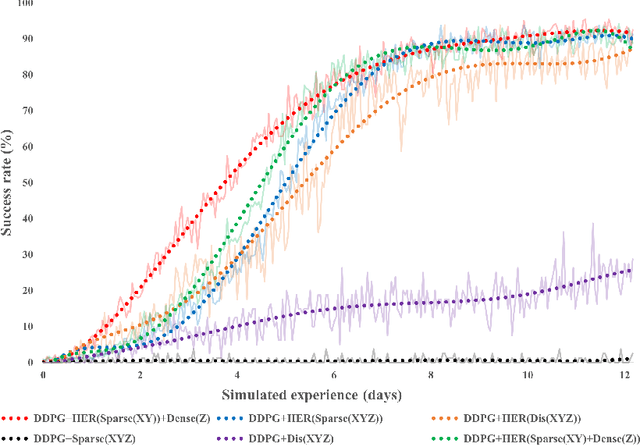

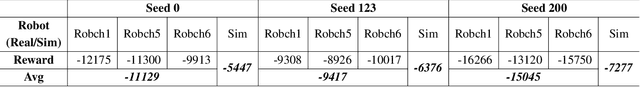

Abstract:This paper describes a deep reinforcement learning (DRL) approach that won Phase 1 of the Real Robot Challenge (RRC) 2021, and then extends this method to a more difficult manipulation task. The RRC consisted of using a TriFinger robot to manipulate a cube along a specified positional trajectory, but with no requirement for the cube to have any specific orientation. We used a relatively simple reward function, a combination of goal-based sparse reward and distance reward, in conjunction with Hindsight Experience Replay (HER) to guide the learning of the DRL agent (Deep Deterministic Policy Gradient (DDPG)). Our approach allowed our agents to acquire dexterous robotic manipulation strategies in simulation. These strategies were then applied to the real robot and outperformed all other competition submissions, including those using more traditional robotic control techniques, in the final evaluation stage of the RRC. Here we extend this method, by modifying the task of Phase 1 of the RRC to require the robot to maintain the cube in a particular orientation, while the cube is moved along the required positional trajectory. The requirement to also orient the cube makes the agent unable to learn the task through blind exploration due to increased problem complexity. To circumvent this issue, we make novel use of a Knowledge Transfer (KT) technique that allows the strategies learned by the agent in the original task (which was agnostic to cube orientation) to be transferred to this task (where orientation matters). KT allowed the agent to learn and perform the extended task in the simulator, which improved the average positional deviation from 0.134 m to 0.02 m, and average orientation deviation from 142° to 76° during evaluation. This KT concept shows good generalisation properties and could be applied to any actor-critic learning algorithm.
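The combination of a sparse goal reward, a distance reward, and HER can be illustrated with the minimal sketch below. The tolerance `tol`, the weight `dist_weight`, the 0/-1 sparse convention, and the use of the "final" relabelling strategy are all assumptions chosen for illustration; the abstract does not give the exact reward or HER settings used.

```python
import math
from typing import List, Sequence, Tuple

Transition = Tuple[Sequence[float], Sequence[float]]  # (achieved position, goal position)


def reward(achieved: Sequence[float], goal: Sequence[float],
           tol: float = 0.05, dist_weight: float = 1.0) -> float:
    """Sparse goal term (0 on success, -1 otherwise) plus a negative distance term."""
    d = math.dist(achieved, goal)
    sparse = 0.0 if d <= tol else -1.0
    return sparse - dist_weight * d


def her_relabel(episode: List[Transition]):
    """'Final' HER strategy: treat the last achieved position as if it had been the goal."""
    new_goal = episode[-1][0]
    return [(ach, new_goal, reward(ach, new_goal)) for ach, _ in episode]


# The cube never reaches the real goal (1, 0, 0), but relabelling still yields a success.
episode = [((0.0, 0.0, 0.0), (1.0, 0.0, 0.0)),
           ((0.5, 0.0, 0.0), (1.0, 0.0, 0.0))]
relabelled = her_relabel(episode)
```

In a full training loop the relabelled transitions would typically be stored in the replay buffer alongside the original ones, which gives the agent informative reward signal even from episodes that missed the real goal.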

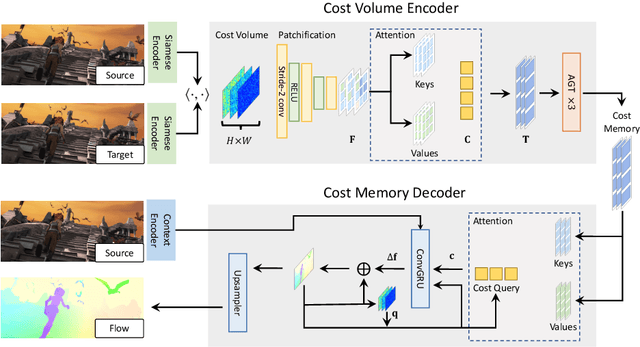

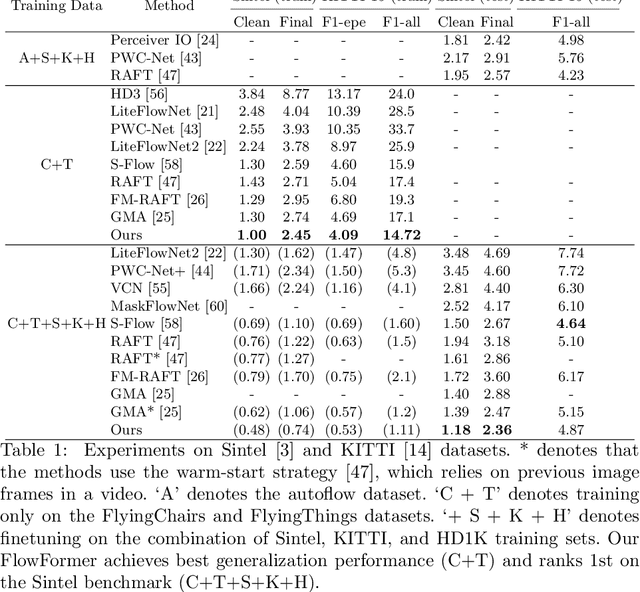

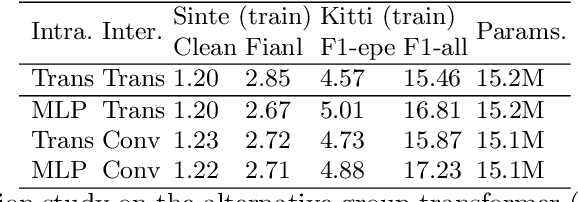

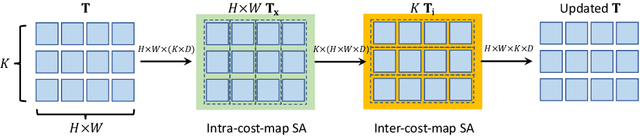

FlowFormer: A Transformer Architecture for Optical Flow

Mar 30, 2022

Abstract:We introduce Optical Flow TransFormer (FlowFormer), a transformer-based neural network architecture for learning optical flow. FlowFormer tokenizes the 4D cost volume built from an image pair, encodes the cost tokens into a cost memory with alternate-group transformer (AGT) layers in a novel latent space, and decodes the cost memory via a recurrent transformer decoder with dynamic positional cost queries. On the Sintel benchmark clean pass, FlowFormer achieves 1.178 average end-point-error (AEPE), a 15.1% error reduction from the best published result (1.388). Besides, FlowFormer also achieves strong generalization performance. Without being trained on Sintel, FlowFormer achieves 1.00 AEPE on the Sintel training set clean pass, outperforming the best published result (1.29) by 22.4%.
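For readers unfamiliar with the metric, average end-point error is the mean Euclidean distance between predicted and ground-truth flow vectors. The sketch below computes it on a flat list of per-pixel `(u, v)` displacements and also shows how a relative error reduction is obtained; the function names and the flat-list layout are illustrative choices, not part of FlowFormer.

```python
import math
from typing import List, Tuple

Flow = List[Tuple[float, float]]  # one (u, v) displacement per pixel


def aepe(pred: Flow, gt: Flow) -> float:
    """Average end-point error: mean Euclidean distance between flow vectors."""
    if len(pred) != len(gt) or not pred:
        raise ValueError("flows must be non-empty and the same length")
    total = sum(math.hypot(pu - gu, pv - gv)
                for (pu, pv), (gu, gv) in zip(pred, gt))
    return total / len(pred)


def relative_reduction(new: float, old: float) -> float:
    """Percentage by which the error `new` is lower than the error `old`."""
    return 100.0 * (old - new) / old


# Two pixels: one is off by (3, 4), the other is exact.
error = aepe([(3.0, 4.0), (0.0, 0.0)], [(0.0, 0.0), (0.0, 0.0)])
sintel_clean_gain = relative_reduction(1.178, 1.388)
```

With the Sintel clean-pass figures quoted above, `relative_reduction(1.178, 1.388)` evaluates to roughly 15.1, in line with the reported reduction.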

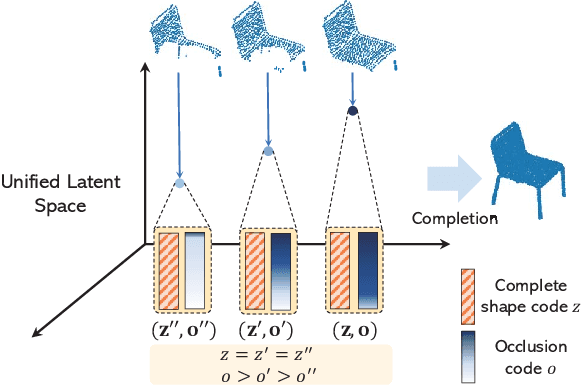

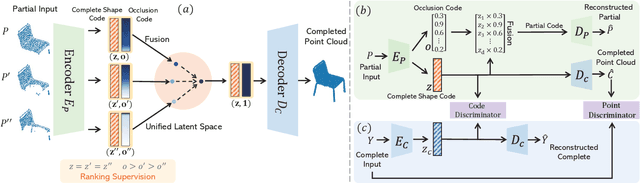

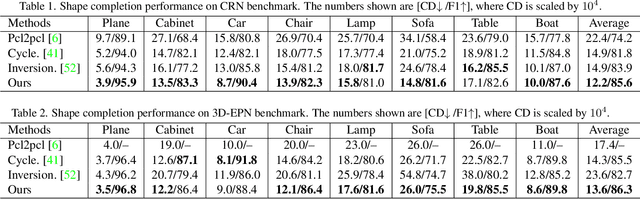

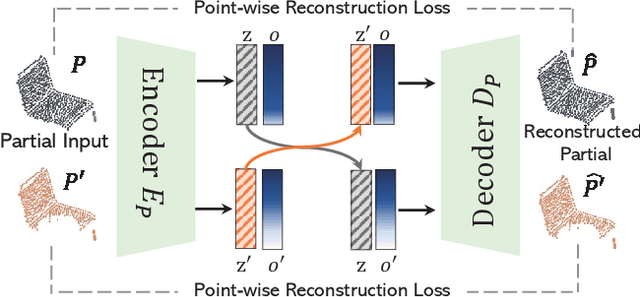

Learning a Structured Latent Space for Unsupervised Point Cloud Completion

Mar 29, 2022

Abstract:Unsupervised point cloud completion aims at estimating the corresponding complete point cloud of a partial point cloud in an unpaired manner. It is a crucial but challenging problem since there is no paired partial-complete supervision that can be exploited directly. In this work, we propose a novel framework, which learns a unified and structured latent space that encodes both partial and complete point clouds. Specifically, we map a series of related partial point clouds into multiple complete shape and occlusion code pairs and fuse the codes to obtain their representations in the unified latent space. To enforce the learning of such a structured latent space, the proposed method adopts a series of constraints including structured ranking regularization, latent code swapping constraint, and distribution supervision on the related partial point clouds. By establishing such a unified and structured latent space, better partial-complete geometry consistency and shape completion accuracy can be achieved. Extensive experiments show that our proposed method consistently outperforms state-of-the-art unsupervised methods on both synthetic ShapeNet and real-world KITTI, ScanNet, and Matterport3D datasets.

* 8 pages, 5 figures, CVPR 2022
