Pratap Tokekar

University of Maryland, College Park

LANCAR: Leveraging Language for Context-Aware Robot Locomotion in Unstructured Environments

Sep 30, 2023

Abstract:Robotic locomotion is a challenging task, especially in unstructured terrains. In practice, the optimal locomotion policy can be context-dependent, using contextual information about the encountered terrain in decision-making. Humans can interpret the environmental context for robots, but the ambiguity of human language makes it challenging to use directly in robot locomotion. In this paper, we propose a novel approach, LANCAR, that introduces a context translator that works with reinforcement learning (RL) agents for context-aware locomotion. Our formulation allows a robot to interpret contextual information about the environment, generated by human observers or Vision-Language Models (VLMs), with Large Language Models (LLMs) and to use this information to generate contextual embeddings. We combine the contextual embeddings with the robot's internal environmental observations as the input to the RL agent's decision neural network. We evaluate LANCAR with contextual information at varying ambiguity levels and compare its performance against several alternative approaches. Our experimental results demonstrate that our approach exhibits good generalizability and adaptability across diverse terrains, achieving at least a 10% improvement in episodic reward over baselines. The experiment video can be found at the following link: https://raaslab.org/projects/LLM_Context_Estimation/.

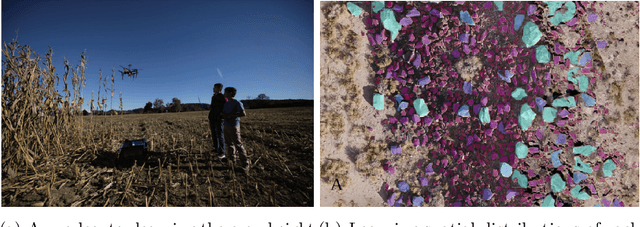

UIVNAV: Underwater Information-driven Vision-based Navigation via Imitation Learning

Sep 15, 2023

Abstract:Autonomous navigation in the underwater environment is challenging due to limited visibility, dynamic changes, and the lack of a cost-efficient accurate localization system. We introduce UIVNav, a novel end-to-end underwater navigation solution designed to drive robots over Objects of Interest (OOI) while avoiding obstacles, without relying on localization. UIVNav uses imitation learning and is inspired by the navigation strategies used by human divers who do not rely on localization. UIVNav consists of the following phases: (1) generating an intermediate representation (IR), and (2) training the navigation policy based on human-labeled IR. By training the navigation policy on IR instead of raw data, the second phase is domain-invariant -- the navigation policy does not need to be retrained if the domain or the OOI changes. We show this by deploying the same navigation policy for surveying two different OOIs, oyster and rock reefs, in two different domains, simulation and a real pool. We compared our method with complete coverage and random walk methods, which showed that our method is more efficient in gathering information for OOIs while also avoiding obstacles. The results show that UIVNav chooses to visit the areas with larger area sizes of oysters or rocks with no prior information about the environment or localization. Moreover, a robot using UIVNav surveys on average 36% more oysters than one using the complete coverage method when traveling the same distance. We also demonstrate the feasibility of real-time deployment of UIVNav in pool experiments with a BlueROV underwater robot for surveying a bed of oyster shells.

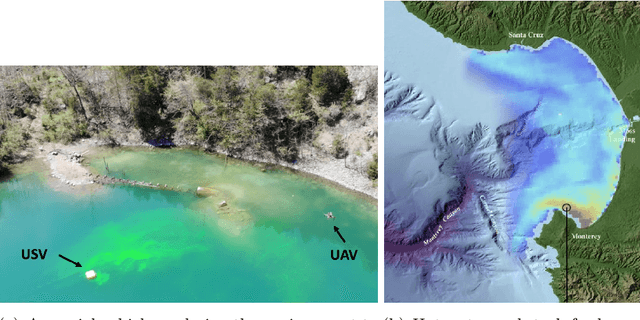

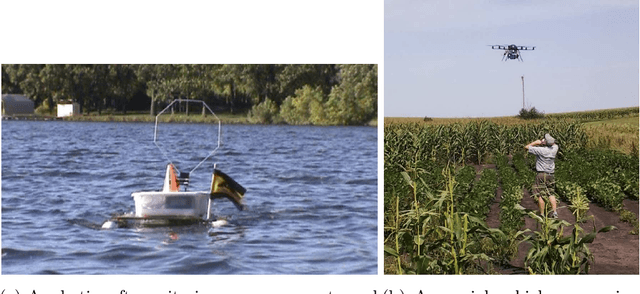

Efficiently Identifying Hotspots in a Spatially Varying Field with Multiple Robots

Sep 14, 2023

Abstract:In this paper, we present algorithms to identify environmental hotspots using mobile sensors. We examine two approaches: one involving a single robot and another using multiple robots coordinated through a decentralized robot system. We introduce an adaptive algorithm that does not require precise knowledge of Gaussian Process (GP) hyperparameters, making the modeling process more flexible. The robots operate for a pre-defined time in the environment. The multi-robot system uses Voronoi partitioning to divide tasks and a Monte Carlo Tree Search for optimal path planning. Our tests on synthetic data and a real-world dataset of Chlorophyll density from a Pacific Ocean sub-region suggest that accurate estimation of GP hyperparameters may not be essential for hotspot detection, potentially simplifying environmental monitoring tasks.

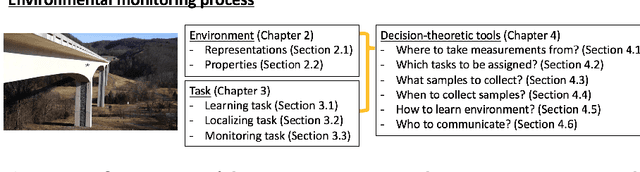
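As a rough illustration of the Voronoi partitioning step mentioned in the abstract above, the following minimal sketch assigns each cell of a discretized field to its nearest robot. The function name `voronoi_partition`, the grid layout, and the tie-breaking rule (lowest robot index wins) are assumptions made for this example; the paper's decentralized implementation and its Monte Carlo Tree Search planner are not reproduced here.

```python
import math


def voronoi_partition(robots, cells):
    """Assign each cell to the index of its nearest robot (Euclidean distance).

    Hypothetical helper for illustration only. Ties go to the robot with the
    lowest index, because min() returns the first minimal element.
    """
    regions = {i: [] for i in range(len(robots))}
    for cell in cells:
        nearest = min(range(len(robots)),
                      key=lambda i: math.dist(robots[i], cell))
        regions[nearest].append(cell)
    return regions


# Two robots at opposite corners of a 5x5 grid of sample locations.
robots = [(0.0, 0.0), (4.0, 4.0)]
cells = [(x, y) for x in range(5) for y in range(5)]
regions = voronoi_partition(robots, cells)

for idx, assigned in regions.items():
    print(f"robot {idx} is responsible for {len(assigned)} cells")
```

Each robot would then plan its sampling path only within its own region, which is one simple way to divide the monitoring task without central coordination.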

Decision-Theoretic Approaches for Robotic Environmental Monitoring -- A Survey

Aug 04, 2023

Abstract:Robotics has dramatically increased our ability to gather data about our environments. This is an opportune time for the robotics and algorithms community to come together to contribute novel solutions to pressing environmental monitoring problems. In order to do so, it is useful to consider a taxonomy of problems and methods in this realm. We present the first comprehensive summary of decision theoretic approaches that are enabling efficient sampling of various kinds of environmental processes. Representations for different kinds of environments are explored, followed by a discussion of tasks of interest such as learning, localization, or monitoring. Finally, various algorithms to carry out these tasks are presented, along with a few illustrative prior results from the community.

Where to Drop Sensors from Aerial Robots to Monitor a Surface-Level Phenomenon?

Jul 10, 2023

Abstract:We consider the problem of routing a team of energy-constrained Unmanned Aerial Vehicles (UAVs) to drop unmovable sensors for monitoring a task area in the presence of stochastic wind disturbances. In prior work on mobile sensor routing problems, sensors and their carrier are one integrated platform, and sensors are assumed to be able to take measurements at exactly desired locations. By contrast, airdropping the sensors onto the ground can introduce stochasticity in the landing locations of the sensors. We focus on addressing this stochasticity in sensor locations from the path-planning perspective. Specifically, we formulate the problem (Multi-UAV Sensor Drop) as a variant of the Submodular Team Orienteering Problem with one additional constraint on the number of sensors on each UAV. The objective is to maximize the Mutual Information between the phenomenon at Points of Interest (PoIs) and the measurements that sensors will take at stochastic locations. We show that such an objective is computationally expensive to evaluate. To tackle this challenge, we propose a surrogate objective with a closed-form expression based on the expected mean and expected covariance of the Gaussian Process. We propose a heuristic algorithm to solve the optimization problem with the surrogate objective. The formulation and the algorithms are validated through extensive simulations.

MAP-NBV: Multi-agent Prediction-guided Next-Best-View Planning for Active 3D Object Reconstruction

Jul 08, 2023

Abstract:We propose MAP-NBV, a prediction-guided active algorithm for 3D reconstruction with multi-agent systems. Prediction-based approaches have shown great improvement in active perception tasks by learning the cues about structures in the environment from data. But these methods primarily focus on single-agent systems. We design a next-best-view approach that utilizes geometric measures over the predictions and jointly optimizes the information gain and control effort for efficient collaborative 3D reconstruction of the object. Our method achieves 22.75% improvement over the prediction-based single-agent approach and 15.63% improvement over the non-predictive multi-agent approach. We make our code publicly available through our project website: http://raaslab.org/projects/MAPNBV/

ProxMaP: Proximal Occupancy Map Prediction for Efficient Indoor Robot Navigation

May 10, 2023

Abstract:In a typical path planning pipeline for a ground robot, we build a map (e.g., an occupancy grid) of the environment as the robot moves around. While navigating indoors, a ground robot's knowledge about the environment may be limited due to occlusions. Therefore, the map will have many as-yet-unknown regions that may need to be avoided by a conservative planner. Instead, if a robot is able to correctly predict what its surroundings and occluded regions look like, the robot may be more efficient in navigation. In this work, we focus on predicting occupancy within the reachable distance of the robot to enable faster navigation and present a self-supervised proximity occupancy map prediction method, named ProxMaP. We show that ProxMaP generalizes well across realistic and real domains, and improves the robot navigation efficiency in simulation by 12.40% against the traditional navigation method. We share our findings on our project webpage (see https://raaslab.org/projects/ProxMaP).

Pred-NBV: Prediction-guided Next-Best-View for 3D Object Reconstruction

Apr 22, 2023

Abstract:Prediction-based active perception has shown the potential to improve the navigation efficiency and safety of the robot by anticipating the uncertainty in the unknown environment. The existing works for 3D shape prediction make an implicit assumption about the partial observations and therefore cannot be used for real-world planning and do not consider the control effort for next-best-view planning. We present Pred-NBV, a realistic object shape reconstruction method consisting of PoinTr-C, an enhanced 3D prediction model trained on the ShapeNet dataset, and an information and control effort-based next-best-view method to address these issues. Pred-NBV shows an improvement of 25.46% in object coverage over the traditional method in the AirSim simulator, and performs better shape completion than PoinTr, the state-of-the-art shape completion model, even on real data obtained from a Velodyne 3D LiDAR mounted on DJI M600 Pro.

RE-MOVE: An Adaptive Policy Design Approach for Dynamic Environments via Language-Based Feedback

Mar 14, 2023

Abstract:Reinforcement learning-based policies for continuous control robotic navigation tasks often fail to adapt to changes in the environment during real-time deployment, which may result in catastrophic failures. To address this limitation, we propose a novel approach called RE-MOVE (REquest help and MOVE on), which uses language-based feedback to adjust trained policies to real-time changes in the environment. In this work, we enable the trained policy to decide when to ask for feedback and how to incorporate feedback into trained policies. RE-MOVE incorporates epistemic uncertainty to determine the optimal time to request feedback from humans and uses language-based feedback for real-time adaptation. We perform extensive synthetic and real-world evaluations to demonstrate the benefits of our proposed approach in several test-time dynamic navigation scenarios. Our approach enables robots to learn from human feedback and adapt to previously unseen adversarial situations.

Data-Driven Distributionally Robust Optimal Control with State-Dependent Noise

Mar 04, 2023

Abstract:This paper introduces innovative data-driven techniques for estimating the noise distribution and KL divergence bound for distributionally robust optimal control (DROC). The proposed approach addresses the limitation of traditional DROC approaches, which require known ambiguity sets for the noise distribution: our approach can learn these distributions and bounds in real-world scenarios where they may not be known a priori. To evaluate the effectiveness of our approach, a navigation problem involving a car-like robot under different noise distributions is used as a numerical example. The results demonstrate that DROC combined with the proposed data-driven approaches, which we call D3ROC, provides robust and efficient control policies that outperform the traditional iterative linear quadratic Gaussian (iLQG) control approach. Moreover, the results show the effectiveness of our proposed approach in handling different noise distributions. Overall, the proposed approach offers a promising solution to real-world DROC problems where the noise distribution and KL divergence bounds may not be known a priori, increasing the practicality and applicability of the DROC framework.
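To make the idea of a data-driven KL divergence bound in the abstract above more concrete, here is a minimal sketch under strong simplifying assumptions: the noise is scalar, both the nominal and the estimated distributions are Gaussian, and the bound is taken to be the KL divergence between them. The helper names `fit_gaussian` and `kl_gaussian` and the sample values are hypothetical; the paper's actual estimators, including its handling of state-dependent noise, are not described in the abstract and may differ.

```python
import math
import statistics


def fit_gaussian(samples):
    """Maximum-likelihood mean and standard deviation of scalar samples."""
    mean = statistics.fmean(samples)
    std = statistics.pstdev(samples)  # population std, i.e. the MLE
    return mean, std


def kl_gaussian(mean_p, std_p, mean_q, std_q):
    """Closed-form KL(P || Q) for univariate Gaussians P and Q."""
    return (math.log(std_q / std_p)
            + (std_p ** 2 + (mean_p - mean_q) ** 2) / (2 * std_q ** 2)
            - 0.5)


# Illustrative noise samples (made up for this sketch, not from the paper).
samples = [0.9, 1.1, 1.3, 0.7, 1.0]
mean_hat, std_hat = fit_gaussian(samples)

# Assumed nominal model: zero-mean, unit-variance Gaussian noise.
kl_bound = kl_gaussian(mean_hat, std_hat, 0.0, 1.0)
print(f"estimated noise: mean={mean_hat:.2f}, std={std_hat:.2f}")
print(f"KL(estimated || nominal) = {kl_bound:.3f}")
```

In a DROC setting, a value like `kl_bound` would serve as the radius of the ambiguity set around the nominal noise model, so a larger mismatch between observed and assumed noise leads to a more conservative controller.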
