Noah D. Goodman

Endless Terminals: Scaling RL Environments for Terminal Agents

Jan 27, 2026

Abstract:Environments are the bottleneck for self-improving agents. Current terminal benchmarks were built for evaluation, not training; reinforcement learning requires a scalable pipeline, not just a dataset. We introduce Endless Terminals, a fully autonomous pipeline that procedurally generates terminal-use tasks without human annotation. The pipeline has four stages: generating diverse task descriptions, building and validating containerized environments, producing completion tests, and filtering for solvability. From this pipeline we obtain 3255 tasks spanning file operations, log management, data processing, scripting, and database operations. We train agents using vanilla PPO with binary episode-level rewards and a minimal interaction loop: no retrieval, multi-agent coordination, or specialized tools. Despite this simplicity, models trained on Endless Terminals show substantial gains: on our held-out dev set, Llama-3.2-3B improves from 4.0% to 18.2%, Qwen2.5-7B from 10.7% to 53.3%, and Qwen3-8B-openthinker-sft from 42.6% to 59.0%. These improvements transfer to held-out, human-curated benchmarks: on TerminalBench 2.0, Llama-3.2-3B improves from 0.0% to 2.2%, Qwen2.5-7B from 2.2% to 3.4%, and Qwen3-8B-openthinker-sft from 1.1% to 6.7%, in each case outperforming alternative approaches including models with more complex agentic scaffolds. These results demonstrate that simple RL succeeds when environments scale.

Learning to Simulate Human Dialogue

Jan 07, 2026

Abstract:To predict what someone will say is to model how they think. We study this through next-turn dialogue prediction: given a conversation, predict the next utterance produced by a person. We compare learning approaches along two dimensions: (1) whether the model is allowed to think before responding, and (2) how learning is rewarded: either through an LLM-as-a-judge that scores semantic similarity and information completeness relative to the ground-truth response, or by directly maximizing the log-probability of the true human dialogue. We find that optimizing for judge-based rewards does increase judge scores throughout training; however, it decreases the likelihood assigned to ground-truth human responses and decreases the win rate when human judges choose the most human-like response between a real and a synthetic option. This failure is amplified when the model is allowed to think before answering. In contrast, by directly maximizing the log-probability of observed human responses, the model learns to better predict what people actually say, improving on both log-probability and win rate evaluations. Treating chain-of-thought as a latent variable, we derive a lower bound on the log-probability. Optimizing this objective yields the best results on all our evaluations. These results suggest that thinking helps primarily when trained with a distribution-matching objective grounded in real human dialogue, and that scaling this approach to broader conversational data may produce models with a more nuanced understanding of human behavior.
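
The abstract does not state the exact form of its lower bound. As an illustration only, one standard way to bound a log-probability when a chain-of-thought is treated as a latent variable is Jensen's inequality. The symbols here are assumptions introduced for this sketch: $x$ is the conversation so far, $y$ the observed human response, $z$ a sampled chain-of-thought, and $p_\theta$ the model.

$$
\log p_\theta(y \mid x)
= \log \sum_{z} p_\theta(z \mid x)\, p_\theta(y \mid x, z)
\;\ge\; \mathbb{E}_{z \sim p_\theta(\cdot \mid x)}\big[\log p_\theta(y \mid x, z)\big]
$$

The right-hand side can be estimated by sampling thoughts from the model and scoring the true response under each, which is why a bound of this kind is trainable where the exact marginal is not. The paper's objective may differ from this form.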

Scaling up the think-aloud method

May 29, 2025

Abstract:The think-aloud method, where participants voice their thoughts as they solve a task, is a valuable source of rich data about human reasoning processes. Yet, it has declined in popularity in contemporary cognitive science, largely because labor-intensive transcription and annotation preclude large sample sizes. Here, we develop methods to automate the transcription and annotation of verbal reports of reasoning using natural language processing tools, allowing for large-scale analysis of think-aloud data. In our study, 640 participants thought aloud while playing the Game of 24, a mathematical reasoning task. We automatically transcribed the recordings and coded the transcripts as search graphs, finding moderate inter-rater reliability with humans. We analyze these graphs and characterize consistency and variation in human reasoning traces. Our work demonstrates the value of think-aloud data at scale and serves as a proof of concept for the automated analysis of verbal reports.
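
For readers unfamiliar with the task: in the Game of 24, four numbers must be combined with +, -, * and / to reach 24. The sketch below is a plain exhaustive search over pairwise combinations, included only to show the kind of search space a player's reasoning moves through. It is not the authors' transcript-coding method, and the function names `solve24` and `_search` are hypothetical.

```python
from fractions import Fraction
from itertools import combinations


def solve24(numbers, target=24):
    """Return one expression string that evaluates to target, or None."""
    # Exact rational arithmetic avoids floating-point error in divisions.
    items = [(Fraction(n), str(n)) for n in numbers]
    return _search(items, Fraction(target))


def _search(items, target):
    # Base case: a single value remains; check whether it hits the target.
    if len(items) == 1:
        value, expr = items[0]
        return expr if value == target else None
    # Pick any two values, combine them, and recurse on the smaller set.
    for i, j in combinations(range(len(items)), 2):
        (a, ea), (b, eb) = items[i], items[j]
        rest = [items[k] for k in range(len(items)) if k not in (i, j)]
        candidates = [
            (a + b, f"({ea} + {eb})"),
            (a * b, f"({ea} * {eb})"),
            (a - b, f"({ea} - {eb})"),
            (b - a, f"({eb} - {ea})"),
        ]
        if b != 0:
            candidates.append((a / b, f"({ea} / {eb})"))
        if a != 0:
            candidates.append((b / a, f"({eb} / {ea})"))
        for value, expr in candidates:
            found = _search(rest + [(value, expr)], target)
            if found is not None:
                return found
    return None
```

Each recursive call corresponds to one node in a search graph: a set of remaining numbers, with edges for each arithmetic step. Calling `solve24([1, 2, 3, 4])` returns some valid expression, while `solve24([1, 1, 1, 1])` returns `None` because no combination reaches 24.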

Cognitive Behaviors that Enable Self-Improving Reasoners, or, Four Habits of Highly Effective STaRs

Mar 03, 2025

Abstract:Test-time inference has emerged as a powerful paradigm for enabling language models to "think" longer and more carefully about complex challenges, much like skilled human experts. While reinforcement learning (RL) can drive self-improvement in language models on verifiable tasks, some models exhibit substantial gains while others quickly plateau. For instance, we find that Qwen-2.5-3B far exceeds Llama-3.2-3B under identical RL training for the game of Countdown. This discrepancy raises a critical question: what intrinsic properties enable effective self-improvement? We introduce a framework to investigate this question by analyzing four key cognitive behaviors -- verification, backtracking, subgoal setting, and backward chaining -- that both expert human problem solvers and successful language models employ. Our study reveals that Qwen naturally exhibits these reasoning behaviors, whereas Llama initially lacks them. In systematic experimentation with controlled behavioral datasets, we find that priming Llama with examples containing these reasoning behaviors enables substantial improvements during RL, matching or exceeding Qwen's performance. Importantly, the presence of reasoning behaviors, rather than correctness of answers, proves to be the critical factor -- models primed with incorrect solutions containing proper reasoning patterns achieve comparable performance to those trained on correct solutions. Finally, leveraging continued pretraining with OpenWebMath data, filtered to amplify reasoning behaviors, enables the Llama model to match Qwen's self-improvement trajectory. Our findings establish a fundamental relationship between initial reasoning behaviors and the capacity for improvement, explaining why some language models effectively utilize additional computation while others plateau.

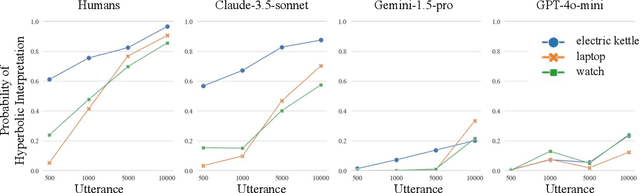

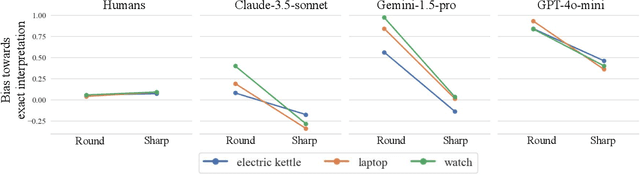

Non-literal Understanding of Number Words by Language Models

Feb 10, 2025

Abstract:Humans naturally interpret numbers non-literally, effortlessly combining context, world knowledge, and speaker intent. We investigate whether large language models (LLMs) interpret numbers similarly, focusing on hyperbole and pragmatic halo effects. Through systematic comparison with human data and computational models of pragmatic reasoning, we find that LLMs diverge from human interpretation in striking ways. By decomposing pragmatic reasoning into testable components, grounded in the Rational Speech Act framework, we pinpoint where LLM processing diverges from human cognition -- not in prior knowledge, but in reasoning with it. This insight leads us to develop a targeted solution -- chain-of-thought prompting inspired by an RSA model makes LLMs' interpretations more human-like. Our work demonstrates how computational cognitive models can both diagnose AI-human differences and guide development of more human-like language understanding capabilities.

Emergent Symbol-like Number Variables in Artificial Neural Networks

Jan 10, 2025

Abstract:What types of numeric representations emerge in Neural Networks (NNs)? To what degree do NNs induce abstract, mutable, slot-like numeric variables, and in what situations do these representations emerge? How do these representations change over learning, and how can we understand the neural implementations in ways that are unified across different NNs? In this work, we approach these questions by first training sequence based neural systems using Next Token Prediction (NTP) objectives on numeric tasks. We then seek to understand the neural solutions through the lens of causal abstractions or symbolic algorithms. We use a combination of causal interventions and visualization methods to find that artificial neural models do indeed develop analogs of interchangeable, mutable, latent number variables purely from the NTP objective. We then ask how variations on the tasks and model architectures affect the models' learned solutions to find that these symbol-like numeric representations do not form for every variant of the task, and transformers solve the problem in a notably different way than their recurrent counterparts. We then show how the symbol-like variables change over the course of training to find a strong correlation between the models' task performance and the alignment of their symbol-like representations. Lastly, we show that in all cases, some degree of gradience exists in these neural symbols, highlighting the difficulty of finding simple, interpretable symbolic stories of how neural networks perform numeric tasks. Taken together, our results are consistent with the view that neural networks can approximate interpretable symbolic programs of number cognition, but the particular program they approximate and the extent to which they approximate it can vary widely, depending on the network architecture, training data, extent of training, and network size.

BoxingGym: Benchmarking Progress in Automated Experimental Design and Model Discovery

Jan 02, 2025

Abstract:Understanding the world and explaining it with scientific theories is a central aspiration of artificial intelligence research. Proposing theories, designing experiments to test them, and then revising them based on data are fundamental to scientific discovery. Despite the significant promise of LLM-based scientific agents, no benchmarks systematically test LLMs' ability to propose scientific models, collect experimental data, and revise those models in light of new data. We introduce BoxingGym, a benchmark with 10 environments for systematically evaluating both experimental design (e.g. collecting data to test a scientific theory) and model discovery (e.g. proposing and revising scientific theories). To enable tractable and quantitative evaluation, we implement each environment as a generative probabilistic model with which a scientific agent can run interactive experiments. These probabilistic models are drawn from various real-world scientific domains ranging from psychology to ecology. To quantitatively evaluate a scientific agent's ability to collect informative experimental data, we compute the expected information gain (EIG), an information-theoretic quantity which measures how much an experiment reduces uncertainty about the parameters of a generative model. A good scientific theory is a concise and predictive explanation. Therefore, to quantitatively evaluate model discovery, we ask a scientific agent to explain its model and then assess whether this explanation enables another scientific agent to make reliable predictions about this environment. In addition to this explanation-based evaluation, we compute standard model evaluation metrics such as prediction errors. We find that current LLMs, such as GPT-4o, struggle with both experimental design and model discovery. We find that augmenting the LLM-based agent with an explicit statistical model does not reliably improve these results.
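
For reference, expected information gain is usually defined as the expected reduction in entropy over model parameters after observing an experiment's outcome. The notation below is an assumption of this note, not taken from the abstract: $d$ is the experimental design, $y$ the outcome, and $\theta$ the parameters of the generative model.

$$
\mathrm{EIG}(d) = \mathbb{E}_{p(y \mid d)}\Big[\, H\big[p(\theta)\big] - H\big[p(\theta \mid y, d)\big] \,\Big]
$$

A design scores highly when, averaged over the outcomes it is likely to produce, the posterior over $\theta$ is much narrower than the prior. How the benchmark estimates this quantity in practice is not specified in the abstract.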

CriticAL: Critic Automation with Language Models

Nov 10, 2024

Abstract:Understanding the world through models is a fundamental goal of scientific research. While large language model (LLM) based approaches show promise in automating scientific discovery, they often overlook the importance of criticizing scientific models. Criticizing models deepens scientific understanding and drives the development of more accurate models. Automating model criticism is difficult because it traditionally requires a human expert to define how to compare a model with data and evaluate if the discrepancies are significant; both steps rely heavily on understanding the modeling assumptions and domain. Although LLM-based critic approaches are appealing, they introduce new challenges: LLMs might hallucinate the critiques themselves. Motivated by this, we introduce CriticAL (Critic Automation with Language Models). CriticAL uses LLMs to generate summary statistics that capture discrepancies between model predictions and data, and applies hypothesis tests to evaluate their significance. We can view CriticAL as a verifier that validates models and their critiques by embedding them in a hypothesis testing framework. In experiments, we evaluate CriticAL across key quantitative and qualitative dimensions. In settings where we synthesize discrepancies between models and datasets, CriticAL reliably generates correct critiques without hallucinating incorrect ones. We show that both human and LLM judges consistently prefer CriticAL's critiques over alternative approaches in terms of transparency and actionability. Finally, we show that CriticAL's critiques enable an LLM scientist to improve upon human-designed models on real-world datasets.
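
The idea of pairing a summary statistic with a hypothesis test can be illustrated with a simple simulation-based check. The sketch below is an assumption-laden toy, not CriticAL itself: the model (a standard normal), the statistic (population variance), the synthetic data, and the names `empirical_p_value` and `model_sample` are all hypothetical, and in CriticAL the statistic would be proposed by an LLM rather than fixed by hand.

```python
import random
import statistics


def empirical_p_value(observed, simulate, statistic, n_sim=2000, seed=0):
    """One-sided empirical p-value for statistic(observed) under the model."""
    rng = random.Random(seed)
    t_obs = statistic(observed)
    # Simulate replicate datasets from the model and score each one.
    sims = [statistic(simulate(rng, len(observed))) for _ in range(n_sim)]
    exceed = sum(t >= t_obs for t in sims)
    # Add-one smoothing keeps the estimate strictly above zero.
    return (exceed + 1) / (n_sim + 1)


def model_sample(rng, n):
    # Hypothetical model under criticism: i.i.d. standard normal data.
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


# Synthetic "observed" data with a deliberate discrepancy: twice the spread.
data_rng = random.Random(1)
observed = [data_rng.gauss(0.0, 2.0) for _ in range(100)]

p = empirical_p_value(observed, model_sample, statistics.pvariance)
```

A small `p` here means the model rarely produces data as dispersed as what was observed, so the critique "the model underestimates variance" is backed by a test rather than asserted. A statistic that does not expose a real discrepancy would yield an unremarkable p-value, which is how a test of this kind can screen out unsupported critiques.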

Bayesian scaling laws for in-context learning

Oct 21, 2024

Abstract:In-context learning (ICL) is a powerful technique for getting language models to perform complex tasks with no training updates. Prior work has established strong correlations between the number of in-context examples provided and the accuracy of the model's predictions. In this paper, we seek to explain this correlation by showing that ICL approximates a Bayesian learner. This perspective gives rise to a family of novel Bayesian scaling laws for ICL. In experiments with GPT-2 models of different sizes, our scaling laws exceed or match existing scaling laws in accuracy while also offering interpretable terms for task priors, learning efficiency, and per-example probabilities. To illustrate the analytic power that such interpretable scaling laws provide, we report on controlled synthetic dataset experiments designed to inform real-world studies of safety alignment. In our experimental protocol, we use SFT to suppress an unwanted existing model capability and then use ICL to try to bring that capability back (many-shot jailbreaking). We then experiment on real-world instruction-tuned LLMs using capabilities benchmarks as well as a new many-shot jailbreaking dataset. In all cases, Bayesian scaling laws accurately predict the conditions under which ICL will cause the suppressed behavior to reemerge, which sheds light on the ineffectiveness of post-training at increasing LLM safety.
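
The abstract does not give the functional form of its scaling laws. The toy below only illustrates the underlying intuition of a Bayesian learner over a finite set of tasks: each in-context example reweights the task posterior, so a behavior with a small prior can come to dominate after enough examples. Everything here is a simplifying assumption made for illustration, including the function name `next_example_probability`, the two-task setup, and the use of a single fixed per-example probability for each task.

```python
def next_example_probability(priors, likelihoods, n):
    """Predictive probability of the next example after n in-context examples.

    priors[m]      -- prior weight of task m (assumed; sums to 1)
    likelihoods[m] -- probability task m assigns to each example (assumed fixed)
    """
    # Posterior over tasks is proportional to prior * likelihood ** n.
    weights = [p * (l ** n) for p, l in zip(priors, likelihoods)]
    total = sum(weights)
    # Posterior-weighted average of the per-example probabilities.
    return sum(w * l for w, l in zip(weights, likelihoods)) / total


# A suppressed behavior: tiny prior (0.01) but high per-example probability.
priors = [0.99, 0.01]
likelihoods = [0.1, 0.9]
curve = [next_example_probability(priors, likelihoods, n) for n in range(0, 11)]
```

With zero examples the prediction is governed by the prior; as `n` grows, the curve rises toward the per-example probability of the task that best explains the examples. This mirrors, in miniature, the many-shot setting described in the abstract, where ICL is used to try to bring a suppressed capability back.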

Human-like Affective Cognition in Foundation Models

Sep 19, 2024

Abstract:Understanding emotions is fundamental to human interaction and experience. Humans easily infer emotions from situations or facial expressions, infer situations from emotions, and perform a variety of other kinds of affective cognition. How adept is modern AI at these inferences? We introduce an evaluation framework for testing affective cognition in foundation models. Starting from psychological theory, we generate 1,280 diverse scenarios exploring relationships between appraisals, emotions, expressions, and outcomes. We evaluate the abilities of foundation models (GPT-4, Claude-3, Gemini-1.5-Pro) and humans (N = 567) across carefully selected conditions. Our results show foundation models tend to agree with human intuitions, matching or exceeding interparticipant agreement. In some conditions, models are "superhuman" -- they better predict modal human judgements than the average human. All models benefit from chain-of-thought reasoning. This suggests foundation models have acquired a human-like understanding of emotions and their influence on beliefs and behavior.
