Nancy F. Chen

Prompter: Zero-shot Adaptive Prefixes for Dialogue State Tracking Domain Adaptation

Jun 07, 2023

Abstract: A challenge in the Dialogue State Tracking (DST) field is adapting models to new domains without using any supervised data, a setting known as zero-shot domain adaptation. Parameter-Efficient Transfer Learning (PETL) has the potential to address this problem due to its robustness. However, it has yet to be applied to zero-shot scenarios, as it is not clear how to apply it without supervision. Our method, Prompter, uses descriptions of target domain slots to generate dynamic prefixes that are concatenated to the keys and values at each layer's self-attention mechanism. This allows for the use of prefix-tuning in the zero-shot setting. Prompter outperforms previous methods on both the MultiWOZ and SGD benchmarks. In generating prefixes, our analyses find that Prompter utilizes not only the semantics of slot descriptions but also how often the slots appear together in conversation. Moreover, Prompter's gains are due to its improved ability to distinguish "none"-valued dialogue slots, compared against baselines.
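The idea of concatenating prefixes to the keys and values of self-attention can be illustrated with a minimal pure-Python sketch. This is not the Prompter implementation: the function names (`attend`, `attend_with_prefix`) are hypothetical, the prefix vectors are passed in directly rather than generated from slot descriptions, and only a single query and a single attention head are shown.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]


def attend_with_prefix(query, keys, values, prefix_keys, prefix_values):
    """Prepend prefix vectors to the keys and values, then attend as usual.

    Assumption: in a prefix-tuning setup the prefix vectors would come from a
    trained generator; here they are plain inputs.
    """
    return attend(query, prefix_keys + keys, prefix_values + values)


# A prefix key that matches the query closely pulls the output
# towards the corresponding prefix value.
output = attend_with_prefix(
    query=[10.0, 0.0],
    keys=[[0.0, 1.0]],
    values=[[0.0, 0.0]],
    prefix_keys=[[10.0, 0.0]],
    prefix_values=[[1.0, 1.0]],
)
```

Because the prefixes only extend the key and value sequences, the model's own parameters stay untouched, which is what makes this family of methods parameter-efficient.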

Multiple output samples for each input in a single-output Gaussian process

Jun 05, 2023

Abstract: The standard Gaussian Process (GP) only considers a single output sample per input in the training set. Datasets for subjective tasks, such as spoken language assessment, may be annotated with output labels from multiple human raters per input. This paper proposes to generalise the GP to allow for these multiple output samples in the training set, and thus make use of available output uncertainty information. This differs from a multi-output GP, as all output samples are from the same task here. The output density function is formulated to be the joint likelihood of observing all output samples, and latent variables are not repeated to reduce computation cost. The test set predictions are inferred similarly to a standard GP, with a difference being in the optimised hyper-parameters. This is evaluated on speechocean762, showing that it allows the GP to compute a test set output distribution that is more similar to the collection of reference outputs from the multiple human raters.

Guiding Computational Stance Detection with Expanded Stance Triangle Framework

May 31, 2023

Abstract: Stance detection determines whether the author of a piece of text is in favor of, against, or neutral towards a specified target, and can be used to gain valuable insights into social media. The ubiquitous indirect referral of targets makes this task challenging, as it requires computational solutions to model semantic features and infer the corresponding implications from a literal statement. Moreover, the limited amount of available training data leads to subpar performance in out-of-domain and cross-target scenarios, as data-driven approaches are prone to rely on superficial and domain-specific features. In this work, we decompose the stance detection task from a linguistic perspective, and investigate key components and inference paths in this task. The stance triangle is a generic linguistic framework previously proposed to describe the fundamental ways people express their stance. We further expand it by characterizing the relationship between explicit and implicit objects. We then use the framework to extend one single training corpus with additional annotation. Experimental results show that strategically-enriched data can significantly improve the performance on out-of-domain and cross-target evaluation.

LogicLLM: Exploring Self-supervised Logic-enhanced Training for Large Language Models

May 24, 2023

Abstract: Existing efforts to improve the logical reasoning ability of language models have predominantly relied on supervised fine-tuning, hindering generalization to new domains and/or tasks. The development of Large Language Models (LLMs) has demonstrated the capacity of compressing abundant knowledge into a single proxy, enabling them to tackle multiple tasks effectively. Our preliminary experiments, nevertheless, show that LLMs fall short on logical reasoning: their performance on logical reasoning benchmarks is far behind the existing state-of-the-art baselines. In this paper, we make the first attempt to investigate the feasibility of incorporating logical knowledge through self-supervised post-training, and activating it via in-context learning, which we term LogicLLM. Specifically, we devise an auto-regressive objective variant of MERIt and integrate it with two LLM series, i.e., FLAN-T5 and LLaMA, with parameter sizes ranging from 3 billion to 13 billion. The results on two challenging logical reasoning benchmarks demonstrate the effectiveness of LogicLLM. Besides, we conduct extensive ablation studies to analyze the key factors in designing logic-oriented proxy tasks.

Decomposed Prompting for Machine Translation Between Related Languages using Large Language Models

May 22, 2023

Abstract: This study investigates machine translation between related languages, i.e., languages within the same family that share similar linguistic traits such as word order and lexical similarity. Machine translation through few-shot prompting leverages a small set of translation pair examples to generate translations for test sentences. This requires the model to learn how to generate translations while simultaneously ensuring that token ordering is maintained to produce a fluent and accurate translation. We propose that for related languages, the task of machine translation can be simplified by leveraging the monotonic alignment characteristic of such languages. We introduce a novel approach of few-shot prompting that decomposes the translation process into a sequence of word chunk translations. Through evaluations conducted on multiple related language pairs across various language families, we demonstrate that our novel approach of decomposed prompting surpasses multiple established few-shot baseline models, thereby verifying its effectiveness. For example, our model outperforms the strong few-shot prompting BLOOM model with an average improvement of 4.2 chrF++ scores across the examined languages.

Adapter-TST: A Parameter Efficient Method for Multiple-Attribute Text Style Transfer

May 10, 2023

Abstract: Adapting a large language model for multiple-attribute text style transfer via fine-tuning can be challenging due to the significant amount of computational resources and labeled data required for the specific task. In this paper, we address this challenge by introducing Adapter-TST, a framework that freezes the pre-trained model's original parameters and enables the development of a multiple-attribute text style transfer model. Using BART as the backbone model, Adapter-TST utilizes different neural adapters to capture different attribute information, like a plug-in connected to BART. Our method allows control over multiple attributes, like sentiment, tense, voice, etc., and configures the adapters' architecture to generate multiple outputs with respect to attributes or compositional editing on the same sentence. We evaluate the proposed model on both traditional sentiment transfer and multiple-attribute transfer tasks. The experiment results demonstrate that Adapter-TST outperforms all the state-of-the-art baselines with significantly fewer computational resources. We have also empirically shown that each adapter is able to capture specific stylistic attributes effectively and can be configured to perform compositional editing.

Modeling What-to-ask and How-to-ask for Answer-unaware Conversational Question Generation

May 04, 2023

Abstract: Conversational Question Generation (CQG) is a critical task for machines to assist humans in fulfilling their information needs through conversations. The task is generally cast into two different settings: answer-aware and answer-unaware. While the former facilitates the models by exposing the expected answer, the latter is more realistic and has received growing attention recently. What-to-ask and how-to-ask are the two main challenges in the answer-unaware setting. To address the first challenge, existing methods mainly select sequential sentences in context as the rationales. We argue that the conversation generated using such naive heuristics may not be natural enough, as in reality the interlocutors often talk about relevant contents that are not necessarily sequential in context. Additionally, previous methods decide the type of question to be generated (boolean/span-based) implicitly. Modeling the question type explicitly is crucial, as the answer, which hints the models to generate a boolean or span-based question, is unavailable. To this end, we present SG-CQG, a two-stage CQG framework. For the what-to-ask stage, a sentence is selected as the rationale from a semantic graph that we construct, and the answer span is extracted from it. For the how-to-ask stage, a classifier determines the target answer type of the question via two explicit control signals before generating and filtering. In addition, we propose Conv-Distinct, a novel evaluation metric for CQG, to evaluate the diversity of the generated conversation from a context. Compared with the existing answer-unaware CQG models, the proposed SG-CQG achieves state-of-the-art performance.

SNIPER Training: Variable Sparsity Rate Training For Text-To-Speech

Nov 14, 2022

Abstract: Text-to-speech (TTS) models have achieved remarkable naturalness in recent years, yet like most deep neural models, they have more parameters than necessary. Sparse TTS models can improve on dense models via pruning and extra retraining, or converge faster than dense models with some performance loss. Inspired by these results, we propose training TTS models using a decaying sparsity rate, i.e. a high initial sparsity to accelerate training first, followed by a progressive rate reduction to obtain better eventual performance. This decremental approach differs from current methods of incrementing sparsity to a desired target, which costs significantly more time than dense training. We call our method SNIPER training: Single-shot Initialization Pruning Evolving-Rate training. Our experiments on FastSpeech2 show that although we were only able to obtain better losses in the first few epochs before being overtaken by the baseline, the final SNIPER-trained models beat constant-sparsity models and pip dense models in performance.
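A decaying sparsity rate can be pictured as a schedule that starts high and shrinks over training. The sketch below is only an illustration: the abstract does not specify the schedule's shape, so the linear decay, the function name `sparsity_at_epoch`, and the default values of 0.8 and 0.0 are all assumptions.

```python
def sparsity_at_epoch(epoch, total_epochs, initial_sparsity=0.8, final_sparsity=0.0):
    """Return the sparsity rate for a given epoch (0-indexed).

    Assumption: linear decay from initial_sparsity at the first epoch to
    final_sparsity at the last epoch; the actual schedule may differ.
    """
    if total_epochs <= 1:
        return final_sparsity
    # Fraction of training completed, clamped to [0, 1].
    progress = min(max(epoch / (total_epochs - 1), 0.0), 1.0)
    return initial_sparsity + progress * (final_sparsity - initial_sparsity)


# Sparsity rate for each epoch of a hypothetical 5-epoch run.
schedule = [sparsity_at_epoch(epoch, total_epochs=5) for epoch in range(5)]
```

In a training loop, the rate for the current epoch would determine what fraction of weights is masked out, so early epochs train a much sparser network than later ones.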

Are Current Task-oriented Dialogue Systems Able to Satisfy Impolite Users?

Oct 24, 2022

Abstract: Task-oriented dialogue (TOD) systems have assisted users on many tasks, including ticket booking and service inquiries. While existing TOD systems have shown promising performance in serving customer needs, these systems mostly assume that users would interact with the dialogue agent politely. This assumption is unrealistic as impatient or frustrated customers may also interact with TOD systems impolitely. This paper aims to address this research gap by investigating impolite users' effects on TOD systems. Specifically, we constructed an impolite dialogue corpus and conducted extensive experiments to evaluate the state-of-the-art TOD systems on our impolite dialogue corpus. Our experimental results show that existing TOD systems are unable to handle impolite user utterances. We also present a data augmentation method to improve TOD performance in impolite dialogues. Nevertheless, handling impolite dialogues remains a very challenging research task. We hope by releasing the impolite dialogue corpus and establishing the benchmark evaluations, more researchers are encouraged to investigate this new challenging research task.

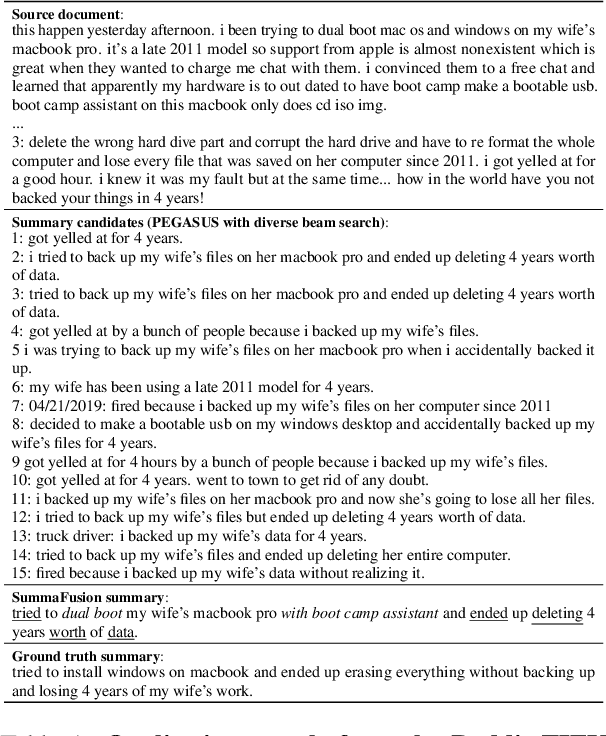

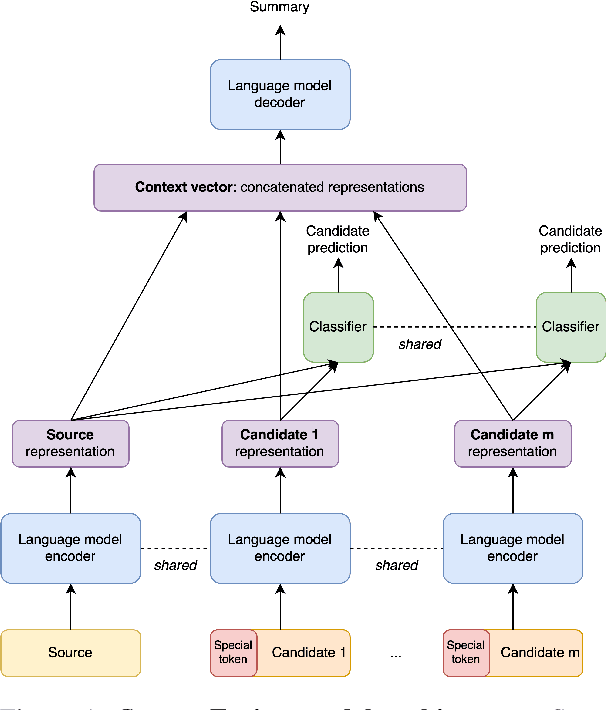

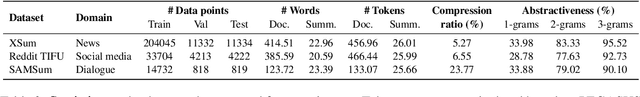

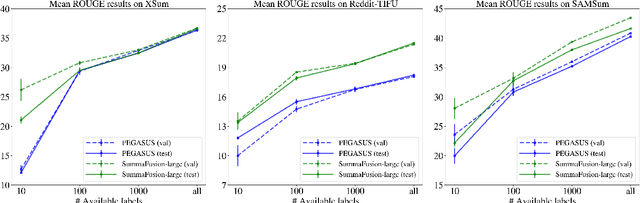

Towards Summary Candidates Fusion

Oct 17, 2022

Abstract: Sequence-to-sequence deep neural models fine-tuned for abstractive summarization can achieve great performance on datasets with enough human annotations. Yet, it has been shown that they have not reached their full potential, with a wide gap between the top beam search output and the oracle beam. Recently, re-ranking methods have been proposed, to learn to select a better summary candidate. However, such methods are limited by the summary quality aspects captured by the first-stage candidates. To bypass this limitation, we propose a new paradigm in second-stage abstractive summarization called SummaFusion that fuses several summary candidates to produce a novel abstractive second-stage summary. Our method works well on several summarization datasets, improving both the ROUGE scores and qualitative properties of fused summaries. It is especially good when the candidates to fuse are worse, such as in the few-shot setup where we set a new state-of-the-art. We will make our code and checkpoints available at https://github.com/ntunlp/SummaFusion/.
