Jiri Matas

Progressive NAPSAC: sampling from gradually growing neighborhoods

Jun 05, 2019

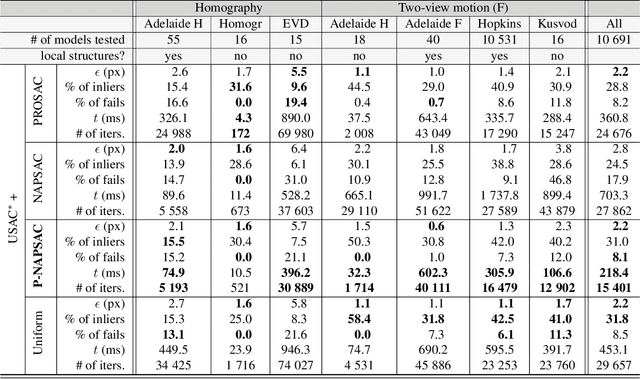

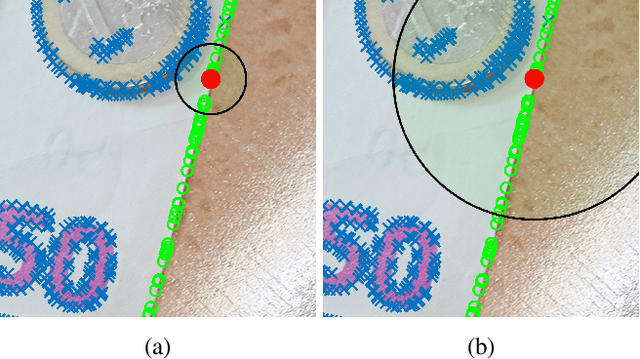

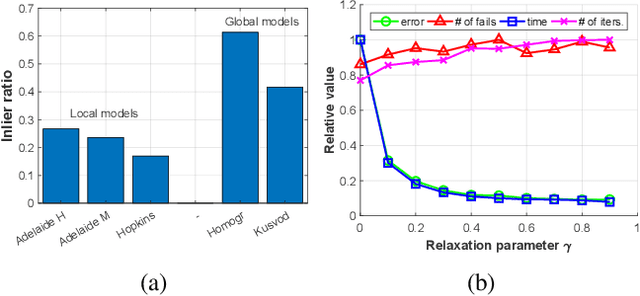

Abstract: We propose Progressive NAPSAC (P-NAPSAC for short), which merges the advantages of local and global sampling by drawing samples from gradually growing neighborhoods. Exploiting the fact that nearby points are more likely to originate from the same geometric model, P-NAPSAC finds local structures earlier than global samplers. We show that the progressive spatial sampling in P-NAPSAC can be integrated with PROSAC sampling, which is applied to the first, location-defining point. P-NAPSAC is embedded in USAC, a state-of-the-art robust estimation pipeline, which we further improve by implementing its local optimization as in Graph-Cut RANSAC. We call the resulting estimator USAC*. The method is tested on homography and fundamental matrix fitting on a total of 10,691 models from seven publicly available datasets. USAC* with P-NAPSAC outperforms reference methods in terms of speed on all problems.
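The idea of drawing samples from gradually growing neighborhoods can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function names, the brute-force nearest-neighbor search, the uniform choice of the first point (the abstract uses PROSAC for it), and the `grow_every` growth schedule are all assumptions made for illustration.

```python
import numpy as np

def sample_from_neighborhood(points, sample_size, k, rng):
    """Draw one minimal sample: a random first point plus points from its k nearest neighbors."""
    n = len(points)
    # Assumes n >= sample_size; the neighborhood must hold the remaining points.
    k = min(max(k, sample_size - 1), n - 1)
    center = rng.integers(n)  # assumption: uniform choice of the location-defining point
    dists = np.linalg.norm(points - points[center], axis=1)
    order = np.argsort(dists)
    neighbors = order[order != center][:k]  # k closest points, excluding the center
    chosen = rng.choice(neighbors, size=sample_size - 1, replace=False)
    return np.concatenate(([center], chosen))

def progressive_samples(points, sample_size, n_iters, grow_every=10, seed=0):
    """Yield (k, sample) pairs while the neighborhood size k grows (hypothetical schedule)."""
    rng = np.random.default_rng(seed)
    k = sample_size - 1  # start with the smallest usable neighborhood
    for it in range(n_iters):
        if it > 0 and it % grow_every == 0:
            k = min(k + 1, len(points) - 1)  # neighborhood grows toward global sampling
        yield k, sample_from_neighborhood(points, sample_size, k, rng)

if __name__ == "__main__":
    pts = np.random.default_rng(1).random((50, 2))
    for k, idx in progressive_samples(pts, sample_size=4, n_iters=30):
        pass  # a model would be fitted to pts[idx] and scored here
```

Early iterations draw tightly clustered samples; once `k` reaches the number of remaining points, the sampler behaves like a global one.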

Progressive-X: Efficient, Anytime, Multi-Model Fitting Algorithm

Jun 05, 2019

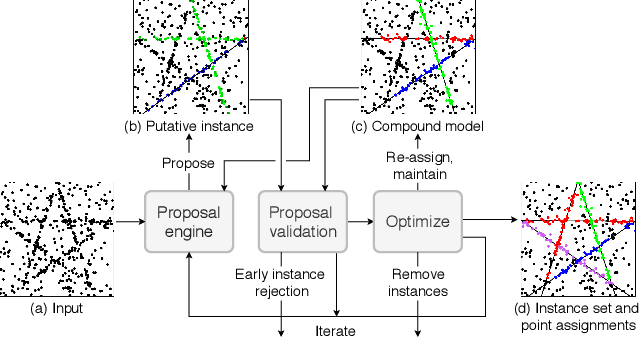

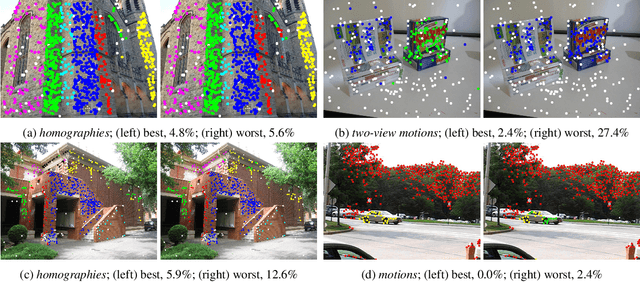

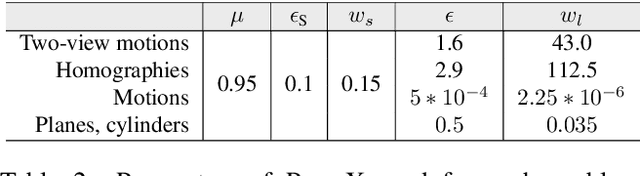

Abstract: The Progressive-X algorithm (Prog-X for short) is proposed for geometric multi-model fitting. The method interleaves sampling and consolidation of the current data interpretation via repetitive hypothesis proposal, fast rejection, and integration of the new hypothesis into the kept instance set by labeling energy minimization. Because it explores the data progressively, the method has several beneficial properties compared with the state of the art. First, a clear criterion, adopted from RANSAC, controls the termination and stops the algorithm when the probability of finding a new model with a reasonable number of inliers falls below a threshold. Second, Prog-X is an any-time algorithm: whenever it is interrupted, e.g. due to a time limit, the returned instances cover real and, likely, the most dominant ones. The method is superior to the state of the art in terms of accuracy in both synthetic experiments and on publicly available real-world datasets for homography, two-view motion, and motion segmentation.
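The termination criterion mentioned above is adopted from RANSAC. As a rough illustration, the standard RANSAC bound can be written in a few lines of Python. This is the textbook formula, not necessarily the exact criterion used in Prog-X, and the function names are hypothetical.

```python
import math

def prob_missed(inlier_ratio, sample_size, iterations):
    """Probability that no all-inlier minimal sample was drawn in the given number of iterations."""
    return (1.0 - inlier_ratio ** sample_size) ** iterations

def required_iterations(inlier_ratio, sample_size, confidence=0.99):
    """Smallest iteration count for which prob_missed drops to 1 - confidence or below."""
    p_good = inlier_ratio ** sample_size  # chance that one sample contains only inliers
    if p_good <= 0.0:
        return math.inf
    if p_good >= 1.0:
        return 1
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - p_good))

if __name__ == "__main__":
    # Homography-like case: 4-point samples, half of the points are inliers.
    print(required_iterations(0.5, 4, confidence=0.99))
```

Sampling can stop once the iteration count exceeds this bound for the smallest inlier ratio still considered a reasonable model.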

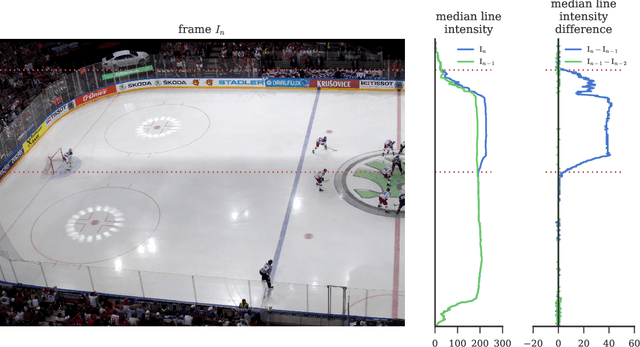

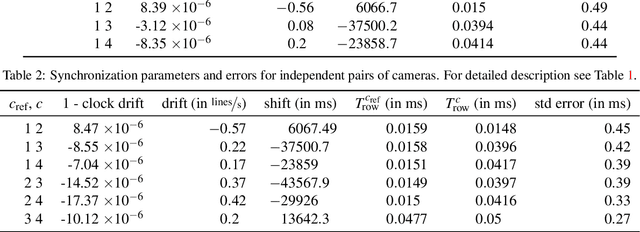

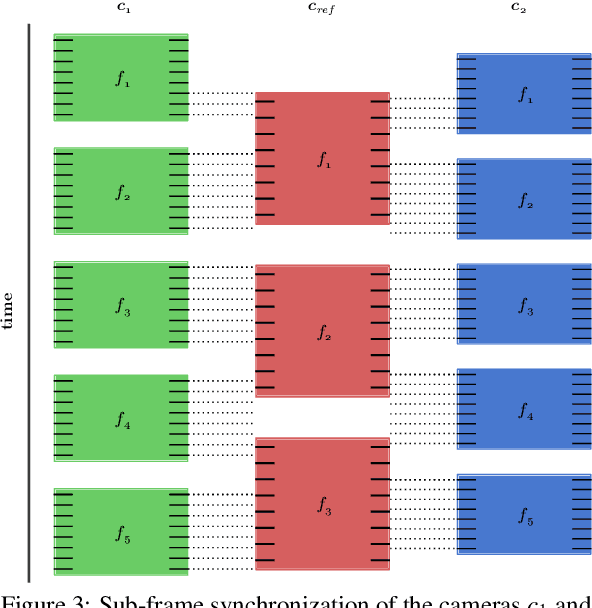

Rolling Shutter Camera Synchronization with Sub-millisecond Accuracy

Feb 28, 2019

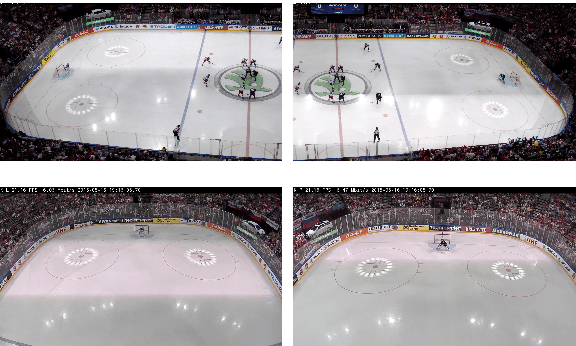

Abstract: A simple method for synchronization of video streams with a precision better than one millisecond is proposed. The method is applicable to any number of rolling shutter cameras, provided that a few photographic flashes or other abrupt lighting changes are present in the video. The approach exploits the rolling shutter sensor property that every sensor row starts its exposure with a small delay after the onset of the previous row. The cameras may have different frame rates and resolutions, and need not have overlapping fields of view. The method was validated on five minutes of four streams from an ice hockey match. The estimated transformation maps events visible in all cameras to a reference time with a standard deviation of the temporal error in the range of 0.3 to 0.5 milliseconds. The quality of the synchronization is demonstrated on temporally and spatially overlapping images of a fast-moving puck observed in two cameras.

* 8 pages, 10 figures, published at VISAPP 2017
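The rolling shutter property used in this abstract, that each row starts exposing slightly later than the previous one, can be sketched as a simple timing model. The snippet below is an illustrative assumption rather than the paper's method: it assumes a constant, known `row_delay`, a frame 0 that starts at time zero, and hypothetical function names.

```python
def row_start_time(frame_index, row, fps, row_delay):
    """Start of exposure (seconds) of a sensor row, with frame 0, row 0 at t = 0."""
    return frame_index / fps + row * row_delay

def clock_offset(event_a, event_b):
    """Offset between two camera clocks, given one event (e.g. a flash onset) timed in each."""
    return event_a - event_b

if __name__ == "__main__":
    # Assumed numbers: the same flash first appears at different frames and rows in two cameras.
    t_a = row_start_time(frame_index=10, row=540, fps=25.0, row_delay=30e-6)
    t_b = row_start_time(frame_index=12, row=100, fps=30.0, row_delay=20e-6)
    print(t_a, t_b, clock_offset(t_a, t_b))
```

Because the flash onset is localized to a row rather than a whole frame, the timestamp resolution is set by the row delay, which is far finer than the frame period.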

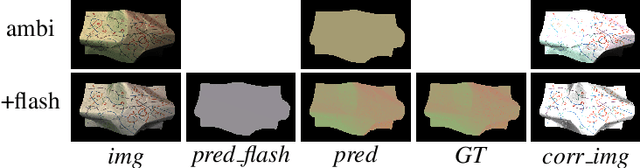

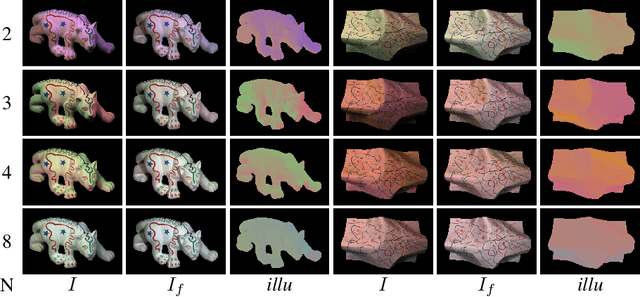

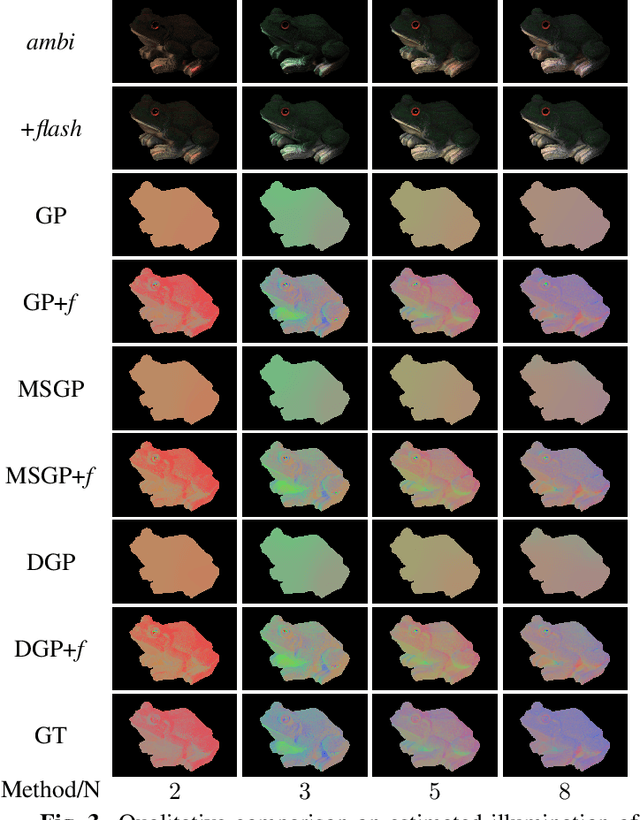

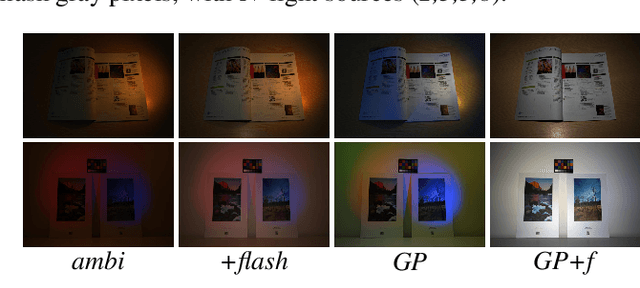

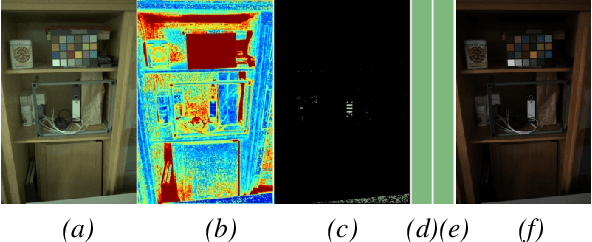

Flash Lightens Gray Pixels

Feb 27, 2019

Abstract: In the real world, a scene is usually lit by multiple illuminants, and herein we address the problem of spatial illumination estimation. Our solution is based on detecting gray pixels with the help of flash photography. We show that flash photography significantly improves the performance of gray pixel detection without an illuminant prior, training data, or calibration of the flash. We also introduce a novel flash photography dataset generated from the MIT intrinsic dataset.

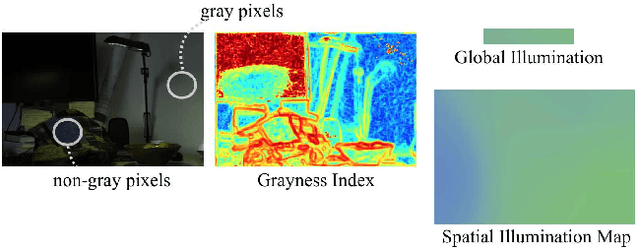

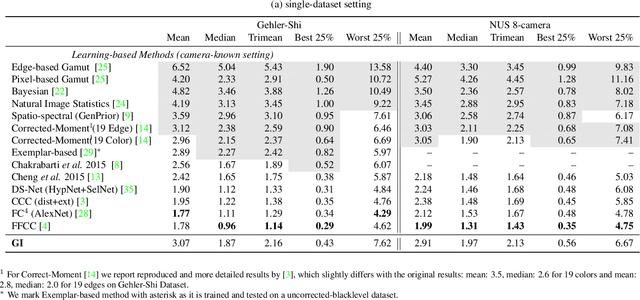

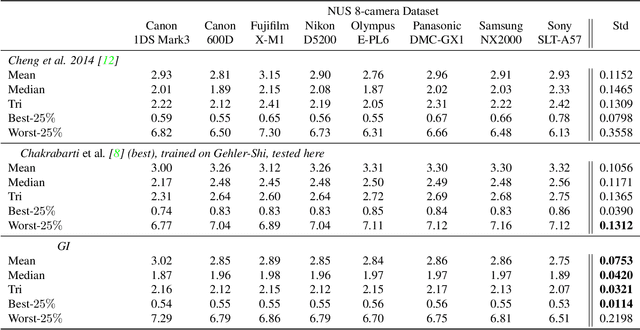

On Finding Gray Pixels

Jan 12, 2019

Abstract: We propose a novel grayness index for finding gray pixels and demonstrate its effectiveness and efficiency in illumination estimation. The grayness index (GI for short) is derived using the Dichromatic Reflection Model and is learning-free. The proposed GI allows estimating one or multiple illumination sources in color-biased images. On standard single-illumination and multiple-illumination estimation benchmarks, GI outperforms state-of-the-art statistical methods and many recent deep net methods. GI is simple and fast: it is written in a few dozen lines of code and processes a 1080p image in about 0.4 seconds with non-optimized Matlab code.

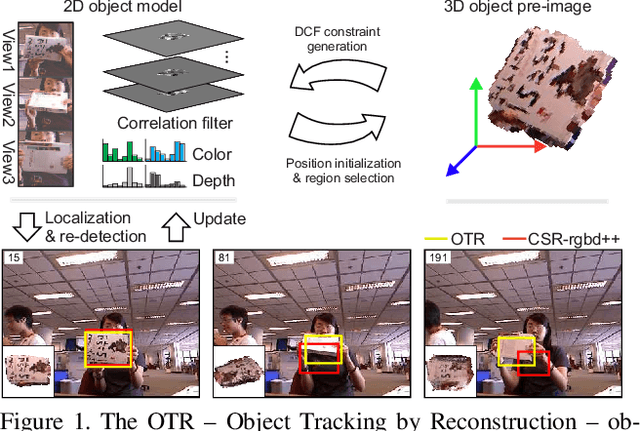

Object Tracking by Reconstruction with View-Specific Discriminative Correlation Filters

Nov 27, 2018

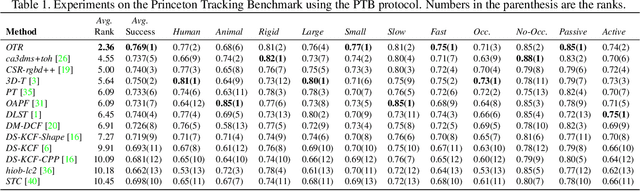

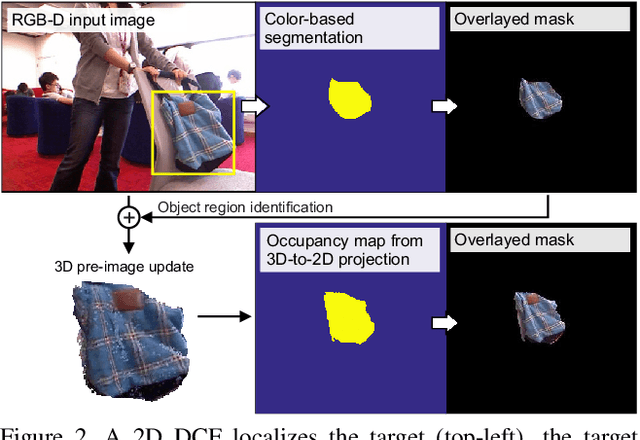

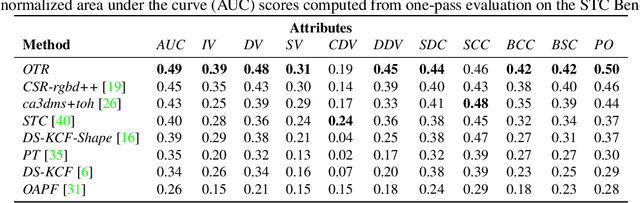

Abstract: Standard RGB-D trackers treat the target as an inherently 2D structure, which makes modelling appearance changes related even to simple out-of-plane rotation highly challenging. We address this limitation by proposing a novel long-term RGB-D tracker - Object Tracking by Reconstruction (OTR). The tracker performs online 3D target reconstruction to facilitate robust learning of a set of view-specific discriminative correlation filters (DCFs). The 3D reconstruction supports two performance-enhancing features: (i) generation of accurate spatial support for constrained DCF learning from its 2D projection and (ii) point cloud based estimation of 3D pose change for selection and storage of view-specific DCFs which are used to robustly localize the target after out-of-view rotation or heavy occlusion. Extensive evaluation of OTR on the challenging Princeton RGB-D tracking and STC Benchmarks shows it outperforms the state-of-the-art by a large margin.

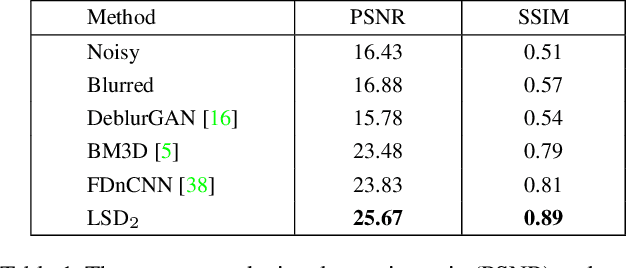

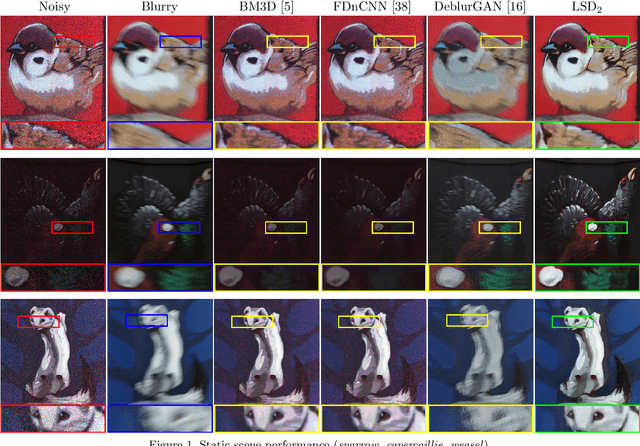

LSD$_2$ - Joint Denoising and Deblurring of Short and Long Exposure Images with Convolutional Neural Networks

Nov 23, 2018

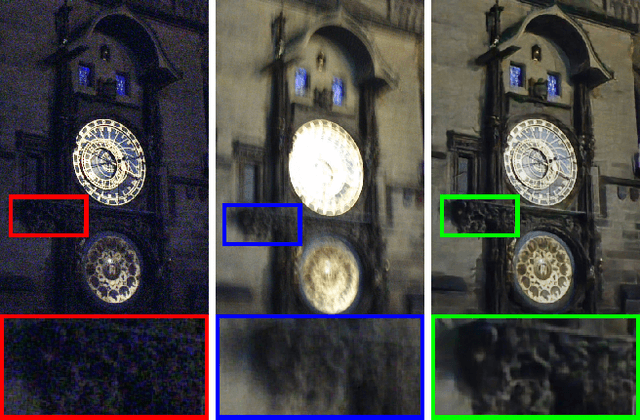

Abstract: This paper addresses the challenging problem of acquiring high-quality photographs with handheld smartphone cameras in low-light imaging conditions. We propose an approach based on capturing pairs of short and long exposure images in rapid succession and fusing them into a single high-quality photograph using a convolutional neural network. The network input consists of a pair of images, where the short exposure image is typically noisy and has poor colors due to low lighting, and the long exposure image is susceptible to motion blur when the camera or scene objects are moving. The network is trained using a combination of real and simulated data, and we propose a novel approach for generating realistic synthetic short-long exposure image pairs. Our approach is the first one to address the joint denoising and deblurring problem using deep networks. It outperforms the existing denoising and deblurring methods in this task and makes it possible to produce good images in extremely challenging conditions. Our source code, pretrained models and data will be made publicly available to facilitate future research.

A Summary of the 4th International Workshop on Recovering 6D Object Pose

Oct 09, 2018

Abstract: This document summarizes the 4th International Workshop on Recovering 6D Object Pose, which was organized in conjunction with ECCV 2018 in Munich. The workshop featured four invited talks, oral and poster presentations of accepted workshop papers, and an introduction of the BOP benchmark for 6D object pose estimation. The workshop was attended by 100+ people working on relevant topics in both academia and industry who shared up-to-date advances and discussed open problems.

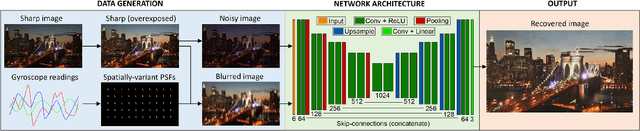

Inertial-aided Motion Deblurring with Deep Networks

Oct 01, 2018

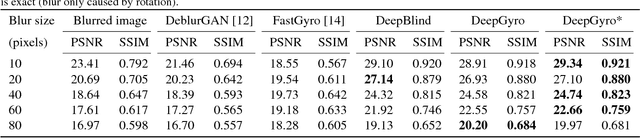

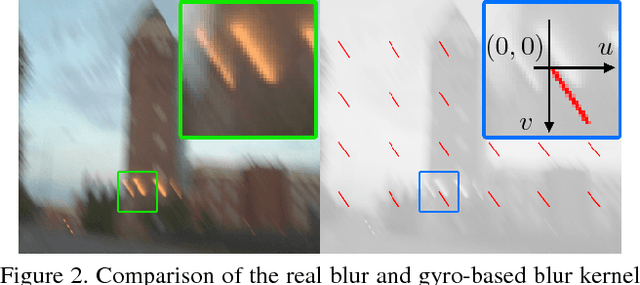

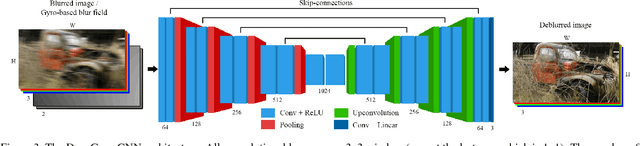

Abstract: We propose an inertial-aided deblurring method that incorporates gyroscope measurements into a convolutional neural network (CNN). With the help of inertial measurements, it can handle extremely strong and spatially-variant motion blur. At the same time, the image data is used to overcome the limitations of gyro-based blur estimation. To train our network, we also introduce a novel way of generating realistic training data using the gyroscope. The evaluation shows a clear improvement in visual quality over the state-of-the-art while achieving real-time performance. Furthermore, the method is shown to improve the performance of existing feature detectors and descriptors under motion blur.

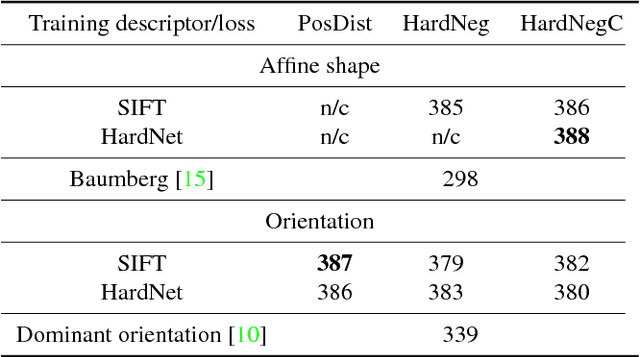

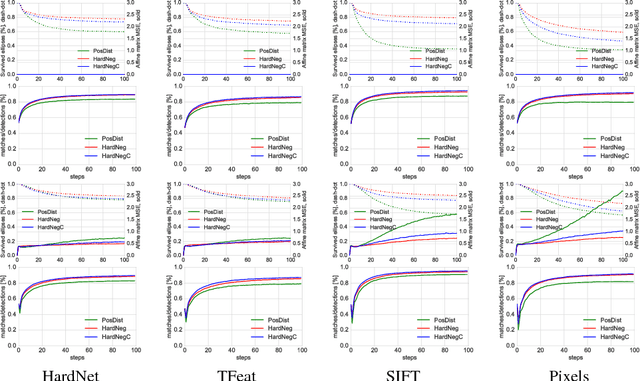

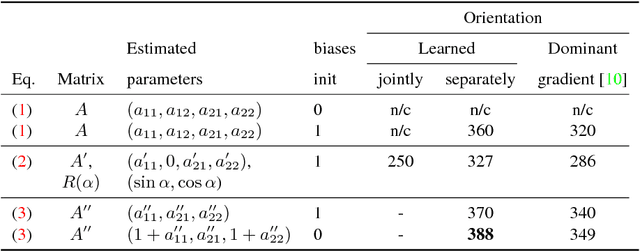

Repeatability Is Not Enough: Learning Affine Regions via Discriminability

Aug 28, 2018

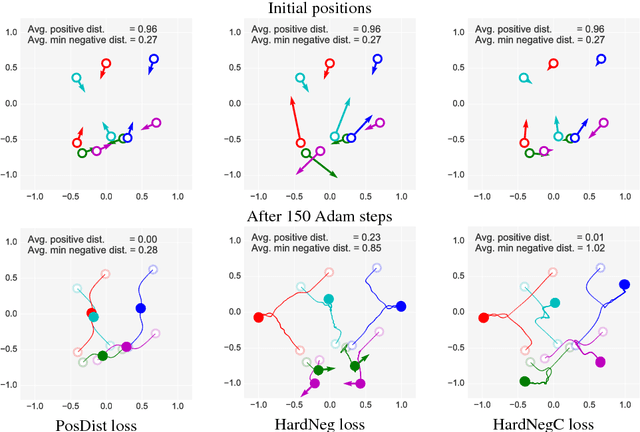

Abstract: A method for learning local affine-covariant regions is presented. We show that maximizing geometric repeatability does not lead to local regions, a.k.a. features, that are reliably matched, and this necessitates descriptor-based learning. We explore factors that influence such learning and registration: the loss function, descriptor type, geometric parametrization, and the trade-off between matchability and geometric accuracy, and propose a novel hard negative-constant loss function for learning of affine regions. The affine shape estimator -- AffNet -- trained with the hard negative-constant loss outperforms the state-of-the-art in bag-of-words image retrieval and wide baseline stereo. The proposed training process does not require precisely geometrically aligned patches. The source code and trained weights are available at https://github.com/ducha-aiki/affnet
