Jinwoo Shin

Korea Advanced Institute of Science and Technology

Co$^2$L: Contrastive Continual Learning

Jun 28, 2021

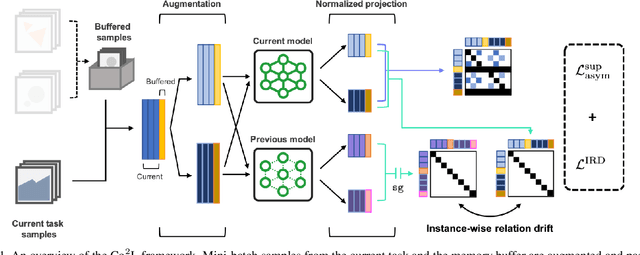

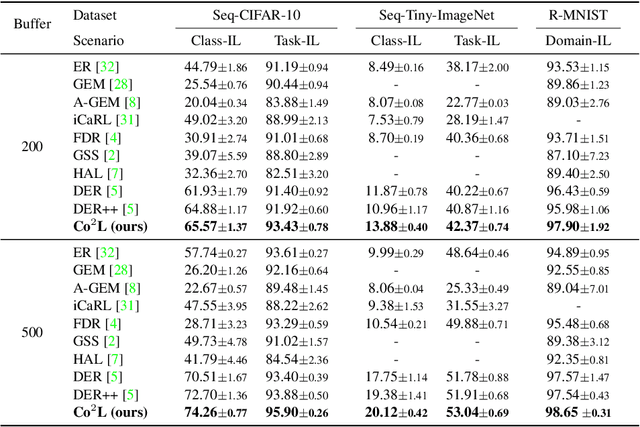

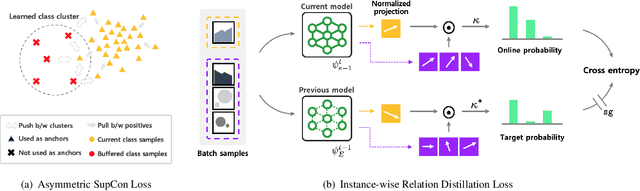

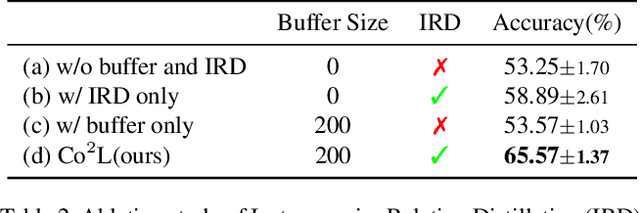

Abstract: Recent breakthroughs in self-supervised learning show that such algorithms learn visual representations that can be transferred better to unseen tasks than joint-training methods relying on task-specific supervision. In this paper, we find that a similar result holds in the continual learning context: contrastively learned representations are more robust against catastrophic forgetting than jointly trained representations. Based on this novel observation, we propose a rehearsal-based continual learning algorithm that focuses on continually learning and maintaining transferable representations. More specifically, the proposed scheme (1) learns representations using the contrastive learning objective, and (2) preserves learned representations using a self-supervised distillation step. We conduct extensive experimental validations on popular benchmark image classification datasets, where our method sets a new state of the art.

Scaling Neural Tangent Kernels via Sketching and Random Features

Jun 15, 2021

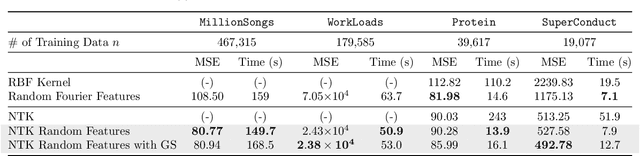

Abstract: The Neural Tangent Kernel (NTK) characterizes the behavior of infinitely-wide neural networks trained under least squares loss by gradient descent. Recent works also report that NTK regression can outperform finitely-wide neural networks trained on small-scale datasets. However, the computational complexity of kernel methods has limited their use in large-scale learning tasks. To accelerate learning with NTK, we design a near input-sparsity time approximation algorithm for NTK, by sketching the polynomial expansions of arc-cosine kernels: our sketch for the convolutional counterpart of NTK (CNTK) can transform any image using a linear runtime in the number of pixels. Furthermore, we prove a spectral approximation guarantee for the NTK matrix, by combining random features (based on leverage score sampling) of the arc-cosine kernels with a sketching algorithm. We benchmark our methods on various large-scale regression and classification tasks and show that a linear regressor trained on our CNTK features matches the accuracy of exact CNTK on the CIFAR-10 dataset while achieving a 150x speedup.

Self-Improved Retrosynthetic Planning

Jun 09, 2021

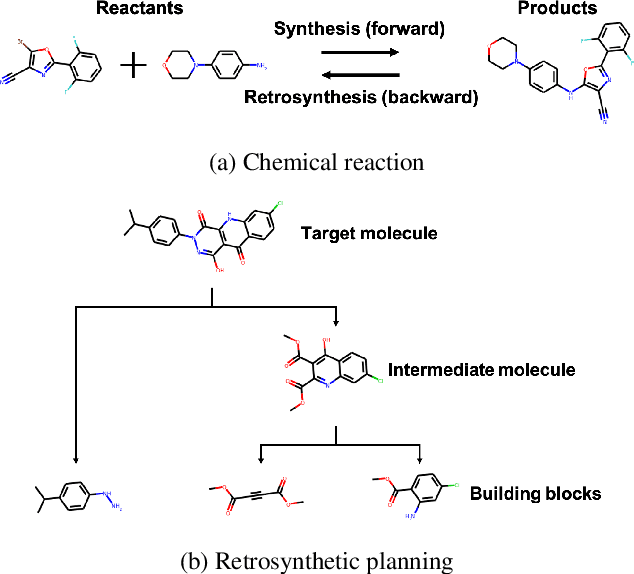

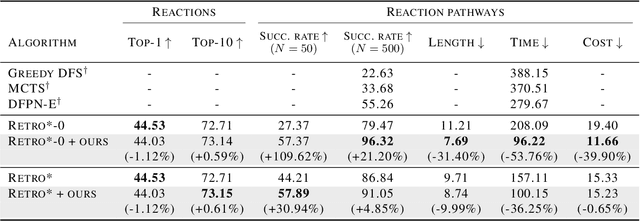

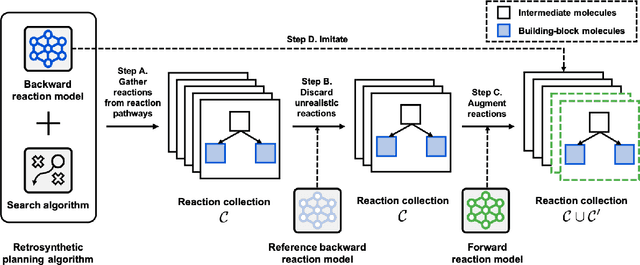

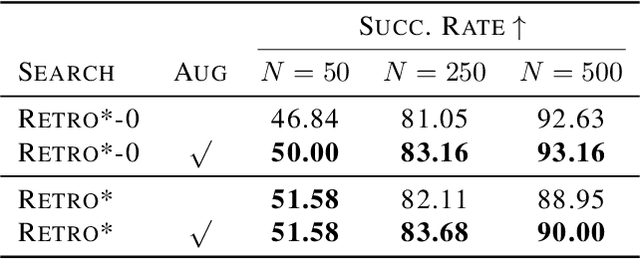

Abstract: Retrosynthetic planning is a fundamental problem in chemistry for finding a pathway of reactions to synthesize a target molecule. Recently, search algorithms have shown promising results for solving this problem by using deep neural networks (DNNs) to expand their candidate solutions, i.e., adding new reactions to reaction pathways. However, the existing works along this line are suboptimal; the retrosynthetic planning problem requires the reaction pathways to be (a) represented by real-world reactions and (b) executable using "building block" molecules, yet the DNNs expand reaction pathways without fully incorporating such requirements. Motivated by this, we propose an end-to-end framework for directly training the DNNs towards generating reaction pathways with the desirable properties. Our main idea is based on a self-improving procedure that trains the model to imitate successful trajectories found by itself. We also propose a novel reaction augmentation scheme based on a forward reaction model. Our experiments demonstrate that our scheme significantly improves the success rate of solving the retrosynthetic problem from 86.84% to 96.32% while maintaining the performance of the DNN for predicting valid reactions.

RetCL: A Selection-based Approach for Retrosynthesis via Contrastive Learning

Jun 03, 2021

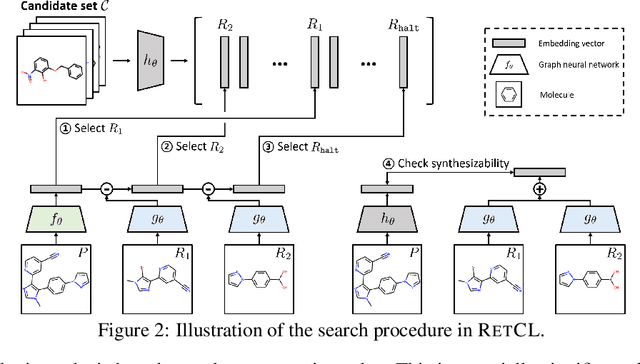

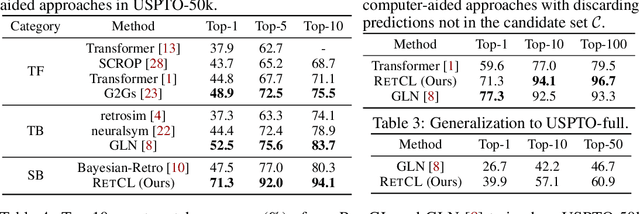

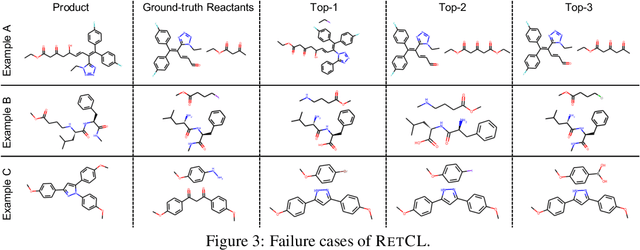

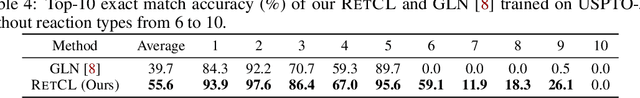

Abstract: Retrosynthesis, whose goal is to find a set of reactants for synthesizing a target product, is an emerging research area of deep learning. While the existing approaches have shown promising results, they currently lack the ability to consider availability (e.g., stability or purchasability) of the reactants or generalize to unseen reaction templates (i.e., chemical reaction rules). In this paper, we propose a new approach that mitigates the issues by reformulating retrosynthesis into a selection problem of reactants from a candidate set of commercially available molecules. To this end, we design an efficient reactant selection framework, named RetCL (retrosynthesis via contrastive learning), for enumerating all of the candidate molecules based on selection scores computed by graph neural networks. For learning the score functions, we also propose a novel contrastive training scheme with hard negative mining. Extensive experiments demonstrate the benefits of the proposed selection-based approach. For example, when all 671k reactants in the USPTO database are given as candidates, our RetCL achieves top-1 exact match accuracy of $71.3\%$ for the USPTO-50k benchmark, while a recent transformer-based approach achieves $59.6\%$. We also demonstrate that RetCL generalizes well to unseen templates in various settings in contrast to template-based approaches.

Consistency and Monotonicity Regularization for Neural Knowledge Tracing

May 03, 2021

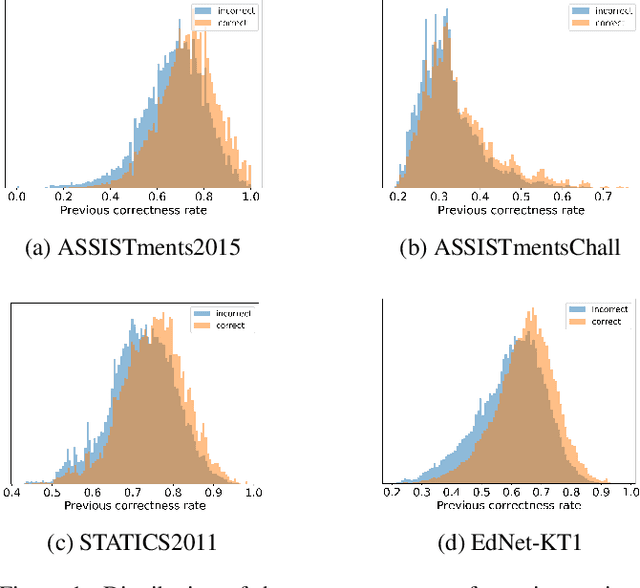

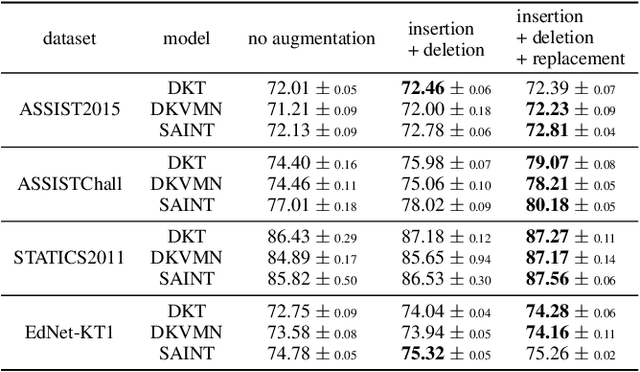

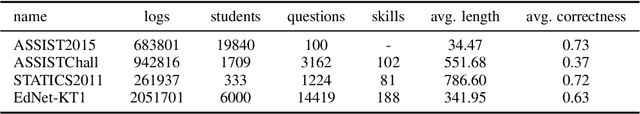

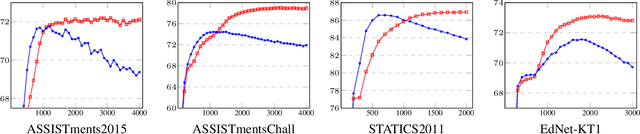

Abstract: Knowledge Tracing (KT), tracking a human's knowledge acquisition, is a central component in online learning and AI in Education. In this paper, we present a simple, yet effective strategy to improve the generalization ability of KT models: we propose three types of novel data augmentation, coined replacement, insertion, and deletion, along with corresponding regularization losses that impose certain consistency or monotonicity biases on the model's predictions for the original and augmented sequence. Extensive experiments on various KT benchmarks show that our regularization scheme consistently improves model performance, under 3 widely-used neural networks and 4 public benchmarks, e.g., it yields a 6.3% improvement in AUC under the DKT model and the ASSISTmentsChall dataset.
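
The three augmentations named in the abstract can be illustrated on a toy interaction sequence. The following is a rough sketch only: representing an interaction as a `(question_id, is_correct)` pair, the function names, and the sampling choices are all assumptions made for illustration, not details given in the abstract.

```python
import random

# Hypothetical representation: each interaction is (question_id, is_correct).

def replace_questions(seq, question_pool, prob, rng):
    """Replacement: swap some question ids for ones from the pool, keeping responses."""
    return [
        (rng.choice(question_pool), correct) if rng.random() < prob else (q, correct)
        for q, correct in seq
    ]

def insert_interactions(seq, question_pool, n_insert, correct, rng):
    """Insertion: add n_insert synthetic interactions with a fixed response label."""
    out = list(seq)
    for _ in range(n_insert):
        pos = rng.randrange(len(out) + 1)  # any position, including the end
        out.insert(pos, (rng.choice(question_pool), correct))
    return out

def delete_interactions(seq, n_delete, correct, rng):
    """Deletion: remove up to n_delete interactions whose response equals `correct`."""
    candidates = [i for i, (_, c) in enumerate(seq) if c == correct]
    drop = set(rng.sample(candidates, min(n_delete, len(candidates))))
    return [item for i, item in enumerate(seq) if i not in drop]
```

Under this reading, a regularization loss would compare the model's predictions on the original sequence with those on the augmented one, for example asking them to stay close after replacement, or to move in one direction after inserting or deleting responses of a given label.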

Random Features for the Neural Tangent Kernel

Apr 03, 2021

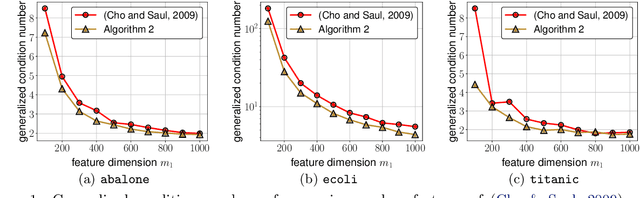

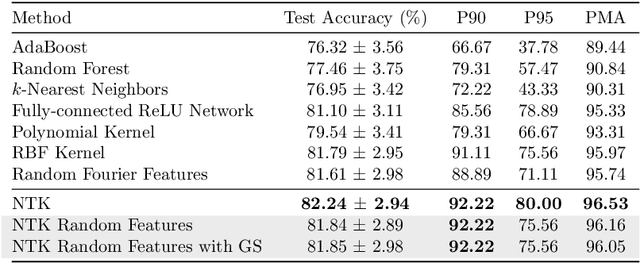

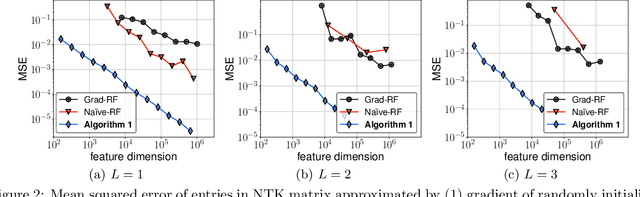

Abstract: The Neural Tangent Kernel (NTK) has revealed connections between deep neural networks and kernel methods, with insights into optimization and generalization. Motivated by this, recent works report that NTK can achieve better performance than training neural networks on small-scale datasets. However, results under large-scale settings are rarely studied due to the computational limitations of kernel methods. In this work, we propose an efficient feature map construction of the NTK of a fully-connected ReLU network, which enables us to apply it to large-scale datasets. We combine random features of the arc-cosine kernels with a sketching-based algorithm which runs in time linear in both the number of data points and the input dimension. We show that the dimension of the resulting features is much smaller than that of other baseline feature map constructions needed to achieve comparable error bounds, both in theory and in practice. We additionally utilize leverage score based sampling for improved bounds of arc-cosine random features and prove a spectral approximation guarantee of the proposed feature map to the NTK matrix of a two-layer neural network. We benchmark a variety of machine learning tasks to demonstrate the superiority of the proposed scheme. In particular, our algorithm can run tens of magnitude faster than the exact kernel methods for large-scale settings without performance loss.
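
As background for the random features of arc-cosine kernels mentioned above, the sketch below shows the plain Monte Carlo construction for the first-order arc-cosine kernel, whose closed form is $k_1(x, y) = \frac{1}{\pi}\lVert x\rVert \lVert y\rVert \left(\sin\theta + (\pi - \theta)\cos\theta\right)$ with $\theta$ the angle between $x$ and $y$. It is only an illustration: it uses standard Gaussian sampling and does not include the leverage score sampling or the sketching step described in the abstract, and the function names are made up here.

```python
import numpy as np

def arccos1_kernel(x, y):
    """Exact first-order arc-cosine kernel between two vectors."""
    nx, ny = np.linalg.norm(x), np.linalg.norm(y)
    cos_theta = np.clip(x @ y / (nx * ny), -1.0, 1.0)
    theta = np.arccos(cos_theta)
    return nx * ny * (np.sin(theta) + (np.pi - theta) * cos_theta) / np.pi

def arccos1_random_features(X, num_features, rng):
    """Random ReLU features whose inner products approximate arccos1_kernel."""
    # Each column of W is a Gaussian direction w; the feature is sqrt(2/m) * relu(w . x).
    W = rng.standard_normal((X.shape[1], num_features))
    return np.sqrt(2.0 / num_features) * np.maximum(X @ W, 0.0)

rng = np.random.default_rng(0)
X = rng.standard_normal((2, 5))
Phi = arccos1_random_features(X, 200_000, rng)
approx = Phi[0] @ Phi[1]            # Monte Carlo estimate of the kernel value
exact = arccos1_kernel(X[0], X[1])  # closed-form value for comparison
```

The feature matrix `Phi` has one row per data point, so downstream models can be linear in `Phi` and never form the full kernel matrix; the approximation error shrinks as `num_features` grows.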

Training GANs with Stronger Augmentations via Contrastive Discriminator

Mar 17, 2021

Abstract: Recent works in Generative Adversarial Networks (GANs) are actively revisiting various data augmentation techniques as an effective way to prevent discriminator overfitting. It is still unclear, however, which augmentations could actually improve GANs, and in particular, how to apply a wider range of augmentations in training. In this paper, we propose a novel way to address these questions by incorporating a recent contrastive representation learning scheme into the GAN discriminator, coined ContraD. This "fusion" enables the discriminators to work with much stronger augmentations without increasing their training instability, thereby preventing the discriminator overfitting issue in GANs more effectively. Even better, we observe that the contrastive learning itself also benefits from our GAN training, i.e., by maintaining discriminative features between real and fake samples, suggesting a strong coherence between the two worlds: good contrastive representations are also good for GAN discriminators, and vice versa. Our experimental results show that GANs with ContraD consistently improve FID and IS compared to other recent techniques incorporating data augmentations, while still maintaining highly discriminative features in the discriminator in terms of the linear evaluation. Finally, as a byproduct, we also show that our GANs trained in an unsupervised manner (without labels) can induce many conditional generative models via a simple latent sampling, leveraging the learned features of ContraD. Code is available at https://github.com/jh-jeong/ContraD.

Consistency Regularization for Adversarial Robustness

Mar 08, 2021

Abstract: Adversarial training (AT) is currently one of the most successful methods to obtain the adversarial robustness of deep neural networks. However, a significant generalization gap in the robustness obtained from AT has been problematic, leading practitioners to consider a bag of tricks for successful training, e.g., early stopping. In this paper, we investigate data augmentation (DA) techniques to address the issue. In contrast to the previous reports in the literature that DA is not effective for regularizing AT, we discover that DA can mitigate overfitting in AT surprisingly well, but the augmentations should be chosen deliberately. To utilize the effect of DA further, we propose a simple yet effective auxiliary 'consistency' regularization loss to optimize, which forces predictive distributions after attacking from two different augmentations to be similar to each other. Our experimental results demonstrate that our simple regularization scheme is applicable for a wide range of AT methods, showing consistent yet significant improvements in the test robust accuracy. More remarkably, we also show that our method could significantly help the model to generalize its robustness against unseen adversaries, e.g., other types or larger perturbations compared to those used during training. Code is available at https://github.com/alinlab/consistency-adversarial.
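
One way to make two predictive distributions "similar to each other" is to penalize a symmetric divergence between them. The sketch below uses the Jensen-Shannon divergence between softmax outputs; the choice of divergence, the function names, and the toy logits are assumptions for illustration, since the abstract does not specify the exact form of the loss.

```python
import numpy as np

def softmax(logits):
    """Row-wise softmax with the usual max-subtraction for numerical stability."""
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def js_consistency(logits_a, logits_b, eps=1e-12):
    """Mean Jensen-Shannon divergence between two batches of predictions."""
    p, q = softmax(logits_a), softmax(logits_b)
    m = 0.5 * (p + q)  # mixture distribution
    kl_pm = np.sum(p * (np.log(p + eps) - np.log(m + eps)), axis=-1)
    kl_qm = np.sum(q * (np.log(q + eps) - np.log(m + eps)), axis=-1)
    return float(np.mean(0.5 * (kl_pm + kl_qm)))

# Toy logits standing in for the model's outputs on adversarial examples
# crafted from two different augmentations of the same inputs.
logits_aug1 = np.array([[2.0, 0.0, -1.0], [0.5, 0.5, 3.0]])
logits_aug2 = np.array([[1.5, 0.2, -0.8], [0.4, 0.9, 2.5]])
penalty = js_consistency(logits_aug1, logits_aug2)
```

In training, a term like `penalty` would be added to the adversarial training loss with some weight; it is zero when the two predictions agree and is bounded above by $\log 2$.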

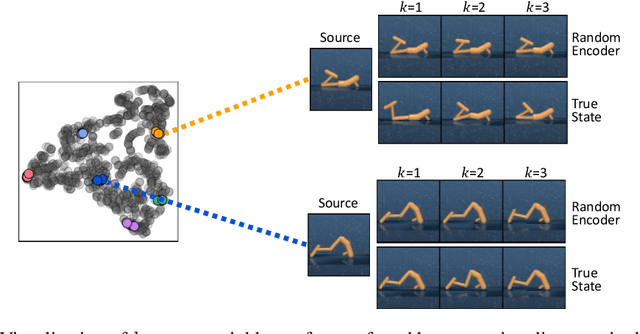

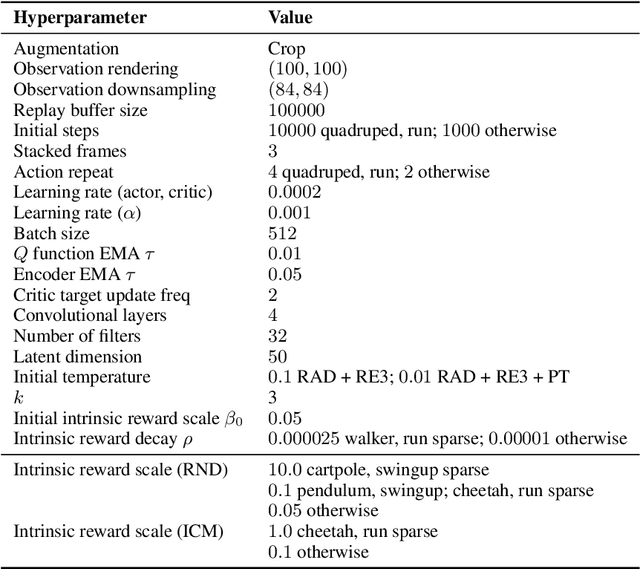

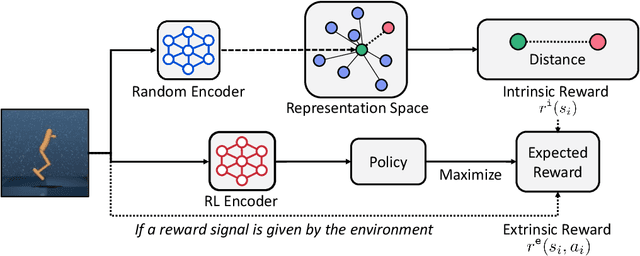

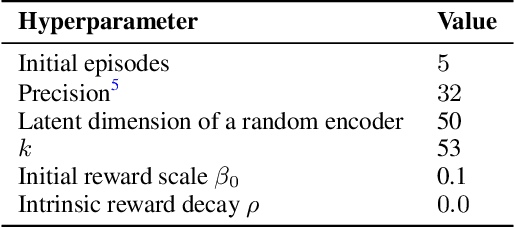

State Entropy Maximization with Random Encoders for Efficient Exploration

Feb 18, 2021

Abstract: Recent exploration methods have proven to be a recipe for improving sample-efficiency in deep reinforcement learning (RL). However, efficient exploration in high-dimensional observation spaces remains a challenge. This paper presents Random Encoders for Efficient Exploration (RE3), an exploration method that utilizes state entropy as an intrinsic reward. In order to estimate state entropy in environments with high-dimensional observations, we utilize a k-nearest neighbor entropy estimator in the low-dimensional representation space of a convolutional encoder. In particular, we find that the state entropy can be estimated in a stable and compute-efficient manner by utilizing a randomly initialized encoder, which is fixed throughout training. Our experiments show that RE3 significantly improves the sample-efficiency of both model-free and model-based RL methods on locomotion and navigation tasks from DeepMind Control Suite and MiniGrid benchmarks. We also show that RE3 allows learning diverse behaviors without extrinsic rewards, effectively improving sample-efficiency in downstream tasks. Source code and videos are available at https://sites.google.com/view/re3-rl.
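
The idea of a k-nearest neighbor intrinsic reward in the feature space of a fixed random encoder can be sketched as follows. This is a minimal illustration under stated assumptions: a random linear map stands in for the convolutional encoder, the reward takes the form `log(distance + 1)`, and all names are hypothetical rather than taken from the RE3 code.

```python
import numpy as np

def make_random_encoder(obs_dim, feat_dim, seed=0):
    """Fixed, randomly initialized linear encoder (a stand-in for a conv encoder)."""
    rng = np.random.default_rng(seed)
    W = rng.standard_normal((obs_dim, feat_dim)) / np.sqrt(obs_dim)
    return lambda obs: obs @ W  # W is never updated

def knn_intrinsic_reward(features, k=3):
    """Reward each state by the log distance to its k-th nearest neighbor."""
    diffs = features[:, None, :] - features[None, :, :]
    dists = np.linalg.norm(diffs, axis=-1)  # pairwise distances, shape (n, n)
    # After sorting a row, index 0 is the point itself (distance 0),
    # so index k is the distance to the k-th nearest other point.
    kth = np.sort(dists, axis=1)[:, k]
    return np.log(kth + 1.0)  # assumed reward form; always non-negative

encoder = make_random_encoder(obs_dim=64, feat_dim=8)
observations = np.random.default_rng(1).standard_normal((32, 64))
rewards = knn_intrinsic_reward(encoder(observations), k=3)
```

States whose features lie far from their neighbors receive larger rewards, which pushes the agent toward rarely visited regions. The pairwise distance matrix costs quadratic memory in the batch size, so this form is only suitable for small batches.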

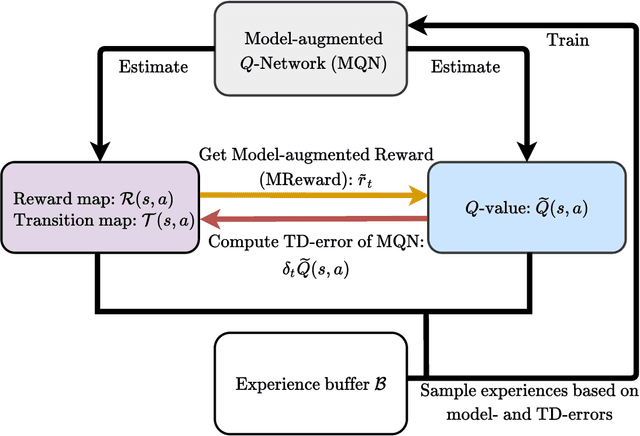

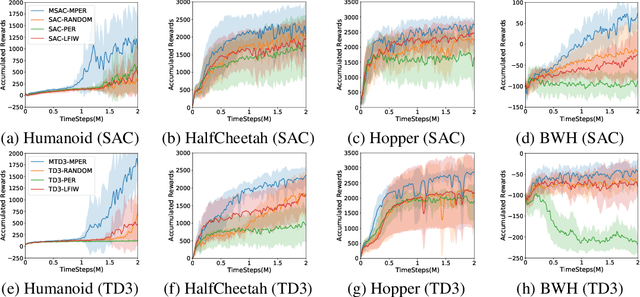

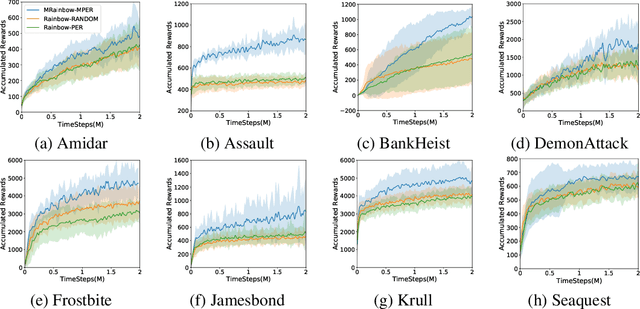

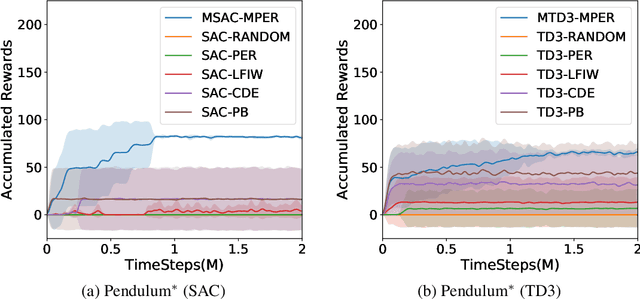

Model-Augmented Q-learning

Feb 07, 2021

Abstract: In recent years, $Q$-learning has become indispensable for model-free reinforcement learning (MFRL). However, it suffers from well-known problems such as under- and overestimation bias of the value, which may adversely affect policy learning. To resolve this issue, we propose an MFRL framework that is augmented with the components of model-based RL. Specifically, we propose to estimate not only the $Q$-values but also both the transition and the reward with a shared network. We further utilize the estimated reward from the model estimators for $Q$-learning, which promotes interaction between the estimators. We show that the proposed scheme, called Model-augmented $Q$-learning (MQL), obtains a policy-invariant solution which is identical to the solution obtained by learning with the true reward. Finally, we also provide a trick to prioritize past experiences in the replay buffer by utilizing model-estimation errors. We experimentally validate MQL built upon state-of-the-art off-policy MFRL methods, and show that MQL largely improves their performance and convergence. The proposed scheme is simple to implement and does not require additional training cost.
