Jeff Delaune

Bob

Structure-Invariant Range-Visual-Inertial Odometry

Sep 06, 2024

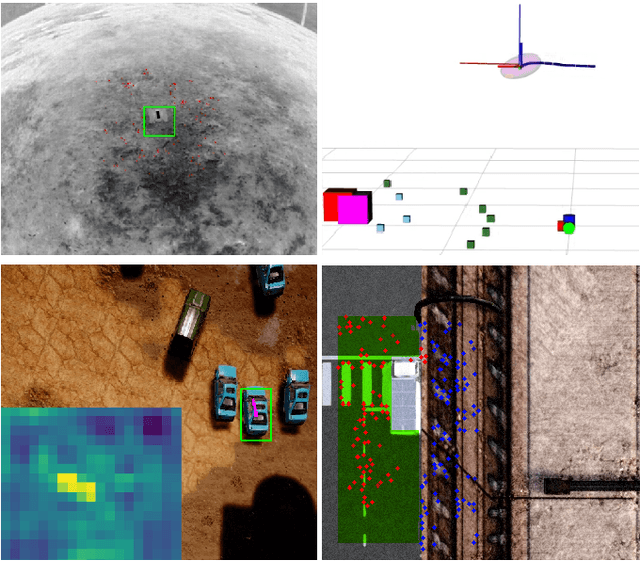

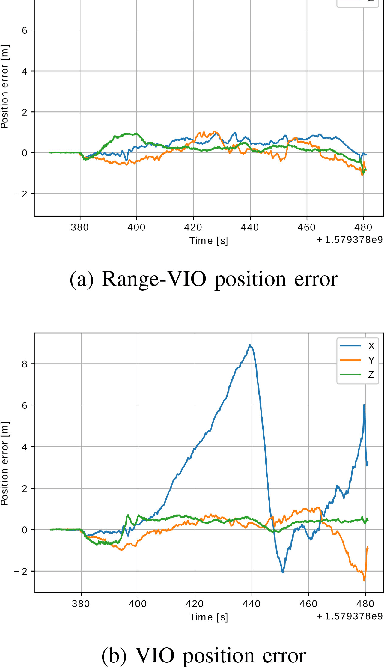

Abstract:The Mars Science Helicopter (MSH) mission aims to deploy the next generation of unmanned helicopters on Mars, targeting landing sites in highly irregular terrain such as Valles Marineris, the largest canyons in the Solar system with elevation variances of up to 8000 meters. Unlike its predecessor, the Mars 2020 mission, which relied on a state estimation system assuming planar terrain, MSH requires a novel approach due to the complex topography of the landing site. This work introduces a novel range-visual-inertial odometry system tailored for the unique challenges of the MSH mission. Our system extends the state-of-the-art xVIO framework by fusing consistent range information with visual and inertial measurements, preventing metric scale drift in the absence of visual-inertial excitation (mono camera and constant velocity descent), and enabling landing on any terrain structure, without requiring any planar terrain assumption. Through extensive testing in image-based simulations using actual terrain structure and textures collected in Mars orbit, we demonstrate that our range-VIO approach estimates terrain-relative velocity meeting the stringent mission requirements, and outperforming existing methods.

INSANE: Cross-Domain UAV Data Sets with Increased Number of Sensors for developing Advanced and Novel Estimators

Oct 17, 2022

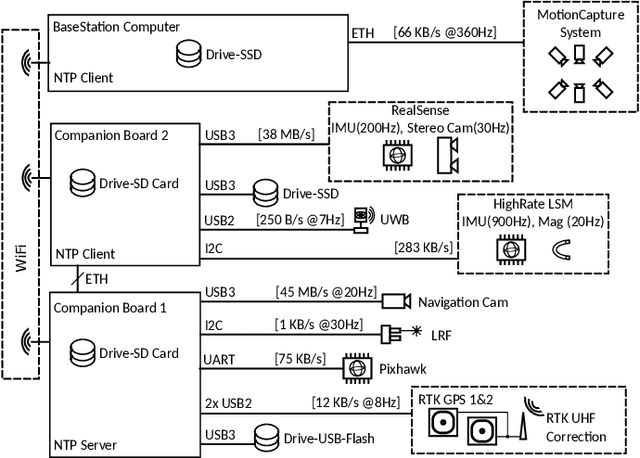

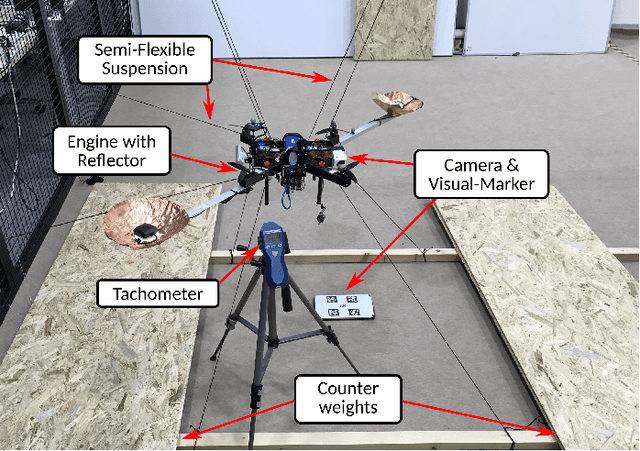

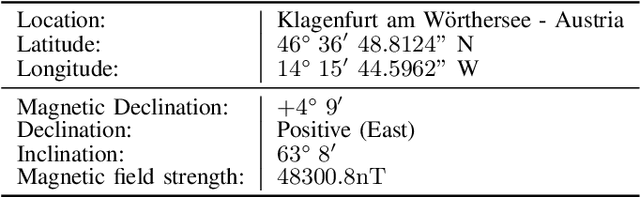

Abstract:For real-world applications, autonomous mobile robotic platforms must be capable of navigating safely in a multitude of different and dynamic environments with accurate and robust localization being a key prerequisite. To support further research in this domain, we present the INSANE data sets - a collection of versatile Micro Aerial Vehicle (MAV) data sets for cross-environment localization. The data sets provide various scenarios with multiple stages of difficulty for localization methods. These scenarios range from trajectories in the controlled environment of an indoor motion capture facility, to experiments where the vehicle performs an outdoor maneuver and transitions into a building, requiring changes of sensor modalities, up to purely outdoor flight maneuvers in a challenging Mars analog environment to simulate scenarios which current and future Mars helicopters would need to perform. The presented work aims to provide data that reflects real-world scenarios and sensor effects. The extensive sensor suite includes various sensor categories, including multiple Inertial Measurement Units (IMUs) and cameras. Sensor data is made available as raw measurements and each data set provides highly accurate ground truth, including the outdoor experiments where a dual Real-Time Kinematic (RTK) Global Navigation Satellite System (GNSS) setup provides sub-degree and centimeter accuracy (1-sigma). The sensor suite also includes a dedicated high-rate IMU to capture all the vibration dynamics of the vehicle during flight to support research on novel machine learning-based sensor signal enhancement methods for improved localization. The data sets and post-processing tools are available at: https://sst.aau.at/cns/datasets

TRADE: Object Tracking with 3D Trajectory and Ground Depth Estimates for UAVs

Oct 07, 2022

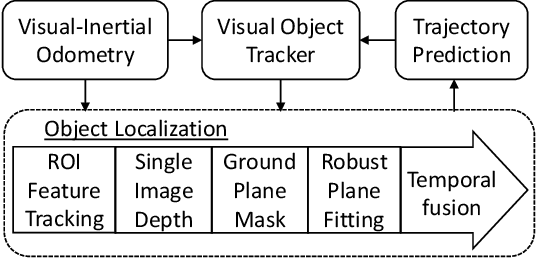

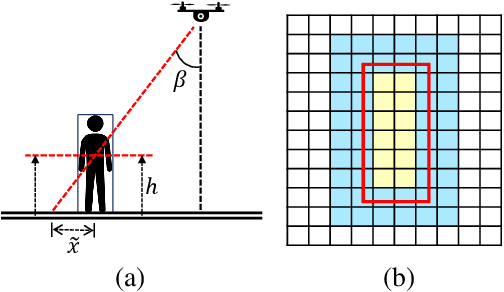

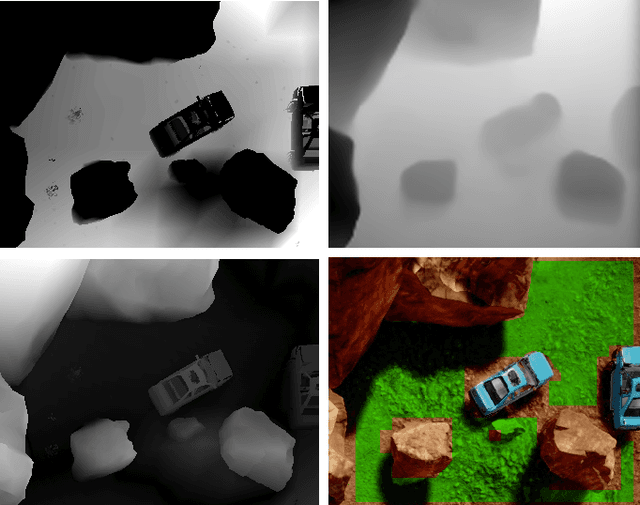

Abstract:We propose TRADE for robust tracking and 3D localization of a moving target in cluttered environments, from UAVs equipped with a single camera. Ultimately, TRADE enables 3D-aware target following. Tracking-by-detection approaches are vulnerable to target switching, especially between similar objects. Thus, TRADE predicts and incorporates the target 3D trajectory to select the right target from the tracker's response map. Unlike in static environments, depth estimation of a moving target from a single camera is an ill-posed problem. Therefore, we propose a novel 3D localization method for ground targets on complex terrain. It reasons about scene geometry by combining ground plane segmentation, depth-from-motion and single-image depth estimation. The benefits of using TRADE are demonstrated as tracking robustness and depth accuracy on several dynamic scenes simulated in this work. Additionally, we demonstrate autonomous target following using a thermal camera by running TRADE on a quadcopter's onboard computer.

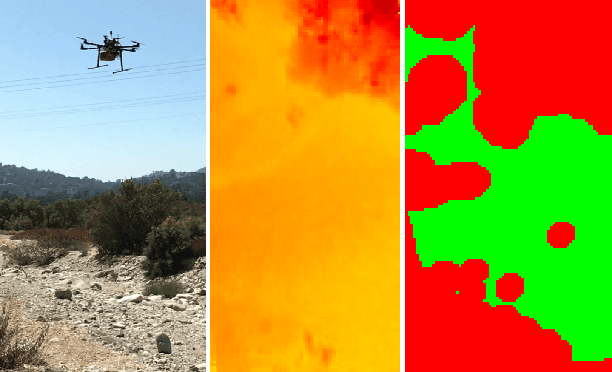

Data-Efficient Collaborative Decentralized Thermal-Inertial Odometry

Sep 14, 2022

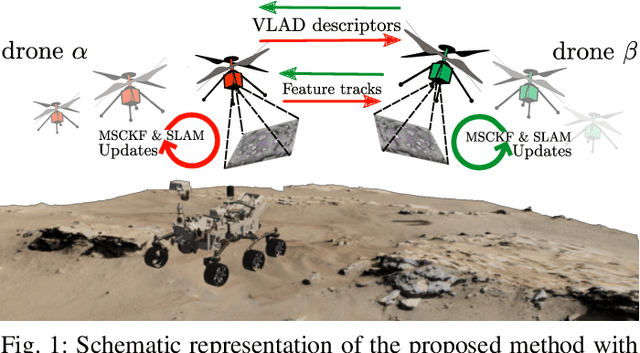

Abstract:We propose a system solution to achieve data-efficient, decentralized state estimation for a team of flying robots using thermal images and inertial measurements. Each robot can fly independently, and exchange data when possible to refine its state estimate. Our system front-end applies an online photometric calibration to refine the thermal images so as to enhance feature tracking and place recognition. Our system back-end uses a covariance-intersection fusion strategy to neglect the cross-correlation between agents so as to lower memory usage and computational cost. The communication pipeline uses Vector of Locally Aggregated Descriptors (VLAD) to construct a request-response policy that requires low bandwidth usage. We test our collaborative method on both synthetic and real-world data. Our results show that the proposed method improves trajectory estimation by up to 46% with respect to an individual-agent approach, while reducing the communication exchange by up to 89%. Datasets and code are released to the public, extending the already-public JPL xVIO library.
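The covariance-intersection idea mentioned in this abstract can be illustrated with a minimal textbook sketch. It is not the paper's implementation: the 2D state, the function names (`inv2`, `matvec`, `covariance_intersection`), and the grid search over the weight `w` are all assumptions made for illustration. Two estimates with unknown cross-correlation are fused by a convex combination of their information matrices, and `w` is chosen to minimize the trace of the fused covariance.

```python
from typing import List, Tuple

Mat = List[List[float]]
Vec = List[float]


def inv2(m: Mat) -> Mat:
    """Invert a 2x2 matrix (assumed non-singular)."""
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    return [[m[1][1] / det, -m[0][1] / det],
            [-m[1][0] / det, m[0][0] / det]]


def matvec(m: Mat, v: Vec) -> Vec:
    """Multiply a 2x2 matrix by a 2-vector."""
    return [m[0][0] * v[0] + m[0][1] * v[1],
            m[1][0] * v[0] + m[1][1] * v[1]]


def covariance_intersection(xa: Vec, Pa: Mat, xb: Vec, Pb: Mat,
                            steps: int = 100) -> Tuple[Vec, Mat, float]:
    """Fuse (xa, Pa) and (xb, Pb) without knowing their cross-correlation.

    P^-1 = w * Pa^-1 + (1 - w) * Pb^-1
    x    = P * (w * Pa^-1 * xa + (1 - w) * Pb^-1 * xb)
    The weight w in [0, 1] is picked by a simple grid search on trace(P).
    """
    Ia, Ib = inv2(Pa), inv2(Pb)
    ya, yb = matvec(Ia, xa), matvec(Ib, xb)
    best = None
    for k in range(steps + 1):
        w = k / steps
        info = [[w * Ia[i][j] + (1 - w) * Ib[i][j] for j in range(2)]
                for i in range(2)]
        P = inv2(info)
        trace = P[0][0] + P[1][1]
        if best is None or trace < best[0]:
            y = [w * ya[i] + (1 - w) * yb[i] for i in range(2)]
            best = (trace, matvec(P, y), P, w)
    return best[1], best[2], best[3]


if __name__ == "__main__":
    # Agent A is confident along x, agent B along y (made-up numbers).
    x, P, w = covariance_intersection([0.0, 0.0], [[1.0, 0.0], [0.0, 4.0]],
                                      [2.0, 2.0], [[4.0, 0.0], [0.0, 1.0]])
    print(x, P, w)
```

Because the fusion never needs the cross-covariance between the two estimates, each agent only has to store and exchange its own state and covariance, which is consistent with the memory and bandwidth motivation stated in the abstract.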

* 8 pages, 8 figures

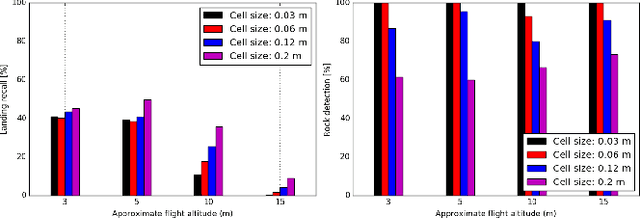

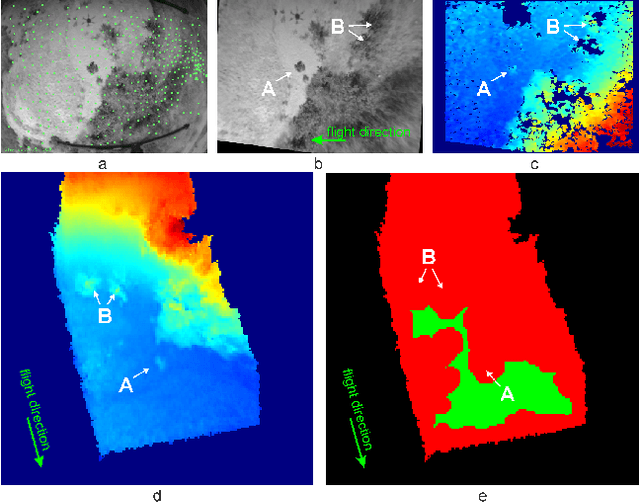

Optimizing Terrain Mapping and Landing Site Detection for Autonomous UAVs

May 07, 2022

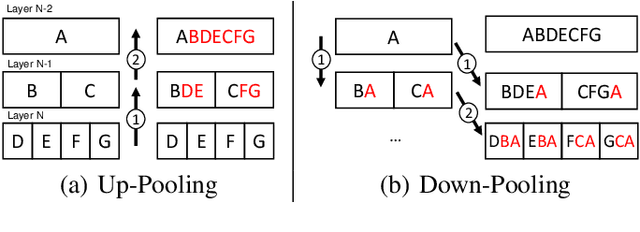

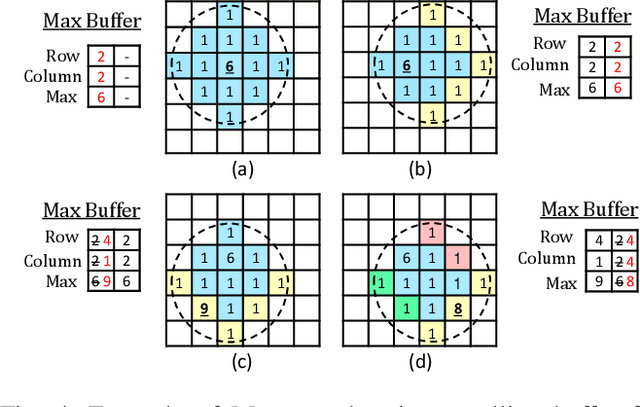

Abstract:The next generation of Mars rotorcraft requires on-board autonomous hazard-avoidance landing. To this end, this work proposes a system that performs continuous multi-resolution height map reconstruction and safe landing spot detection. Structure-from-Motion measurements are aggregated in a pyramid structure using a novel Optimal Mixture of Gaussians formulation that provides a comprehensive uncertainty model. Our multi-resolution pyramid is built more efficiently and accurately than past work by decoupling pyramid filling from the measurement updates of different resolutions. To detect the safest landing location, after an optimized hazard segmentation, we use a mean shift algorithm on multiple distance transform peaks to account for terrain roughness and uncertainty. The benefits of our contributions are evaluated on real and synthetic flight data.
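The role of a distance transform in landing site selection can be illustrated with a much simplified sketch. It is not the method of the paper: it assumes a binary hazard grid, uses a breadth-first 4-connected (Manhattan) distance instead of a Euclidean one, and simply takes the single farthest cell rather than running mean shift over several peaks. The names `distance_to_hazard` and `safest_cell` are hypothetical.

```python
from collections import deque
from typing import List, Optional, Tuple

Grid = List[List[int]]


def distance_to_hazard(hazard: Grid) -> List[List[Optional[int]]]:
    """Breadth-first distance (in cells) from every cell to the nearest hazard.

    hazard[r][c] is 1 for a hazardous cell and 0 for a safe one.
    Cells stay None only if the grid contains no hazard at all.
    """
    rows, cols = len(hazard), len(hazard[0])
    dist: List[List[Optional[int]]] = [[None] * cols for _ in range(rows)]
    queue = deque()
    for r in range(rows):
        for c in range(cols):
            if hazard[r][c]:
                dist[r][c] = 0
                queue.append((r, c))
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and dist[nr][nc] is None:
                dist[nr][nc] = dist[r][c] + 1
                queue.append((nr, nc))
    return dist


def safest_cell(hazard: Grid) -> Optional[Tuple[int, int, int]]:
    """Return (row, col, distance) of the cell farthest from any hazard.

    Ties are broken by row-major order. Returns None if there is no hazard,
    since every cell is then equally safe under this simple criterion.
    """
    dist = distance_to_hazard(hazard)
    best = None
    for r, row in enumerate(dist):
        for c, d in enumerate(row):
            if d is not None and (best is None or d > best[2]):
                best = (r, c, d)
    return best


if __name__ == "__main__":
    # Made-up 5x5 map with hazards all around the border.
    grid = [
        [1, 1, 1, 1, 1],
        [1, 0, 0, 0, 1],
        [1, 0, 0, 0, 1],
        [1, 0, 0, 0, 1],
        [1, 1, 1, 1, 1],
    ]
    print(safest_cell(grid))
```

A peak of the distance map marks the center of the largest hazard-free area around it, which is why such peaks are natural landing site candidates.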

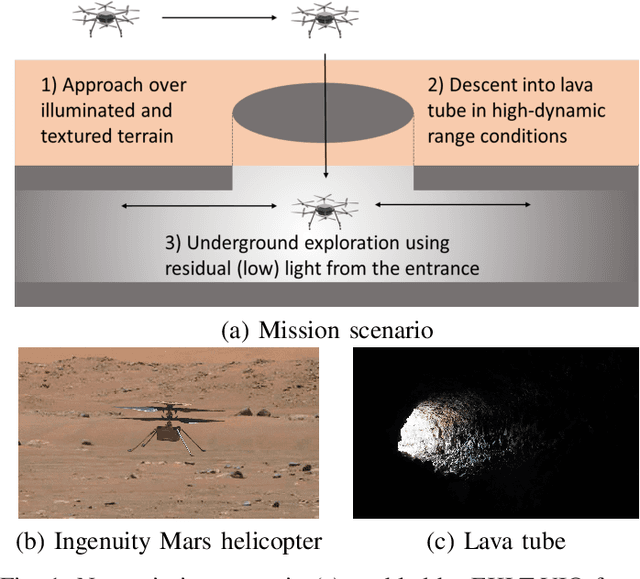

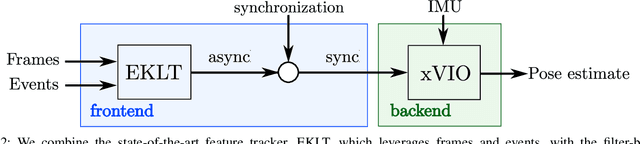

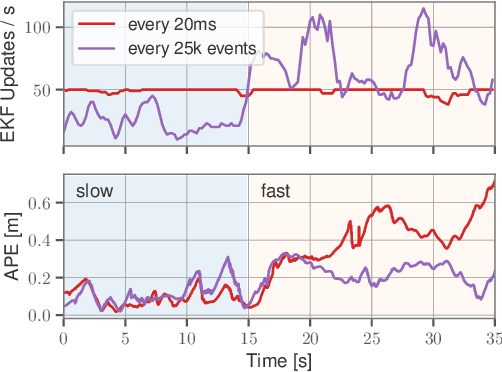

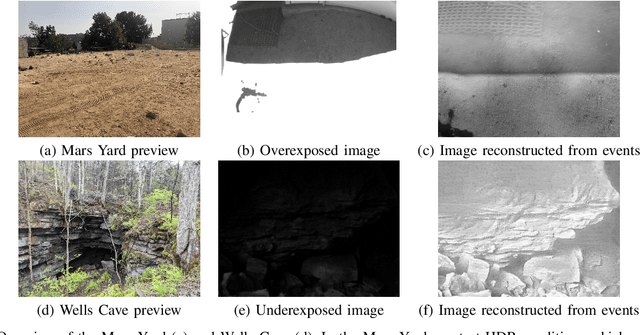

Exploring Event Camera-based Odometry for Planetary Robots

Apr 12, 2022

Abstract:Due to their resilience to motion blur and high robustness in low-light and high dynamic range conditions, event cameras are poised to become enabling sensors for vision-based exploration on future Mars helicopter missions. However, existing event-based visual-inertial odometry (VIO) algorithms either suffer from high tracking errors or are brittle, since they cannot cope with significant depth uncertainties caused by an unforeseen loss of tracking or other effects. In this work, we introduce EKLT-VIO, which addresses both limitations by combining a state-of-the-art event-based frontend with a filter-based backend. This makes it both accurate and robust to uncertainties, outperforming event- and frame-based VIO algorithms on challenging benchmarks by 32%. In addition, we demonstrate accurate performance in hover-like conditions (outperforming existing event-based methods) as well as high robustness in newly collected Mars-like and high-dynamic-range sequences, where existing frame-based methods fail. In doing so, we show that event-based VIO is the way forward for vision-based exploration on Mars.

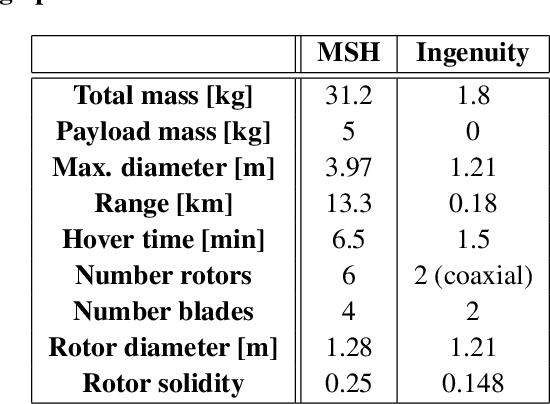

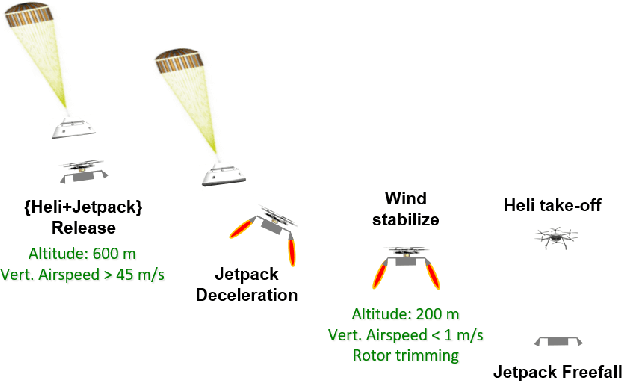

Mid-Air Helicopter Delivery at Mars Using a Jetpack

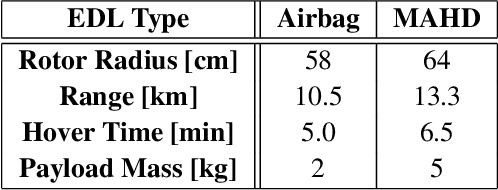

Mar 07, 2022

Abstract:Mid-Air Helicopter Delivery (MAHD) is a new Entry, Descent and Landing (EDL) architecture to enable in situ mobility for Mars science at lower cost than previous missions. It uses a jetpack to slow down a Mars Science Helicopter (MSH) after separation from the backshell, and reach aerodynamic conditions suitable for helicopter take-off in mid-air. For given aeroshell dimensions, only MAHD's lander-free approach leaves enough room in the aeroshell to accommodate the largest rotor option for MSH. This drastically improves flight performance, notably allowing a 150% increase in science payload mass. Compared to heritage EDL approaches, the simpler MAHD architecture is also likely to reduce cost, and enables access to more hazardous and higher-elevation terrains on Mars. This paper introduces a design for the MAHD system architecture and operations. We present a mechanical configuration that fits both MSH and the jetpack within the 2.65-m Mars heritage aeroshell, and a jetpack control architecture which fully leverages the available helicopter avionics. We discuss preliminary numerical models of the flow dynamics resulting from the interaction between the jets, the rotors and the side winds. We define a force-torque sensing architecture capable of handling the wind and trimming the rotors to prepare for safe take-off. Finally, we analyze the dynamic environment and closed-loop control simulation results to demonstrate the preliminary feasibility of MAHD.

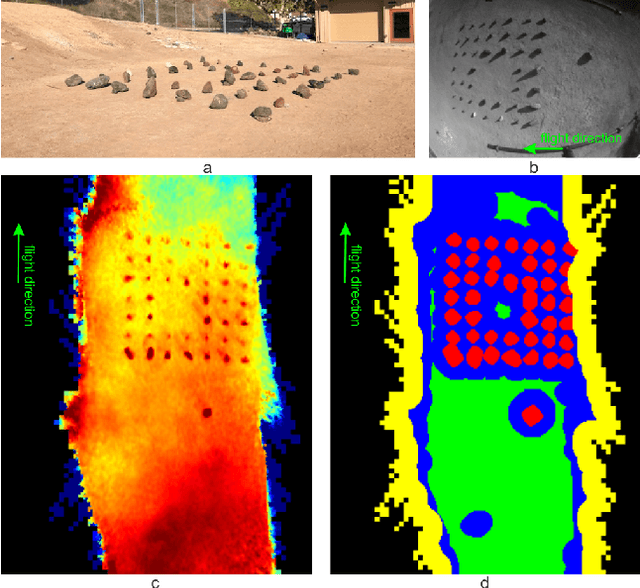

Multi-Resolution Elevation Mapping and Safe Landing Site Detection with Applications to Planetary Rotorcraft

Nov 11, 2021

Abstract:In this paper, we propose a resource-efficient approach to provide an autonomous UAV with an on-board perception method to detect safe, hazard-free landing sites during flights over complex 3D terrain. We aggregate 3D measurements acquired from a sequence of monocular images by a Structure-from-Motion approach into a local, robot-centric, multi-resolution elevation map of the overflown terrain, which fuses depth measurements according to their lateral surface resolution (pixel-footprint) in a probabilistic framework based on the concept of dynamic Level of Detail. Map aggregation only requires depth maps and the associated poses, which are obtained from an onboard Visual Odometry algorithm. An efficient landing site detection method then exploits the features of the underlying multi-resolution map to detect safe landing sites based on slope, roughness, and quality of the reconstructed terrain surface. The performance of the mapping and landing site detection modules is analyzed independently and jointly in simulated and real-world experiments in order to establish the efficacy of the proposed approach.

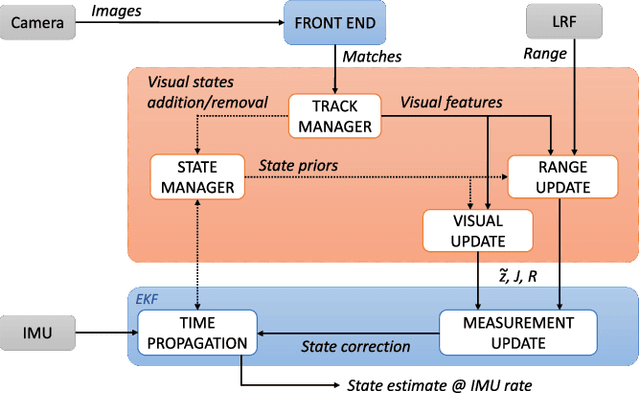

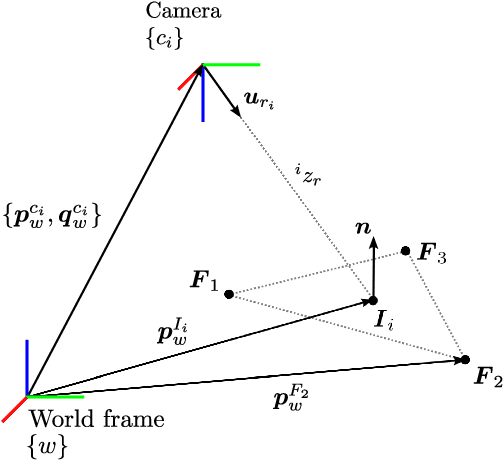

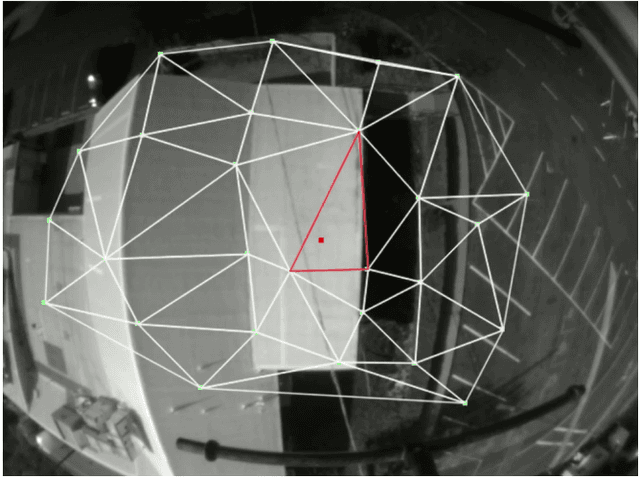

Range-Visual-Inertial Odometry: Scale Observability Without Excitation

Mar 28, 2021

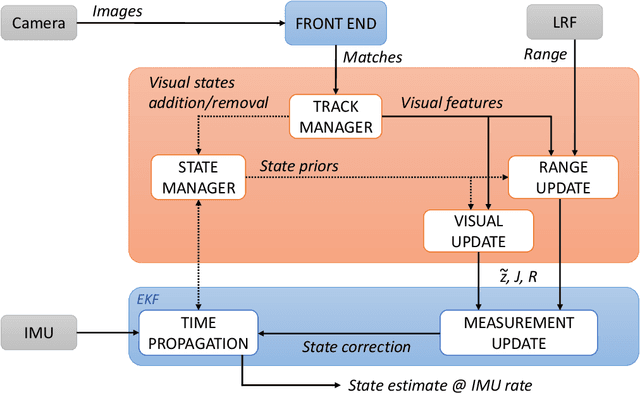

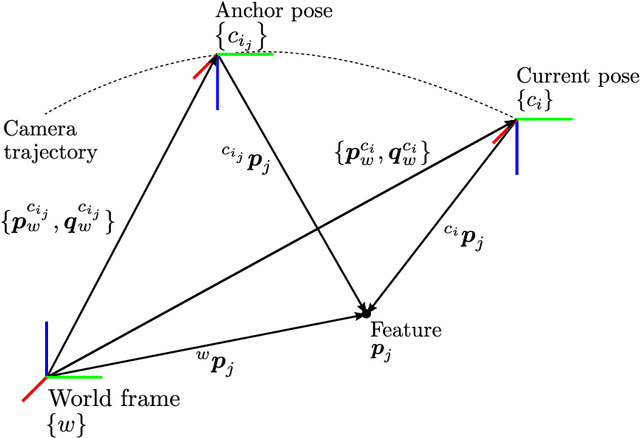

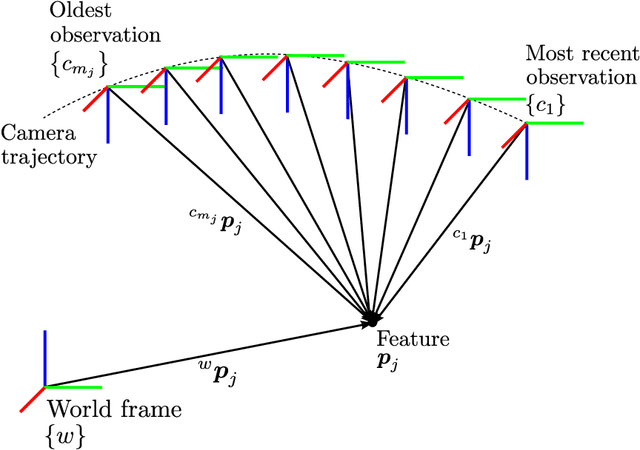

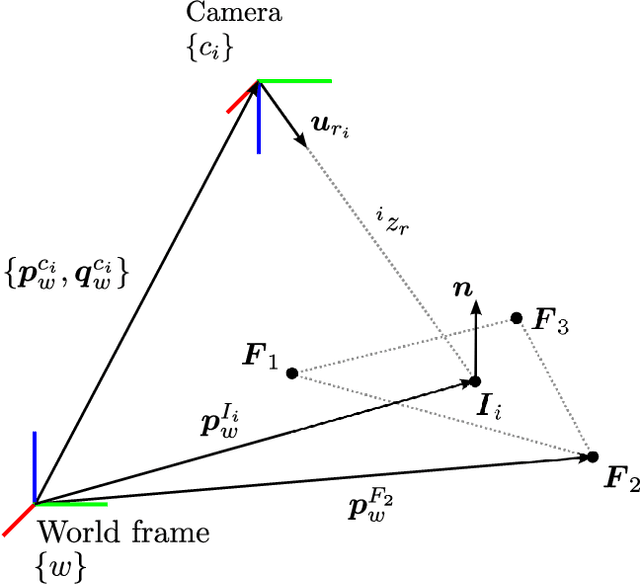

Abstract:Traveling at constant velocity is the most efficient trajectory for most robotics applications. Unfortunately, without accelerometer excitation, monocular Visual-Inertial Odometry (VIO) cannot observe scale and suffers severe error drift. This was the main motivation for incorporating a 1D laser range finder in the navigation system for NASA's Ingenuity Mars Helicopter. However, Ingenuity's simplified approach was limited to flat terrains. The current paper introduces a novel range measurement update model based on using facet constraints. The resulting range-VIO approach is no longer limited to flat scenes, but extends to arbitrary structure for generic robotic applications. An important theoretical result shows that scale is no longer in the right nullspace of the observability matrix for zero or constant acceleration motion. In practical terms, this means that scale becomes observable under constant-velocity motion, which enables simple and robust autonomous operations over arbitrary terrain. Due to the small range finder footprint, range-VIO retains the minimal size, weight, and power attributes of VIO, with similar runtime. The benefits are evaluated on real flight data representative of common aerial robotics scenarios. Robustness is demonstrated using indoor stress data and full-state ground truth. We release our software framework, called xVIO, as open source.
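The scale ambiguity described in this abstract can be sketched with a simplified model. The notation and assumptions here are illustrative, not taken from the paper: no noise, known biases, constant attitude $R$, point features $\mathbf{l}_j$, a projection function $\pi$, and a range beam that hits the terrain at $\mathbf{x}_{\text{hit}}$.

$$
\begin{aligned}
\mathbf{p}(t) &= \mathbf{p}_0 + \mathbf{v}_0 t, \qquad \mathbf{a}(t) = \mathbf{0} \\
\mathbf{a}_m &= R^\top(\mathbf{a} - \mathbf{g}) = -R^\top \mathbf{g} \\
\mathbf{z}_j(t) &= \pi\!\left(R^\top(\mathbf{l}_j - \mathbf{p}(t))\right), \qquad \pi(\lambda \mathbf{x}) = \pi(\mathbf{x}) \ \text{for } \lambda > 0 \\
r(t) &= \lVert \mathbf{x}_{\text{hit}}(t) - \mathbf{p}(t) \rVert
\end{aligned}
$$

Under the scaling $(\mathbf{p}_0, \mathbf{v}_0, \mathbf{l}_j) \mapsto (s\,\mathbf{p}_0, s\,\mathbf{v}_0, s\,\mathbf{l}_j)$ with $s > 0$, the accelerometer reading $\mathbf{a}_m$ and every image measurement $\mathbf{z}_j$ are unchanged, so camera and IMU alone cannot distinguish $s$ at constant velocity. The range, however, becomes $s\,r(t)$, so a metric range measurement is only consistent with $s = 1$.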

xVIO: A Range-Visual-Inertial Odometry Framework

Oct 13, 2020

Abstract:xVIO is a range-visual-inertial odometry algorithm implemented at JPL. It has been demonstrated with closed-loop controls on-board unmanned rotorcraft equipped with off-the-shelf embedded computers and sensors. It can operate at daytime with visible-spectrum cameras, or at night time using thermal infrared cameras. This report is a complete technical description of xVIO. It includes an overview of the system architecture, the implementation of the navigation filter, along with the derivations of the Jacobian matrices which are not already published in the literature.
