Huiqi Deng

AgentDoG: A Diagnostic Guardrail Framework for AI Agent Safety and Security

Jan 26, 2026

Abstract:The rise of AI agents introduces complex safety and security challenges arising from autonomous tool use and environmental interactions. Current guardrail models lack agentic risk awareness and transparency in risk diagnosis. To introduce an agentic guardrail that covers complex and numerous risky behaviors, we first propose a unified three-dimensional taxonomy that orthogonally categorizes agentic risks by their source (where), failure mode (how), and consequence (what). Guided by this structured and hierarchical taxonomy, we introduce a new fine-grained agentic safety benchmark (ATBench) and a Diagnostic Guardrail framework for agent safety and security (AgentDoG). AgentDoG provides fine-grained and contextual monitoring across agent trajectories. More crucially, AgentDoG can diagnose the root causes of unsafe actions and seemingly safe but unreasonable actions, offering provenance and transparency beyond binary labels to facilitate effective agent alignment. AgentDoG variants are available in three sizes (4B, 7B, and 8B parameters) across Qwen and Llama model families. Extensive experimental results demonstrate that AgentDoG achieves state-of-the-art performance in agentic safety moderation in diverse and complex interactive scenarios. All models and datasets are openly released.

The Interaction Bottleneck of Deep Neural Networks: Discovery, Proof, and Modulation

Dec 21, 2025

Abstract:Understanding what kinds of cooperative structures deep neural networks (DNNs) can represent remains a fundamental yet insufficiently understood problem. In this work, we treat interactions as the fundamental units of such structure and investigate a largely unexplored question: how DNNs encode interactions under different levels of contextual complexity, and how these microscopic interaction patterns shape macroscopic representation capacity. To quantify this complexity, we use multi-order interactions [57], where each order reflects the amount of contextual information required to evaluate the joint interaction utility of a variable pair. This formulation enables a stratified analysis of cooperative patterns learned by DNNs. Building on this formulation, we develop a comprehensive study of interaction structure in DNNs. (i) We empirically discover a universal interaction bottleneck: across architectures and tasks, DNNs easily learn low-order and high-order interactions but consistently under-represent mid-order ones. (ii) We theoretically explain this bottleneck by proving that mid-order interactions incur the highest contextual variability, yielding large gradient variance and making them intrinsically difficult to learn. (iii) We further modulate the bottleneck by introducing losses that steer models toward emphasizing interactions of selected orders. Finally, we connect microscopic interaction structures with macroscopic representational behavior: low-order-emphasized models exhibit stronger generalization and robustness, whereas high-order-emphasized models demonstrate greater structural modeling and fitting capability. Together, these results uncover an inherent representational bias in modern DNNs and establish interaction order as a powerful lens for interpreting and guiding deep representations.
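As a rough illustration of what "order" means here, the sketch below computes a multi-order interaction for a variable pair by exact enumeration on a toy game. It assumes the commonly used definition, in which the order-m interaction of (i, j) is the average of v(S∪{i,j}) − v(S∪{i}) − v(S∪{j}) + v(S) over all contexts S of size m drawn from the remaining variables. The function name `multi_order_interaction` and the toy value function `toy_v` are hypothetical and are not taken from the paper.

```python
from itertools import combinations


def multi_order_interaction(v, players, i, j, m):
    """Average interaction utility of the pair (i, j) over all contexts of size m.

    v: function mapping a frozenset of players to a number (assumed toy model output).
    """
    context_pool = [p for p in players if p not in (i, j)]
    if not 0 <= m <= len(context_pool):
        raise ValueError("order m must be between 0 and len(players) - 2")
    deltas = []
    for combo in combinations(context_pool, m):
        S = frozenset(combo)
        # Joint effect of i and j minus their individual effects, given context S.
        deltas.append(v(S | {i, j}) - v(S | {i}) - v(S | {j}) + v(S))
    return sum(deltas) / len(deltas)


def toy_v(T):
    """Hypothetical game: players 0 and 1 cooperate, and the payoff grows with context size."""
    if 0 in T and 1 in T:
        return 1 + len(T & {2, 3})
    return 0


players = [0, 1, 2, 3]
orders = [multi_order_interaction(toy_v, players, 0, 1, m) for m in range(3)]
```

In this toy game the pair's interaction grows with the number of context variables, so `orders` separates low-order from high-order cooperation. Exact enumeration is only feasible for a handful of variables; for real networks the expectation would have to be estimated by sampling contexts.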

Attribution Explanations for Deep Neural Networks: A Theoretical Perspective

Aug 11, 2025

Abstract:Attribution explanation is a typical approach for explaining deep neural networks (DNNs), inferring an importance or contribution score for each input variable to the final output. In recent years, numerous attribution methods have been developed to explain DNNs. However, a persistent concern remains unresolved, i.e., whether and which attribution methods faithfully reflect the actual contribution of input variables to the decision-making process. The faithfulness issue undermines the reliability and practical utility of attribution explanations. We argue that these concerns stem from three core challenges. First, difficulties arise in comparing attribution methods due to their unstructured heterogeneity, differences in heuristics, formulations, and implementations that lack a unified organization. Second, most methods lack solid theoretical underpinnings, with their rationales remaining absent, ambiguous, or unverified. Third, empirically evaluating faithfulness is challenging without ground truth. Recent theoretical advances provide a promising way to tackle these challenges, attracting increasing attention. We summarize these developments, with emphasis on three key directions: (i) Theoretical unification, which uncovers commonalities and differences among methods, enabling systematic comparisons; (ii) Theoretical rationale, clarifying the foundations of existing methods; (iii) Theoretical evaluation, rigorously proving whether methods satisfy faithfulness principles. Beyond a comprehensive review, we provide insights into how these studies help deepen theoretical understanding, inform method selection, and inspire new attribution methods. We conclude with a discussion of promising open problems for further work.

Towards Attributions of Input Variables in a Coalition

Sep 23, 2023

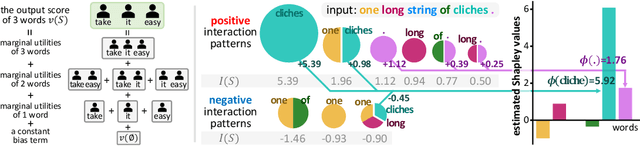

Abstract:This paper aims to develop a new attribution method to explain the conflict between individual variables' attributions and their coalition's attribution from a fully new perspective. First, we find that the Shapley value can be reformulated as the allocation of Harsanyi interactions encoded by the AI model. Second, based on the re-allocation of interactions, we extend the Shapley value to the attribution of coalitions. Third, we derive the fundamental mechanism behind the conflict: it comes from interactions that contain only some of the variables in the coalition.
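The reformulation mentioned in the abstract can be checked numerically on a small game. The sketch below computes Harsanyi interactions I(S) = Σ_{T⊆S} (−1)^{|S|−|T|} v(T) and verifies the standard identity that each variable's Shapley value equals the sum of I(S)/|S| over all S containing it. The final function splits the summed Shapley values of a coalition's members into interactions that fully contain the coalition and interactions that overlap it only partially; this split is an illustrative assumption, not necessarily the paper's exact coalition attribution. All names and the toy value function `toy_v` are hypothetical.

```python
from itertools import combinations
from math import factorial


def subsets(items):
    """Yield every subset of items as a frozenset."""
    items = tuple(items)
    for r in range(len(items) + 1):
        for combo in combinations(items, r):
            yield frozenset(combo)


def harsanyi_interactions(players, v):
    """I(S) = sum over T subset of S of (-1)^(|S|-|T|) * v(T)."""
    return {
        S: sum((-1) ** (len(S) - len(T)) * v(T) for T in subsets(S))
        for S in subsets(players)
    }


def shapley_direct(players, v):
    """Classic Shapley value: weighted average of marginal contributions."""
    n = len(players)
    phi = {}
    for i in players:
        others = [p for p in players if p != i]
        phi[i] = sum(
            factorial(len(S)) * factorial(n - len(S) - 1) / factorial(n)
            * (v(S | {i}) - v(S))
            for S in subsets(others)
        )
    return phi


def shapley_from_interactions(players, interactions):
    """Each interaction I(S) is shared equally among the |S| variables in S."""
    return {
        i: sum(I / len(S) for S, I in interactions.items() if i in S)
        for i in players
    }


def split_by_overlap(coalition, interactions):
    """Split the members' summed Shapley values into (full, partial) parts.

    full: interactions T that contain the whole coalition.
    partial: interactions T that contain only some of its variables.
    """
    coalition = frozenset(coalition)
    full = partial = 0.0
    for T, I in interactions.items():
        overlap = len(T & coalition)
        if overlap == 0:
            continue
        share = I * overlap / len(T)
        if coalition <= T:
            full += share
        else:
            partial += share
    return full, partial


def toy_v(T):
    """Hypothetical game with two individual effects and two pairwise interactions."""
    return 2 * (0 in T) + 1 * (1 in T) + 3 * ({0, 1} <= T) - 1 * ({1, 2} <= T)


players = [0, 1, 2]
interactions = harsanyi_interactions(players, toy_v)
phi_direct = shapley_direct(players, toy_v)
phi_alloc = shapley_from_interactions(players, interactions)
full, partial = split_by_overlap({0, 1}, interactions)
```

Here `phi_direct` and `phi_alloc` agree, and `full + partial` equals the sum of the Shapley values of variables 0 and 1. The `partial` term is nonzero whenever some interaction touches only part of the coalition, which is the kind of mismatch the abstract attributes the conflict to.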

Explainability for Large Language Models: A Survey

Sep 17, 2023

Abstract:Large language models (LLMs) have demonstrated impressive capabilities in natural language processing. However, their internal mechanisms are still unclear and this lack of transparency poses unwanted risks for downstream applications. Therefore, understanding and explaining these models is crucial for elucidating their behaviors, limitations, and social impacts. In this paper, we introduce a taxonomy of explainability techniques and provide a structured overview of methods for explaining Transformer-based language models. We categorize techniques based on the training paradigms of LLMs: traditional fine-tuning-based paradigm and prompting-based paradigm. For each paradigm, we summarize the goals and dominant approaches for generating local explanations of individual predictions and global explanations of overall model knowledge. We also discuss metrics for evaluating generated explanations, and discuss how explanations can be leveraged to debug models and improve performance. Lastly, we examine key challenges and emerging opportunities for explanation techniques in the era of LLMs in comparison to conventional machine learning models.

Mitigating Shortcuts in Language Models with Soft Label Encoding

Sep 17, 2023

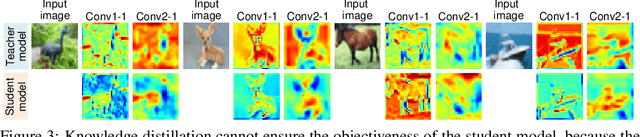

Abstract:Recent research has shown that large language models rely on spurious correlations in the data for natural language understanding (NLU) tasks. In this work, we aim to answer the following research question: Can we reduce spurious correlations by modifying the ground truth labels of the training data? Specifically, we propose a simple yet effective debiasing framework, named Soft Label Encoding (SoftLE). We first train a teacher model with hard labels to determine each sample's degree of relying on shortcuts. We then add one dummy class to encode the shortcut degree, which is used to smooth other dimensions in the ground truth label to generate soft labels. This new ground truth label is used to train a more robust student model. Extensive experiments on two NLU benchmark tasks demonstrate that SoftLE significantly improves out-of-distribution generalization while maintaining satisfactory in-distribution accuracy.
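One possible reading of the label construction is sketched below. The abstract does not give the exact formula, so this is an assumption made purely for illustration: the dummy class receives the shortcut degree s, and the true class keeps the remaining mass 1 − s. The function name `soft_label` and the input `shortcut_degree` (which would come from the teacher model) are hypothetical.

```python
def soft_label(true_class, num_classes, shortcut_degree):
    """Build a (num_classes + 1)-dimensional soft label with a trailing dummy class.

    Assumed scheme (not confirmed by the source): the dummy class gets the
    shortcut degree s in [0, 1] and the true class keeps 1 - s.
    """
    if not 0 <= true_class < num_classes:
        raise ValueError("true_class must be a valid class index")
    if not 0.0 <= shortcut_degree <= 1.0:
        raise ValueError("shortcut_degree must lie in [0, 1]")
    label = [0.0] * (num_classes + 1)
    label[true_class] = 1.0 - shortcut_degree  # smoothed ground-truth dimension
    label[num_classes] = shortcut_degree       # dummy class encodes shortcut reliance
    return label


# A sample the teacher judges to rely moderately on shortcuts (s = 0.25).
example = soft_label(true_class=1, num_classes=3, shortcut_degree=0.25)
```

Under this assumed scheme, a sample with no shortcut reliance keeps its original one-hot label (plus a zero dummy entry), while a heavily shortcut-driven sample pushes most of its target mass onto the dummy class, weakening the training signal the student receives for the true class.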

Understanding and Unifying Fourteen Attribution Methods with Taylor Interactions

Mar 06, 2023

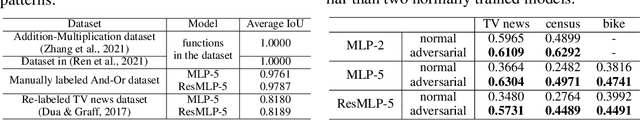

Abstract:Various attribution methods have been developed to explain deep neural networks (DNNs) by inferring the attribution/importance/contribution score of each input variable to the final output. However, existing attribution methods are often built upon different heuristics. There remains a lack of a unified theoretical understanding of why these methods are effective and how they are related. To this end, for the first time, we formulate core mechanisms of fourteen attribution methods, which were designed on different heuristics, into the same mathematical system, i.e., the system of Taylor interactions. Specifically, we prove that attribution scores estimated by fourteen attribution methods can all be reformulated as the weighted sum of two types of effects, i.e., independent effects of each individual input variable and interaction effects between input variables. The essential difference among the fourteen attribution methods mainly lies in the weights of allocating different effects. Based on the above findings, we propose three principles for a fair allocation of effects to evaluate the faithfulness of the fourteen attribution methods.

Bayesian Neural Networks Tend to Ignore Complex and Sensitive Concepts

Feb 25, 2023

Abstract:In this paper, we focus on mean-field variational Bayesian Neural Networks (BNNs) and explore the representation capacity of such BNNs by investigating which types of concepts are less likely to be encoded by the BNN. It has been observed and studied that a relatively small set of interactive concepts usually emerge in the knowledge representation of a sufficiently-trained neural network, and such concepts can faithfully explain the network output. Based on this, our study proves that compared to standard deep neural networks (DNNs), it is less likely for BNNs to encode complex concepts. Experiments verify our theoretical proofs. Note that the tendency to encode less complex concepts does not necessarily imply weak representation power, considering that complex concepts exhibit low generalization power and high adversarial vulnerability.

Concept-Level Explanation for the Generalization of a DNN

Feb 25, 2023

Abstract:This paper explains the generalization power of a deep neural network (DNN) from the perspective of interactive concepts. Many recent studies have quantified a clear emergence of interactive concepts encoded by the DNN, which have been observed on different DNNs during the learning process. Therefore, in this paper, we investigate the generalization power of each interactive concept, and we use the generalization power of different interactive concepts to explain the generalization power of the entire DNN. Specifically, we define the complexity of each interactive concept. We find that simple concepts can be better generalized to testing data than complex concepts. The DNN with strong generalization power usually learns simple concepts more quickly and encodes fewer complex concepts. More crucially, we discover the detouring dynamics of learning complex concepts, which explain both the high learning difficulty and the low generalization power of complex concepts.

Towards Axiomatic, Hierarchical, and Symbolic Explanation for Deep Models

Nov 30, 2021

Abstract:This paper proposes a hierarchical and symbolic And-Or graph (AOG) to objectively explain the internal logic encoded by a well-trained deep model for inference. We first define the objectiveness of an explainer model in game theory, and we develop a rigorous representation of the And-Or logic encoded by the deep model. The objectiveness and trustworthiness of the AOG explainer are both theoretically guaranteed and experimentally verified. Furthermore, we propose several techniques to boost the conciseness of the explanation.
