Heng-Chao Li

Toward distortion-aware change detection in realistic scenarios

Jan 10, 2024

Abstract:In the conventional change detection (CD) pipeline, two manually registered and labeled remote sensing datasets serve as the input of the model for training and prediction. However, in realistic scenarios, data from different periods or sensors could fail to be aligned as a result of various coordinate systems. Geometric distortion caused by coordinate shifting remains a thorny issue for CD algorithms. In this paper, we propose a reusable self-supervised framework for bitemporal geometric distortion in CD tasks. The whole framework is composed of Pretext Representation Pre-training, Bitemporal Image Alignment, and Down-stream Decoder Fine-Tuning. With only single-stage pre-training, the key components of the framework can be reused for assistance in the bitemporal image alignment, while simultaneously enhancing the performance of the CD decoder. Experimental results in 2 large-scale realistic scenarios demonstrate that our proposed method can alleviate the bitemporal geometric distortion in CD tasks.

Semi-Supervised Object Detection with Uncurated Unlabeled Data for Remote Sensing Images

Oct 09, 2023

Abstract:Annotating remote sensing images (RSIs) presents a notable challenge due to its labor-intensive nature. Semi-supervised object detection (SSOD) methods tackle this issue by generating pseudo-labels for the unlabeled data, assuming that all classes found in the unlabeled dataset are also represented in the labeled data. However, real-world situations introduce the possibility of out-of-distribution (OOD) samples being mixed with in-distribution (ID) samples within the unlabeled dataset. In this paper, we delve into techniques for conducting SSOD directly on uncurated unlabeled data, which is termed Open-Set Semi-Supervised Object Detection (OSSOD). Our approach commences by employing labeled in-distribution data to dynamically construct a class-wise feature bank (CFB) that captures features specific to each class. Subsequently, we compare the features of predicted object bounding boxes with the corresponding entries in the CFB to calculate OOD scores. We design an adaptive threshold based on the statistical properties of the CFB, allowing us to filter out OOD samples effectively. The effectiveness of our proposed method is substantiated through extensive experiments on two widely used remote sensing object detection datasets: DIOR and DOTA. These experiments showcase the superior performance and efficacy of our approach for OSSOD on RSIs.
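The feature-bank comparison described in this abstract can be illustrated with a minimal sketch. The abstract does not give the scoring function or the threshold rule, so everything specific here is an assumption: cosine similarity as the comparison, `1 - max similarity` as the OOD score, and `mean + k * std` of leave-one-out scores as the adaptive threshold. The names `ood_score` and `adaptive_threshold` and the toy features are hypothetical.

```python
import math
from statistics import mean, pstdev


def cosine(a, b):
    """Cosine similarity between two feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def ood_score(feature, bank_entries):
    """Assumed score: 1 minus the best similarity to the class's bank entries."""
    return 1.0 - max(cosine(feature, entry) for entry in bank_entries)


def adaptive_threshold(bank_entries, k=2.0):
    """Assumed rule: mean + k * std of leave-one-out scores inside the bank."""
    scores = [
        ood_score(entry, bank_entries[:i] + bank_entries[i + 1:])
        for i, entry in enumerate(bank_entries)
    ]
    return mean(scores) + k * pstdev(scores)


# Toy class-wise feature bank (made-up 3-D features for one class).
bank = {"airplane": [[1.0, 0.1, 0.0], [0.9, 0.2, 0.1], [1.0, 0.0, 0.1]]}
entries = bank["airplane"]

tau = adaptive_threshold(entries)
in_dist = ood_score([0.95, 0.1, 0.05], entries)   # resembles the bank
out_dist = ood_score([0.0, 0.1, 1.0], entries)    # points elsewhere

# A pseudo-label would be kept only when its score stays below the threshold.
keep_in, keep_out = in_dist < tau, out_dist < tau
```

Because the threshold is derived from the spread of the bank itself, a tight class tolerates little deviation while a diverse class tolerates more, which is one plausible reading of "adaptive" here.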

Convolution and Attention Mixer for Synthetic Aperture Radar Image Change Detection

Sep 21, 2023

Abstract:Synthetic aperture radar (SAR) image change detection is a critical task and has received increasing attention in the remote sensing community. However, existing SAR change detection methods are mainly based on convolutional neural networks (CNNs), with limited consideration of global attention mechanisms. In this letter, we explore a Transformer-like architecture for SAR change detection to incorporate global attention. To this end, we propose a convolution and attention mixer (CAMixer). First, to compensate for the Transformer's lack of inductive bias, we combine self-attention with shift convolution in a parallel way. The parallel design captures global semantic information via self-attention and simultaneously performs local feature extraction through shift convolution. Second, we adopt a gating mechanism in the feed-forward network to enhance the non-linear feature transformation. The gating mechanism is formulated as the element-wise multiplication of two parallel linear layers. Important features can be highlighted, leading to high-quality representations that are robust to speckle noise. Extensive experiments conducted on three SAR datasets verify the superior performance of the proposed CAMixer. The source codes will be publicly available at https://github.com/summitgao/CAMixer.
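The gating step, described as the element-wise multiplication of two parallel linear layers, can be sketched in a few lines. This is not taken from the CAMixer repository: the output projection, the layer sizes, and the absence of any activation are assumptions, and the weights below are arbitrary illustrative numbers.

```python
def linear(x, weight, bias):
    """Dense layer: weight is a list of rows, one row per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weight, bias)]


def gated_feed_forward(x, w_a, b_a, w_b, b_b, w_out, b_out):
    """Two parallel linear branches, multiplied element-wise, then projected."""
    branch_a = linear(x, w_a, b_a)
    branch_b = linear(x, w_b, b_b)          # acts as the gate
    gated = [a * g for a, g in zip(branch_a, branch_b)]
    return linear(gated, w_out, b_out)      # assumed output projection


x = [1.0, 2.0]
y = gated_feed_forward(
    x,
    w_a=[[1.0, 0.0], [0.0, 1.0]], b_a=[0.0, 0.0],    # branch_a = [1.0, 2.0]
    w_b=[[0.5, 0.5], [1.0, -1.0]], b_b=[0.0, 1.0],   # branch_b = [1.5, 0.0]
    w_out=[[1.0, 1.0]], b_out=[0.5],
)
```

In this toy input the second gate value is zero, so the second feature is suppressed entirely before the projection; that is the sense in which a multiplicative gate can highlight some features over others.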

SVDinsTN: An Integrated Method for Tensor Network Representation with Efficient Structure Search

May 24, 2023

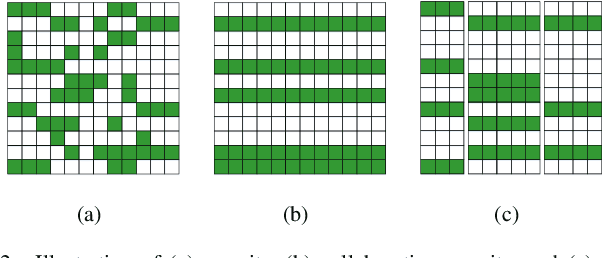

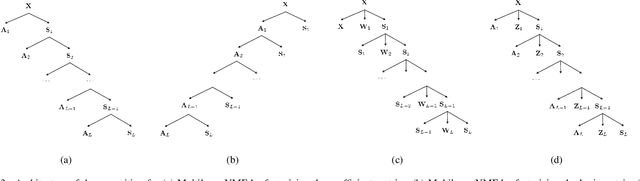

Abstract:Tensor network (TN) representation is a powerful technique for data analysis and machine learning. It practically involves a challenging TN structure search (TN-SS) problem, which aims to search for the optimal structure to achieve a compact representation. Existing TN-SS methods mainly adopt a bi-level optimization method that leads to excessive computational costs due to repeated structure evaluations. To address this issue, we propose an efficient integrated (single-level) method named SVD-inspired TN decomposition (SVDinsTN), eliminating the need for repeated tedious structure evaluation. By inserting a diagonal factor for each edge of the fully-connected TN, we calculate TN cores and diagonal factors simultaneously, with factor sparsity revealing the most compact TN structure. Experimental results on real-world data demonstrate that SVDinsTN achieves approximately $10^2\sim{}10^3$ times acceleration in runtime compared to the existing TN-SS methods while maintaining a comparable level of representation ability.
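How factor sparsity can reveal a structure is easy to show on toy data. The sketch below is not the SVDinsTN optimization itself; it only illustrates the final read-off step under the assumption that each edge of a fully-connected TN carries a diagonal factor, that the number of entries above a tolerance is the edge's effective rank, and that rank-1 edges can be dropped as trivial. The factor values and function names are made up.

```python
def effective_ranks(diagonal_factors, tol=1e-6):
    """Count the diagonal entries on each edge that survive the tolerance."""
    return {
        edge: sum(1 for value in diag if abs(value) > tol)
        for edge, diag in diagonal_factors.items()
    }


def compact_structure(diagonal_factors, tol=1e-6):
    """Keep only edges whose effective rank exceeds 1 (assumed pruning rule)."""
    ranks = effective_ranks(diagonal_factors, tol)
    return {edge: rank for edge, rank in ranks.items() if rank > 1}


# Hypothetical diagonal factors on the six edges of a 4-node fully-connected TN.
factors = {
    (0, 1): [2.1, 0.8, 0.0, 0.0],
    (0, 2): [1.3, 0.0, 0.0, 0.0],
    (0, 3): [0.9, 0.4, 0.2, 0.0],
    (1, 2): [1.7, 1.1, 0.0, 0.0],
    (1, 3): [1.0, 0.0, 0.0, 0.0],
    (2, 3): [1.5, 0.6, 1e-9, 0.0],
}
structure = compact_structure(factors)
```

Here two of the six edges collapse to rank 1 and disappear, leaving a sparser graph whose remaining edge ranks are read directly from the surviving diagonal entries.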

Transformation-Invariant Network for Few-Shot Object Detection in Remote Sensing Images

Mar 13, 2023

Abstract:Object detection in remote sensing images relies on a large amount of labeled data for training. The growing number of new categories and class imbalance render exhaustive annotation non-scalable. Few-shot object detection (FSOD) tackles this issue by meta-learning on seen base classes and then fine-tuning on novel classes with few labeled samples. However, the object's scale and orientation variations are particularly large in remote sensing images, thus posing challenges to existing few-shot object detection methods. To tackle these challenges, we first propose to integrate a feature pyramid network and use prototype features to highlight query features to improve upon existing FSOD methods. We refer to the modified FSOD as a Strong Baseline, which is demonstrated to perform significantly better than the original baselines. To improve robustness to orientation variation, we further propose a transformation-invariant network (TINet) to allow the network to be invariant to geometric transformations. Extensive experiments on three widely used remote sensing object detection datasets, i.e., NWPU VHR-10.v2, DIOR, and HRRSD, demonstrate the effectiveness of the proposed method. Finally, we reproduce multiple FSOD methods for remote sensing images to create an extensive benchmark for follow-up works.

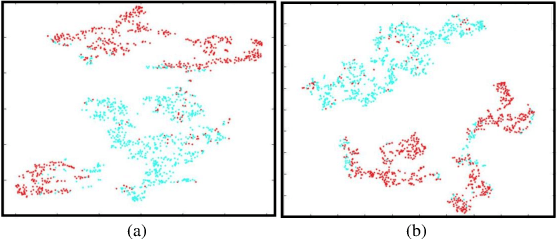

Nearest Neighbor-Based Contrastive Learning for Hyperspectral and LiDAR Data Classification

Jan 09, 2023

Abstract:The joint hyperspectral image (HSI) and LiDAR data classification aims to interpret ground objects at a more detailed and precise level. Although deep learning methods have shown remarkable success in the multisource data classification task, self-supervised learning has rarely been explored. It is commonly nontrivial to build a robust self-supervised learning model for multisource data classification, because the semantic similarities of neighborhood regions are not exploited in existing contrastive learning frameworks. Furthermore, the heterogeneous gap induced by the inconsistent distribution of multisource data impedes the classification performance. To overcome these disadvantages, we propose a Nearest Neighbor-based Contrastive Learning Network (NNCNet), which takes full advantage of large amounts of unlabeled data to learn discriminative feature representations. Specifically, we propose a nearest neighbor-based data augmentation scheme to use enhanced semantic relationships among nearby regions. The intermodal semantic alignments can be captured more accurately. In addition, we design a bilinear attention module to exploit the second-order and even high-order feature interactions between the HSI and LiDAR data. Extensive experiments on four public datasets demonstrate the superiority of our NNCNet over state-of-the-art methods. The source codes are available at https://github.com/summitgao/NNCNet.
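One common way to use nearest neighbors in contrastive learning is to replace the positive sample with its nearest neighbor from a support set and then apply an InfoNCE-style loss. The sketch below shows that generic idea only; whether NNCNet selects neighbors or forms its loss this way is not stated in the abstract. The embeddings, the temperature of 0.1, and all function names are assumptions.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    """Scale a vector to unit length so dot products are cosine similarities."""
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def nearest_neighbor(query, support):
    """Return the support embedding most similar to the query."""
    return max(support, key=lambda s: dot(query, s))


def info_nce(anchor, positive, negatives, temperature=0.1):
    """-log softmax of the positive logit among positive + negative logits."""
    logits = [dot(anchor, positive) / temperature]
    logits += [dot(anchor, n) / temperature for n in negatives]
    peak = max(logits)  # subtract the max for numerical stability
    log_sum = peak + math.log(sum(math.exp(l - peak) for l in logits))
    return log_sum - logits[0]


anchor = normalize([1.0, 0.2])     # embedding of one view of a patch
view = normalize([1.0, 0.1])       # embedding of another view of the same patch
support = [normalize([0.9, 0.3]), normalize([-1.0, 0.5])]
negatives = [normalize([0.0, 1.0]), normalize([-1.0, 0.0])]

positive = nearest_neighbor(view, support)   # swapped-in positive
loss = info_nce(anchor, positive, negatives)
mismatched_loss = info_nce(anchor, support[1], negatives)
```

The loss is small when the swapped-in neighbor is semantically close to the anchor and large when it is not, which is why the quality of the neighbor search matters for this kind of augmentation.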

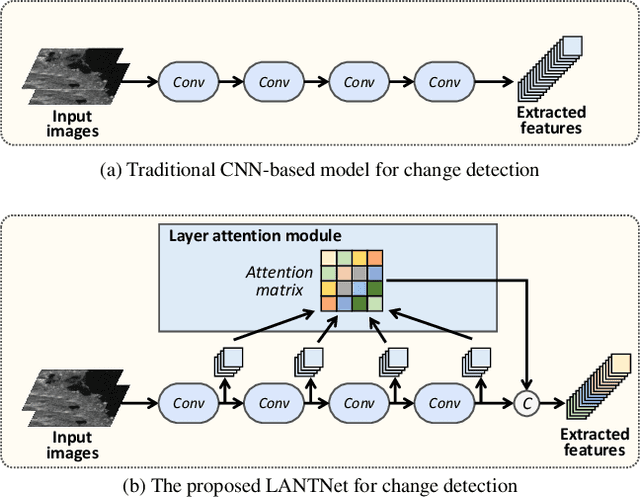

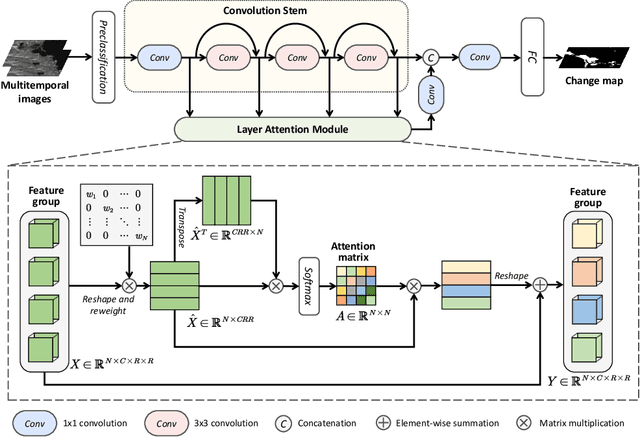

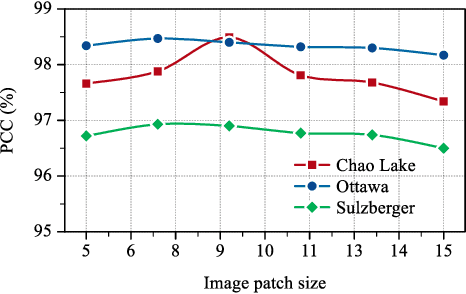

Synthetic Aperture Radar Image Change Detection via Layer Attention-Based Noise-Tolerant Network

Aug 09, 2022

Abstract:Recently, change detection methods for synthetic aperture radar (SAR) images based on convolutional neural networks (CNNs) have gained increasing research attention. However, existing CNN-based methods neglect the interactions among multilayer convolutions, and errors involved in the preclassification restrict the network optimization. To this end, we propose a layer attention-based noise-tolerant network, termed LANTNet. In particular, we design a layer attention module that adaptively weights the features of different convolution layers. In addition, we design a noise-tolerant loss function that effectively suppresses the impact of noisy labels. Therefore, the model is insensitive to noisy labels in the preclassification results. The experimental results on three SAR datasets show that the proposed LANTNet performs better than several state-of-the-art methods. The source codes are available at https://github.com/summitgao/LANTNet.
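Adaptive weighting of features from different convolution layers can be sketched as a softmax over per-layer scores followed by a weighted sum. This is a generic illustration rather than the LANTNet module: the per-layer descriptor (a plain average), the scalar `score_weights` standing in for learned parameters, and the flattened feature vectors are all assumptions.

```python
import math


def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def layer_attention(layer_features, score_weights):
    """Fuse same-sized feature vectors from several layers.

    Each layer is summarised by its mean activation, scored with a
    (hypothetical, normally learned) scalar weight, and the softmax of
    those scores gives the fusion weights.
    """
    descriptors = [sum(f) / len(f) for f in layer_features]
    weights = softmax([w * d for w, d in zip(score_weights, descriptors)])
    width = len(layer_features[0])
    fused = [
        sum(w * f[i] for w, f in zip(weights, layer_features))
        for i in range(width)
    ]
    return fused, weights


features = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]   # three layers, two channels

# Zero score weights give uniform attention, i.e. a plain average of layers.
uniform_fused, uniform_weights = layer_attention(features, [0.0, 0.0, 0.0])

# Non-zero score weights shift attention toward layers with larger responses.
fused, weights = layer_attention(features, [1.0, 1.0, 1.0])
```

Because the scores depend on the input features, the fusion weights change from sample to sample, which is the "adaptive" part of the description.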

Hyperspectral Unmixing Based on Nonnegative Matrix Factorization: A Comprehensive Review

May 20, 2022

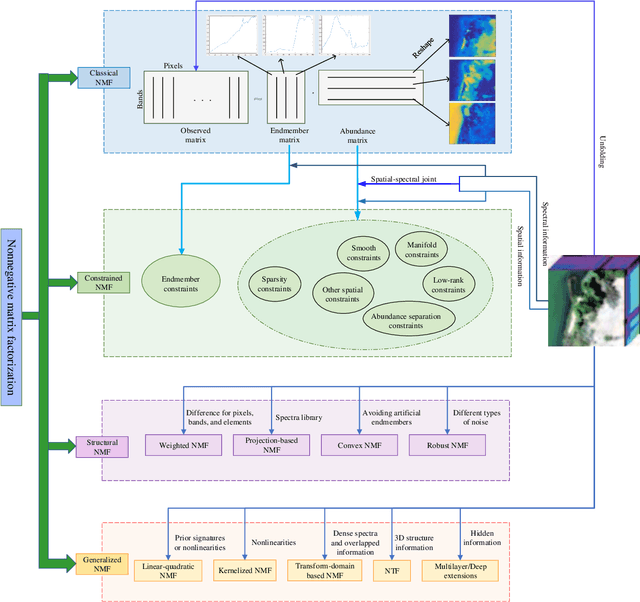

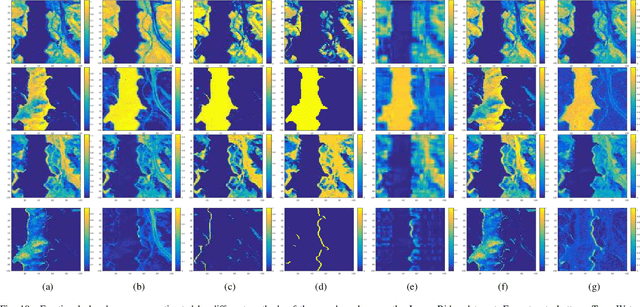

Abstract:Hyperspectral unmixing is an important technique that estimates a set of endmembers and their corresponding abundances from a hyperspectral image (HSI). Nonnegative matrix factorization (NMF) plays an increasingly significant role in solving this problem. In this article, we present a comprehensive survey of the NMF-based methods proposed for hyperspectral unmixing. Taking the NMF model as a baseline, we show how to improve NMF by utilizing the main properties of HSIs (e.g., spectral, spatial, and structural information). We categorize three important development directions: constrained NMF, structured NMF, and generalized NMF. Furthermore, several experiments are conducted to illustrate the effectiveness of the associated algorithms. Finally, we conclude the article with possible future directions, with the purpose of providing guidelines and inspiration to promote the development of hyperspectral unmixing.
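For readers unfamiliar with the baseline model, plain NMF factorizes a nonnegative data matrix Y (bands by pixels) into endmembers E and abundances A with Y ≈ EA. A minimal sketch using the classic Lee-Seung multiplicative updates for the Frobenius objective follows. It is a generic baseline, not any specific method from the survey; the toy spectra, the random initialization, and the iteration count are assumptions, and the abundance sum-to-one constraint used in many unmixing methods is deliberately left out.

```python
import random


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def frobenius_error(y, e, a):
    """Squared Frobenius norm of the residual Y - EA."""
    approx = matmul(e, a)
    return sum((yi - ri) ** 2
               for y_row, r_row in zip(y, approx)
               for yi, ri in zip(y_row, r_row))


def multiplicative_step(y, e, a, eps=1e-9):
    """One Lee-Seung update of A, then of E; nonnegativity is preserved."""
    et = transpose(e)
    num, den = matmul(et, y), matmul(matmul(et, e), a)
    a = [[v * n / (d + eps) for v, n, d in zip(*rows)]
         for rows in zip(a, num, den)]
    at = transpose(a)
    num, den = matmul(y, at), matmul(e, matmul(a, at))
    e = [[v * n / (d + eps) for v, n, d in zip(*rows)]
         for rows in zip(e, num, den)]
    return e, a


# Toy scene: 3 bands, 2 endmembers, 4 pixels (all values made up).
true_e = [[0.9, 0.1], [0.5, 0.4], [0.1, 0.8]]
true_a = [[1.0, 0.7, 0.3, 0.0], [0.0, 0.3, 0.7, 1.0]]
y = matmul(true_e, true_a)

rng = random.Random(0)
e = [[rng.uniform(0.1, 1.0) for _ in range(2)] for _ in range(3)]
a = [[rng.uniform(0.1, 1.0) for _ in range(4)] for _ in range(2)]

initial_error = frobenius_error(y, e, a)
for _ in range(200):
    e, a = multiplicative_step(y, e, a)
final_error = frobenius_error(y, e, a)
```

The constrained and structured variants surveyed in the article can be read as adding penalty terms or side information to this same objective.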

Adaptive Cross-Attention-Driven Spatial-Spectral Graph Convolutional Network for Hyperspectral Image Classification

Apr 12, 2022

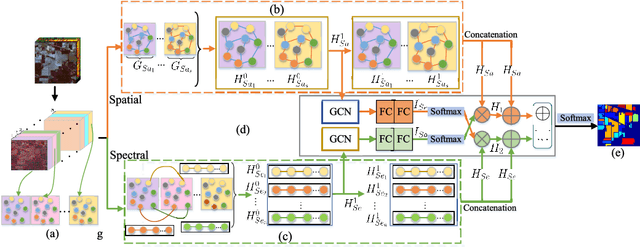

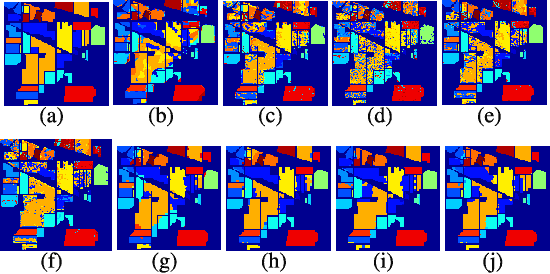

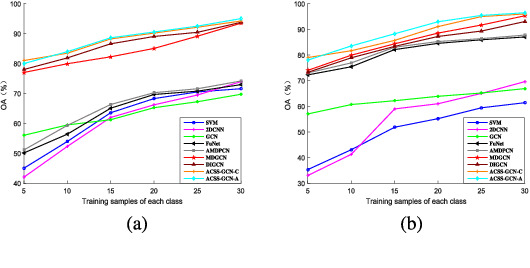

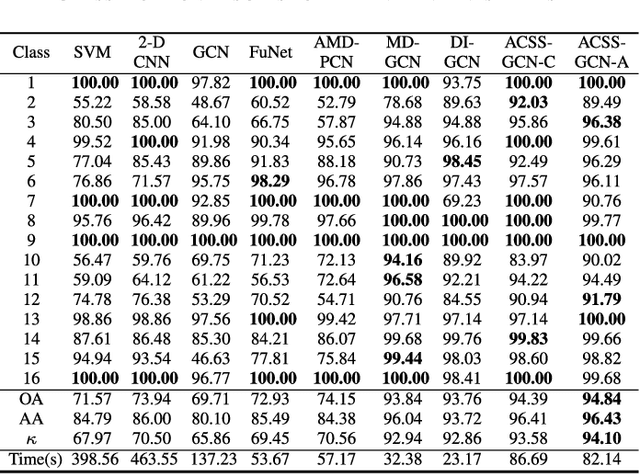

Abstract:Recently, graph convolutional networks (GCNs) have been developed to explore the spatial relationships between pixels, achieving better classification performance on hyperspectral images (HSIs). However, these methods fail to sufficiently leverage the relationships between spectral bands in HSI data. As such, we propose an adaptive cross-attention-driven spatial-spectral graph convolutional network (ACSS-GCN), which is composed of a spatial GCN (Sa-GCN) subnetwork, a spectral GCN (Se-GCN) subnetwork, and a graph cross-attention fusion module (GCAFM). Specifically, Sa-GCN and Se-GCN are proposed to extract the spatial and spectral features by modeling correlations between spatial pixels and between spectral bands, respectively. Then, by integrating an attention mechanism into graph information aggregation, the GCAFM, which comprises a spatial graph attention block, a spectral graph attention block, and a fusion block, is designed to fuse the spatial and spectral features and suppress noise interference in Sa-GCN and Se-GCN. Moreover, the idea of the adaptive graph is introduced to explore an optimal graph through back propagation during the training process. Experiments on two HSI data sets show that the proposed method achieves better performance than other classification methods.
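As background for the Sa-GCN and Se-GCN subnetworks, a single standard graph convolution layer (the widely used symmetric-normalization form with self-loops, followed by ReLU) can be sketched as below. This is the generic building block only, not the ACSS-GCN architecture: the 3-node path graph, the node features, and the identity weight matrix are illustrative assumptions, and the attention fusion and adaptive graph learning are not modeled.

```python
import math


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def normalize_adjacency(adj):
    """Compute D^{-1/2} (A + I) D^{-1/2} for a dense adjacency matrix."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
             for i in range(n)]
    degree = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(degree[i] * degree[j]) for j in range(n)]
            for i in range(n)]


def gcn_layer(adj, features, weight):
    """One layer: aggregate neighbor features, project, apply ReLU."""
    propagated = matmul(normalize_adjacency(adj), features)
    projected = matmul(propagated, weight)
    return [[max(0.0, v) for v in row] for row in projected]


# Path graph 0 - 1 - 2; nodes could stand for pixels or for spectral bands.
adj = [[0.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 0.0]]
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weight = [[1.0, 0.0], [0.0, 1.0]]

norm = normalize_adjacency(adj)
out = gcn_layer(adj, features, weight)
```

Building the graph over pixels gives a spatial layer, while building it over bands gives a spectral one; the layer itself is unchanged, only the adjacency and node features differ.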

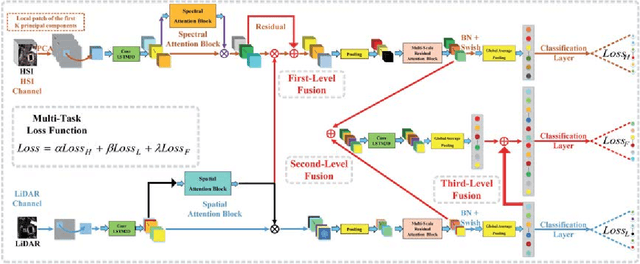

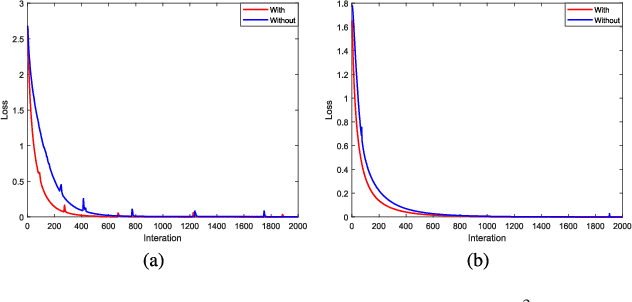

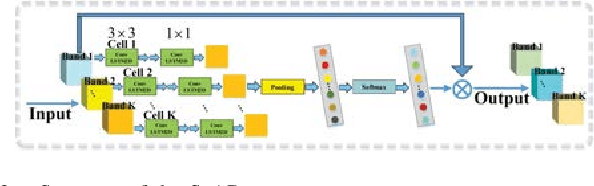

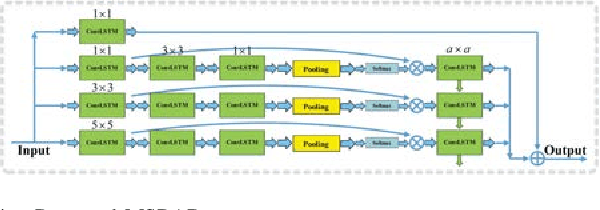

A3CLNN: Spatial, Spectral and Multiscale Attention ConvLSTM Neural Network for Multisource Remote Sensing Data Classification

Apr 09, 2022

Abstract:The problem of effectively exploiting the information in multiple data sources has become a relevant but challenging research topic in remote sensing. In this paper, we propose a new approach to exploit the complementarity of two data sources: hyperspectral images (HSIs) and light detection and ranging (LiDAR) data. Specifically, we develop a new dual-channel spatial, spectral and multiscale attention convolutional long short-term memory neural network (called dual-channel A3CLNN) for feature extraction and classification of multisource remote sensing data. Spatial, spectral and multiscale attention mechanisms are first designed for HSI and LiDAR data in order to learn spectral- and spatial-enhanced feature representations, and to represent multiscale information for different classes. In the designed fusion network, a novel composite attention learning mechanism (combined with a three-level fusion strategy) is used to fully integrate the features in these two data sources. Finally, inspired by the idea of transfer learning, a novel stepwise training strategy is designed to yield a final classification result. Our experimental results, conducted on several multisource remote sensing data sets, demonstrate that the newly proposed dual-channel A3CLNN exhibits better feature representation ability (leading to more competitive classification performance) than other state-of-the-art methods.

* 16 pages, 10 figures
