Hao Ma

State Key Laboratory of Information Engineering in Surveying, Mapping and Remote Sensing, Wuhan University

A Robust Open-source Tendon-driven Robot Arm for Learning Control of Dynamic Motions

Jul 10, 2023

Abstract:A long-lasting goal of robotics research is to operate robots safely, while achieving high performance which often involves fast motions. Traditional motor-driven systems frequently struggle to balance these competing demands. Addressing this trade-off is crucial for advancing fields such as manufacturing and healthcare, where seamless collaboration between robots and humans is essential. We introduce a four degree-of-freedom (DoF) tendon-driven robot arm, powered by pneumatic artificial muscles (PAMs), to tackle this challenge. Our new design features low friction, passive compliance, and inherent impact resilience, enabling rapid, precise, high-force, and safe interactions during dynamic tasks. In addition to fostering safer human-robot collaboration, the inherent safety properties are particularly beneficial for reinforcement learning, where the robot's ability to explore dynamic motions without causing self-damage is crucial. We validate our robotic arm through various experiments, including long-term dynamic motions, impact resilience tests, and assessments of its ease of control. On a challenging dynamic table tennis task, we further demonstrate our robot's capabilities in rapid and precise movements. By showcasing our new design's potential, we aim to inspire further research on robotic systems that balance high performance and safety in diverse tasks. Our open-source hardware design, software, and a large dataset of diverse robot motions can be found at https://webdav.tuebingen.mpg.de/pamy2/.

Black-Box vs. Gray-Box: A Case Study on Learning Table Tennis Ball Trajectory Prediction with Spin and Impacts

May 24, 2023

Abstract:In this paper, we present a method for table tennis ball trajectory filtering and prediction. Our gray-box approach builds on a physical model. At the same time, we use data to learn parameters of the dynamics model, of an extended Kalman filter, and of a neural model that infers the ball's initial condition. We demonstrate superior prediction performance of our approach over two black-box approaches, which are not supplied with physical prior knowledge. We demonstrate that initializing the spin from parameters of the ball launcher using a neural network drastically improves long-time prediction performance over estimating the spin purely from measured ball positions. An accurate prediction of the ball trajectory is crucial for successful returns. We therefore evaluate the return performance with a robot driven by pneumatic artificial muscles and achieve a return rate of 29/30 (96.7%).

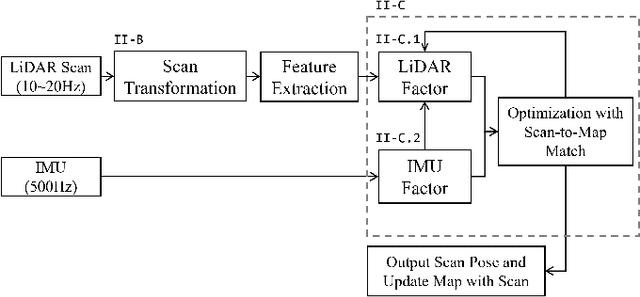

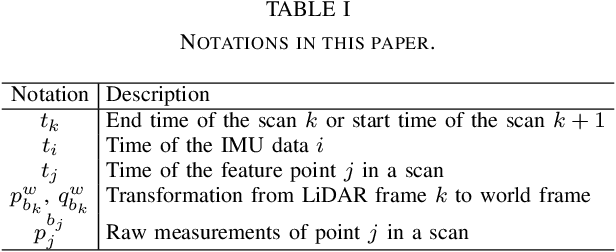

A Tightly Coupled LiDAR-IMU Odometry through Iterated Point-Level Undistortion

Sep 28, 2022

Abstract:Scan undistortion is a key module for LiDAR odometry in highly dynamic environments with high rotation and translation speeds. Existing studies mostly focus on one-pass undistortion, in which each point is undistorted only once in the whole LiDAR-IMU odometry pipeline. In this paper, we propose an optimization-based, tightly coupled LiDAR-IMU odometry that performs iterated point-level undistortion. By jointly minimizing the cost derived from LiDAR and IMU measurements, our LiDAR-IMU odometry method performs more accurately and robustly in highly dynamic environments. In addition, the method achieves good computational efficiency by limiting the number of parameters.

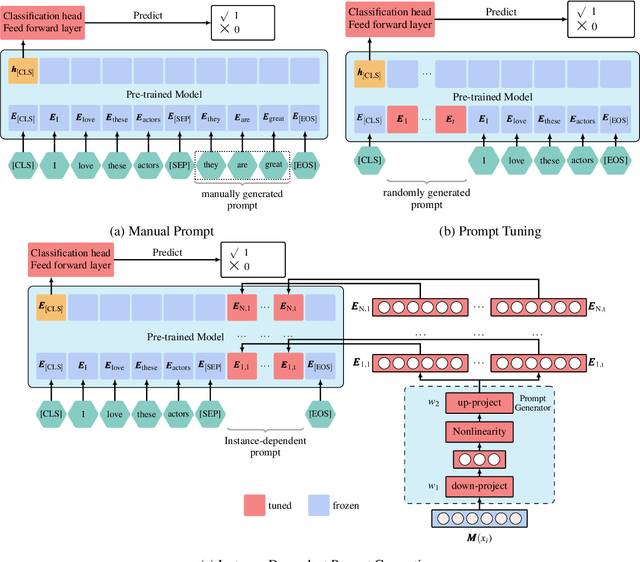

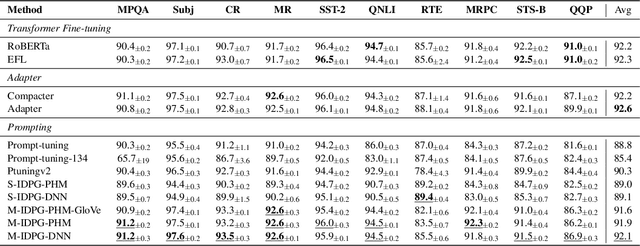

IDPG: An Instance-Dependent Prompt Generation Method

Apr 09, 2022

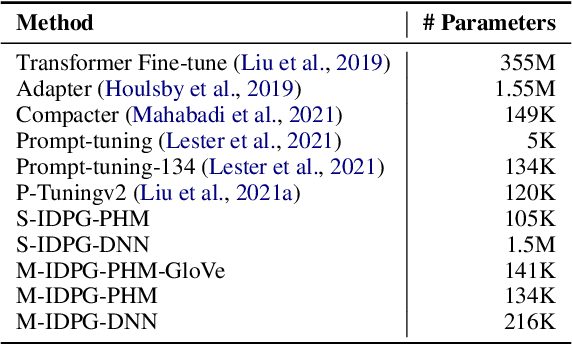

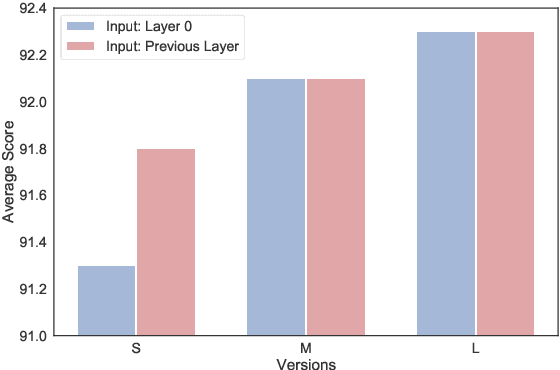

Abstract:Prompt tuning is a new, efficient NLP transfer learning paradigm that adds a task-specific prompt in each input instance during the model training stage. It freezes the pre-trained language model and only optimizes a few task-specific prompts. In this paper, we propose a conditional prompt generation method to generate prompts for each input instance, referred to as the Instance-Dependent Prompt Generation (IDPG). Unlike traditional prompt tuning methods that use a fixed prompt, IDPG introduces a lightweight and trainable component to generate prompts based on each input sentence. Extensive experiments on ten natural language understanding (NLU) tasks show that the proposed strategy consistently outperforms various prompt tuning baselines and is on par with other efficient transfer learning methods such as Compacter while tuning far fewer model parameters.

Heat Conduction Plate Layout Optimization using Physics-driven Convolutional Neural Networks

Jan 21, 2022

Abstract:Layout optimization for heat conduction is essential in engineering design, especially for thermally sensitive products. When the optimization algorithm iteratively evaluates different loading cases, traditional numerical simulation methods usually incur a substantial computational cost. To reduce the computational effort, data-driven approaches are used to train a surrogate model as a mapping between the prescribed external loads and various geometries. However, existing models are trained by data-driven methods that require large numbers of training samples from numerical simulations, and so they do not effectively solve the problem. Taking steady heat conduction problems as examples, this paper proposes a Physics-driven Convolutional Neural Networks (PD-CNN) method to infer the physical field solutions for randomly varied loading cases. The Particle Swarm Optimization (PSO) algorithm is then used to optimize the sizes and positions of the hole masks in the prescribed design domain, so that the average temperature of the entire heat conduction field is minimized and the goal of minimizing heat transfer is achieved. Compared with existing data-driven approaches, the proposed PD-CNN optimization framework not only predicts field solutions that are highly consistent with conventional simulation results, but also generates the solution space without any pre-obtained training data.

Reducing Target Group Bias in Hate Speech Detectors

Dec 07, 2021

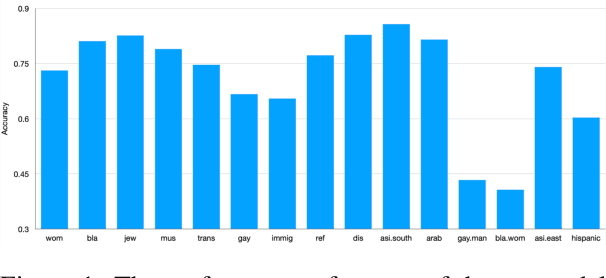

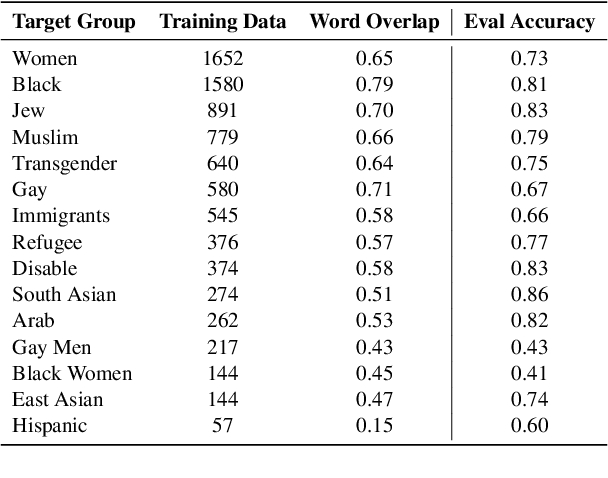

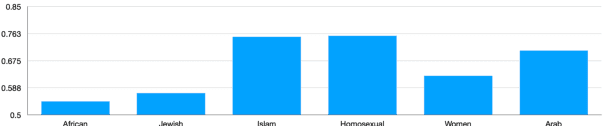

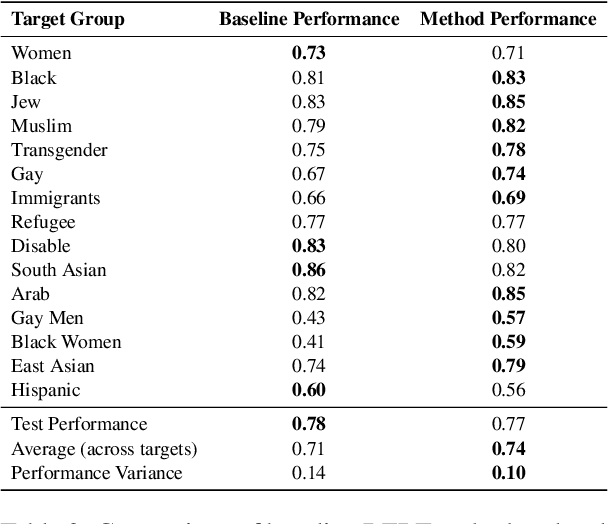

Abstract:The ubiquity of offensive and hateful content on online fora necessitates automatic solutions that detect such content competently across target groups. In this paper we show that text classification models trained on large publicly available datasets, despite having a high overall performance, may significantly under-perform on several protected groups. On the Vidgen et al. (2020) dataset, we find the accuracy to be 37% lower on an under-annotated Black Women target group and 12% lower on Immigrants, where hate speech involves a distinct style. To address this, we propose to perform token-level hate sense disambiguation, and utilize tokens' hate sense representations for detection, modeling more general signals. On two publicly available datasets, we observe that the variance in model accuracy across target groups drops by at least 30%, improving the average target group performance by 4% and worst case performance by 13%.

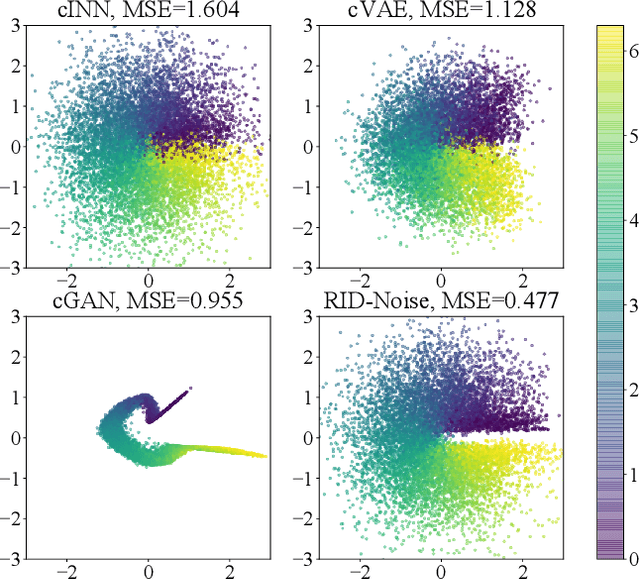

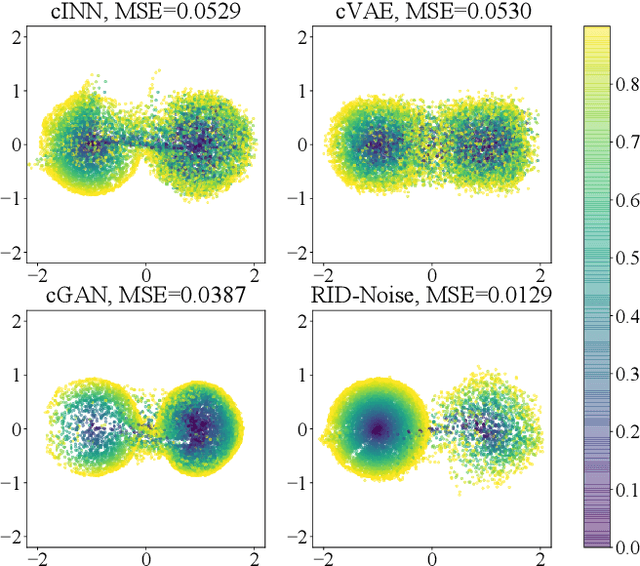

RID-Noise: Towards Robust Inverse Design under Noisy Environments

Dec 07, 2021

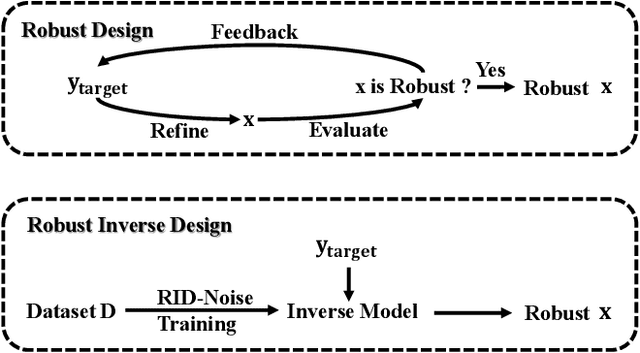

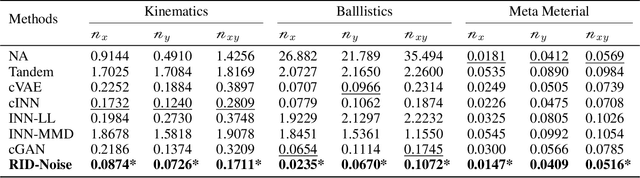

Abstract:From an engineering perspective, a design should not only perform well under ideal conditions, but should also resist noise. Such a design methodology, namely robust design, has been widely implemented in industry for product quality control. However, classic robust design requires many evaluations for a single design target, and the results of these evaluations cannot be reused for a new target. To achieve a data-efficient robust design, we propose Robust Inverse Design under Noise (RID-Noise), which can utilize existing noisy data to train a conditional invertible neural network (cINN). Specifically, we estimate the robustness of a design parameter by its predictability, measured by the prediction error of a forward neural network. We also define a sample-wise weight, which can be used in the maximum weighted likelihood estimation of an inverse model based on a cINN. With the visual results from experiments, we clearly justify how RID-Noise works by learning the distribution and robustness from data. Further experiments on several real-world benchmark tasks with noise confirm that our method is more effective than other state-of-the-art inverse design methods. Code and supplementary material are publicly available at https://github.com/ThyrixYang/rid-noise-aaai22

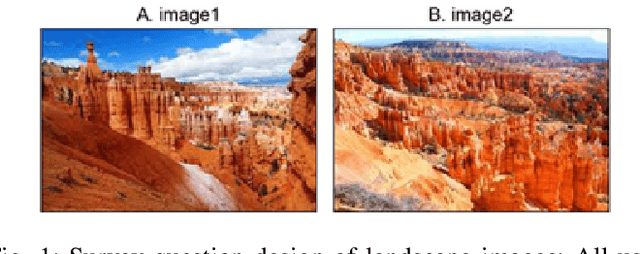

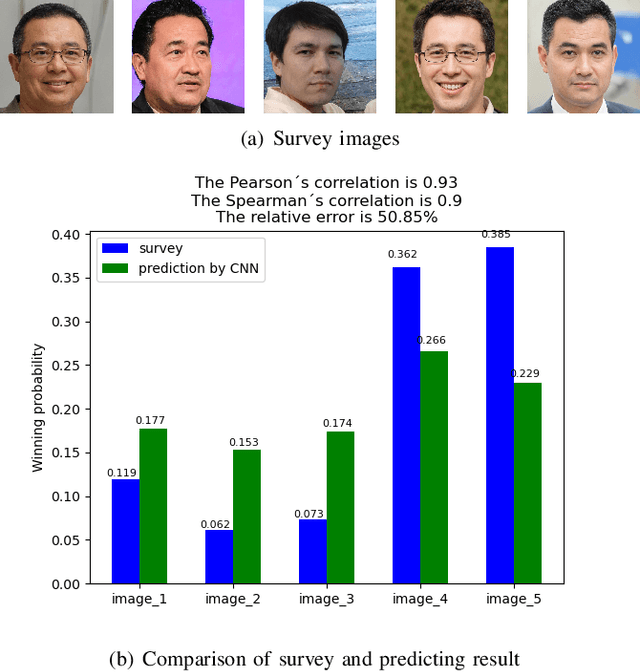

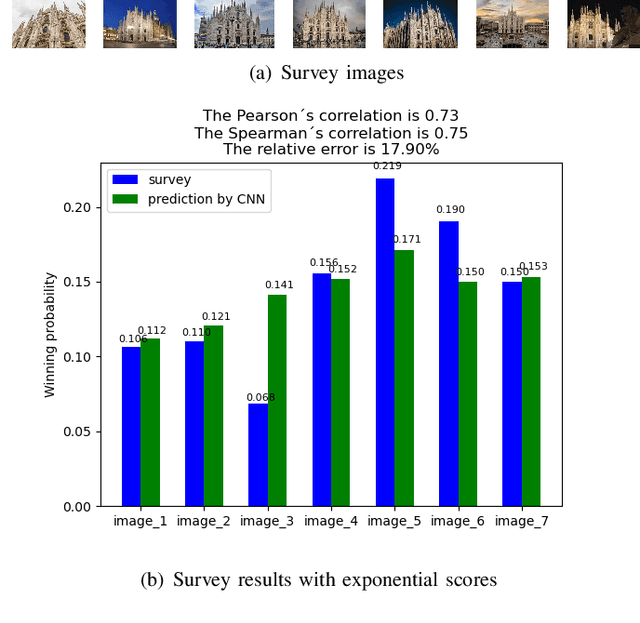

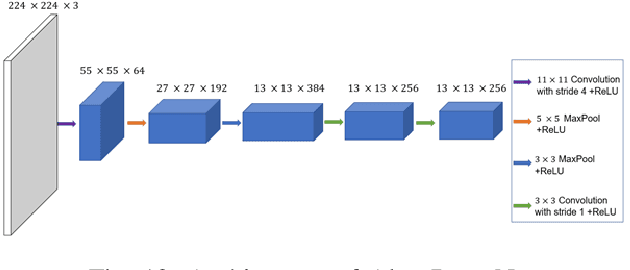

Neural Image Beauty Predictor Based on Bradley-Terry Model

Nov 19, 2021

Abstract:Image beauty assessment is an important subject in computer vision, so building a model that mimics human image beauty assessment is an important task. To better imitate the behaviour of the human visual system (HVS), a complete survey covering images of different categories should be carried out. This work focuses on image beauty assessment. In this study, a pairwise evaluation method based on the Bradley-Terry model was used. We believe that this method is more accurate than other image rating methods within an image group. Additionally, a convolutional neural network (CNN), which is well suited to image quality assessment, is used in this work. The first part of this study is a survey in which the beauty of different images is compared. The Bradley-Terry model is used to calculate scores from the comparisons, and these scores are the targets of the CNN model. The second part of this work focuses on the results of image beauty prediction for landscape, architecture and portrait images. The models are first pretrained on the AVA dataset to improve performance. Then, the CNN model is trained with the surveyed images and their corresponding scores. Furthermore, this work compares the results of four CNN base networks discussed in the literature, i.e., AlexNet, VGG, SqueezeNet and LSiM. Finally, the model is evaluated by pairwise accuracy, correlation coefficient and relative error computed from the survey results. Our proposed methods achieve satisfactory results, with about 70 percent pairwise accuracy. Our work sheds more light on this novel image beauty assessment method. While more studies should be conducted, this method is a promising step.
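As an illustration of how pairwise comparisons can be turned into per-image scores, the following is a minimal sketch of fitting a Bradley-Terry model with the standard minorization-maximization (MM) update. It is not the authors' code: the function names, the toy `wins` matrix, and the choice of the MM algorithm are assumptions made for this example.

```python
def fit_bradley_terry(wins, iters=200, tol=1e-10):
    """Estimate Bradley-Terry strengths from a pairwise win-count matrix.

    wins[i][j] is the number of times item i was preferred over item j.
    Returns strengths normalized to sum to 1. (Hypothetical helper.)
    """
    n = len(wins)
    p = [1.0 / n] * n  # start from equal strengths
    for _ in range(iters):
        new_p = []
        for i in range(n):
            total_wins = sum(wins[i][j] for j in range(n) if j != i)
            # Each pair contributes (number of comparisons) / (p_i + p_j).
            denom = sum(
                (wins[i][j] + wins[j][i]) / (p[i] + p[j])
                for j in range(n)
                if j != i
            )
            new_p.append(total_wins / denom if denom > 0 else p[i])
        scale = sum(new_p)
        new_p = [v / scale for v in new_p]
        delta = max(abs(a - b) for a, b in zip(new_p, p))
        p = new_p
        if delta < tol:
            break
    return p


def win_probability(p, i, j):
    """Model probability that item i is preferred over item j."""
    return p[i] / (p[i] + p[j])


# Toy survey with three images and ten comparisons per pair (made-up data).
wins = [
    [0, 7, 9],
    [3, 0, 6],
    [1, 4, 0],
]
scores = fit_bradley_terry(wins)
```

In a pipeline like the one described, scores of this kind would serve as regression targets for the CNN. The update assumes every image wins at least one comparison; otherwise its estimated strength collapses to zero.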

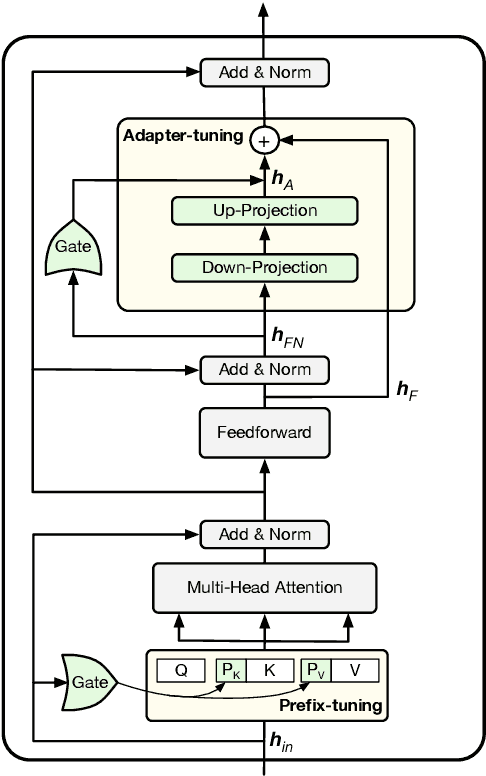

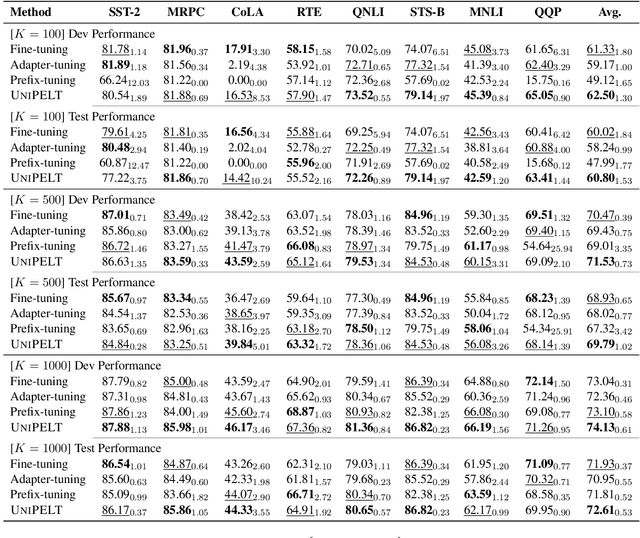

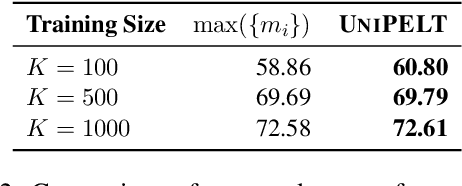

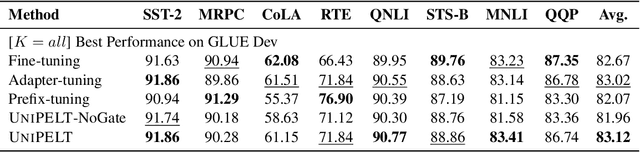

UniPELT: A Unified Framework for Parameter-Efficient Language Model Tuning

Oct 14, 2021

Abstract:Conventional fine-tuning of pre-trained language models tunes all model parameters and stores a full model copy for each downstream task, which has become increasingly infeasible as the model size grows larger. Recent parameter-efficient language model tuning (PELT) methods manage to match the performance of fine-tuning with much fewer trainable parameters and perform especially well when the training data is limited. However, different PELT methods may perform rather differently on the same task, making it nontrivial to select the most appropriate method for a specific task, especially considering the fast-growing number of new PELT methods and downstream tasks. In light of model diversity and the difficulty of model selection, we propose a unified framework, UniPELT, which incorporates different PELT methods as submodules and learns to activate the ones that best suit the current data or task setup. Remarkably, on the GLUE benchmark, UniPELT consistently achieves 1~3pt gains compared to the best individual PELT method that it incorporates and even outperforms fine-tuning under different setups. Moreover, UniPELT often surpasses the upper bound when taking the best performance of all its submodules used individually on each task, indicating that a mixture of multiple PELT methods may be inherently more effective than single methods.

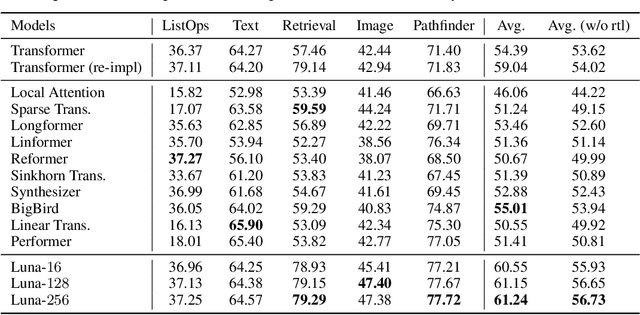

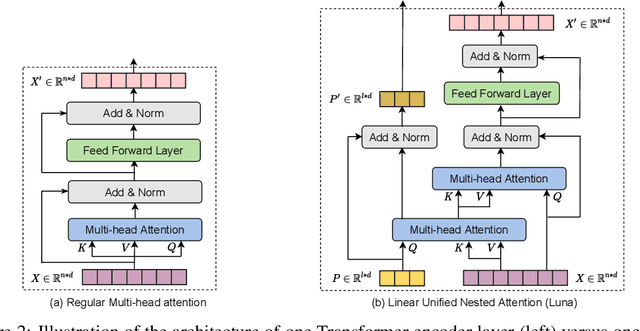

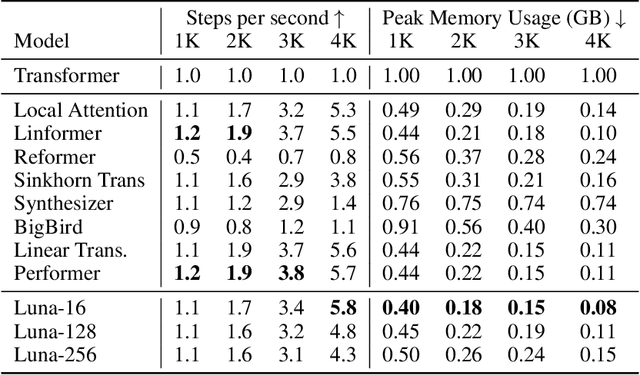

Luna: Linear Unified Nested Attention

Jun 03, 2021

Abstract:The quadratic computational and memory complexities of the Transformer's attention mechanism have limited its scalability for modeling long sequences. In this paper, we propose Luna, a linear unified nested attention mechanism that approximates softmax attention with two nested linear attention functions, yielding only linear (as opposed to quadratic) time and space complexity. Specifically, with the first attention function, Luna packs the input sequence into a sequence of fixed length. Then, the packed sequence is unpacked using the second attention function. As compared to a more traditional attention mechanism, Luna introduces an additional sequence with a fixed length as input and an additional corresponding output, which allows Luna to perform attention operation linearly, while also storing adequate contextual information. We perform extensive evaluations on three benchmarks of sequence modeling tasks: long-context sequence modeling, neural machine translation and masked language modeling for large-scale pretraining. Competitive or even better experimental results demonstrate both the effectiveness and efficiency of Luna compared to a variety
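The pack-then-unpack idea can be sketched as follows. This is a simplified illustration, not the Luna implementation: it uses a single head, plain softmax attention in both steps, and no learned projections or normalization, and the names `attention` and `luna_like_attention` are hypothetical. Its only purpose is to show why the cost scales with n·l (sequence length times fixed packed length) rather than n².

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, context):
    """Scaled dot-product attention where context serves as keys and values."""
    d = len(queries[0])
    out = []
    for q in queries:
        scores = [
            sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in context
        ]
        weights = softmax(scores)
        out.append(
            [sum(w * c[col] for w, c in zip(weights, context)) for col in range(d)]
        )
    return out


def luna_like_attention(x, p):
    """Pack x (n rows) into len(p) rows, then unpack back to n rows.

    Both steps cost O(n * l) score computations, with l = len(p) fixed.
    """
    packed = attention(p, x)         # l x d: extra sequence attends to the input
    unpacked = attention(x, packed)  # n x d: input attends to the packed sequence
    return unpacked, packed


# Toy input: six 2-dimensional tokens and a packed length of two (made-up data).
x = [[0.1 * i, 1.0 - 0.1 * i] for i in range(6)]
p = [[1.0, 0.0], [0.0, 1.0]]
unpacked, packed = luna_like_attention(x, p)
```

Here `packed` plays the role of the additional fixed-length output and `unpacked` the per-token output; in a stacked model the packed sequence would presumably be passed on as the extra input of the next layer.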
