Haibin Ling

Modeling Deep Learning Based Privacy Attacks on Physical Mail

Dec 22, 2020

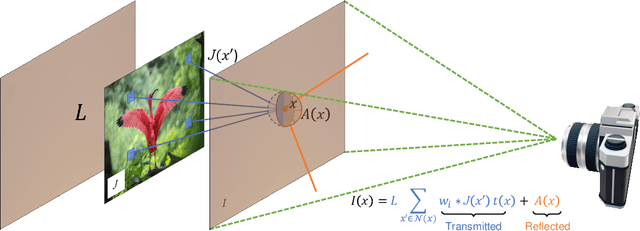

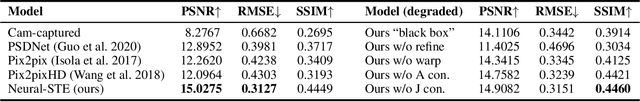

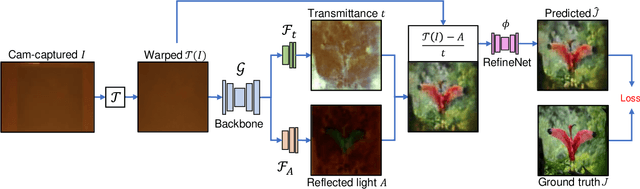

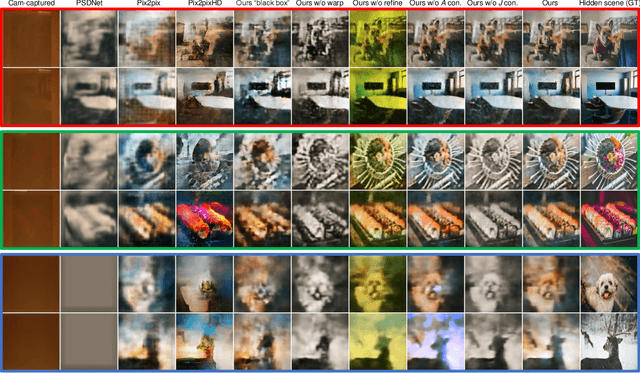

Abstract: Mail privacy protection aims to prevent unauthorized access to hidden content within an envelope, since normal paper envelopes are not as safe as we think. In this paper, for the first time, we show that with a well-designed deep learning model, the hidden content may be largely recovered without opening the envelope. We start by modeling deep learning-based privacy attacks on physical mail content as learning the mapping from the camera-captured envelope front face image to the hidden content. We then explicitly model the mapping as a combination of perspective transformation, image dehazing and denoising using a deep convolutional neural network, named Neural-STE (See-Through-Envelope). We show experimentally that hidden content details, such as texture and image structure, can be clearly recovered. Finally, our formulation and model allow us to design envelopes that can counter deep learning-based privacy attacks on physical mail.
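The perspective-transformation component mentioned in the abstract can be illustrated with a plain homography applied to pixel coordinates. This is a minimal sketch of the geometric idea only, not the Neural-STE model: the function name `apply_homography` and the example matrices are hypothetical, and the dehazing and denoising stages are not covered.

```python
from typing import List, Tuple

Matrix3 = List[List[float]]  # 3x3 homography in row-major order


def apply_homography(H: Matrix3, x: float, y: float) -> Tuple[float, float]:
    """Map a 2-D point through a 3x3 homography using homogeneous coordinates."""
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if w == 0:
        raise ValueError("point maps to infinity under this homography")
    # Dividing by w is what separates a perspective warp from an affine one.
    return u / w, v / w


# Hypothetical matrices: a pure translation and a warp with a perspective term.
translate = [[1.0, 0.0, 2.0], [0.0, 1.0, 3.0], [0.0, 0.0, 1.0]]
perspective = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.5, 0.0, 1.0]]

shifted = apply_homography(translate, 1.0, 1.0)
warped = apply_homography(perspective, 2.0, 4.0)
```

With the last row set to `[0, 0, 1]` the mapping reduces to an affine transform. A non-zero entry in that row makes the scale depend on position, which is the effect a camera viewing a flat envelope at an angle would introduce.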

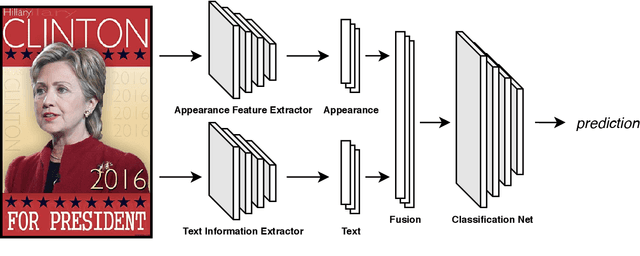

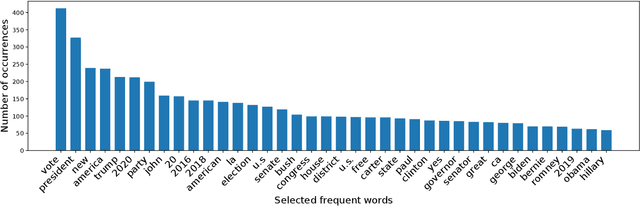

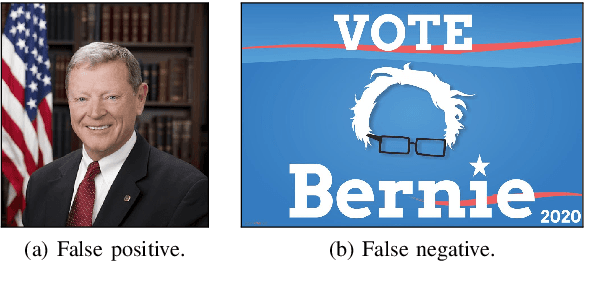

Political Posters Identification with Appearance-Text Fusion

Dec 19, 2020

Abstract: In this paper, we propose a method that efficiently combines appearance features and text vectors to accurately distinguish political posters from other, similar political images. Most of this work focuses on political posters designed to promote a particular political event. Identifying them automatically can yield detailed statistics and meet assessment needs in a variety of areas. First, starting with a comprehensive keyword list for politicians and political events, we curate for the first time an effective and practical political poster dataset containing 13K human-labeled political images, including 3K political posters that explicitly support a movement or a campaign. Second, we conduct a thorough case study of this dataset and analyze common patterns and outliers among political posters. Finally, we propose a model that combines appearance and text information to classify political posters with high accuracy.

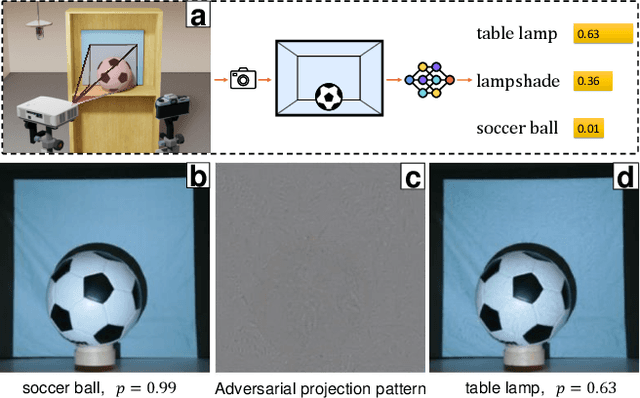

SPAA: Stealthy Projector-based Adversarial Attacks on Deep Image Classifiers

Dec 10, 2020

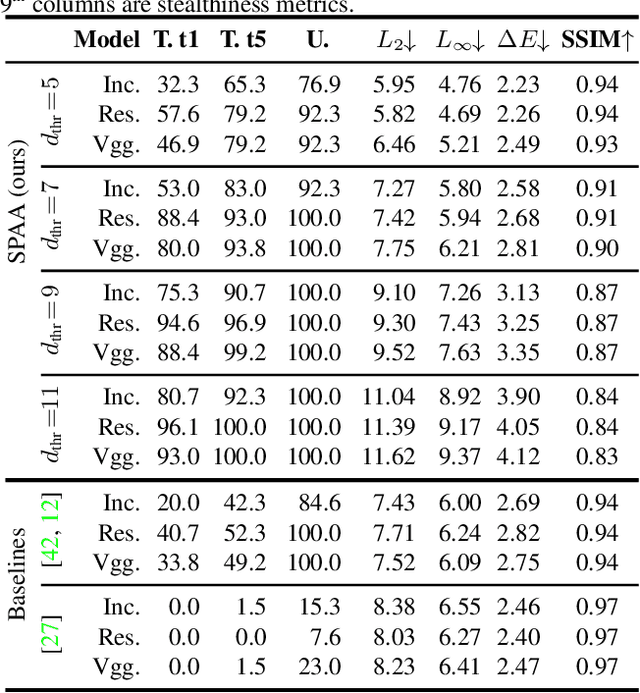

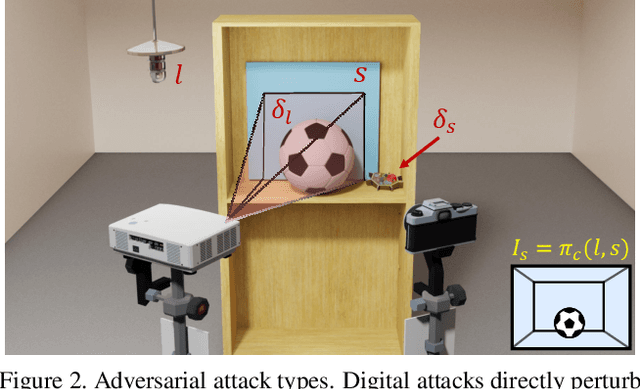

Abstract: Light-based adversarial attacks aim to fool deep learning-based image classifiers by altering the physical light condition using a controllable light source, e.g., a projector. Compared with physical attacks that place carefully designed stickers or printed adversarial objects, projector-based ones obviate modifying the physical entities. Moreover, projector-based attacks can be performed transiently and dynamically by altering the projection pattern. However, existing approaches focus on projecting adversarial patterns that result in clearly perceptible camera-captured perturbations, while the more interesting yet challenging goal, stealthy projector-based attack, remains an open problem. In this paper, for the first time, we formulate this problem as an end-to-end differentiable process and propose Stealthy Projector-based Adversarial Attack (SPAA). In SPAA, we approximate the real project-and-capture operation using a deep neural network named PCNet. We then include PCNet in the optimization of projector-based attacks such that the generated adversarial projection is physically plausible. Finally, to generate robust and stealthy adversarial projections, we propose an optimization algorithm that uses minimum perturbation and adversarial confidence thresholds to alternate between the adversarial loss and stealthiness loss optimization. Our experimental evaluations show that the proposed SPAA clearly outperforms other methods by achieving higher attack success rates while being stealthier.
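The alternation between adversarial and stealthiness objectives can be sketched on a toy problem. The code below is an illustration under strong assumptions, not the SPAA algorithm: a two-class logistic model stands in for the deep classifier and PCNet, the squared L2 norm of the perturbation stands in for the stealthiness loss, and the switching rule (take an adversarial step while the target confidence is below `conf_thr` or the perturbation norm is below `d_thr`, otherwise take a stealthiness step) is one plausible reading of the abstract. All names and default values are hypothetical.

```python
import math
from typing import List, Optional

Vec = List[float]


def target_confidence(w: Vec, b: float, x: Vec) -> float:
    """Probability of the adversarial target class under a toy logistic model."""
    logit = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-logit))


def alternating_attack(w: Vec, b: float, x: Vec, conf_thr: float = 0.9,
                       d_thr: float = 0.1, lr_adv: float = 0.5,
                       lr_stealth: float = 0.05, steps: int = 100) -> Optional[Vec]:
    """Return the smallest perturbation seen that reaches conf_thr, or None."""
    delta = [0.0] * len(x)
    best, best_norm = None, math.inf
    for _ in range(steps):
        p = target_confidence(w, b, [xi + di for xi, di in zip(x, delta)])
        norm = math.sqrt(sum(d * d for d in delta))
        if p >= conf_thr and norm < best_norm:
            best, best_norm = list(delta), norm
        if p < conf_thr or norm < d_thr:
            # Adversarial step: the gradient of -log(p) w.r.t. delta is -(1 - p) * w.
            delta = [d + lr_adv * (1.0 - p) * wi for d, wi in zip(delta, w)]
        else:
            # Stealthiness step: the gradient of ||delta||^2 is 2 * delta.
            delta = [d - lr_stealth * 2.0 * d for d in delta]
    return best


# Hypothetical toy setup: the clean input is confidently not the target class.
w, b, x = [1.0, -1.0], 0.0, [-1.0, 1.0]
best = alternating_attack(w, b, x)
```

Tracking the smallest successful perturbation, rather than returning the last iterate, matters here because the loop oscillates around the confidence threshold once it has been reached.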

GMOT-40: A Benchmark for Generic Multiple Object Tracking

Dec 02, 2020

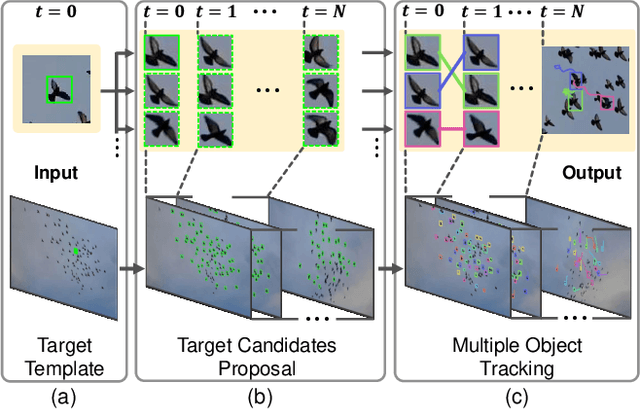

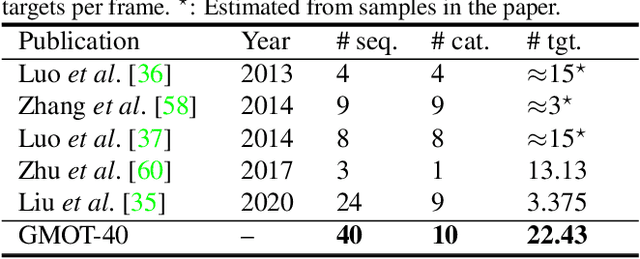

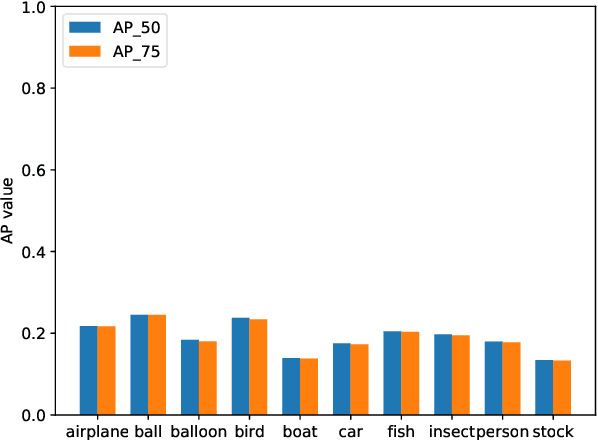

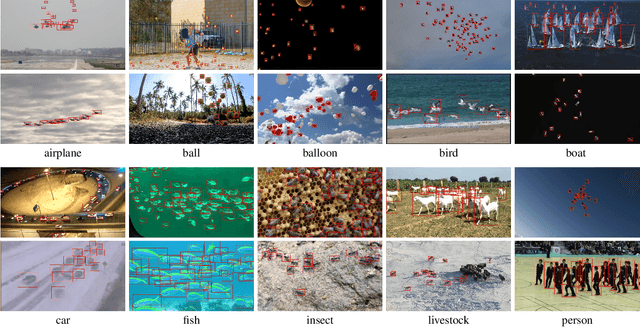

Abstract: Multiple Object Tracking (MOT) has witnessed remarkable advances in recent years. However, existing studies predominantly require prior knowledge of the tracking target, and hence may not generalize well to unseen categories. In contrast, Generic Multiple Object Tracking (GMOT), which requires little prior information about the target, is largely under-explored. In this paper, we make contributions to boost the study of GMOT in three aspects. First, we construct the first public GMOT dataset, dubbed GMOT-40, which contains 40 carefully annotated sequences evenly distributed among 10 object categories. In addition, two tracking protocols are adopted to evaluate different characteristics of tracking algorithms. Second, noting the lack of dedicated tracking algorithms, we design a series of baseline GMOT algorithms. Third, we perform a thorough evaluation on GMOT-40, involving popular MOT algorithms (with necessary modifications) and the proposed baselines. We will release the GMOT-40 benchmark, the evaluation results, and the baseline algorithms to the public upon the publication of the paper.

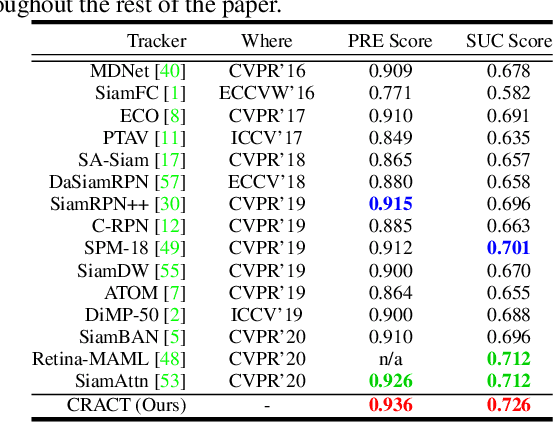

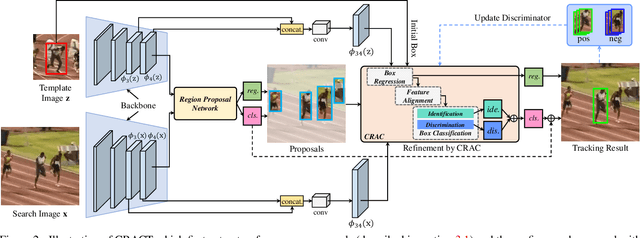

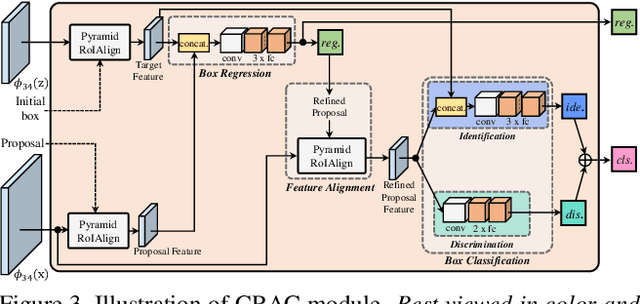

CRACT: Cascaded Regression-Align-Classification for Robust Visual Tracking

Nov 25, 2020

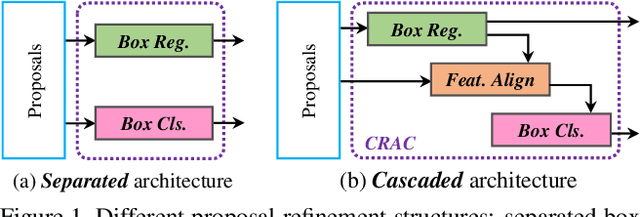

Abstract: High-quality object proposals are crucial in visual tracking algorithms that utilize a region proposal network (RPN). Refinement of these proposals, typically by box regression and classification in parallel, has been widely adopted to boost tracking performance. However, this approach still struggles with complex and dynamic backgrounds. Thus motivated, in this paper we introduce an improved proposal refinement module, Cascaded Regression-Align-Classification (CRAC), which yields new state-of-the-art performance on many benchmarks. First, having observed that the offsets from box regression can serve as guidance for proposal feature refinement, we design CRAC as a cascade of box regression, feature alignment and box classification. The key is to bridge box regression and classification via an alignment step, which leads to more accurate features for proposal classification with improved robustness. To address the variation in object appearance, we introduce an identification-discrimination component for box classification, which leverages a reliable, fine-grained offline template and rich online background information to distinguish the target from the background. Moreover, we present pyramid RoIAlign, which benefits CRAC by exploiting both the local and global cues of proposals. During inference, tracking proceeds by ranking all refined proposals and selecting the best one. In experiments on seven benchmarks including OTB-2015, UAV123, NfS, VOT-2018, TrackingNet, GOT-10k and LaSOT, our CRACT exhibits very promising results in comparison with state-of-the-art competitors and runs in real time.

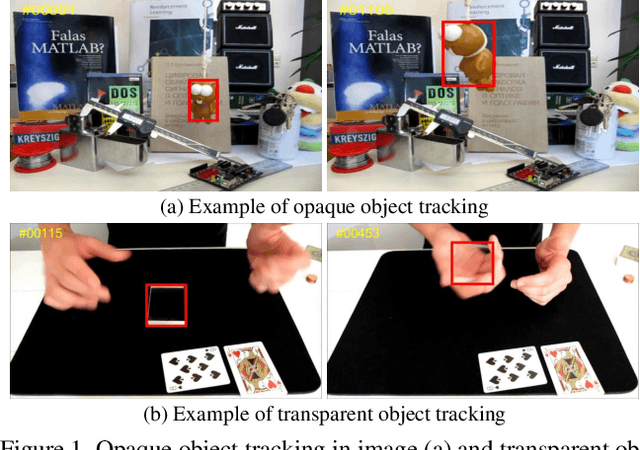

Transparent Object Tracking Benchmark

Nov 21, 2020

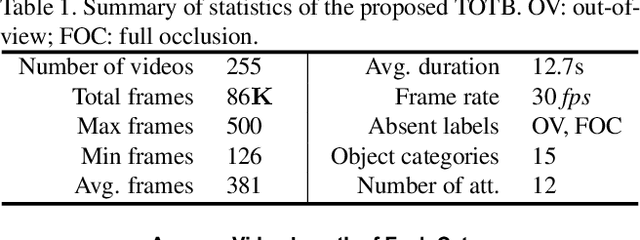

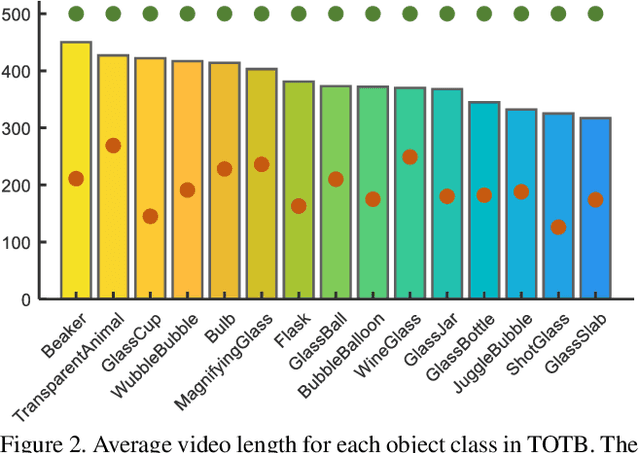

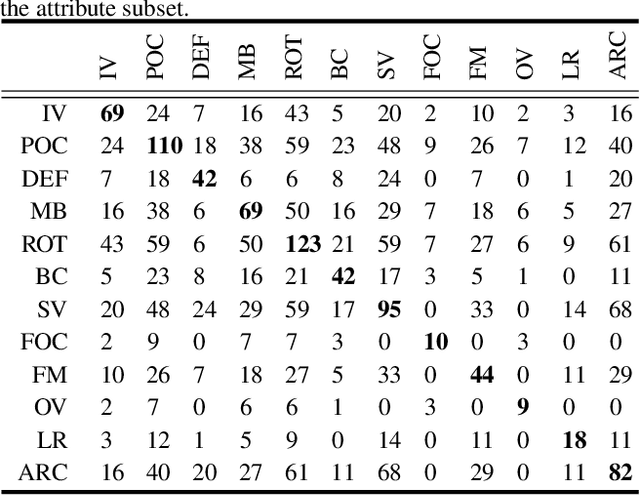

Abstract: Visual tracking has achieved considerable progress in recent years. However, current research in the field mainly focuses on tracking of opaque objects, while little attention is paid to transparent object tracking. In this paper, we make the first attempt to explore this problem by proposing a Transparent Object Tracking Benchmark (TOTB). Specifically, TOTB consists of 225 videos (86K frames) from 15 diverse transparent object categories. Each sequence is manually labeled with axis-aligned bounding boxes. To the best of our knowledge, TOTB is the first benchmark dedicated to transparent object tracking. In order to understand how existing trackers perform and to provide comparison for future research on TOTB, we extensively evaluate 25 state-of-the-art tracking algorithms. The evaluation results show that more effort is needed to improve transparent object tracking. In addition, we observe some nontrivial findings from the evaluation that contradict some common beliefs in opaque object tracking. For example, we find that deep(er) features do not always yield improvements. Moreover, to encourage future research, we introduce a novel tracker, named TransATOM, which leverages transparency features for tracking and surpasses all 25 evaluated approaches by a large margin. By releasing TOTB, we expect to facilitate future research and application of transparent object tracking in both academia and industry. The TOTB and evaluation results as well as TransATOM will be made available at https://hengfan2010.github.io/projects/TOTB.

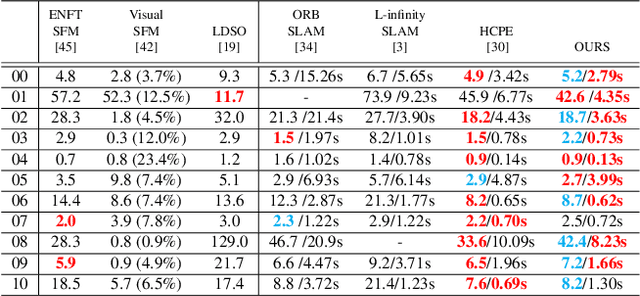

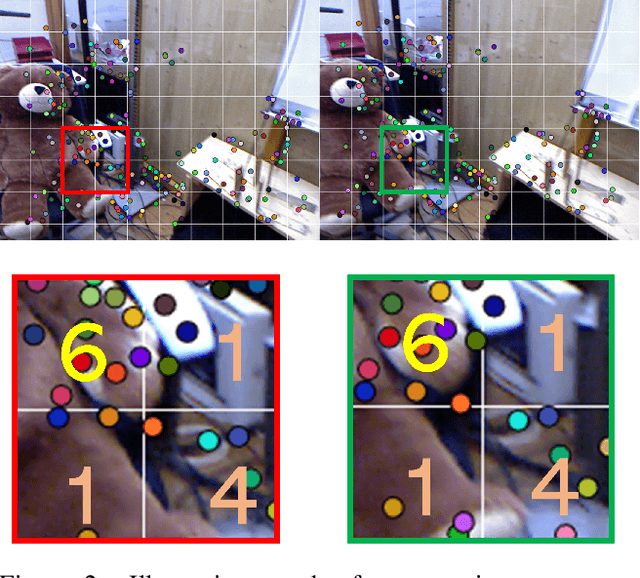

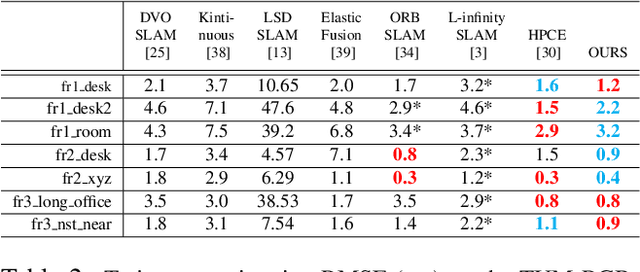

Pushing the Envelope of Rotation Averaging for Visual SLAM

Nov 02, 2020

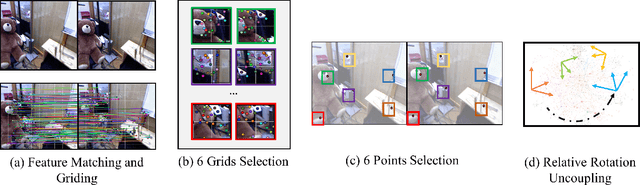

Abstract: As an essential part of structure from motion (SfM) and Simultaneous Localization and Mapping (SLAM) systems, motion averaging has been extensively studied in the past years and continues to attract surging research attention. While canonical approaches such as bundle adjustment are predominantly inherited in most state-of-the-art SLAM systems to estimate and update the trajectory in robot navigation, the practical implementation of bundle adjustment in SLAM systems is intrinsically limited by high computational complexity, unreliable convergence and strict requirements for ideal initialization. In this paper, we lift these limitations and propose a novel optimization backbone for visual SLAM systems, where we leverage rotation averaging to improve the accuracy, efficiency and robustness of conventional monocular SLAM pipelines. In our approach, we first decouple the rotational and translational parameters in the camera rigid body transformation and convert the high-dimensional non-convex nonlinear problem into tractable linear subproblems in lower dimensions, and show that the subproblems can be solved independently with proper constraints. We apply the scale parameter with $\ell_1$-norm in the pose-graph optimization to address the rotation averaging robustness against outliers. We further validate the global optimality of our proposed approach, and revisit and address the initialization schemes, pure rotational scene handling and outlier treatments. We demonstrate that our approach can run up to 10x faster with comparable accuracy against the state of the art on public benchmarks.

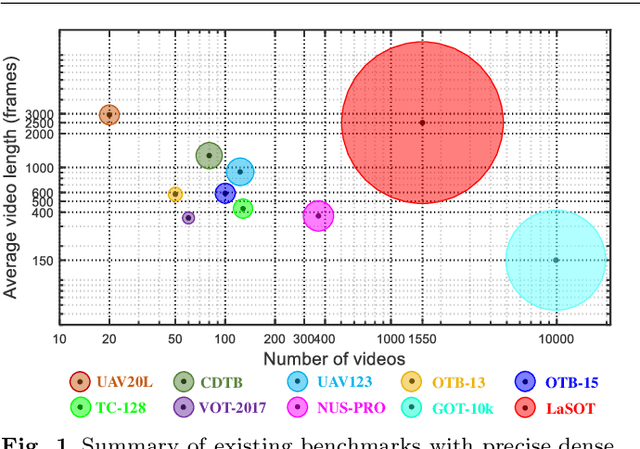

LaSOT: A High-quality Large-scale Single Object Tracking Benchmark

Sep 12, 2020

Abstract: Despite great recent advances in visual tracking, its further development, including both algorithm design and evaluation, is limited by the lack of dedicated large-scale benchmarks. To address this problem, we present LaSOT, a high-quality Large-scale Single Object Tracking benchmark. LaSOT contains a diverse selection of 85 object classes, and offers 1,550 videos totaling more than 3.87 million frames. Each video frame is carefully and manually annotated with a bounding box. This makes LaSOT, to our knowledge, the largest densely annotated tracking benchmark. Our goal in releasing LaSOT is to provide a dedicated high-quality platform for both training and evaluation of trackers. The average video length of LaSOT is around 2,500 frames, and each video contains various challenge factors that exist in real-world video footage, such as the targets disappearing and re-appearing. These longer video lengths allow for the assessment of long-term trackers. To take advantage of the close connection between visual appearance and natural language, we provide a language specification for each video in LaSOT. We believe such additions will allow future research to use linguistic features to improve tracking. Two protocols, full-overlap and one-shot, are designated for flexible assessment of trackers. We extensively evaluate 48 baseline trackers on LaSOT with in-depth analysis, and the results reveal that there still exists significant room for improvement. The complete benchmark, tracking results as well as analysis are available at http://vision.cs.stonybrook.edu/~lasot/.

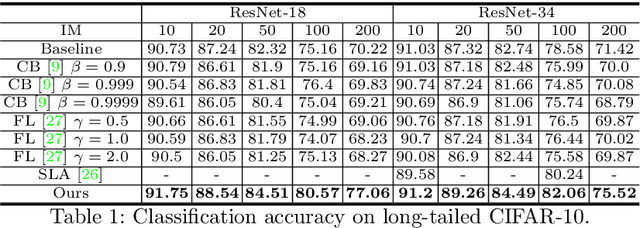

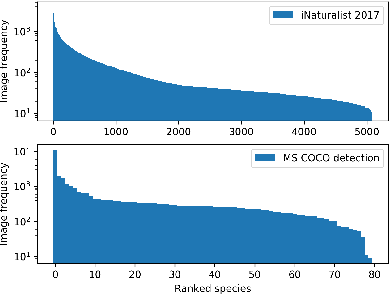

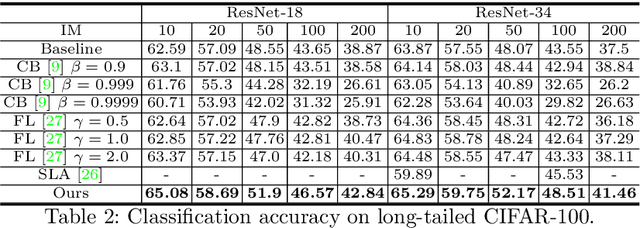

Feature Space Augmentation for Long-Tailed Data

Aug 09, 2020

Abstract: Real-world data often follow a long-tailed distribution as the frequency of each class is typically different. For example, a dataset can have a large number of under-represented classes and a few classes with more than sufficient data. However, a model to represent the dataset is usually expected to have reasonably homogeneous performance across classes. Introducing class-balanced loss and advanced methods on data re-sampling and augmentation are among the best practices to alleviate the data imbalance problem. However, the other part of the problem, concerning the under-represented classes, will have to rely on additional knowledge to recover the missing information. In this work, we present a novel approach to address the long-tailed problem by augmenting the under-represented classes in the feature space with the features learned from the classes with ample samples. In particular, we decompose the features of each class into a class-generic component and a class-specific component using class activation maps. Novel samples of under-represented classes are then generated on the fly during training by fusing the class-specific features from the under-represented classes with the class-generic features from confusing classes. Our results on different datasets such as iNaturalist, ImageNet-LT, Places-LT and a long-tailed version of CIFAR show state-of-the-art performance.
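The fusion step described in the abstract can be sketched as a masked combination of two feature maps. This is a minimal illustration under assumptions, not the paper's procedure: the split into class-specific and class-generic locations is done here by thresholding a min-max normalized class activation map, and the names `split_mask` and `fuse` and the threshold value are hypothetical.

```python
from typing import List

Grid = List[List[float]]   # H x W map
FeatureMap = List[Grid]    # C x H x W features


def split_mask(cam: Grid, threshold: float) -> List[List[bool]]:
    """True where the normalized CAM marks a location as class-specific."""
    lo = min(min(row) for row in cam)
    hi = max(max(row) for row in cam)
    span = (hi - lo) or 1.0  # avoid division by zero on a flat map
    return [[(v - lo) / span >= threshold for v in row] for row in cam]


def fuse(tail_feat: FeatureMap, tail_cam: Grid, head_feat: FeatureMap,
         threshold: float = 0.5) -> FeatureMap:
    """Keep tail-class features where the CAM is high; fill the rest from a head class."""
    mask = split_mask(tail_cam, threshold)
    return [
        [
            [tail_feat[c][y][x] if mask[y][x] else head_feat[c][y][x]
             for x in range(len(mask[0]))]
            for y in range(len(mask))
        ]
        for c in range(len(tail_feat))
    ]


# Hypothetical 1-channel, 2x2 feature maps for a tail class and a confusing head class.
tail_feat = [[[1.0, 1.0], [1.0, 1.0]]]
head_feat = [[[9.0, 9.0], [9.0, 9.0]]]
tail_cam = [[0.0, 1.0], [0.2, 0.8]]

fused = fuse(tail_feat, tail_cam, head_feat)
```

In this toy case the right-hand column has high activation, so it keeps the tail-class values, while the left-hand column is filled from the head-class features. The fused map would carry the tail-class label during training.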

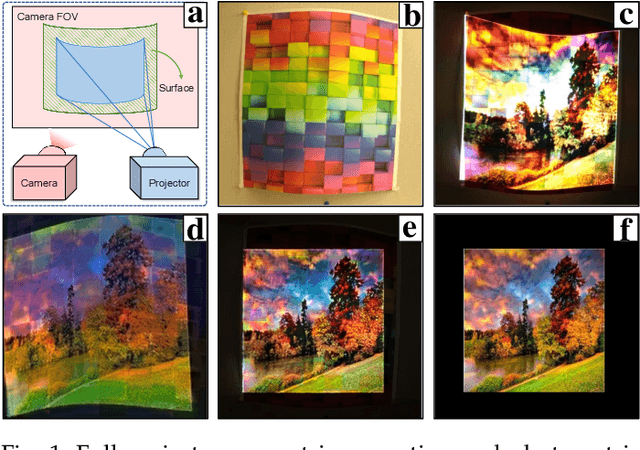

End-to-end Full Projector Compensation

Aug 04, 2020

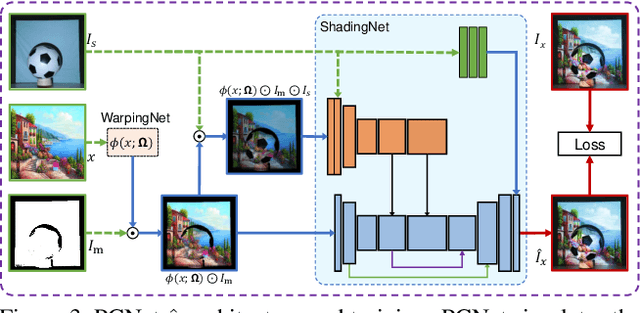

Abstract: Full projector compensation aims to modify a projector input image to compensate for both geometric and photometric disturbance of the projection surface. Traditional methods usually solve the two parts separately and may suffer from suboptimal solutions. In this paper, we propose the first end-to-end differentiable solution, named CompenNeSt++, to solve the two problems jointly. First, we propose a novel geometric correction subnet, named WarpingNet, which is designed with a cascaded coarse-to-fine structure to learn the sampling grid directly from sampling images. Second, we propose a novel photometric compensation subnet, named CompenNeSt, which is designed with a siamese architecture to capture the photometric interactions between the projection surface and the projected images, and to use such information to compensate the geometrically corrected images. By concatenating WarpingNet with CompenNeSt, CompenNeSt++ accomplishes full projector compensation and is end-to-end trainable. Third, to improve practicability, we propose a novel synthetic data-based pre-training strategy to significantly reduce the number of training images and training time. Moreover, we construct the first setup-independent full compensation benchmark to facilitate future studies. In thorough experiments, our method shows clear advantages over prior art, with promising compensation quality while remaining convenient in practice.