Hai Zhao

Department of Computer Science and Engineering, Shanghai Jiao Tong University, Key Laboratory of Shanghai Education Commission for Intelligent Interaction and Cognitive Engineering, Shanghai Jiao Tong University, MoE Key Lab of Artificial Intelligence, AI Institute, Shanghai Jiao Tong University

Unsupervised Neural Machine Translation with Indirect Supervision

Apr 07, 2020

Abstract: Neural machine translation (NMT) is ineffective for zero-resource languages. Recent work on unsupervised neural machine translation (UNMT), which uses only monolingual data, has achieved promising results. However, there is still a large gap between UNMT and NMT with parallel supervision. In this work, we introduce a multilingual unsupervised NMT framework that leverages weakly supervised signals from high-resource language pairs for zero-resource translation directions. More specifically, for the unsupervised language pair En-De, we can make full use of the information from the parallel dataset En-Fr to jointly train the unsupervised translation directions in a single model. The framework is based on multilingual models and requires no changes to standard unsupervised NMT. Empirical results demonstrate that it significantly improves translation quality, by more than 3 BLEU points, on six benchmark unsupervised translation directions.

Reference Language based Unsupervised Neural Machine Translation

Apr 05, 2020

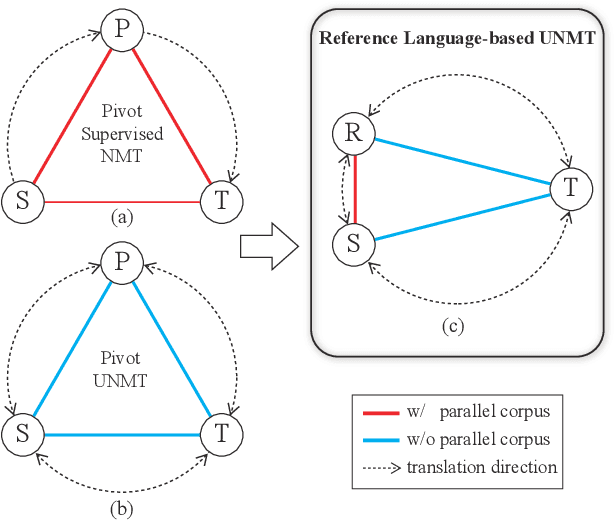

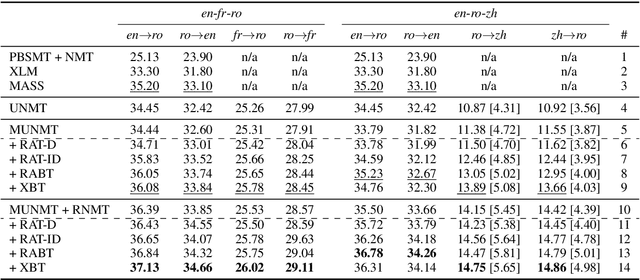

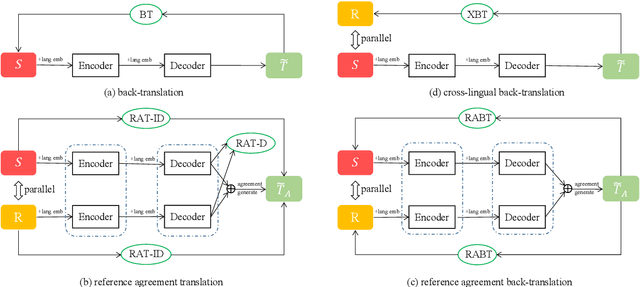

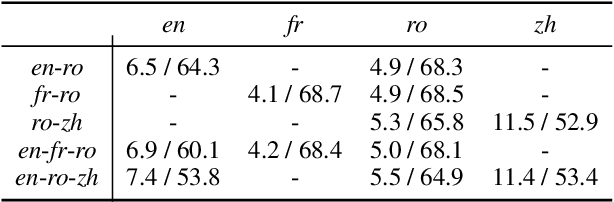

Abstract: Exploiting a common language as an auxiliary for better translation has a long tradition in machine translation: supervised machine translation benefits from a well-used pivot language when a parallel corpus from the source language to the target language is not fully available. The rise of unsupervised neural machine translation (UNMT) seems to lift the parallel corpus curse completely, but its performance remains unsatisfactory because its core back-translation training receives only vague clues. Extending the idea of pivot translation by allowing the use of parallel corpora other than those between the specified source and target, we propose a new reference language based UNMT framework, in which the reference language shares a parallel corpus only with the source. This provides a signal clear enough to help the reconstruction training of UNMT through a proposed reference agreement mechanism. Experimental results show that our methods improve the quality of UNMT over a strong baseline using only one auxiliary language, demonstrating the usefulness of the proposed reference language based UNMT.

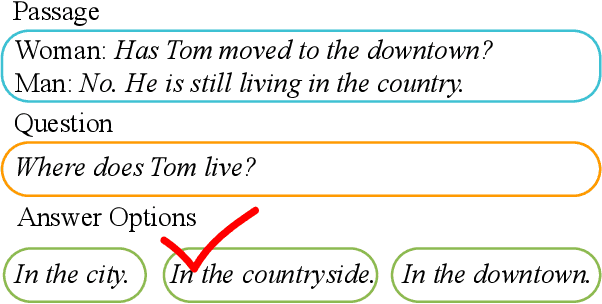

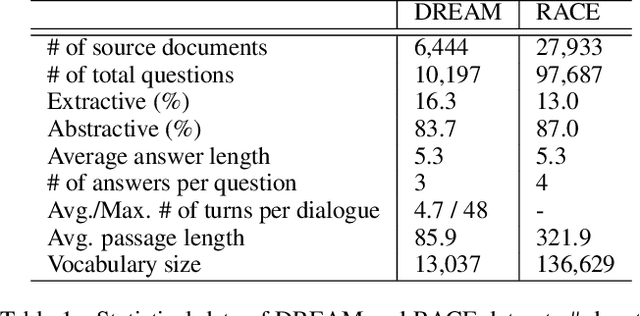

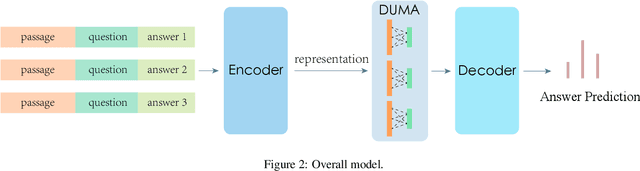

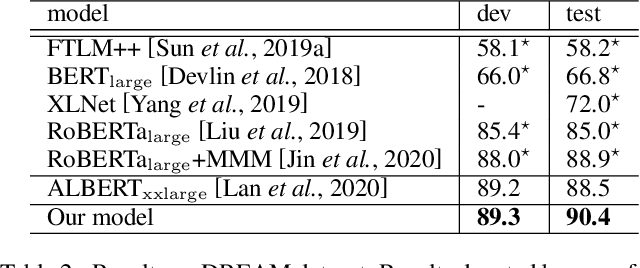

Dual Multi-head Co-attention for Multi-choice Reading Comprehension

Feb 08, 2020

Abstract: Multi-choice Machine Reading Comprehension (MRC) requires a model to select the correct answer from a set of answer options given a passage and a question. Thus, in addition to a powerful pre-trained Language Model as the encoder, multi-choice MRC relies especially on a matching network designed to effectively capture the relationships among the triplet of passage, question, and answers. Since the latest pre-trained Language Models have proven powerful enough even without the support of a matching network, and the latest matching networks have become quite complicated, we propose a novel back-to-basics solution that straightforwardly models the MRC relationship as an attention mechanism inside the network. The proposed DUal Multi-head Co-Attention (DUMA) is shown to be simple but effective and is capable of generally improving pre-trained Language Models. Our method is evaluated on two benchmark multi-choice MRC tasks, DREAM and RACE, showing that even on top of strong Language Models, DUMA can still boost the model to reach new state-of-the-art performance.

Retrospective Reader for Machine Reading Comprehension

Jan 27, 2020

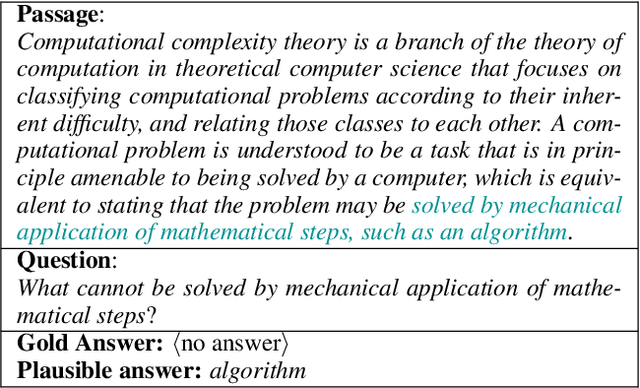

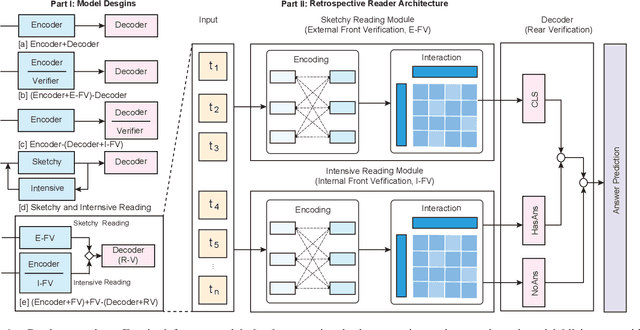

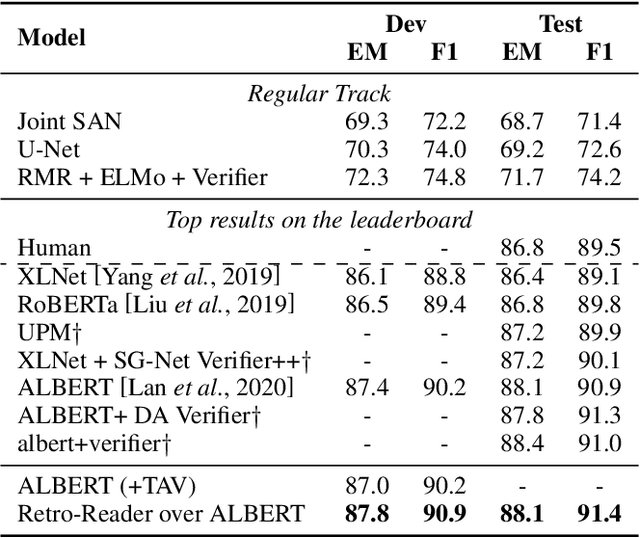

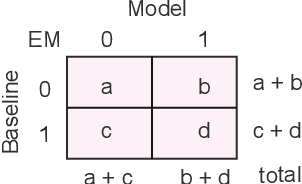

Abstract: Machine reading comprehension (MRC) is an AI challenge that requires a machine to determine the correct answers to questions based on a given passage. MRC systems must not only answer questions when possible but also recognize when no answer is available in the given passage and then tactfully abstain from answering. When unanswerable questions are involved in the MRC task, an essential verification module called a verifier is required in addition to the encoder, though the latest practice in MRC modeling still benefits most from adopting well pre-trained language models as the encoder block, focusing only on the "reading". This paper explores better verifier design for the MRC task with unanswerable questions. Inspired by how humans solve reading comprehension questions, we propose a retrospective reader (Retro-Reader) that integrates two stages of reading and verification strategies: 1) sketchy reading, which briefly investigates the overall interactions of passage and question and yields an initial judgment; 2) intensive reading, which verifies the answer and gives the final prediction. The proposed reader is evaluated on two benchmark MRC challenge datasets, SQuAD2.0 and NewsQA, achieving new state-of-the-art results. Significance tests show that our model is significantly better than the strong ALBERT baseline. A series of analyses is also conducted to interpret the effectiveness of the proposed reader.
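The two-stage idea in the Retro-Reader abstract above (an initial judgment from sketchy reading, then verification during intensive reading) can be illustrated with a small sketch. The abstract does not specify how the two judgments are combined, so the weighted sum, the `weight` and `threshold` parameters, and the function name below are all assumptions made purely for illustration, not the authors' method.

```python
from typing import Optional


def retrospective_decision(
    sketchy_no_answer: float,
    intensive_no_answer: float,
    span_text: str,
    weight: float = 0.5,      # hypothetical mixing weight between the two stages
    threshold: float = 0.0,   # hypothetical abstention threshold
) -> Optional[str]:
    """Return the candidate answer span, or None to abstain.

    Each score is assumed to be larger when that stage believes the
    question is unanswerable. The weighted sum is an illustrative
    combination rule only.
    """
    combined = weight * sketchy_no_answer + (1.0 - weight) * intensive_no_answer
    if combined > threshold:
        return None  # abstain: both stages lean toward "no answer"
    return span_text


# Both stages lean toward "answerable", so the span is kept.
print(retrospective_decision(-2.0, -1.0, "Paris"))
# Both stages lean toward "unanswerable", so the reader abstains.
print(retrospective_decision(3.0, 1.0, "Paris"))
```

In practice the threshold would typically be tuned on a development set; the values here are placeholders.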

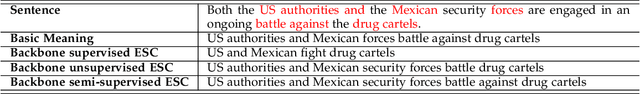

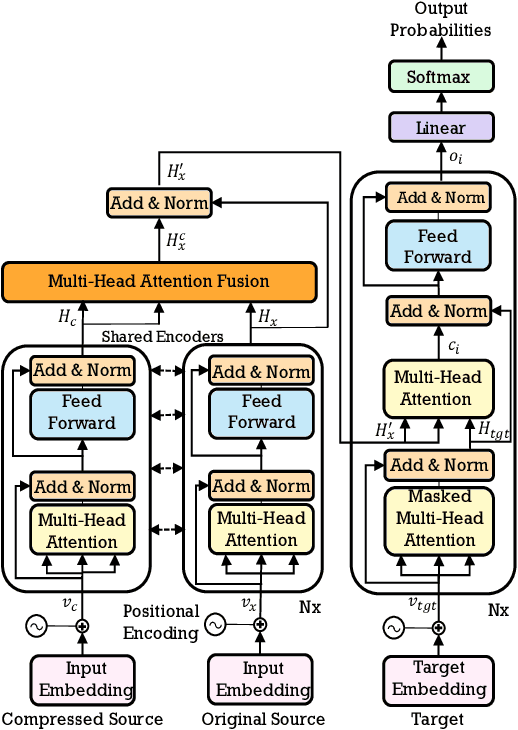

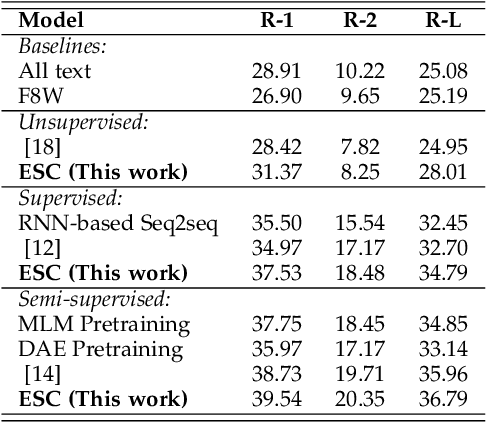

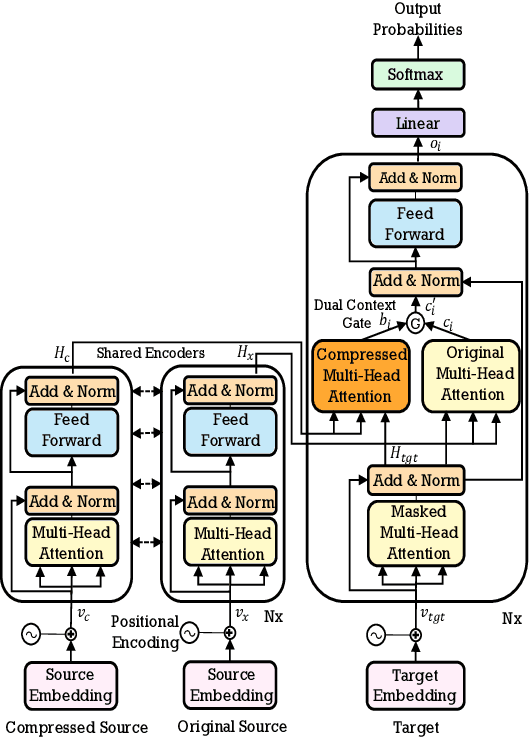

Explicit Sentence Compression for Neural Machine Translation

Dec 27, 2019

Abstract: State-of-the-art Transformer-based neural machine translation (NMT) systems still follow a standard encoder-decoder framework, in which the source sentence representation is produced by an encoder with a self-attention mechanism. Though a Transformer-based encoder may effectively capture general information in its source sentence representation, the backbone information, which stands for the gist of a sentence, is not specifically focused on. In this paper, we propose an explicit sentence compression method to enhance the source sentence representation for NMT. In practice, an explicit sentence compression goal is used to learn the backbone information in a sentence. We propose three ways to integrate the compressed sentence into NMT: backbone source-side fusion, target-side fusion, and both-side fusion. Our empirical tests on the WMT English-to-French and English-to-German translation tasks show that the proposed sentence compression method significantly improves translation performance over strong baselines.

Korean-to-Chinese Machine Translation using Chinese Character as Pivot Clue

Nov 25, 2019

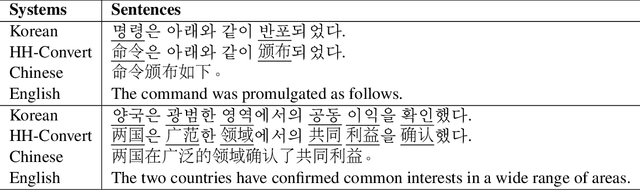

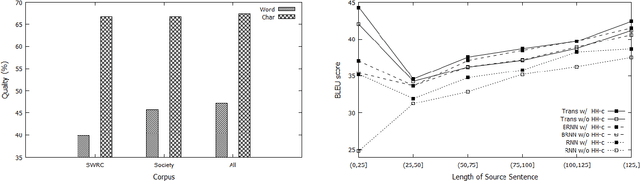

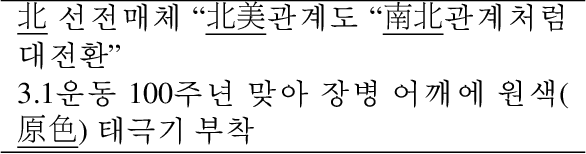

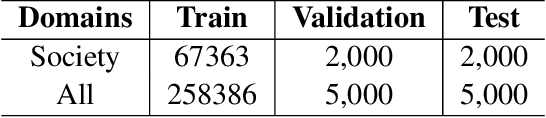

Abstract: Korean-Chinese is a low-resource language pair, but Korean and Chinese have a lot in common in terms of vocabulary. Sino-Korean words, which can be converted into corresponding Chinese characters, account for more than fifty percent of the entire Korean vocabulary. Motivated by this, we propose a simple, linguistically motivated solution to improve the performance of the Korean-to-Chinese neural machine translation model by using their common vocabulary. We adopt Chinese characters as a translation pivot by converting Sino-Korean words in Korean sentences to Chinese characters and then training the machine translation model with the converted Korean sentences as source sentences. The experimental results on Korean-to-Chinese translation demonstrate that models using the proposed method improve translation quality by up to 1.5 BLEU points compared with the baseline models.

* 9 pages
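The preprocessing step described in the Korean-to-Chinese abstract above, converting Sino-Korean words in the source sentence to Chinese characters before training, might look roughly like the following. This is a minimal sketch: the three-entry lexicon, the pre-tokenized input, and the function name `convert_sino_korean` are assumptions for illustration, and a real system would need a full Hanja dictionary plus handling for homophones.

```python
# Toy Sino-Korean lexicon (hypothetical): Hangul word -> Chinese characters.
SINO_KOREAN = {
    "학교": "學校",      # school
    "학생": "學生",      # student
    "도서관": "圖書館",  # library
}


def convert_sino_korean(tokens, lexicon=SINO_KOREAN):
    """Replace tokens found in the lexicon with Chinese characters.

    Tokens without an entry (native Korean words, particles) are
    passed through unchanged.
    """
    return [lexicon.get(token, token) for token in tokens]


# A pre-tokenized Korean sentence ("The student goes to school").
source = ["학생", "이", "학교", "에", "간다"]
print(" ".join(convert_sino_korean(source)))
```

The converted sentences would then replace the original Korean sentences on the source side of the training data, as the abstract describes.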

Global Greedy Dependency Parsing

Nov 20, 2019

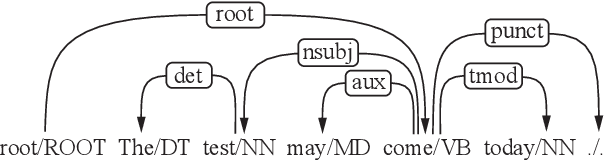

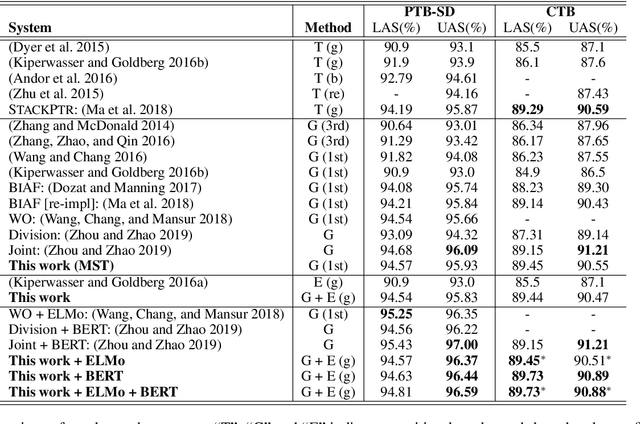

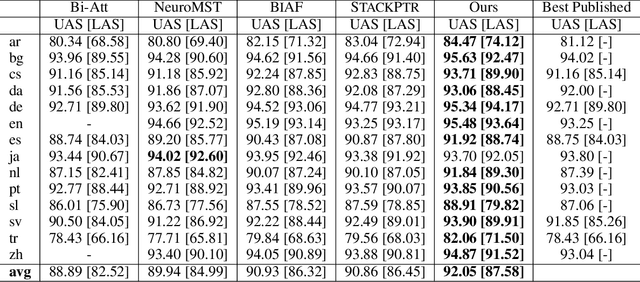

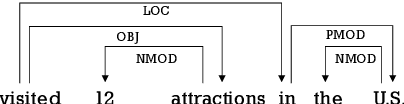

Abstract: Most syntactic dependency parsing models fall into one of two categories: transition-based and graph-based models. The former enjoy high inference efficiency with linear time complexity, but they rely on the stacking or re-ranking of partially built parse trees to build a complete parse tree and suffer from slower training because of the need for dynamic oracle training. The latter, graph-based models, may boast better performance but are unfortunately marred by polynomial time inference. In this paper, we propose a novel parsing order objective, resulting in a novel dependency parsing model capable of both global (in sentence scope) feature extraction, as in graph-based models, and linear time inference, as in transition-based models. The proposed global greedy parser uses only two arc-building actions, left and right arcs, for projective parsing. When equipped with two extra non-projective arc-building actions, the proposed parser can also smoothly support non-projective parsing. Using multiple benchmark treebanks, including the Penn Treebank (PTB), the CoNLL-X treebanks, and the Universal Dependency Treebanks, we evaluate our parser and demonstrate that it achieves good performance with faster training and decoding.

Hierarchical Contextualized Representation for Named Entity Recognition

Nov 19, 2019

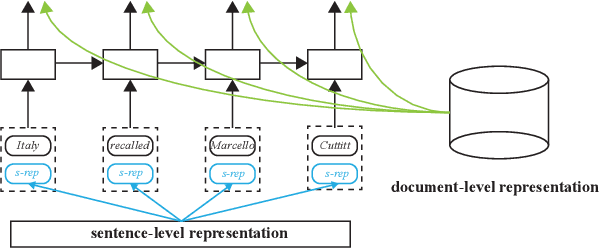

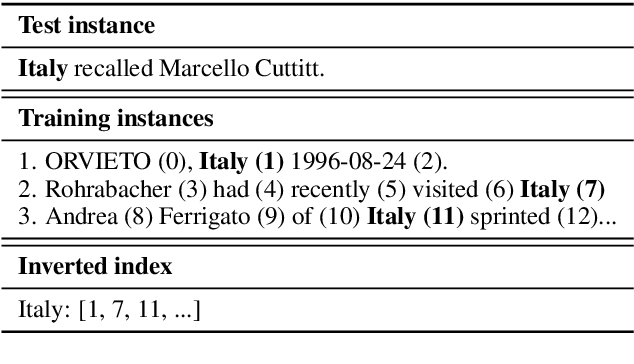

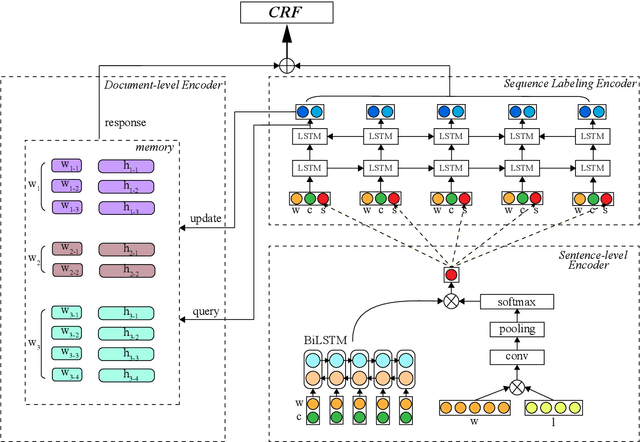

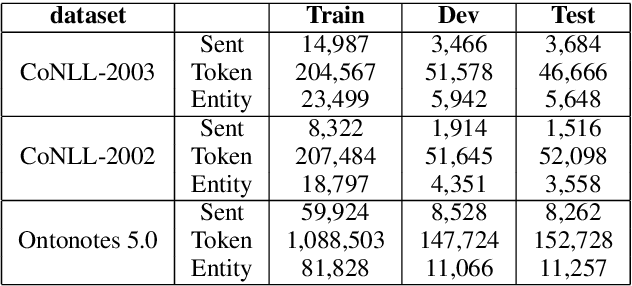

Abstract: Named entity recognition (NER) models are typically based on the architecture of Bi-directional LSTM (BiLSTM). The constraints of its sequential nature and the modeling of a single input prevent the full utilization of global information from a larger scope, not only in the entire sentence but also in the entire document (dataset). In this paper, we address these two deficiencies and propose a model augmented with hierarchical contextualized representation: sentence-level representation and document-level representation. At the sentence level, we take the different contributions of words in a single sentence into consideration to enhance the sentence representation learned from an independent BiLSTM via a label embedding attention mechanism. At the document level, a key-value memory network is adopted to record the document-aware information for each unique word, which is sensitive to the similarity of context information. Our two-level hierarchical contextualized representations are fused with each input token embedding and the corresponding hidden state of the BiLSTM, respectively. The experimental results on three benchmark NER datasets (CoNLL-2003 and Ontonotes 5.0 English datasets, CoNLL-2002 Spanish dataset) show that we establish new state-of-the-art results.

Probing Contextualized Sentence Representations with Visual Awareness

Nov 07, 2019

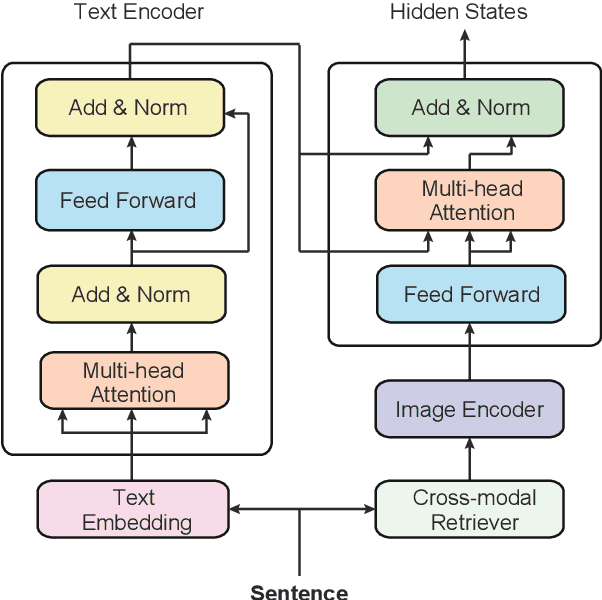

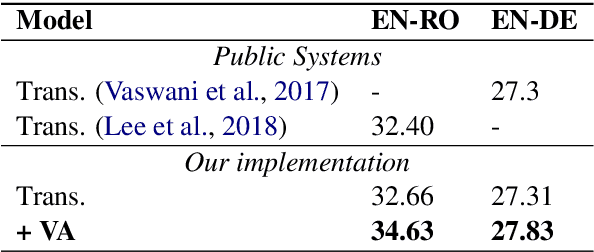

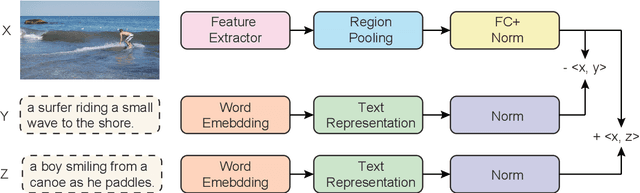

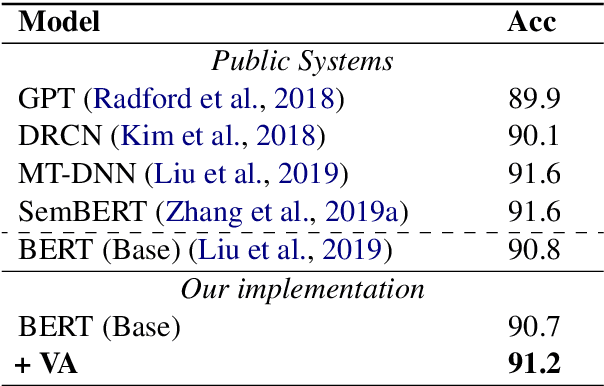

Abstract: We present a universal framework for modeling contextualized sentence representations with visual awareness, motivated by the need to overcome the shortcomings of multimodal parallel data with manual annotations. For each sentence, we first retrieve a diverse set of images from a shared cross-modal embedding space, which is pre-trained on a large-scale collection of text-image pairs. Then, the texts and images are encoded by a transformer encoder and a convolutional neural network, respectively. The two sequences of representations are further fused by a simple and effective attention layer. The architecture can be easily applied to text-only natural language processing tasks without manually annotating multimodal parallel corpora. We apply the proposed method to three tasks (neural machine translation, natural language inference, and sequence labeling), and the experimental results verify its effectiveness.

Dependency and Span, Cross-Style Semantic Role Labeling on PropBank and NomBank

Nov 07, 2019

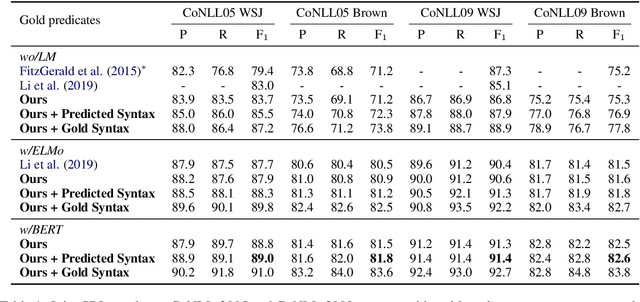

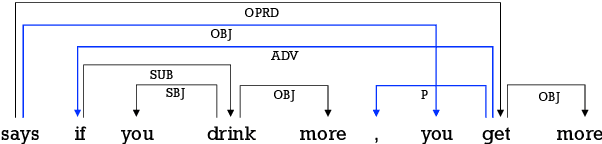

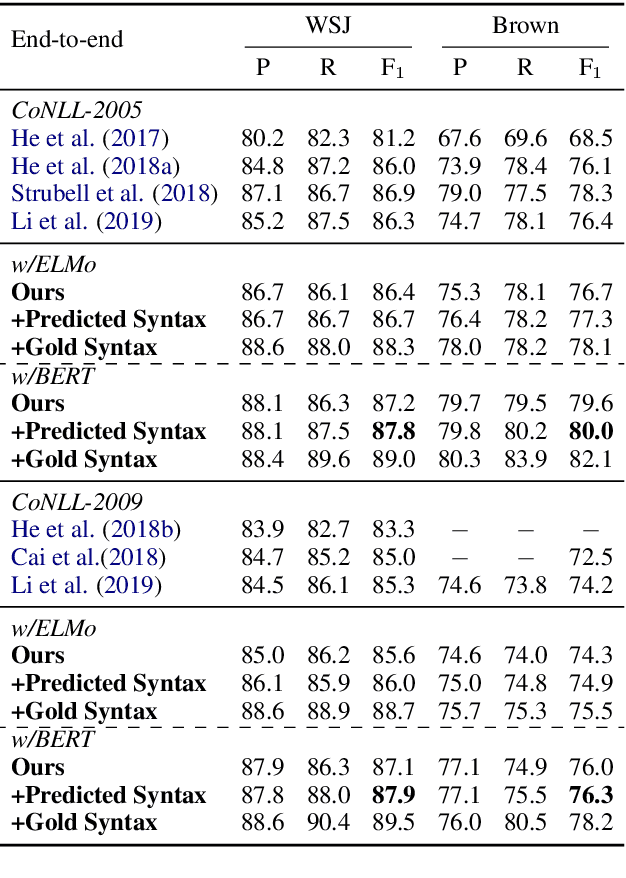

Abstract: The latest developments in neural semantic role labeling (SRL), including both dependency and span representation formalisms, have shown great performance improvements. Although the two styles share many similarities in linguistic meaning and computation, most previous studies focus on a single style. In this paper, we define a new cross-style semantic role label convention and propose a new cross-style joint optimization model designed according to the linguistic meaning of semantic roles. This provides an agreed way to make the results of the two styles more comparable and lets both types of SRL benefit from their natural connection in both linguistics and computation. Our model learns a general semantic argument structure and is capable of outputting either style alone. Additionally, we propose a syntax-aided method to enhance the learning of both dependency and span representations uniformly. Experiments show that the proposed methods are effective on both span (CoNLL-2005) and dependency (CoNLL-2009) SRL benchmarks.
