Feifei Gao

Sherman

Federated Dynamic Neural Network for Deep MIMO Detection

Nov 24, 2021

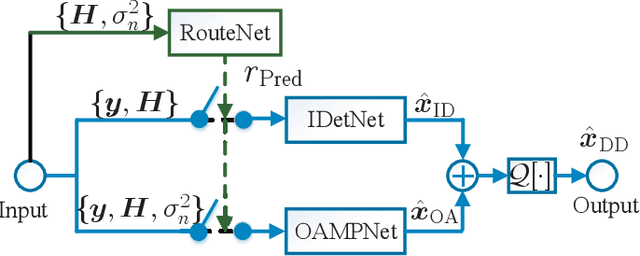

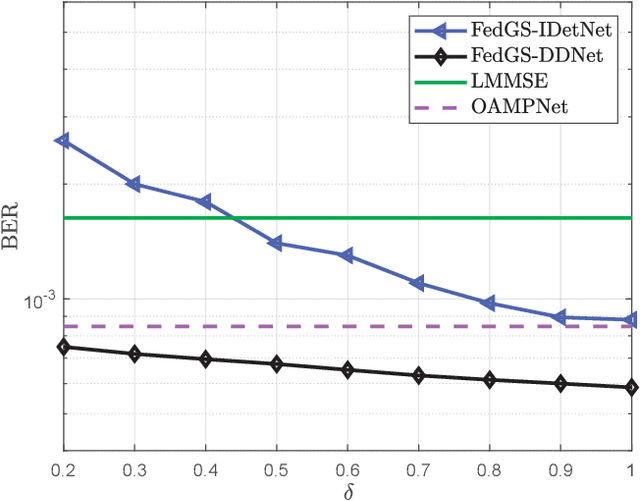

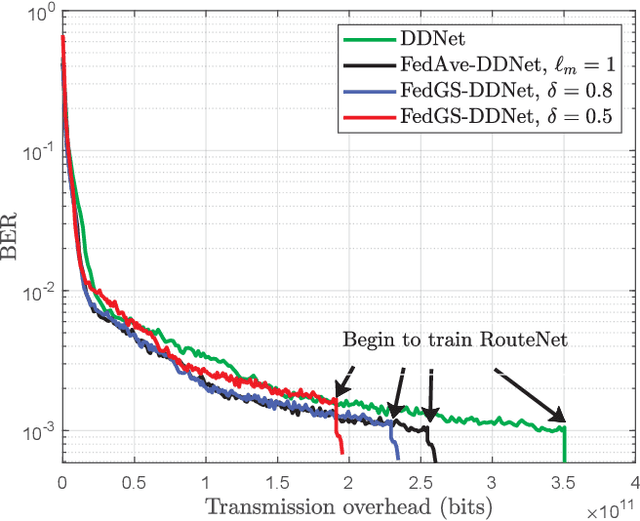

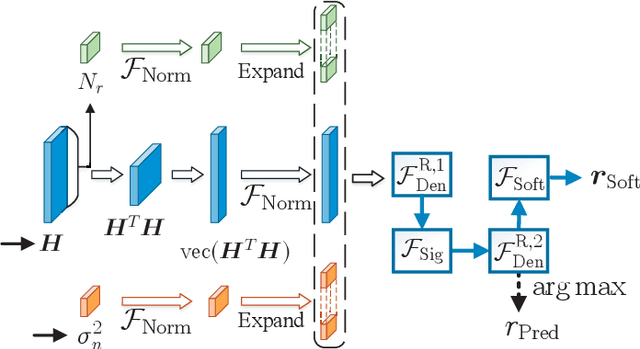

Abstract: In this paper, we develop a dynamic detection network (DDNet) based detector for multiple-input multiple-output (MIMO) systems. By constructing an improved DetNet (IDetNet) detector and the OAMPNet detector as two independent network branches, the DDNet detector performs sample-wise dynamic routing to adaptively select the better of the IDetNet and OAMPNet detectors for every sample under different system conditions. To avoid the prohibitive transmission overhead of dataset collection in centralized learning (CL), we propose the federated averaging (FedAve)-DDNet detector, where all raw data are kept at local clients and only locally trained model parameters are transmitted to the central server for aggregation. To further reduce the transmission overhead, we develop the federated gradient sparsification (FedGS)-DDNet detector, which randomly samples gradients with carefully calculated probabilities when uploading them to the central server. Simulation results show that the proposed DDNet detector consistently outperforms other detectors under all system conditions thanks to the sample-wise dynamic routing. Moreover, the federated DDNet detectors, especially the FedGS-DDNet detector, can reduce the transmission overhead by at least 25.7% while maintaining satisfactory detection accuracy.
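The two federated ideas in this abstract can be illustrated with a minimal sketch. It is not the paper's implementation: the function names, the toy parameter vectors, and the keep probabilities are all hypothetical, and the paper computes its own sampling probabilities. The sketch only shows weighted parameter averaging at the server and random gradient sampling with a `1/p` rescaling, which keeps the sparsified gradient unbiased.

```python
import random

def federated_average(client_params, client_sizes):
    """Weighted average of client parameter vectors (weights = local dataset sizes)."""
    total = sum(client_sizes)
    dim = len(client_params[0])
    return [
        sum(n * params[i] for params, n in zip(client_params, client_sizes)) / total
        for i in range(dim)
    ]

def sparsify(gradient, keep_prob, rng):
    """Keep entry g_i with probability p_i and rescale it by 1/p_i (unbiased in expectation)."""
    return [
        g / p if rng.random() < p else 0.0
        for g, p in zip(gradient, keep_prob)
    ]

# Two hypothetical clients holding 100 and 300 local samples.
global_params = federated_average([[1.0, 2.0], [3.0, 6.0]], [100, 300])

rng = random.Random(0)
grad = [0.5, -0.2, 0.05]
probs = [1.0, 0.5, 0.25]  # hypothetical keep probabilities, not the paper's rule
sparse_grad = sparsify(grad, probs, rng)
```

Only the nonzero entries of `sparse_grad` (and their indices) would need to be uploaded, which is where the overhead saving comes from.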

Resilient UAV Swarm Communications with Graph Convolutional Neural Network

Jun 30, 2021

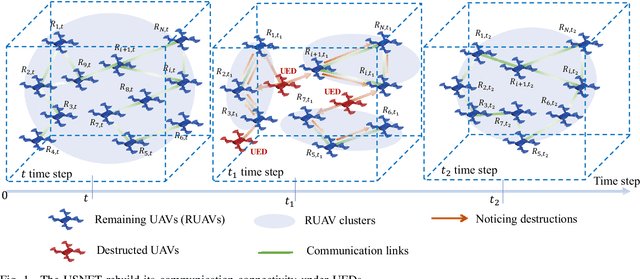

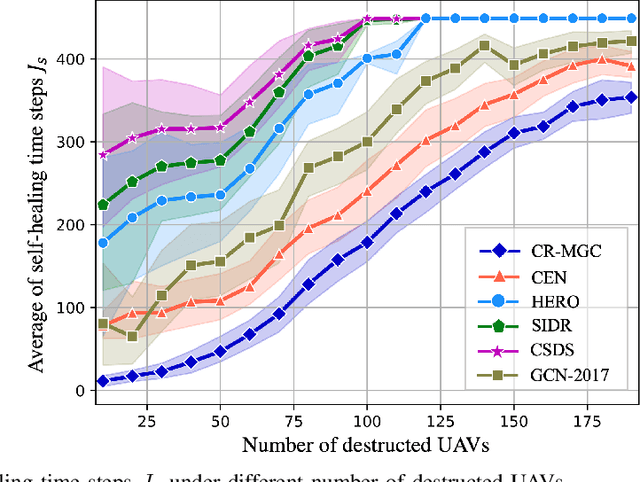

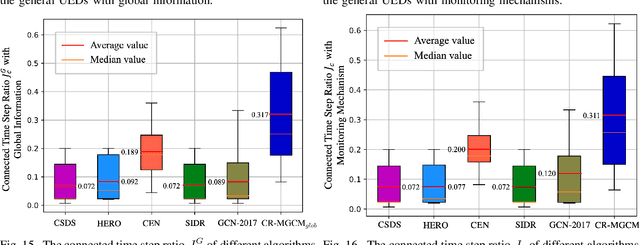

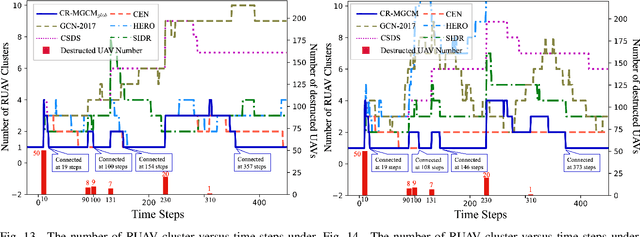

Abstract: In this paper, we study the self-healing problem of an unmanned aerial vehicle (UAV) swarm network (USNET) that is required to quickly rebuild its communication connectivity under unpredictable external disruptions (UEDs). First, to cope with one-off UEDs, we propose a graph convolutional neural network (GCN) that finds the recovery topology of the USNET in an on-line manner. Second, to cope with general UEDs, we develop a GCN-based trajectory planning algorithm that enables the UAVs to rebuild the communication connectivity during the self-healing process. We also design a meta learning scheme to facilitate the on-line execution of the GCN. Numerical results show that the proposed algorithms can rebuild the communication connectivity of the USNET more quickly than existing algorithms under both one-off UEDs and general UEDs. The simulation results also show that the meta learning scheme not only enhances the performance of the GCN but also reduces the time complexity of the on-line execution.
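For readers unfamiliar with graph convolution, the sketch below shows one standard propagation step, `ReLU(D^{-1/2} (A + I) D^{-1/2} X W)`, applied to a toy swarm. The UAV positions, the communication range, and the random weights are assumptions made for illustration; the paper's actual network architecture and inputs may differ.

```python
import numpy as np

def gcn_layer(adjacency, features, weights):
    """One graph-convolution step: ReLU(D^{-1/2} (A + I) D^{-1/2} X W)."""
    a_hat = adjacency + np.eye(adjacency.shape[0])  # add self-loops
    deg = a_hat.sum(axis=1)                         # degrees are >= 1 thanks to self-loops
    d_inv_sqrt = np.diag(1.0 / np.sqrt(deg))
    return np.maximum(d_inv_sqrt @ a_hat @ d_inv_sqrt @ features @ weights, 0.0)

# Hypothetical 4-UAV swarm: a link exists when two UAVs are within range.
positions = np.array([[0.0, 0.0], [1.0, 0.0], [2.0, 0.0], [5.0, 0.0]])
comm_range = 1.5
dist = np.linalg.norm(positions[:, None, :] - positions[None, :, :], axis=-1)
adjacency = ((dist <= comm_range) & (dist > 0)).astype(float)

rng = np.random.default_rng(0)
weights = rng.standard_normal((2, 3))               # untrained, random weights
hidden = gcn_layer(adjacency, positions, weights)   # one 3-dim embedding per UAV
```

In this toy topology the fourth UAV has no neighbours, so its embedding depends only on its own position; that is the kind of disconnection a recovery topology would have to repair.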

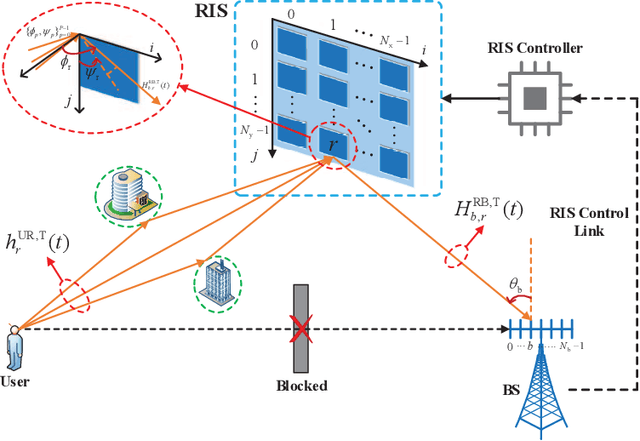

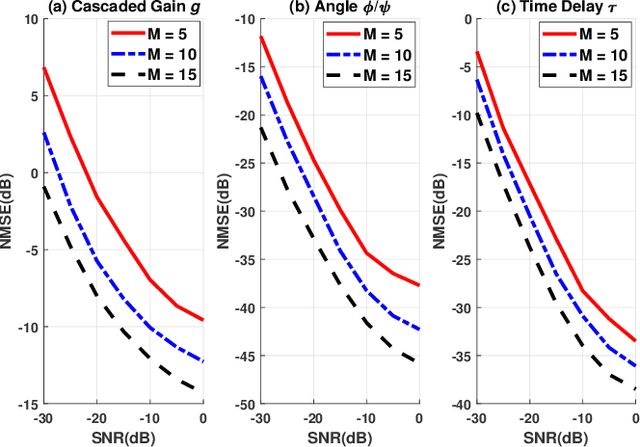

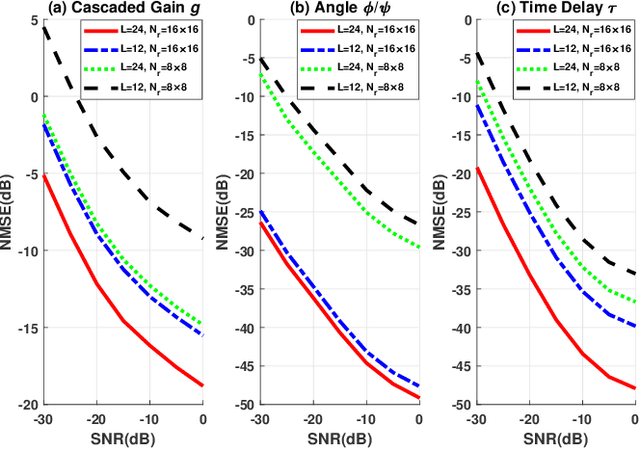

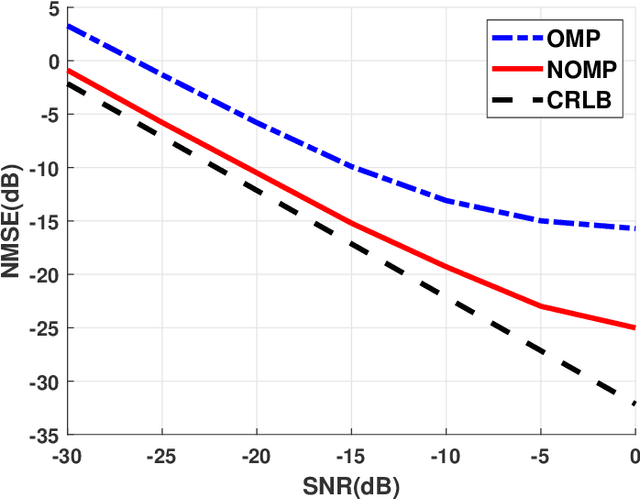

Cascaded Channel Estimation for RIS Assisted mmWave MIMO Transmissions

Jun 19, 2021

Abstract: Channel estimation is challenging for reconfigurable intelligent surface (RIS) assisted millimeter wave (mmWave) communications. Since the number of coefficients of the cascaded channels in such systems depends on the product of the number of base station antennas and the number of RIS elements, the pilot overhead would be prohibitively high. In this letter, we propose a cascaded channel estimation framework for an RIS assisted mmWave multiple-input multiple-output system, where the wideband effect on the transmission model is considered. We then transform the wideband channel estimation into a parameter recovery problem and use a few pilot symbols to detect the channel parameters with the Newtonized orthogonal matching pursuit algorithm. Moreover, the Cramér-Rao lower bound on the channel estimation is introduced. Numerical results show the effectiveness of the proposed channel estimation scheme.
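The parameter-recovery view can be sketched with plain orthogonal matching pursuit (OMP) on a fixed angular grid. This is a simplified stand-in: it omits the Newton refinement step that gives Newtonized OMP its name, and the array size, grid, path indices, and gains below are hypothetical.

```python
import numpy as np

def omp(dictionary, y, sparsity):
    """Plain OMP: greedily pick the best-matching atom, then refit by least squares."""
    residual = y.copy()
    support = []
    coeffs = np.zeros(0, dtype=complex)
    for _ in range(sparsity):
        correlations = np.abs(dictionary.conj().T @ residual)
        if support:
            correlations[support] = 0.0  # do not reselect an atom
        support.append(int(np.argmax(correlations)))
        coeffs, *_ = np.linalg.lstsq(dictionary[:, support], y, rcond=None)
        residual = y - dictionary[:, support] @ coeffs
    return support, coeffs

# Hypothetical setup: 16-antenna half-wavelength ULA, steering vectors on a 64-point grid.
n_ant, n_grid = 16, 64
grid = np.linspace(-1, 1, n_grid, endpoint=False)  # grid over sin(angle)
dictionary = np.exp(1j * np.pi * np.outer(np.arange(n_ant), grid)) / np.sqrt(n_ant)

true_support = [10, 40]
true_gains = np.array([1.0 + 0.5j, -0.7j])
y = dictionary[:, true_support] @ true_gains       # noiseless observation of two paths
support, gains = omp(dictionary, y, sparsity=2)
```

Here the true angles lie exactly on the grid, so plain OMP recovers them; off-grid parameters are the case that a refinement step is meant to handle.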

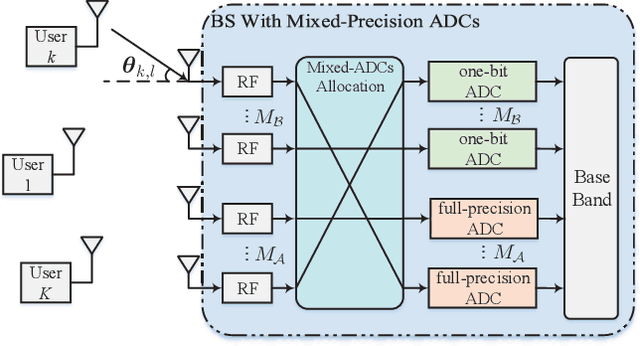

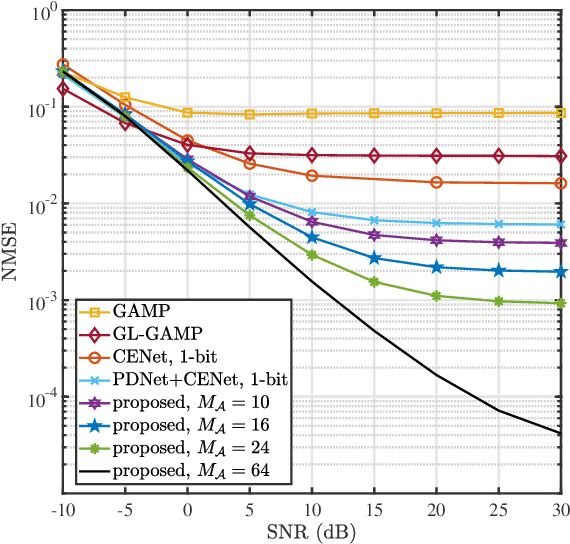

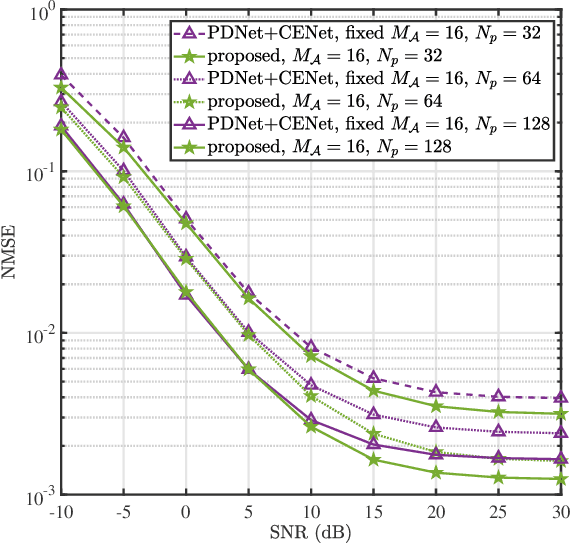

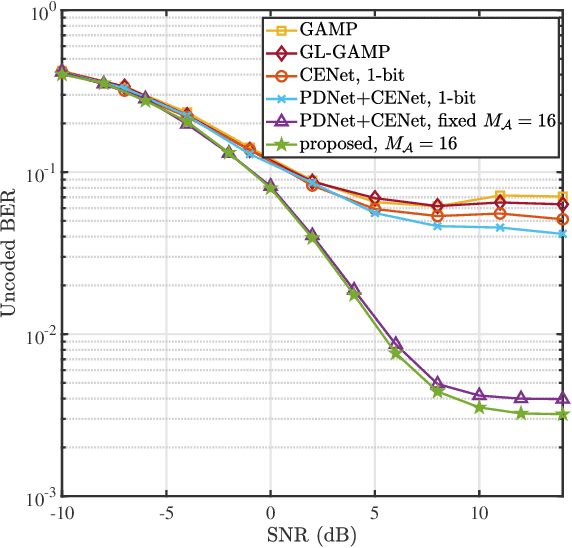

Joint Channel Estimation and Mixed-ADCs Allocation for Massive MIMO via Deep Learning

Jun 08, 2021

Abstract: Millimeter wave (mmWave) multi-user massive multi-input multi-output (MIMO) is a promising technique for next generation communication systems. However, the hardware cost and power consumption grow significantly as the number of radio frequency (RF) components increases, which hampers the deployment of practical massive MIMO systems. To address this issue and further facilitate the commercialization of massive MIMO, a mixed analog-to-digital converter (ADC) architecture has been considered, where some of the conventionally assumed full-resolution ADCs are replaced by one-bit ADCs. In this paper, we first propose a deep learning (DL)-based joint pilot design and channel estimation method for mixed-ADCs mmWave massive MIMO. Specifically, we devise a pilot design neural network whose weights directly represent the optimized pilots, and develop a Runge-Kutta model-driven densely connected network as the channel estimator. Instead of randomly assigning the mixed-ADCs, we then design a novel antenna selection network for mixed-ADCs allocation to further improve the channel estimation accuracy. Moreover, we adopt an autoencoder-inspired end-to-end architecture to jointly optimize the pilot design, channel estimation and mixed-ADCs allocation networks. Simulation results show that the proposed DL-based methods have advantages over the traditional channel estimators as well as the state-of-the-art networks.
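As a rough illustration of the mixed-ADCs front end described above, the sketch below passes a few antennas through unchanged (standing in for full-resolution ADCs) and keeps only the signs of the in-phase and quadrature parts on the rest. The mask is a fixed, hypothetical assignment; the paper learns the allocation with an antenna selection network, which is not modeled here.

```python
import numpy as np

def mixed_adc(received, high_res_mask):
    """Keep masked antennas unquantized; one-bit quantize the others (sign of I and Q)."""
    one_bit = (np.sign(received.real) + 1j * np.sign(received.imag)) / np.sqrt(2)
    return np.where(high_res_mask[:, None], received, one_bit)

rng = np.random.default_rng(4)
n_ant, n_snap = 8, 5
received = rng.standard_normal((n_ant, n_snap)) + 1j * rng.standard_normal((n_ant, n_snap))

high_res_mask = np.zeros(n_ant, dtype=bool)
high_res_mask[:2] = True                 # hypothetical: first two antennas are high resolution
quantized = mixed_adc(received, high_res_mask)
```

The one-bit rows retain only phase-quadrant information, which is why which antennas receive the high-resolution ADCs matters for channel estimation.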

Deep Unsupervised Learning for Joint Antenna Selection and Hybrid Beamforming

Jun 06, 2021

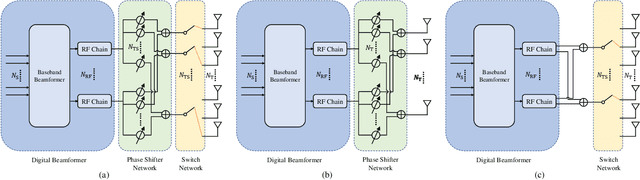

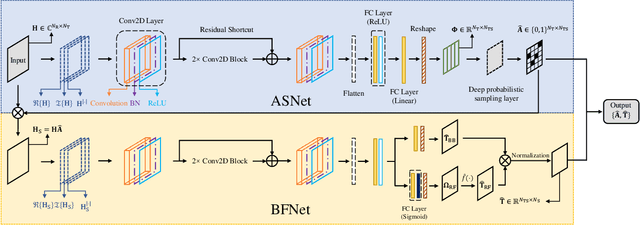

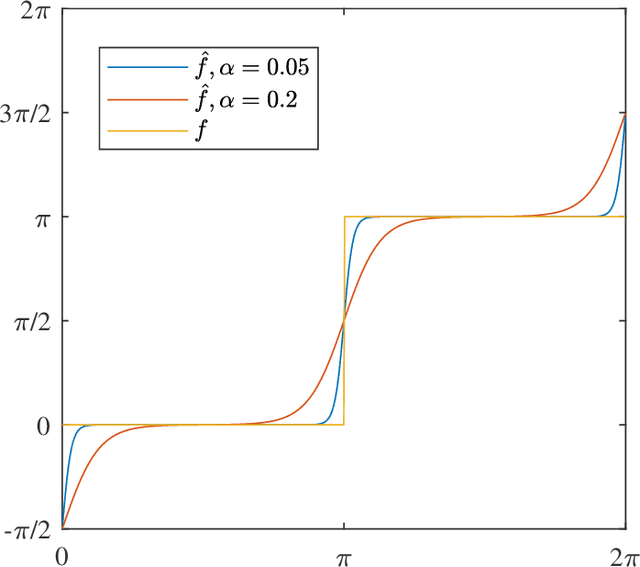

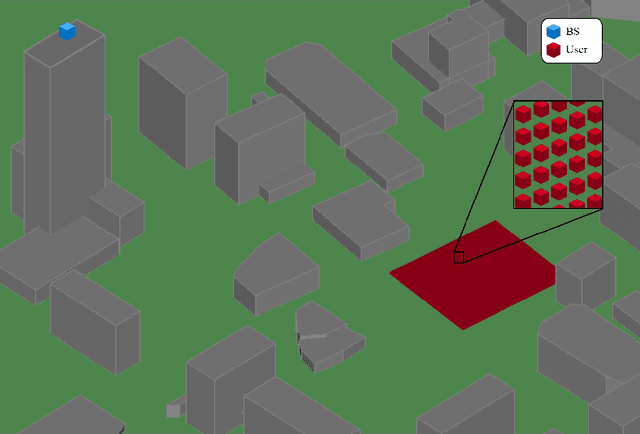

Abstract: In this paper, we consider a massive multiple-input-multiple-output (MIMO) downlink system that improves the hardware efficiency by dynamically selecting the antenna subarray and utilizing 1-bit phase shifters for hybrid beamforming. To maximize the spectral efficiency, we propose a novel deep unsupervised learning-based approach that avoids the computationally prohibitive process of acquiring training labels. The proposed design takes the channel matrix as input and consists of two convolutional neural networks (CNNs). To enable unsupervised training, the problem constraints are embedded in the neural networks: the first CNN adopts deep probabilistic sampling, while the second CNN features a quantization layer designed for 1-bit phase shifters. The two networks can be trained jointly without labels by sharing an unsupervised loss function. We next propose a phased training approach to promote the convergence of the proposed networks. Simulation results demonstrate the advantage of the proposed approach over conventional optimization-based algorithms in terms of both achieved rate and computational complexity.
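The 1-bit phase-shifter constraint can be made concrete with a small sketch. It assumes a single user and a single stream, and simply rounds the matched-filter phases to the nearest value in `{0, pi}`. That is a naive baseline for illustration, not the learned quantization layer from the paper; all names and sizes are hypothetical.

```python
import numpy as np

def quantize_one_bit(phases):
    """Map each phase to the nearest value in {0, pi}."""
    return np.where(np.cos(phases) >= 0.0, 0.0, np.pi)

def spectral_efficiency(channel, beam, snr):
    """Single-stream rate log2(1 + SNR * |h^H f|^2) in bit/s/Hz."""
    return np.log2(1.0 + snr * np.abs(np.vdot(channel, beam)) ** 2)

rng = np.random.default_rng(1)
n_ant = 32
channel = (rng.standard_normal(n_ant) + 1j * rng.standard_normal(n_ant)) / np.sqrt(2)

ideal_phases = np.angle(channel)                 # infinite-resolution phase shifters
one_bit_phases = quantize_one_bit(ideal_phases)

ideal_beam = np.exp(1j * ideal_phases) / np.sqrt(n_ant)
one_bit_beam = np.exp(1j * one_bit_phases) / np.sqrt(n_ant)

rate_ideal = spectral_efficiency(channel, ideal_beam, snr=10.0)
rate_one_bit = spectral_efficiency(channel, one_bit_beam, snr=10.0)
```

Comparing `rate_one_bit` with `rate_ideal` shows the loss caused by coarse phase resolution, which the joint design in the paper aims to limit.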

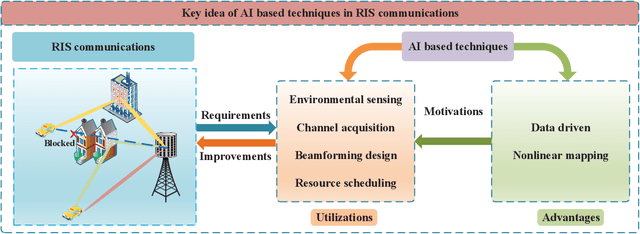

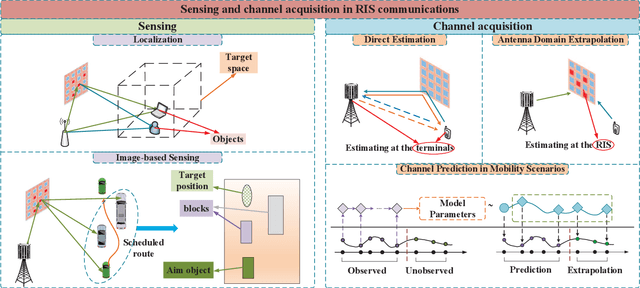

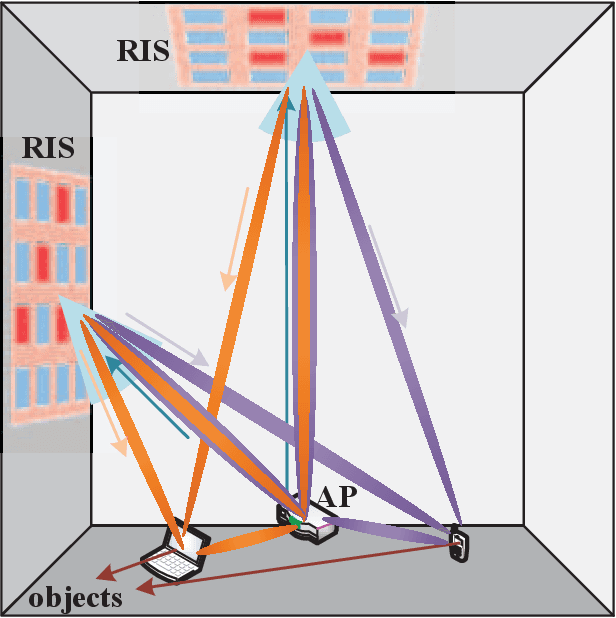

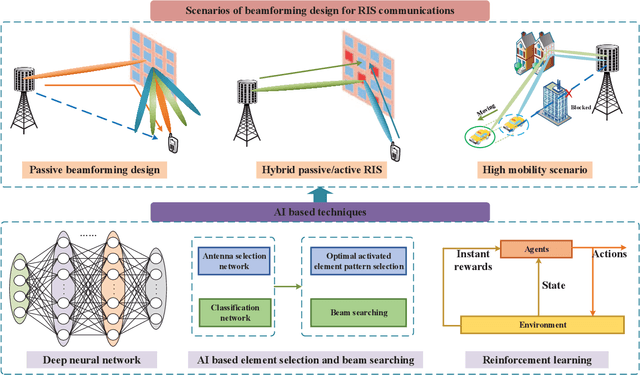

AIRIS: Artificial Intelligence Enhanced Signal Processing in Reconfigurable Intelligent Surface Communications

Jun 01, 2021

Abstract: Reconfigurable intelligent surface (RIS) is an emerging meta-surface that can provide additional communication links by reflecting signals, and it has been recognized as a strong candidate for 6G mobile communication systems. Meanwhile, it has recently been recognized that bringing artificial intelligence (AI) into RIS communications will greatly benefit the reconfiguration capacity and enhance the robustness to complicated transmission environments. Unlike conventional model-driven approaches, AI can also handle existing signal processing problems in a data-driven manner by extracting the inherent characteristics of real data. Hence, AI is particularly suitable for signal processing problems over RIS networks under non-ideal scenarios such as model mismatch, insufficient resources, hardware impairments, and dynamic transmissions. As one of the earliest survey papers, we introduce the merging of AI and RIS, called AIRIS, over various signal processing topics, including environmental sensing, channel acquisition, beamforming design, and resource scheduling. We also discuss the challenges of AIRIS and present some interesting future directions.

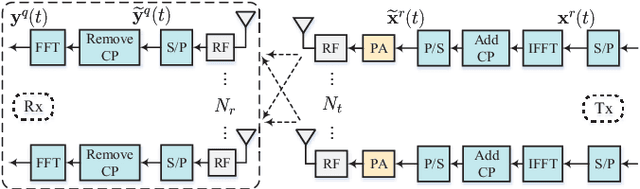

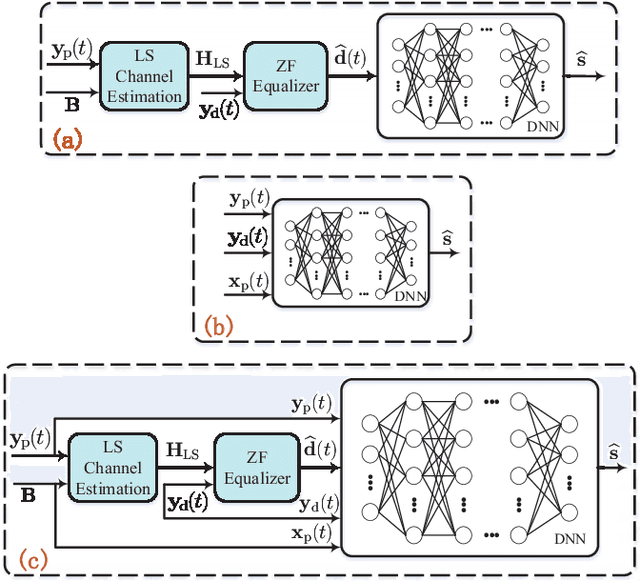

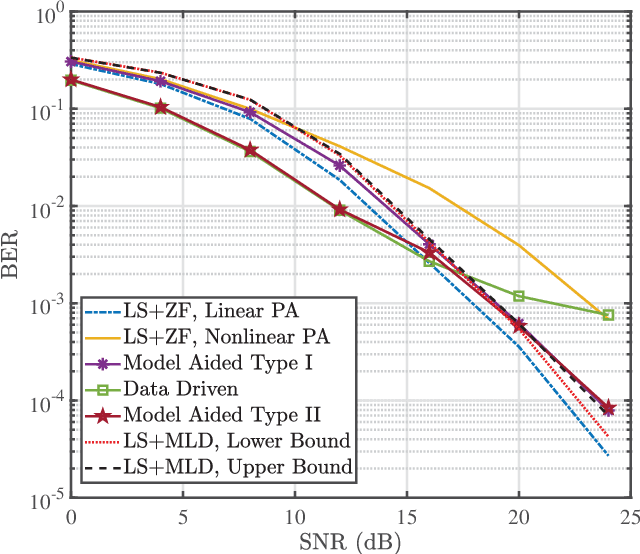

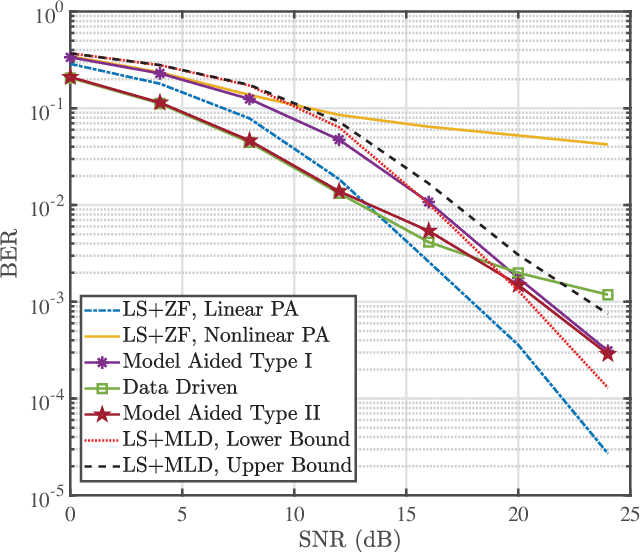

Model Aided Deep Learning Based MIMO OFDM Receiver With Nonlinear Power Amplifiers

May 30, 2021

Abstract: Multi-input multi-output orthogonal frequency division multiplexing (MIMO OFDM) is a key technology for mobile communication systems. However, due to the high peak-to-average power ratio (PAPR), the OFDM symbols may suffer from nonlinear distortions of the power amplifier (PA) at the transmitters, which degrades the channel estimation and detection performance of the receivers. To mitigate the clipping distortions at the receiver end, we leverage deep learning (DL) and devise a DL based receiver which is aided by the traditional least square (LS) channel estimation and the zero-forcing (ZF) equalization models. Moreover, a data driven DL based receiver without explicit channel estimation is proposed and combined with the model aided DL based receiver to further improve the performance. Simulation results show that the proposed model aided DL based receiver achieves a superior bit error rate and is robust to different levels of clipping distortion.
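The classical building blocks named in this abstract can be sketched as follows: a soft-limiter clipping model for the PA, LS channel estimation from known pilots, and ZF equalization. The flat 2x2 channel, the pilot matrix, and the clipping threshold are assumptions made for illustration, and the DL networks that refine these estimates are not shown.

```python
import numpy as np

def clip(signal, threshold):
    """Soft-limiter PA model: cap the magnitude at the threshold, keep the phase."""
    mag = np.abs(signal)
    scale = np.minimum(1.0, threshold / np.maximum(mag, 1e-12))
    return signal * scale

def ls_channel_estimate(pilots_tx, pilots_rx):
    """LS estimate of H from Y = H X, using the pseudo-inverse of the pilot matrix X."""
    return pilots_rx @ np.linalg.pinv(pilots_tx)

def zf_equalize(h_est, received):
    """Zero-forcing: apply the pseudo-inverse of the estimated channel."""
    return np.linalg.pinv(h_est) @ received

rng = np.random.default_rng(2)
n_tx, n_rx = 2, 2
h_true = (rng.standard_normal((n_rx, n_tx)) + 1j * rng.standard_normal((n_rx, n_tx))) / np.sqrt(2)

pilots_tx = np.array([[1, 1, 1, 1], [1, -1, 1, -1]], dtype=complex)  # orthogonal pilot rows
h_ls = ls_channel_estimate(pilots_tx, h_true @ pilots_tx)            # noiseless, unclipped pilots

qpsk = (rng.choice([-1, 1], (n_tx, 8)) + 1j * rng.choice([-1, 1], (n_tx, 8))) / np.sqrt(2)
detected = zf_equalize(h_ls, h_true @ qpsk)

# Clipping acts on the time-domain OFDM symbol before transmission.
time_symbol = np.fft.ifft(qpsk[0]) * np.sqrt(8)
clipped = clip(time_symbol, threshold=0.8)
```

Without noise or clipping, LS and ZF recover the channel and the symbols exactly; once `clip` is applied to the transmitted waveform, both steps become mismatched, which is the gap a learned receiver tries to close.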

* 6 pages, 4 figures. Submitted to 2021 IEEE Wireless Communications and Networking Conference (WCNC). Already published

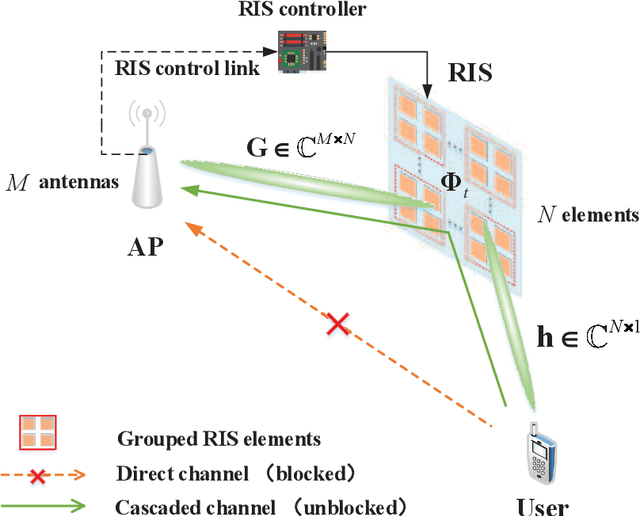

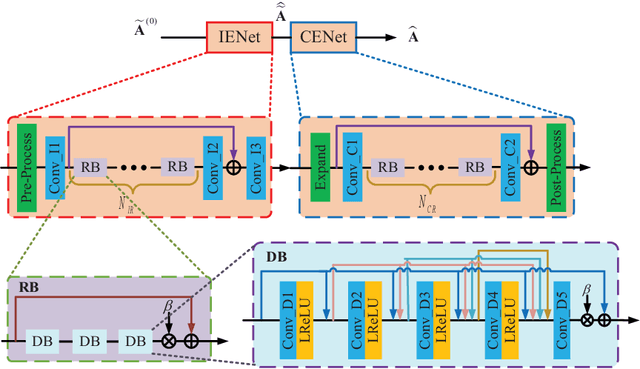

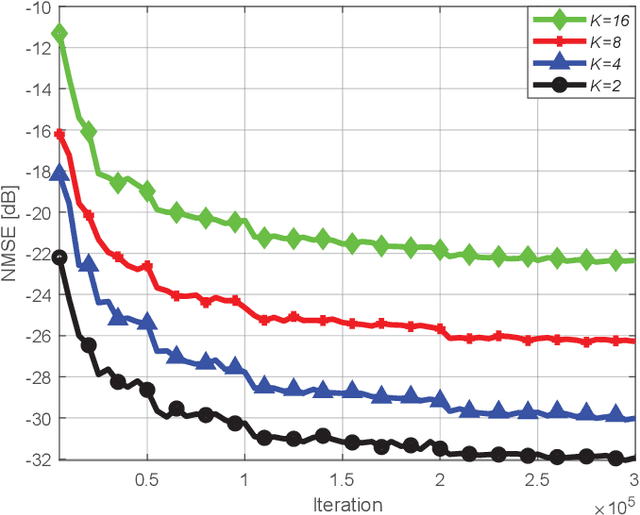

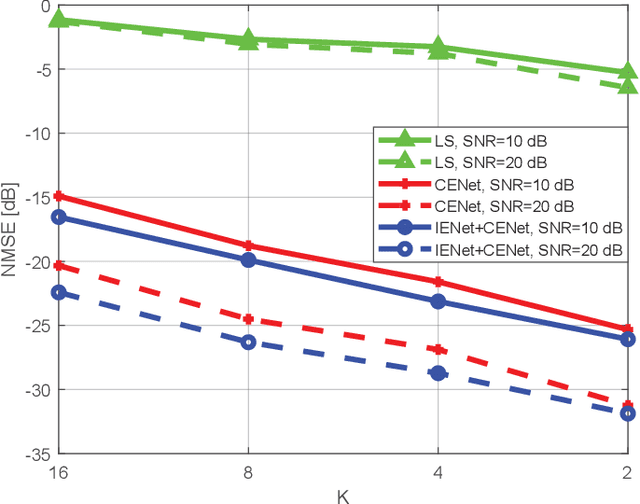

Deep Learning Based RIS Channel Extrapolation with Element-grouping

May 14, 2021

Abstract: Reconfigurable intelligent surface (RIS) is considered a revolutionary technology for future wireless communication networks. In this letter, we consider the acquisition of the cascaded channels, which is a challenging task due to the massive number of passive RIS elements. To reduce the pilot overhead, we adopt the element-grouping strategy, where all elements in a group share the same reflection coefficient and are assumed to have the same channel condition. We analyze the channel interference caused by the element-grouping strategy and further design two deep learning based networks. The first one aims to refine the partial channels by eliminating the interference, while the second one tries to extrapolate the full channels from the refined partial channels. We cascade the two networks and jointly train them. Simulation results show that the proposed scheme provides significant gain compared to the conventional element-grouping method without interference elimination.
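A toy version of element grouping is sketched below, under assumptions that are not from the paper: a single-antenna link, noiseless pilots, a DFT reflection pattern over groups, and groups formed from consecutive elements. It shows why grouping cuts the pilot count and why the conventional per-element estimate is only a group average.

```python
import numpy as np

rng = np.random.default_rng(3)
n_elem, group_size = 16, 4
n_group = n_elem // group_size

# Hypothetical per-element cascaded channel coefficients.
cascaded = (rng.standard_normal(n_elem) + 1j * rng.standard_normal(n_elem)) / np.sqrt(2)

# One pilot slot per group instead of one per element.
group_phase = np.fft.fft(np.eye(n_group))                    # (pilot slots, groups)
element_phase = np.repeat(group_phase, group_size, axis=1)   # same coefficient inside a group
received = element_phase @ cascaded                          # noiseless pilot observations

# The pilots only reveal the sum of the channels inside each group.
group_sum_est = np.linalg.solve(group_phase, received)

# Conventional grouping assumes identical channels within a group.
conventional = np.repeat(group_sum_est / group_size, group_size)
error = np.linalg.norm(conventional - cascaded)
```

The nonzero `error` comes from channel differences inside each group, which is the distortion a refinement and extrapolation stage would need to remove.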

Understanding Deep MIMO Detection

May 11, 2021

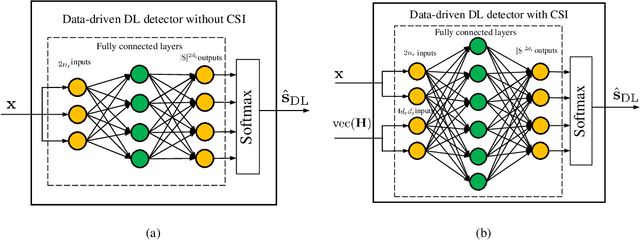

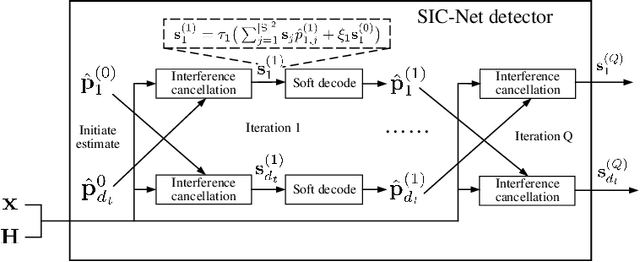

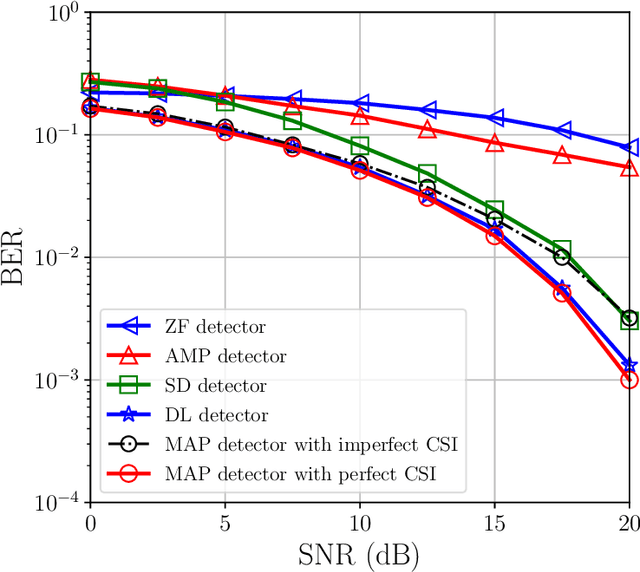

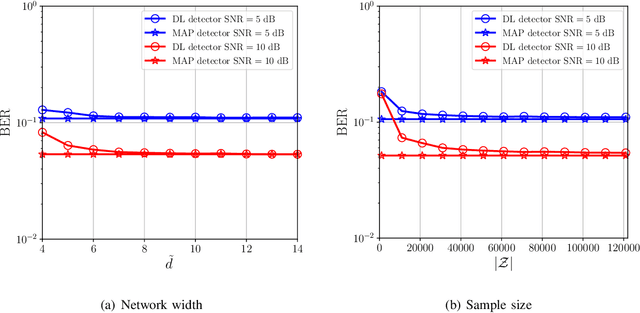

Abstract: Incorporating deep learning (DL) into multiple-input multiple-output (MIMO) detection has been deemed a promising technique for future wireless communications. However, most DL-based detection algorithms lack a theoretical interpretation of their internal mechanisms and cannot provide general guidance on network design. In this paper, we analyze the performance of DL-based MIMO detection to better understand its strengths and weaknesses. We investigate two different architectures: a data-driven DL detector with a neural network activated by the rectified linear unit (ReLU) function and a model-driven DL detector obtained by unfolding a traditional iterative detection algorithm. We demonstrate that the data-driven DL detector asymptotically approaches the maximum a posteriori (MAP) detector in various scenarios but requires enough training samples to converge in time-varying channels. On the other hand, the model-driven DL detector utilizes expert knowledge of the model to alleviate the impact of channels and establish a relatively reliable detection method with a small set of training data. Due to its model-specific property, the performance of the model-driven DL detector is largely determined by the underlying iterative detection algorithm, which is usually suboptimal compared to the MAP detector. Simulation results confirm our analytical results and demonstrate the effectiveness of DL-based MIMO detection for both linear and nonlinear signal systems.
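As a point of reference for the MAP benchmark mentioned above: with equiprobable symbols and Gaussian noise, MAP detection reduces to a minimum-distance (maximum likelihood) search. The sketch below runs that exhaustive search on a small, hypothetical real-valued BPSK system and contrasts it with a zero-forcing decision; it does not reproduce either DL detector analyzed in the paper.

```python
import itertools
import numpy as np

def ml_detect(h, y, alphabet):
    """Exhaustive search: argmin over candidate x of ||y - H x||^2."""
    best, best_cost = None, np.inf
    for candidate in itertools.product(alphabet, repeat=h.shape[1]):
        x = np.array(candidate)
        cost = np.linalg.norm(y - h @ x) ** 2
        if cost < best_cost:
            best, best_cost = x, cost
    return best

rng = np.random.default_rng(5)
h = rng.standard_normal((4, 3))                  # hypothetical 4x3 real channel
x_true = rng.choice([-1.0, 1.0], 3)              # BPSK symbols
y = h @ x_true + 0.05 * rng.standard_normal(4)   # low-noise observation

x_ml = ml_detect(h, y, (-1.0, 1.0))
x_zf = np.sign(np.linalg.pinv(h) @ y)            # suboptimal linear baseline
```

The search cost grows exponentially with the number of transmit streams, which is one reason learned and iterative detectors are studied as approximations.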

Model-Driven Deep Learning Based Channel Estimation and Feedback for Millimeter-Wave Massive Hybrid MIMO Systems

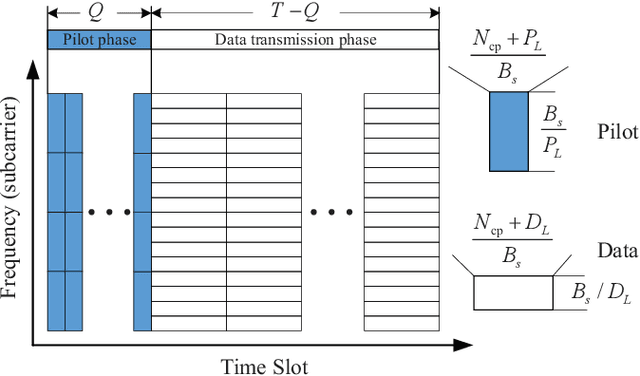

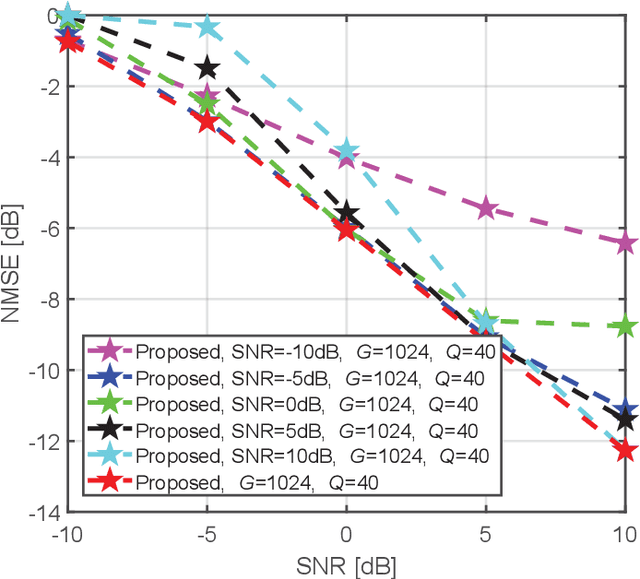

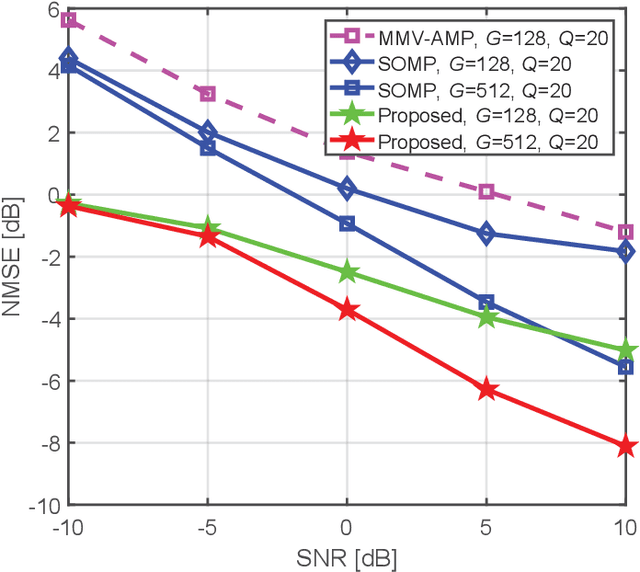

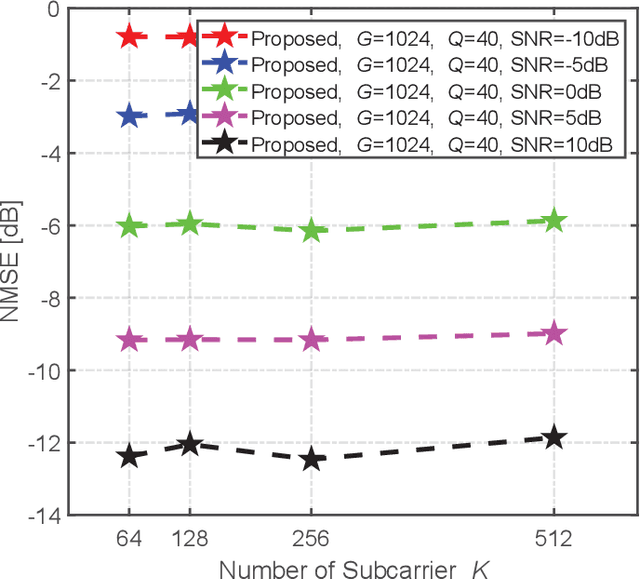

May 06, 2021

Abstract: This paper proposes a model-driven deep learning (MDDL)-based channel estimation and feedback scheme for wideband millimeter-wave (mmWave) massive hybrid multiple-input multiple-output (MIMO) systems, where the sparsity of the angle-delay domain channels is exploited to reduce the overhead. First, we consider uplink channel estimation for time-division duplexing systems. To reduce the uplink pilot overhead for estimating the high-dimensional channels from a limited number of radio frequency (RF) chains at the base station (BS), we propose to jointly train the phase shift network and the channel estimator as an auto-encoder. In particular, by exploiting the channels' structured sparsity from an a priori model and learning the integrated trainable parameters from the data samples, the proposed multiple-measurement-vectors learned approximate message passing (MMV-LAMP) network with the devised redundant dictionary can jointly recover multiple subcarriers' channels with significantly enhanced performance. Moreover, we consider downlink channel estimation and feedback for frequency-division duplexing systems. Similarly, the pilots at the BS and the channel estimator at the users can be jointly trained as an encoder and a decoder, respectively. In addition, to further reduce the channel feedback overhead, only the received pilots on part of the subcarriers are fed back to the BS, which can exploit the MMV-LAMP network to reconstruct the spatial-frequency channel matrix. Numerical results show that the proposed MDDL-based channel estimation and feedback scheme outperforms the state-of-the-art approaches.
