Darwin G. Caldwell

Department of Advanced Robotics, Istituto Italiano di Tecnologia

Impact of a Lower Limb Exosuit Anchor Points on Energetics and Biomechanics

Jul 31, 2025

Abstract:Anchor point placement is a crucial yet often overlooked aspect of exosuit design since it determines how forces interact with the human body. This work analyzes the impact of different anchor point positions on gait kinematics, muscular activation and energetic consumption. A total of six experiments were conducted with 11 subjects wearing the XoSoft exosuit, which assists hip flexion in five configurations. Subjects were instrumented with an IMU-based motion tracking system, EMG sensors, and a mask to measure metabolic consumption. The results show that positioning the knee anchor point on the posterior side while keeping the hip anchor on the anterior part can reduce muscle activation in the hip flexors by up to 10.21% and metabolic expenditure by up to 18.45%. Although the hip was the only assisted joint, all configurations also introduced changes in knee and ankle kinematics. Overall, no single configuration was optimal across all subjects, suggesting that a personalized approach is necessary to transmit the assistance forces optimally. These findings emphasize that anchor point position does indeed have a significant impact on exoskeleton effectiveness and efficiency. However, these optimal positions are subject-specific to the exosuit design, and there is a strong need for future work to tailor musculoskeletal models to individual characteristics and validate these results in clinical populations.

* 12 pages, 10 figures

Enhancing Human-Robot Collaboration: A Sim2Real Domain Adaptation Algorithm for Point Cloud Segmentation in Industrial Environments

Jun 11, 2025

Abstract:The robust interpretation of 3D environments is crucial for human-robot collaboration (HRC) applications, where safety and operational efficiency are paramount. Semantic segmentation plays a key role in this context by enabling a precise and detailed understanding of the environment. Because effective semantic segmentation demands large amounts of annotated real-world industrial data, this paper introduces a pioneering approach to Sim2Real domain adaptation for semantic segmentation of 3D point cloud data, specifically tailored for HRC. Our focus is on developing a network that robustly transitions from simulated environments to real-world applications, thereby enhancing its practical utility and impact on safe HRC. In this work, we propose a dual-stream network architecture (FUSION) combining Dynamic Graph Convolutional Neural Networks (DGCNN) and Convolutional Neural Networks (CNN) augmented with residual layers as a Sim2Real domain adaptation algorithm for an industrial environment. The proposed model was evaluated on real-world HRC setups and simulated industrial point clouds. It improved on state-of-the-art performance, achieving a segmentation accuracy of 97.76%, and showed superior robustness compared to existing methods.

Real-time Fall Prevention system for the Next-generation of Workers

May 30, 2025

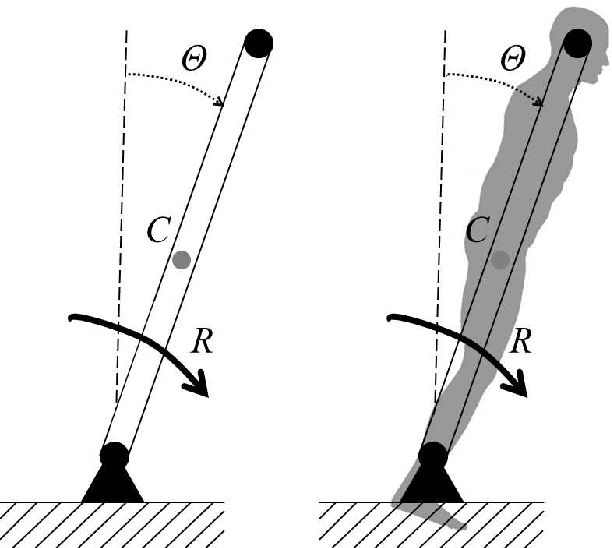

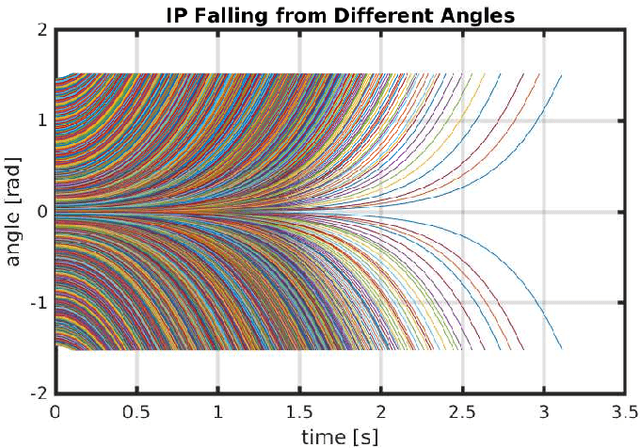

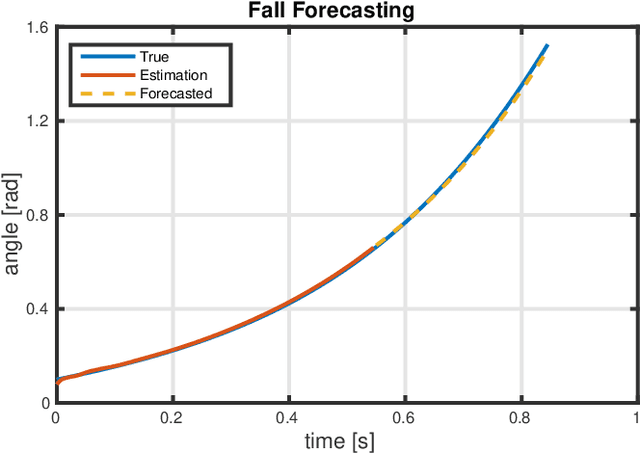

Abstract:Developing a general-purpose wearable real-time fall-detection system is still a challenging task, especially for healthy and strong subjects, such as industrial workers who work in harsh environments. In this work, we present a hybrid approach for fall detection and prevention, which uses the dynamic model of an inverted pendulum to generate simulations of falling that are then fed to a deep learning framework. The output is a signal to activate a fall mitigation mechanism when the subject is at risk of harm. The advantage of this approach is that abstracted models can be used to efficiently generate training data for thousands of different subjects with different initial falling conditions, something that is practically impossible with real experiments. This approach is suitable for a specific type of fall, where the subjects fall without changing their initial configuration significantly. It is the first step toward a general-purpose wearable device that aims to reduce fall-associated injuries in industrial environments and thereby improve the safety of workers.
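To make the data-generation idea concrete, here is a minimal sketch of how simulated falls could be produced from an inverted pendulum. It is not the authors' implementation: it assumes the simplest point-mass pendulum, theta'' = (g / length) * sin(theta), with the angle measured from vertical, and it treats the pendulum length as a stand-in for subject variation. The names `simulate_fall` and `make_dataset` and all parameter values are hypothetical.

```python
import math

def simulate_fall(theta0, omega0, length=1.0, g=9.81, dt=1e-3, t_max=10.0):
    """Integrate theta'' = (g / length) * sin(theta) with semi-implicit Euler.

    theta is the lean angle from vertical (rad), omega its rate (rad/s).
    Integration stops when the pendulum is horizontal or t_max is reached.
    Returns a list of (time, theta, omega) samples.
    """
    theta, omega, t = theta0, omega0, 0.0
    trajectory = [(t, theta, omega)]
    while theta < math.pi / 2 and t < t_max:
        omega += (g / length) * math.sin(theta) * dt  # update rate first
        theta += omega * dt                           # then the angle
        t += dt
        trajectory.append((t, theta, omega))
    return trajectory

def make_dataset(lengths, initial_angles, initial_rates):
    """Sweep pendulum lengths and initial conditions (all values are assumptions)."""
    samples = []
    for length in lengths:
        for theta0 in initial_angles:
            for omega0 in initial_rates:
                traj = simulate_fall(theta0, omega0, length=length)
                samples.append({
                    "length": length,
                    "theta0": theta0,
                    "omega0": omega0,
                    "fall_time": traj[-1][0],
                    "trajectory": traj,
                })
    return samples

dataset = make_dataset(
    lengths=[0.9, 1.0, 1.1],
    initial_angles=[0.05, 0.2],
    initial_rates=[0.0, 0.5],
)
```

Each trajectory could then serve as one training sequence for a learning model. A sweep like this is cheap to widen to thousands of parameter combinations, which is the advantage the abstract points to.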

How can AI reduce wrist injuries in the workplace?

May 30, 2025

Abstract:This paper explores the development of a control and sensor strategy for an industrial wearable wrist exoskeleton by classifying and predicting workers' actions. The study evaluates the correlation between exerted force and effort intensity, along with sensor strategy optimization, for design purposes. Using data from six healthy subjects in a manufacturing plant, this paper presents EMG-based models for wrist motion classification and force prediction. Wrist motion recognition is achieved through a pattern recognition algorithm developed with surface EMG data from an 8-channel EMG sensor (Myo Armband), while a force regression model uses wrist and hand force measurements from a commercial handheld dynamometer (Vernier GoDirect Hand Dynamometer). This control strategy forms the foundation for a streamlined exoskeleton architecture designed for industrial applications, focusing on simplicity, reduced costs, and minimal sensor use while ensuring reliable and effective assistance.

* Pages 569-580

How can AI reduce fall injuries in the workplace?

May 30, 2025

Abstract:Fall-caused injuries are common in all types of work environments, including offices. They are the main cause of absences longer than three days, especially for small and medium-sized businesses (SMEs). However, data, data amount, data heterogeneity, and stringent processing time constraints continue to pose challenges to real-time fall detection. This work proposes a new approach based on a recurrent neural network (RNN) for Fall Detection and a Kolmogorov-Arnold Network (KAN) to estimate the time of impact of the fall. The approach is tested on SisFall, a dataset consisting of 2706 Activities of Daily Living (ADLs) and 1798 falls recorded by three sensors. The results show that the proposed approach achieves an average TPR of 82.6% and TNR of 98.4% for fall sequences and 94.4% in ADL. Besides, the Root Mean Squared Error of the estimated time of impact is approximately 160 ms.

* Pages 557-568
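The fall-detection abstract above reports its results as TPR, TNR, and RMSE. As a reminder of how these are usually defined, here is a small sketch using the standard formulas. It is not taken from the paper: the function names and the convention that label 1 means "fall" and 0 means "ADL" are assumptions made for illustration.

```python
import math

def tpr_tnr(y_true, y_pred):
    """Return (TPR, TNR) for binary labels, where 1 = fall and 0 = ADL (assumed).

    TPR = TP / (TP + FN), TNR = TN / (TN + FP).
    Both classes must appear in y_true, otherwise a denominator is zero.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)

def rmse(predicted, actual):
    """Root mean squared error, e.g. between estimated and true impact times."""
    squared = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared) / len(squared))

# Toy labels, purely illustrative (not SisFall data).
tpr, tnr = tpr_tnr([1, 1, 0, 0, 0], [1, 0, 0, 0, 1])
```

In this toy example one of two falls is detected and two of three ADL windows are correctly rejected, so TPR is 1/2 and TNR is 2/3.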

Ergonomic Assessment of Work Activities for an Industrial-oriented Wrist Exoskeleton

May 27, 2025

Abstract:Musculoskeletal disorders (MSDs) are the most common cause of work-related injuries and lost production, involving approximately 1.7 billion people worldwide, and mainly affect the low back (more than 50%) and upper limbs (more than 40%). They have a profound effect on both the workers affected and the company. This paper provides an ergonomic assessment of different work activities in a horse saddle-making company, involving 5 workers. The assessment guides the design of a wrist exoskeleton to reduce the risk of musculoskeletal diseases wherever it is impossible to automate the production process. The evaluation uses both subjective and objective measurements: questionnaires and muscle activation measured with sEMG sensors, respectively.

Recognition of Physiological Patterns during Activities of Daily Living Using Wearable Biosignal Sensors

May 27, 2025

Abstract:A key aspect of developing fall prevention systems is the early prediction of a fall before it occurs. This paper presents a statistical overview of results obtained by analyzing 22 activities of daily living to recognize physiological patterns and estimate the risk of an imminent fall. The results demonstrate distinctive patterns between high-intensity and low-intensity activity using EMG, ECG, and respiration sensors, also indicating the presence of a proportional trend between movement velocity and muscle activity. These outcomes highlight the potential benefits of using these sensors in the future to direct the development of an activity recognition and risk prediction framework for physiological phenomena that can cause fall injuries.

GARField: Addressing the visual Sim-to-Real gap in garment manipulation with mesh-attached radiance fields

Oct 07, 2024

Abstract:While humans intuitively manipulate garments and other textile items swiftly and accurately, it is a significant challenge for robots. A factor crucial to human performance is the ability to imagine, a priori, the intended result of the manipulation intents and hence develop predictions on the garment pose. This allows us to plan from highly obstructed states, adapt our plans as we collect more information and react swiftly to unforeseen circumstances. Robots, on the other hand, struggle to establish such intuitions and form tight links between plans and observations. This can be attributed in part to the high cost of obtaining densely labelled data for textile manipulation, both in quality and quantity. The problem of data collection is a long-standing issue in data-based approaches to garment manipulation. Currently, the generation of high-quality, labelled garment manipulation data is mainly attempted through advanced data capture procedures that create simplified state estimations from real-world observations. In this work, however, we propose to generate real-world observations from given object states. To achieve this, we present GARField (Garment Attached Radiance Field), a differentiable rendering architecture allowing data generation from simulated states stored as triangle meshes. Code will be available at https://ddonatien.github.io/garfield-website/

Kinematically-Decoupled Impedance Control for Fast Object Visual Servoing and Grasping on Quadruped Manipulators

Jul 10, 2023

Abstract:We propose a control pipeline for SAG (Searching, Approaching, and Grasping) of objects, based on a decoupled arm kinematic chain and impedance control, which integrates image-based visual servoing (IBVS). The kinematic decoupling allows for fast end-effector motions and recovery that leads to robust visual servoing. The whole approach and pipeline can be generalized for any mobile platform (wheeled or tracked vehicles), but is most suitable for dynamically moving quadruped manipulators thanks to their reactivity against disturbances. The compliance of the impedance controller makes the robot safer for interactions with humans and the environment. We demonstrate the performance and robustness of the proposed approach with various experiments on our 140 kg HyQReal quadruped robot equipped with a 7-DoF manipulator arm. The experiments consider dynamic locomotion, tracking under external disturbances, and fast motions of the target object.
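The compliance mentioned in this abstract comes from the general idea of impedance control, in which the end-effector behaves like a spring-damper around its target. The sketch below shows only the generic textbook law F = K (x_des - x) + D (v_des - v), applied per axis. It is not the paper's controller, and the function name and gain values are hypothetical.

```python
def impedance_force(x_des, x, v_des, v, stiffness, damping):
    """Per-axis spring-damper force: F = K * (x_des - x) + D * (v_des - v).

    All arguments are equal-length sequences (one entry per Cartesian axis).
    Lower stiffness gives a softer, more compliant response to contact.
    """
    return [
        k * (xd - xi) + d * (vd - vi)
        for xd, xi, vd, vi, k, d in zip(x_des, x, v_des, v, stiffness, damping)
    ]

# Illustrative values only: a 0.1 m position error along the first axis.
force = impedance_force(
    x_des=[0.1, 0.0, 0.0],
    x=[0.0, 0.0, 0.0],
    v_des=[0.0, 0.0, 0.0],
    v=[0.0, 0.0, 0.0],
    stiffness=[100.0, 100.0, 100.0],
    damping=[10.0, 10.0, 10.0],
)
```

With a stiffness of 100 N/m, the 0.1 m error yields a restoring force of about 10 N on that axis. The force scales with the error instead of rigidly enforcing position, which is what makes contact with people and the environment gentler.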

Reactive Landing Controller for Quadruped Robots

May 12, 2023

Abstract:Quadruped robots are machines intended for challenging and harsh environments. Despite the progress in locomotion strategy, safely recovering from unexpected falls or planned drops is still an open problem. It becomes even more difficult when high horizontal velocities are involved. In this work, we propose an optimization-based reactive Landing Controller that uses only proprioceptive measurements for torque-controlled quadruped robots that free-fall onto flat horizontal ground, knowing neither the distance to the landing surface nor the flight time. Based on an estimate of the Center of Mass horizontal velocity, the method uses the Variable Height Springy Inverted Pendulum model to continuously recompute the feet positions while the robot is falling. In this way, the quadruped is ready to attain a successful landing in all directions, even in the presence of significant horizontal velocities. The method is shown to dramatically enlarge the region of horizontal velocities that can be handled, compared with a naive approach that keeps the feet still during the airborne stage. To the best of our knowledge, this is the first time that a quadruped robot can successfully recover from falls with horizontal velocities up to 3 m/s in simulation. Experiments prove that the platform used, Go1, can successfully attain a stable standing configuration from falls with various horizontal velocities and different angular perturbations.
