Dhruv Rohatgi

Is a Good Foundation Necessary for Efficient Reinforcement Learning? The Computational Role of the Base Model in Exploration

Mar 10, 2025

Abstract:Language model alignment (or, reinforcement learning) techniques that leverage active exploration -- deliberately encouraging the model to produce diverse, informative responses -- offer the promise of super-human capabilities. However, current understanding of algorithm design primitives for computationally efficient exploration with language models is limited. To better understand how to leverage access to powerful pre-trained generative models to improve the efficiency of exploration, we introduce a new computational framework for RL with language models, in which the learner interacts with the model through a sampling oracle. Focusing on the linear softmax model parameterization, we provide new results that reveal the computational-statistical tradeoffs of efficient exploration: 1. Necessity of coverage: Coverage refers to the extent to which the pre-trained model covers near-optimal responses -- a form of hidden knowledge. We show that coverage, while not necessary for data efficiency, lower bounds the runtime of any algorithm in our framework. 2. Inference-time exploration: We introduce a new algorithm, SpannerSampling, which obtains optimal data efficiency and is computationally efficient whenever the pre-trained model enjoys sufficient coverage, matching our lower bound. SpannerSampling leverages inference-time computation with the pre-trained model to reduce the effective search space for exploration. 3. Insufficiency of training-time interventions: We contrast the result above by showing that training-time interventions that produce proper policies cannot achieve similar guarantees in polynomial time. 4. Computational benefits of multi-turn exploration: Finally, we show that under additional representational assumptions, one can achieve improved runtime (replacing sequence-level coverage with token-level coverage) through multi-turn exploration.
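As a rough illustration of the linear softmax parameterization named in this abstract, the sketch below computes a policy of the form p(y) proportional to exp(<theta, phi(y)>) over a tiny finite response set. The feature vectors, the parameter values, and the density-ratio quantity used as a coverage proxy are all assumptions made for illustration; they are not taken from the paper.

```python
import math

def linear_softmax(theta, features):
    """Return probabilities p(y) proportional to exp(<theta, phi(y)>)."""
    logits = [sum(t * f for t, f in zip(theta, phi)) for phi in features]
    m = max(logits)  # subtract the max for numerical stability
    weights = [math.exp(l - m) for l in logits]
    z = sum(weights)
    return [w / z for w in weights]

# Hypothetical toy setup: three candidate responses with 2-d features.
features = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
base = linear_softmax((0.0, 0.0), features)    # uniform stand-in for a pre-trained model
target = linear_softmax((2.0, 2.0), features)  # a sharper policy in the same class

# Largest density ratio of the target to the base model: one illustrative
# (assumed) way to measure how well the base model covers the target.
coverage_ratio = max(p / q for p, q in zip(target, base))
```

A large ratio here means the base model rarely samples a response the target policy favors, which is the intuition behind coverage in the abstract.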

Computational-Statistical Tradeoffs at the Next-Token Prediction Barrier: Autoregressive and Imitation Learning under Misspecification

Feb 18, 2025

Abstract:Next-token prediction with the logarithmic loss is a cornerstone of autoregressive sequence modeling, but, in practice, suffers from error amplification, where errors in the model compound and generation quality degrades as sequence length $H$ increases. From a theoretical perspective, this phenomenon should not appear in well-specified settings, and, indeed, a growing body of empirical work hypothesizes that misspecification, where the learner is not sufficiently expressive to represent the target distribution, may be the root cause. Under misspecification -- where the goal is to learn as well as the best-in-class model up to a multiplicative approximation factor $C\geq 1$ -- we confirm that $C$ indeed grows with $H$ for next-token prediction, lending theoretical support to this empirical hypothesis. We then ask whether this mode of error amplification is avoidable algorithmically, computationally, or information-theoretically, and uncover inherent computational-statistical tradeoffs. We show: (1) Information-theoretically, one can avoid error amplification and achieve $C=O(1)$. (2) Next-token prediction can be made robust so as to achieve $C=\tilde O(H)$, representing moderate error amplification, but this is an inherent barrier: any next-token prediction-style objective must suffer $C=\Omega(H)$. (3) For the natural testbed of autoregressive linear models, no computationally efficient algorithm can achieve sub-polynomial approximation factor $C=e^{(\log H)^{1-\Omega(1)}}$; however, at least for binary token spaces, one can smoothly trade compute for statistical power and improve on $C=\Omega(H)$ in sub-exponential time. Our results have consequences in the more general setting of imitation learning, where the widely-used behavior cloning algorithm generalizes next-token prediction.

Necessary and Sufficient Oracles: Toward a Computational Taxonomy For Reinforcement Learning

Feb 12, 2025

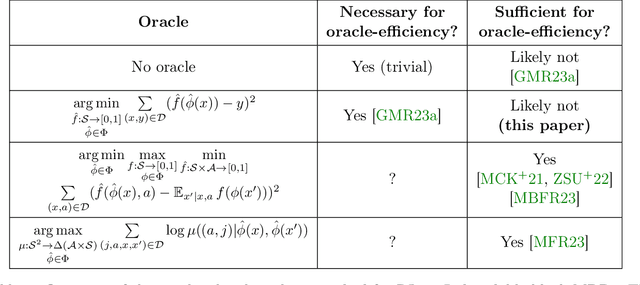

Abstract:Algorithms for reinforcement learning (RL) in large state spaces crucially rely on supervised learning subroutines to estimate objects such as value functions or transition probabilities. Since only the simplest supervised learning problems can be solved provably and efficiently, practical performance of an RL algorithm depends on which of these supervised learning "oracles" it assumes access to (and how they are implemented). But which oracles are better or worse? Is there a minimal oracle? In this work, we clarify the impact of the choice of supervised learning oracle on the computational complexity of RL, as quantified by the oracle strength. First, for the task of reward-free exploration in Block MDPs in the standard episodic access model -- a ubiquitous setting for RL with function approximation -- we identify two-context regression as a minimal oracle, i.e. an oracle that is both necessary and sufficient (under a mild regularity assumption). Second, we identify one-context regression as a near-minimal oracle in the stronger reset access model, establishing a provable computational benefit of resets in the process. Third, we broaden our focus to Low-Rank MDPs, where we give cryptographic evidence that the analogous oracle from the Block MDP setting is insufficient.

Self-Improvement in Language Models: The Sharpening Mechanism

Dec 02, 2024

Abstract:Recent work in language modeling has raised the possibility of self-improvement, where a language model evaluates and refines its own generations to achieve higher performance without external feedback. It is impossible for this self-improvement to create information that is not already in the model, so why should we expect that this will lead to improved capabilities? We offer a new perspective on the capabilities of self-improvement through a lens we refer to as sharpening. Motivated by the observation that language models are often better at verifying response quality than they are at generating correct responses, we formalize self-improvement as using the model itself as a verifier during post-training in order to ``sharpen'' the model to one placing large mass on high-quality sequences, thereby amortizing the expensive inference-time computation of generating good sequences. We begin by introducing a new statistical framework for sharpening in which the learner aims to sharpen a pre-trained base policy via sample access, and establish fundamental limits. Then we analyze two natural families of self-improvement algorithms based on SFT and RLHF.
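The inference-time computation that sharpening is meant to amortize can be pictured with a best-of-N sketch: draw several responses from the base model and keep the one the model itself scores highest. Everything below is a toy assumption for illustration, including the four-response base distribution and the choice of the model's own log-probability as the self-reward; the abstract does not specify these details.

```python
import math
import random

# Hypothetical base model: a fixed distribution over four candidate responses.
base_probs = {"a": 0.5, "b": 0.3, "c": 0.15, "d": 0.05}

def self_reward(response):
    """Score a response with the model's own log-probability (an assumed choice)."""
    return math.log(base_probs[response])

def best_of_n(n, rng):
    """Draw n samples from the base model and keep the highest self-reward one."""
    responses = list(base_probs)
    weights = [base_probs[r] for r in responses]
    samples = rng.choices(responses, weights=weights, k=n)
    return max(samples, key=self_reward), samples

rng = random.Random(0)
picked, samples = best_of_n(8, rng)
```

Running this procedure costs N samples per query. A sharpened model, in the sense of the abstract, would place large mass on such high-scoring responses directly, without the repeated sampling.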

Towards characterizing the value of edge embeddings in Graph Neural Networks

Oct 13, 2024

Abstract:Graph neural networks (GNNs) are the dominant approach to solving machine learning problems defined over graphs. Despite much theoretical and empirical work in recent years, our understanding of finer-grained aspects of architectural design for GNNs remains impoverished. In this paper, we consider the benefits of architectures that maintain and update edge embeddings. On the theoretical front, under a suitable computational abstraction for a layer in the model, as well as memory constraints on the embeddings, we show that there are natural tasks on graphical models for which architectures leveraging edge embeddings can be much shallower. Our techniques are inspired by results on time-space tradeoffs in theoretical computer science. Empirically, we show architectures that maintain edge embeddings almost always improve on their node-based counterparts -- frequently significantly so in topologies that have ``hub'' nodes.

Online Control in Population Dynamics

Jun 03, 2024

Abstract:The study of population dynamics originated with early sociological works (Malthus, 1872) but has since extended into many fields, including biology, epidemiology, evolutionary game theory, and economics. Most studies on population dynamics focus on the problem of prediction rather than control. Existing mathematical models for population control are often restricted to specific, noise-free dynamics, while real-world population changes can be complex and adversarial. To address this gap, we propose a new framework based on the paradigm of online control. We first characterize a set of linear dynamical systems that can naturally model evolving populations. We then give an efficient gradient-based controller for these systems, with near-optimal regret bounds with respect to a broad class of linear policies. Our empirical evaluations demonstrate the effectiveness of the proposed algorithm for population control even in non-linear models such as SIR and replicator dynamics.

Exploration is Harder than Prediction: Cryptographically Separating Reinforcement Learning from Supervised Learning

Apr 04, 2024

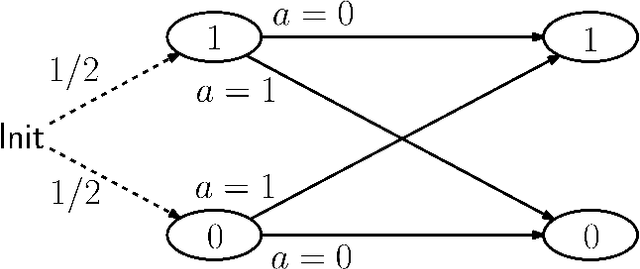

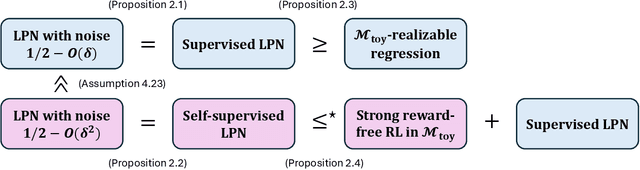

Abstract:Supervised learning is often computationally easy in practice. But to what extent does this mean that other modes of learning, such as reinforcement learning (RL), ought to be computationally easy by extension? In this work we show the first cryptographic separation between RL and supervised learning, by exhibiting a class of block MDPs and associated decoding functions where reward-free exploration is provably computationally harder than the associated regression problem. We also show that there is no computationally efficient algorithm for reward-directed RL in block MDPs, even when given access to an oracle for this regression problem. It is known that being able to perform regression in block MDPs is necessary for finding a good policy; our results suggest that it is not sufficient. Our separation lower bound uses a new robustness property of the Learning Parities with Noise (LPN) hardness assumption, which is crucial in handling the dependent nature of RL data. We argue that separations and oracle lower bounds, such as ours, are a more meaningful way to prove hardness of learning because the constructions better reflect the practical reality that supervised learning by itself is often not the computational bottleneck.

Lasso with Latents: Efficient Estimation, Covariate Rescaling, and Computational-Statistical Gaps

Feb 23, 2024

Abstract:It is well-known that the statistical performance of Lasso can suffer significantly when the covariates of interest have strong correlations. In particular, the prediction error of Lasso becomes much worse than computationally inefficient alternatives like Best Subset Selection. Due to a large conjectured computational-statistical tradeoff in the problem of sparse linear regression, it may be impossible to close this gap in general. In this work, we propose a natural sparse linear regression setting where strong correlations between covariates arise from unobserved latent variables. In this setting, we analyze the problem caused by strong correlations and design a surprisingly simple fix. While Lasso with standard normalization of covariates fails, there exists a heterogeneous scaling of the covariates with which Lasso will suddenly obtain strong provable guarantees for estimation. Moreover, we design a simple, efficient procedure for computing such a "smart scaling." The sample complexity of the resulting "rescaled Lasso" algorithm incurs (in the worst case) quadratic dependence on the sparsity of the underlying signal. While this dependence is not information-theoretically necessary, we give evidence that it is optimal among the class of polynomial-time algorithms, via the method of low-degree polynomials. This argument reveals a new connection between sparse linear regression and a special version of sparse PCA with a near-critical negative spike. The latter problem can be thought of as a real-valued analogue of learning a sparse parity. Using it, we also establish the first computational-statistical gap for the closely related problem of learning a Gaussian Graphical Model.

Exploring and Learning in Sparse Linear MDPs without Computationally Intractable Oracles

Sep 19, 2023

Abstract:The key assumption underlying linear Markov Decision Processes (MDPs) is that the learner has access to a known feature map $\phi(x, a)$ that maps state-action pairs to $d$-dimensional vectors, and that the rewards and transitions are linear functions in this representation. But where do these features come from? In the absence of expert domain knowledge, a tempting strategy is to use the ``kitchen sink'' approach and hope that the true features are included in a much larger set of potential features. In this paper we revisit linear MDPs from the perspective of feature selection. In a $k$-sparse linear MDP, there is an unknown subset $S \subset [d]$ of size $k$ containing all the relevant features, and the goal is to learn a near-optimal policy in only poly$(k,\log d)$ interactions with the environment. Our main result is the first polynomial-time algorithm for this problem. In contrast, earlier works either made prohibitively strong assumptions that obviated the need for exploration, or required solving computationally intractable optimization problems. Along the way we introduce the notion of an emulator: a succinct approximate representation of the transitions that suffices for computing certain Bellman backups. Since linear MDPs are a non-parametric model, it is not even obvious whether polynomial-sized emulators exist. We show that they do exist and can be computed efficiently via convex programming. As a corollary of our main result, we give an algorithm for learning a near-optimal policy in block MDPs whose decoding function is a low-depth decision tree; the algorithm runs in quasi-polynomial time and takes a polynomial number of samples. This can be seen as a reinforcement learning analogue of classic results in computational learning theory. Furthermore, it gives a natural model where improving the sample complexity via representation learning is computationally feasible.

Provable benefits of score matching

Jun 03, 2023

Abstract:Score matching is an alternative to maximum likelihood (ML) for estimating a probability distribution parametrized up to a constant of proportionality. By fitting the ``score'' of the distribution, it sidesteps the need to compute this constant of proportionality (which is often intractable). While score matching and variants thereof are popular in practice, the benefits and tradeoffs relative to maximum likelihood -- both computational and statistical -- are not well understood. In this work, we give the first example of a natural exponential family of distributions such that the score matching loss is computationally efficient to optimize, and has a comparable statistical efficiency to ML, while the ML loss is intractable to optimize using a gradient-based method. The family consists of exponentials of polynomials of fixed degree, and our result can be viewed as a continuous analogue of recent developments in the discrete setting. Precisely, we show: (1) Designing a zeroth-order or first-order oracle for optimizing the maximum likelihood loss is NP-hard. (2) Maximum likelihood has a statistical efficiency polynomial in the ambient dimension and the radius of the parameters of the family. (3) Minimizing the score matching loss is both computationally and statistically efficient, with complexity polynomial in the ambient dimension.
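To make concrete how score matching avoids the normalizing constant, here is a minimal one-dimensional sketch for the simplest member of the "exponential of a polynomial" family, p(x) proportional to exp(-theta * x**2 / 2). The function name, sample size, and ground-truth value are assumptions chosen for this toy check; the paper's results concern higher-degree, multivariate families.

```python
import random

def score_matching_precision(samples):
    """Closed-form minimizer of the score matching loss for
    p(x) proportional to exp(-theta * x**2 / 2).

    The model score is d/dx log p(x) = -theta * x and its derivative is -theta,
    so the empirical loss is mean(0.5 * theta**2 * x**2 - theta), which is
    minimized at theta = 1 / mean(x**2). No normalizing constant is needed.
    """
    second_moment = sum(x * x for x in samples) / len(samples)
    return 1.0 / second_moment

rng = random.Random(0)
sigma = 2.0  # assumed ground-truth standard deviation for this toy check
data = [rng.gauss(0.0, sigma) for _ in range(20000)]
theta_hat = score_matching_precision(data)  # should be close to 1 / sigma**2
```

Because the loss is quadratic in theta for any exponential family, the same recipe reduces to solving a linear system in higher dimensions, which is the kind of computational tractability the abstract contrasts with maximum likelihood.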
