Devesh K. Jha

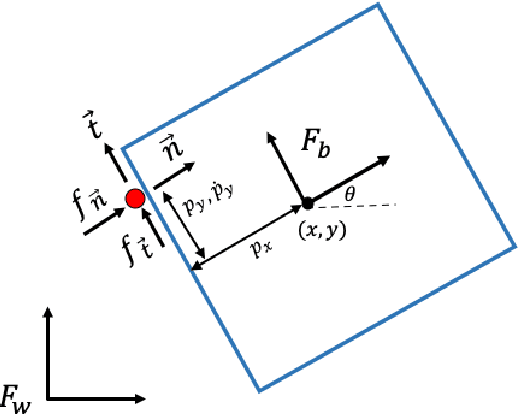

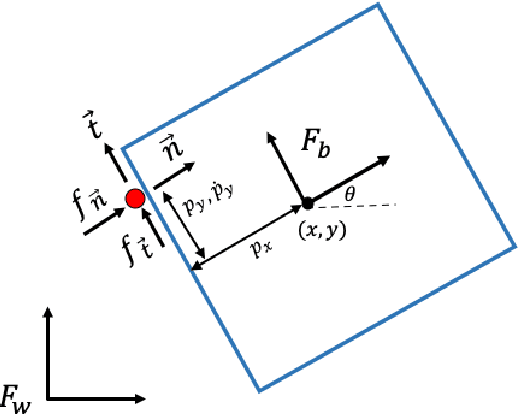

Robust Pivoting: Exploiting Frictional Stability Using Bilevel Optimization

Mar 22, 2022

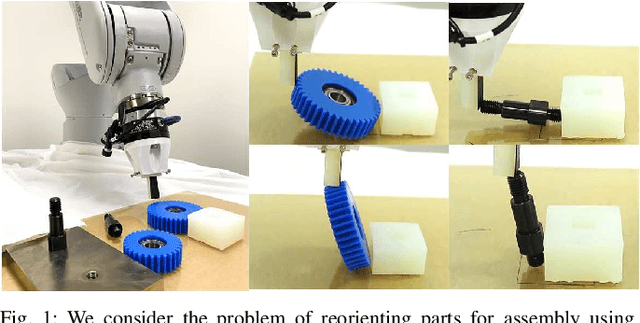

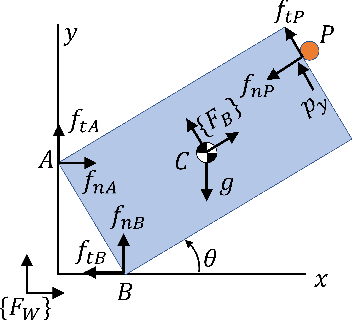

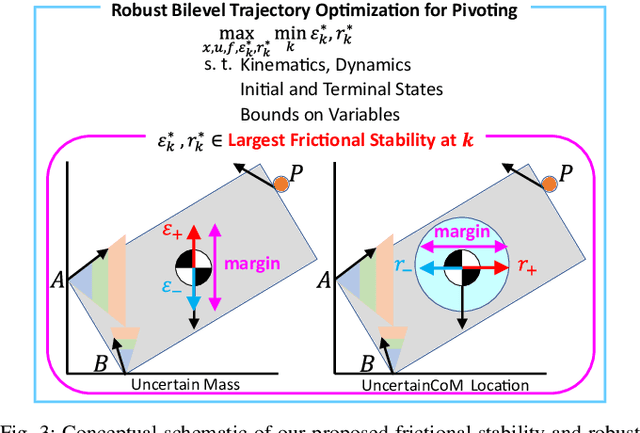

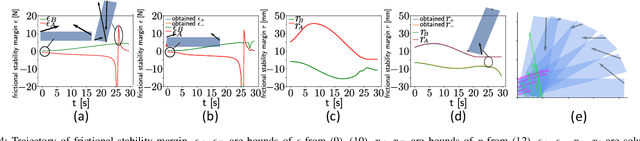

Abstract: Generalizable manipulation requires that robots be able to interact with novel objects and environments. This requirement makes manipulation extremely challenging, as a robot has to reason about complex frictional interactions under uncertainty in the physical properties of the object. In this paper, we study robust optimization for control of pivoting manipulation in the presence of uncertainties. We present insights into how friction can be exploited to compensate for inaccuracies in the estimates of the physical properties during manipulation. In particular, we derive analytical expressions for the stability margin provided by friction during pivoting manipulation. This margin is then used in a bilevel trajectory optimization algorithm to design a controller that maximizes the margin, providing robustness against uncertainty in the physical properties of the object. We demonstrate our proposed method using a 6 DoF manipulator to manipulate several different objects.
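As a rough illustration of what a friction-based stability margin can look like, the sketch below uses the textbook planar Coulomb friction cone: a contact sticks while the tangential force stays below the friction coefficient times the normal force. This is not the analytical expression derived in the paper; the function names and the interval model for the uncertain friction coefficient are assumptions made for illustration.

```python
def friction_margin(normal_force, tangential_force, mu):
    """Distance of a planar contact force from the edge of the Coulomb cone.

    Positive: the contact sticks with that much tangential force to spare.
    Negative: the contact would slip.
    """
    return mu * normal_force - abs(tangential_force)


def worst_case_margin(normal_force, tangential_force, mu_low, mu_high):
    """Margin under an uncertain friction coefficient in [mu_low, mu_high].

    Hypothetical helper: for a non-negative normal force the margin grows
    with mu, so the worst case is the lower end of the interval.
    """
    if normal_force < 0:
        raise ValueError("normal force must be non-negative")
    if mu_low > mu_high:
        raise ValueError("mu_low must not exceed mu_high")
    return friction_margin(normal_force, tangential_force, mu_low)


if __name__ == "__main__":
    print(friction_margin(10.0, 3.0, 0.5))
    print(worst_case_margin(10.0, 3.0, 0.4, 0.6))
```

A controller that maximizes such a margin keeps contact forces well inside the friction cone, so that moderate errors in the assumed physical parameters do not cause slip.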

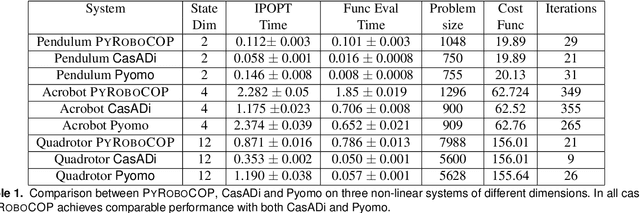

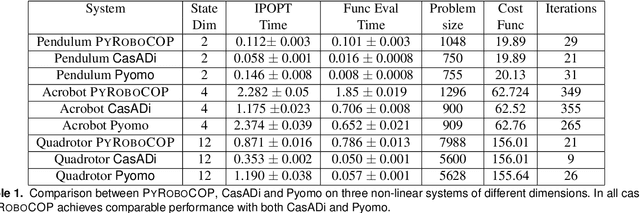

PYROBOCOP: Python-based Robotic Control & Optimization Package for Manipulation

Mar 18, 2022

Abstract: PYROBOCOP is a Python-based package for control, optimization and estimation of robotic systems described by nonlinear Differential Algebraic Equations (DAEs). In particular, the package can handle systems with contacts that are described by complementarity constraints and provides a general framework for specifying obstacle avoidance constraints. The package performs direct transcription of the DAEs into a set of nonlinear equations by performing orthogonal collocation on finite elements. PYROBOCOP automatically reformulates the complementarity constraints into a form that is tractable for NLP solvers, enabling optimization of robotic systems. The package is interfaced with ADOL-C [1] for obtaining sparse derivatives by automatic differentiation and IPOPT [2] for performing optimization. We evaluate PYROBOCOP on several manipulation problems for control and estimation.

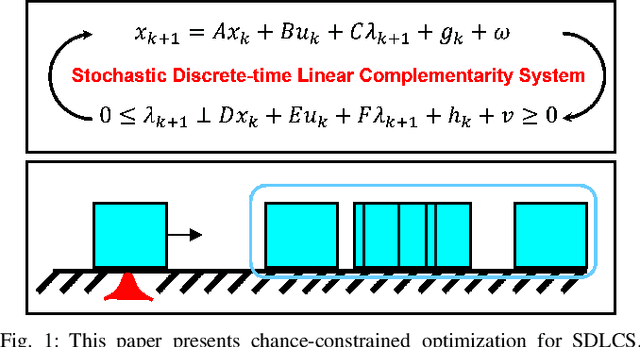

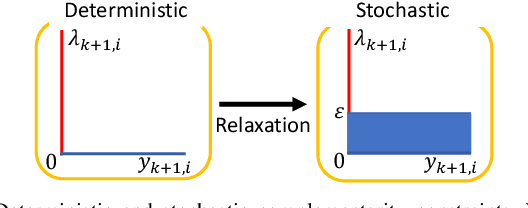

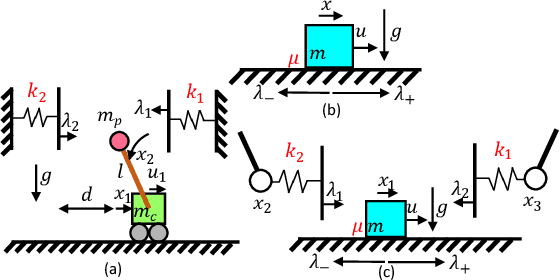

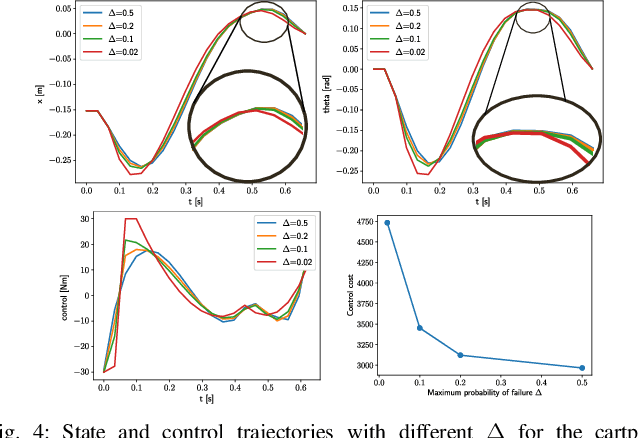

Chance-Constrained Optimization in Contact-Rich Systems for Robust Manipulation

Mar 05, 2022

Abstract: This paper presents a chance-constrained formulation for robust trajectory optimization during manipulation. In particular, we present a chance-constrained optimization for Stochastic Discrete-time Linear Complementarity Systems (SDLCS). To solve the optimization problem, we formulate it as Mixed-Integer Quadratic Programming with Chance Constraints (MIQPCC). In our formulation, we explicitly consider joint chance constraints for complementarity as well as states to capture the stochastic evolution of the dynamics. We evaluate the robustness of our optimized trajectories in simulation on several systems. The proposed approach outperforms some recent approaches for robust trajectory optimization for SDLCS.
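To make the idea of a chance constraint concrete, the sketch below shows the standard deterministic surrogate for a single linear constraint on a Gaussian state: P(aᵀx ≤ b) ≥ 1 − δ holds exactly when aᵀμ + z·sqrt(aᵀΣa) ≤ b, with z the (1 − δ) quantile of the standard normal. A joint constraint over m such rows can be handled conservatively by giving each row a risk of δ/m (Boole's inequality). This is a generic textbook construction under a Gaussian assumption, not necessarily the formulation used in the paper; all function names are illustrative.

```python
from math import sqrt
from statistics import NormalDist


def chance_constraint_slack(a, mean, cov, b, delta):
    """Slack of the deterministic surrogate for P(a^T x <= b) >= 1 - delta.

    Assumes x ~ N(mean, cov). A non-negative return value means the chance
    constraint is satisfied.
    """
    n = len(a)
    mu = sum(a[i] * mean[i] for i in range(n))
    var = sum(a[i] * cov[i][j] * a[j] for i in range(n) for j in range(n))
    z = NormalDist().inv_cdf(1.0 - delta)  # standard normal quantile
    return b - (mu + z * sqrt(var))


def joint_chance_constraint_ok(rows, mean, cov, delta):
    """Conservative joint check: split the risk evenly over all rows."""
    per_row = delta / len(rows)
    return all(
        chance_constraint_slack(a, mean, cov, b, per_row) >= 0.0
        for a, b in rows
    )


if __name__ == "__main__":
    mean, cov = [0.0], [[1.0]]
    print(chance_constraint_slack([1.0], mean, cov, 2.0, 0.05))
    print(joint_chance_constraint_ok([([1.0], 3.0), ([-1.0], 3.0)], mean, cov, 0.05))
```

The tightening term z·sqrt(aᵀΣa) grows as the allowed violation probability δ shrinks, which is how the optimizer trades nominal performance for robustness.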

* 9 pages, 9 figures

Imitation and Supervised Learning of Compliance for Robotic Assembly

Nov 20, 2021

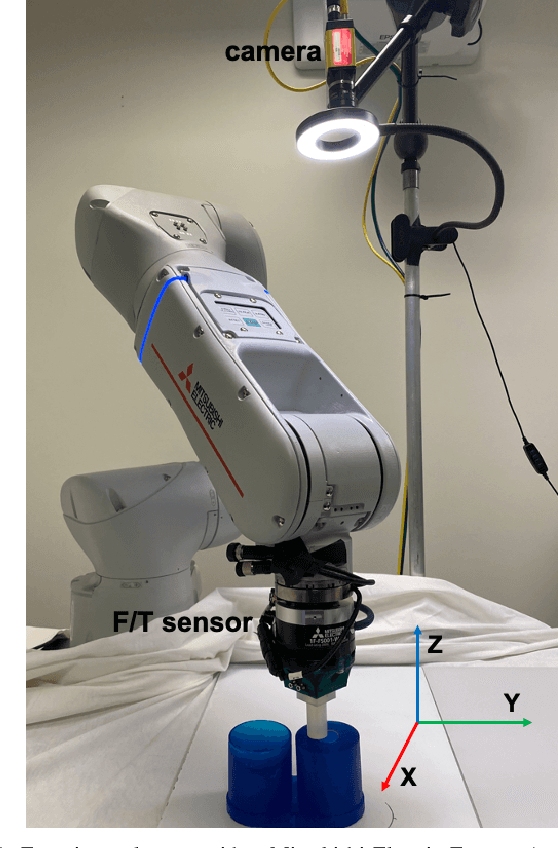

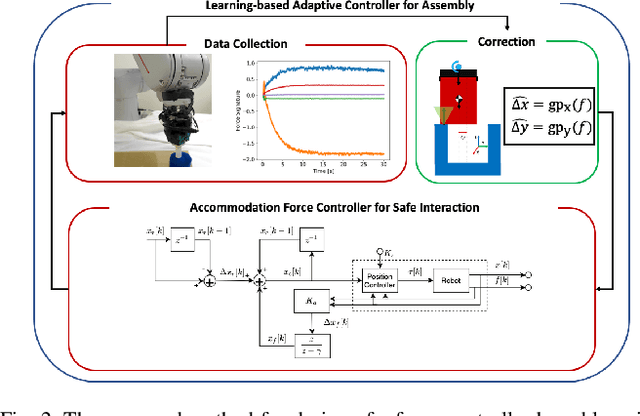

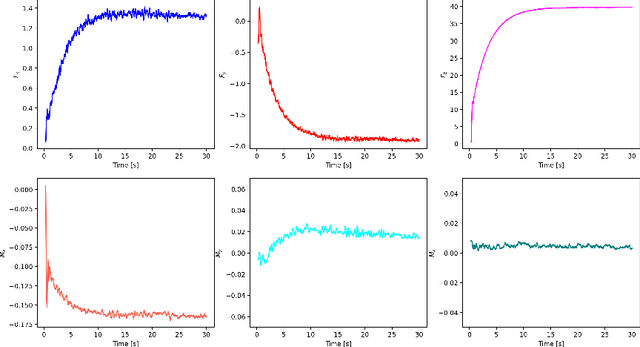

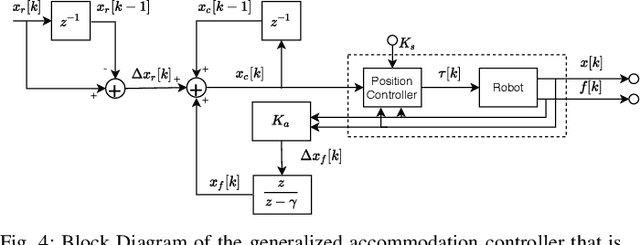

Abstract: We present the design of a learning-based compliance controller for assembly operations for industrial robots. We propose a solution within the general setting of learning from demonstration (LfD), where a nominal trajectory is provided through demonstration by an expert teacher. This can be used to learn a suitable representation of the skill that can be generalized to novel positions of one of the parts involved in the assembly, for example the hole in a peg-in-hole (PiH) insertion task. Because this novel position might not be estimated accurately by a vision or other sensing system, the robot will need to further modify the generated trajectory in response to force readings from a force-torque (F/T) sensor mounted at the wrist of the robot or another suitable location. Under the assumption of a constant velocity along the reference trajectory during assembly, we propose a novel accommodation force controller that allows the robot to safely explore different contact configurations. The data collected using this controller is used to train a Gaussian process model to predict the misalignment in the position of the peg with respect to the target hole. We show that the proposed learning-based approach can correct various contact configurations caused by misalignment between the assembled parts in a PiH task, achieving a high success rate during insertion. We show results using an industrial manipulator arm, and demonstrate that the proposed method can perform adaptive insertion using force feedback from the trained machine learning models.
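For readers unfamiliar with accommodation control, the sketch below shows the textbook form: the commanded velocity is the reference velocity plus a correction proportional to the force error, so the robot yields to unexpected contact forces. This is not the specific controller proposed in the paper; the scalar gain, the sign convention (measured force is the external force acting on the end-effector) and the function name are assumptions.

```python
def accommodation_velocity(v_ref, f_meas, f_des, gain):
    """Textbook accommodation (damping) control law, applied per axis.

    v_ref  : reference Cartesian velocity, e.g. from a demonstrated trajectory
    f_meas : external force on the end-effector read from the F/T sensor
    f_des  : desired contact force
    gain   : accommodation gain in (m/s)/N, assumed scalar for simplicity
    """
    return [v + gain * (fm - fd) for v, fm, fd in zip(v_ref, f_meas, f_des)]


if __name__ == "__main__":
    # Moving down at 1 cm/s while the environment pushes with 2 N along +x:
    # the command gains a small +x component that relieves the lateral force.
    print(accommodation_velocity([0.0, 0.0, -0.01], [2.0, 0.0, 0.0],
                                 [0.0, 0.0, 0.0], 0.001))
```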

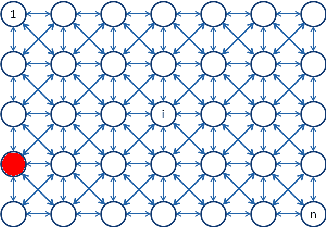

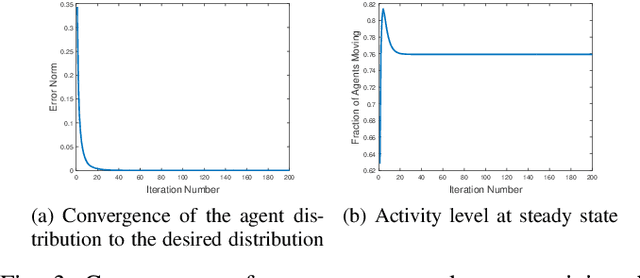

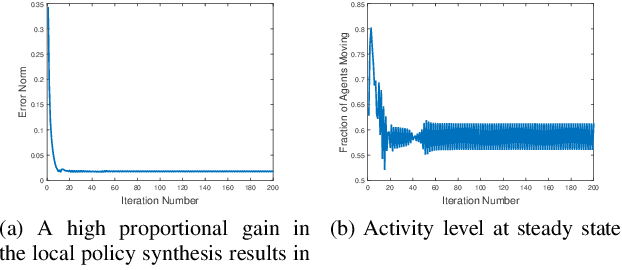

Distributed Task Allocation in Homogeneous Swarms Using Language Measure Theory

Jun 25, 2021

Abstract: In this paper, we present algorithms for synthesizing controllers to distribute a group (possibly a swarm) of homogeneous robots (agents) over heterogeneous tasks that are operated in parallel. We present algorithms as well as analysis for global and local-feedback-based controllers for the swarm. Using the ergodicity property of irreducible Markov chains, we design a controller for global swarm control. Furthermore, to provide some degree of autonomy to the agents, we augment this global controller with a local feedback-based controller using language measure theory. We provide analysis of the proposed algorithms to show their correctness. Numerical experiments are shown to illustrate the performance of the proposed algorithms.
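The ergodicity property mentioned above means that if every agent switches tasks according to the same irreducible (and aperiodic) Markov chain, the fraction of agents at each task converges to the chain's stationary distribution regardless of the initial allocation. The sketch below computes that distribution by power iteration for a small transition matrix; the matrix values and function name are illustrative assumptions, not taken from the paper.

```python
def stationary_distribution(P, max_iters=10_000, tol=1e-12):
    """Power iteration for the stationary distribution of a row-stochastic P.

    Converges for irreducible, aperiodic chains. P[i][j] is the probability
    that an agent at task i moves to task j in one step.
    """
    n = len(P)
    pi = [1.0 / n] * n  # start from a uniform allocation
    for _ in range(max_iters):
        nxt = [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]
        if max(abs(a - b) for a, b in zip(nxt, pi)) < tol:
            return nxt
        pi = nxt
    return pi


if __name__ == "__main__":
    # Two tasks: agents tend to stay at task 0 and to leave task 1.
    P = [[0.9, 0.1],
         [0.5, 0.5]]
    print(stationary_distribution(P))  # approaches [5/6, 1/6]
```

Designing the global controller then amounts to choosing transition probabilities whose stationary distribution matches the desired allocation of agents over tasks.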

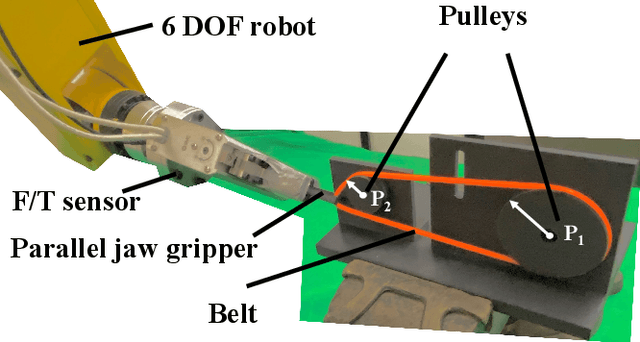

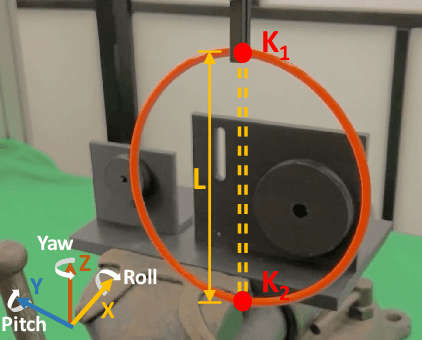

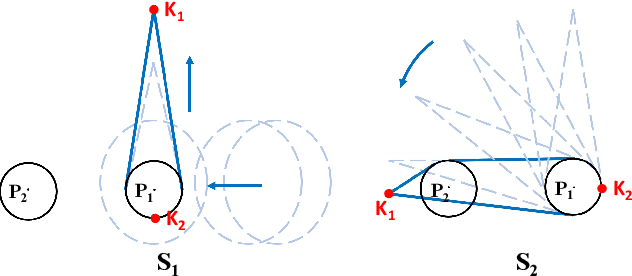

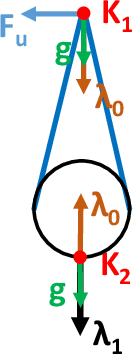

Trajectory Optimization for Manipulation of Deformable Objects: Assembly of Belt Drive Units

Jun 21, 2021

Abstract: This paper presents a novel trajectory optimization formulation to solve the robotic assembly of the belt drive unit. Robotic manipulations involving contacts and deformable objects are challenging in both dynamic modeling and trajectory planning. For modeling, variations in the belt tension and contact forces between the belt and the pulley could dramatically change the system dynamics. For trajectory planning, it is computationally expensive to plan trajectories for such hybrid dynamical systems as it usually requires planning for discrete modes separately. In this work, we formulate the belt drive unit assembly task as a trajectory optimization problem with complementarity constraints to avoid explicitly imposing contact mode sequences. The problem is solved as a mathematical program with complementarity constraints (MPCC) to obtain feasible and efficient assembly trajectories. We validate the proposed method both in simulations with a physics engine and in real-world experiments with a robotic manipulator.

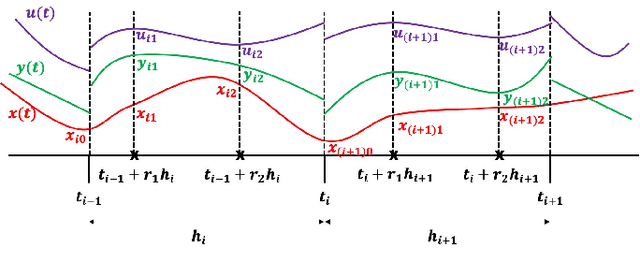

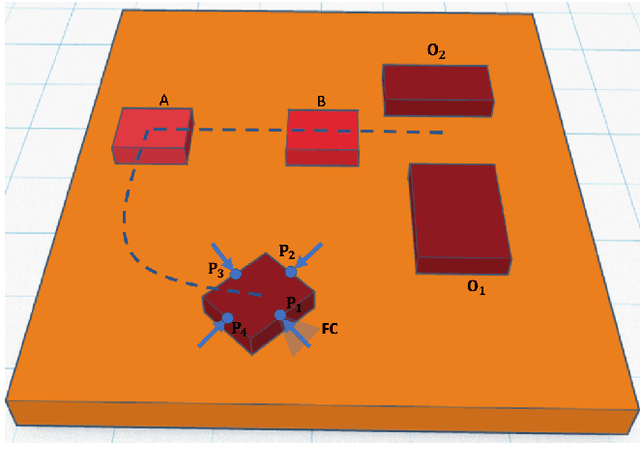

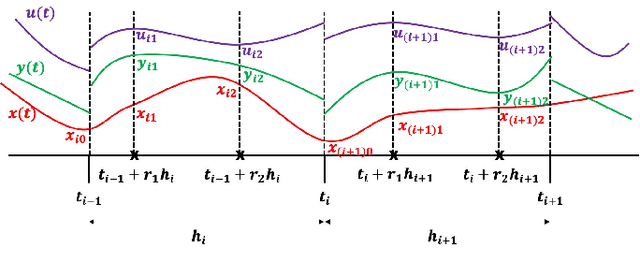

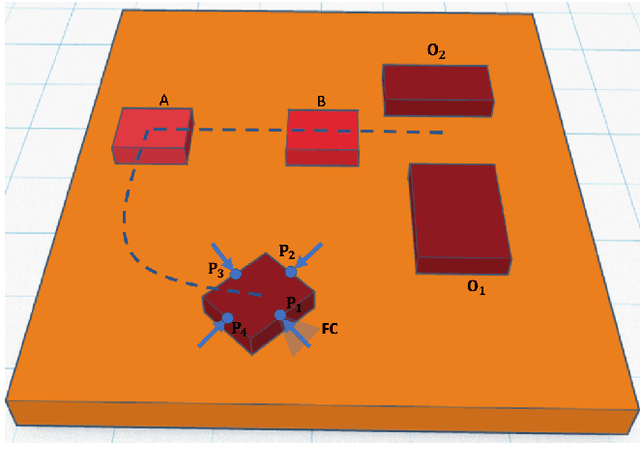

PYROBOCOP: Python-based Robotic Control & Optimization Package for Manipulation and Collision Avoidance

Jun 06, 2021

Abstract: PYROBOCOP is a lightweight Python-based package for control and optimization of robotic systems described by nonlinear Differential Algebraic Equations (DAEs). In particular, the package can handle systems with contacts that are described by complementarity constraints and provides a general framework for specifying obstacle avoidance constraints. The package performs direct transcription of the DAEs into a set of nonlinear equations by performing orthogonal collocation on finite elements. The resulting optimization problem belongs to the class of Mathematical Programs with Complementarity Constraints (MPCCs). MPCCs fail to satisfy commonly assumed constraint qualifications and require special handling of the complementarity constraints in order for NonLinear Program (NLP) solvers to solve them effectively. PYROBOCOP provides automatic reformulation of the complementarity constraints that enables NLP solvers to perform optimization of robotic systems. The package is interfaced with ADOL-C for obtaining sparse derivatives by automatic differentiation and IPOPT for performing optimization. We demonstrate the effectiveness of our approach in terms of speed and flexibility. We provide numerical examples for several robotic systems with collision avoidance as well as contact constraints represented using complementarity constraints. We provide comparisons with other open source optimization packages like CasADi and Pyomo.
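A contact complementarity constraint has the form 0 ≤ gap ⊥ force ≥ 0: either the bodies are separated and the contact force is zero, or they touch and the force may be positive. One common reformulation from the MPCC literature replaces the exact condition gap · force = 0 with the relaxed inequality gap · force ≤ ε and drives ε toward zero. The sketch below only evaluates the residuals of that relaxed constraint; it does not use the PYROBOCOP API, and the abstract does not say which reformulation the package applies, so the names and the choice of relaxation are assumptions.

```python
def complementarity_residuals(gap, force, eps=0.0):
    """Violation of each piece of the relaxed condition 0 <= gap ⊥ force >= 0.

    Relaxed form: gap >= 0, force >= 0, gap * force <= eps.
    Each entry is zero when the corresponding inequality holds.
    """
    return {
        "gap_nonneg": max(0.0, -gap),
        "force_nonneg": max(0.0, -force),
        "product": max(0.0, gap * force - eps),
    }


def is_feasible(gap, force, eps=0.0, tol=1e-9):
    """True if every residual of the relaxed constraint is within tol."""
    residuals = complementarity_residuals(gap, force, eps)
    return all(r <= tol for r in residuals.values())


if __name__ == "__main__":
    print(is_feasible(0.0, 5.0))              # in contact, pushing: feasible
    print(is_feasible(0.1, 5.0))              # force at a distance: infeasible
    print(is_feasible(0.001, 0.5, eps=1e-3))  # allowed by the relaxation
```

With ε > 0 the feasible set has an interior, which restores the constraint qualifications that NLP solvers rely on and that the exact complementarity condition violates.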

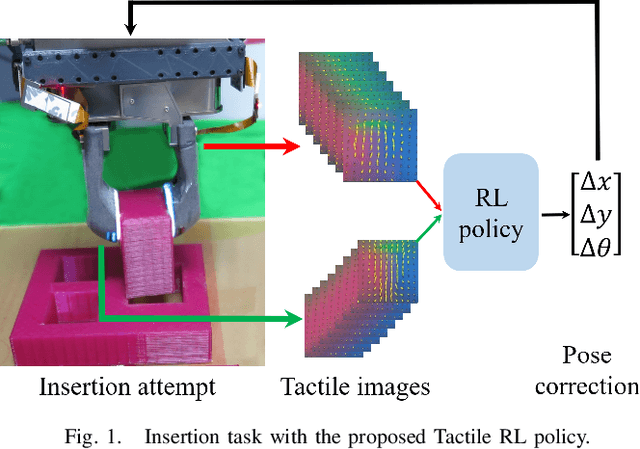

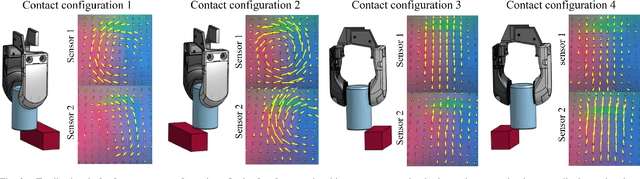

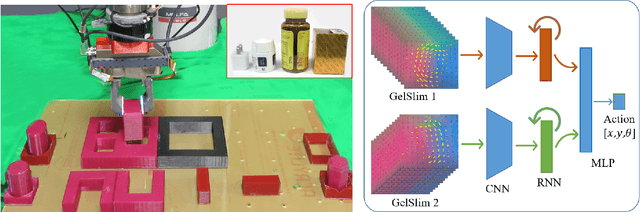

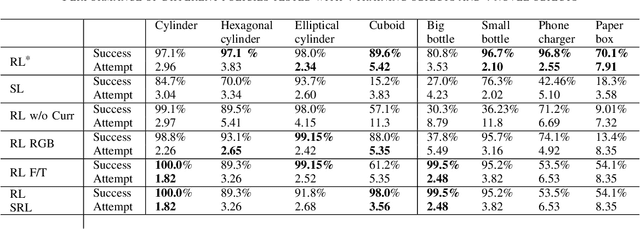

Tactile-RL for Insertion: Generalization to Objects of Unknown Geometry

Apr 02, 2021

Abstract: Object insertion is a classic contact-rich manipulation task. The task remains challenging, especially when considering general objects of unknown geometry, which significantly limits the ability to understand the contact configuration between the object and the environment. We study the problem of aligning the object and environment with a tactile-based feedback insertion policy. The insertion process is modeled as an episodic policy that iterates between insertion attempts followed by pose corrections. We explore different mechanisms to learn such a policy based on Reinforcement Learning. The key contributions of this paper are a demonstration that it is possible to learn a tactile insertion policy that generalizes across different object geometries, and an ablation study of the key design choices for the learning agent: 1) the type of learning scheme: supervised vs. reinforcement learning; 2) the type of learning schedule: unguided vs. curriculum learning; 3) the type of sensing modality: force/torque (F/T) vs. tactile; and 4) the type of tactile representation: tactile RGB vs. tactile flow. We show that the optimal configuration of the learning agent (RL + curriculum + tactile flow) exposed to 4 training objects yields an insertion policy that inserts 4 novel objects with over 85.0% success rate and within 3 to 4 attempts. Comparisons between F/T and tactile sensing show that while an F/T-based policy learns more efficiently, a tactile-based policy provides better generalization.

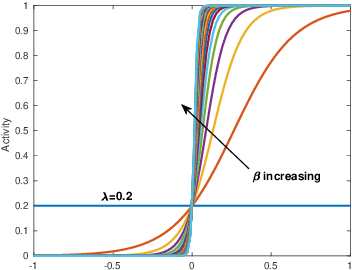

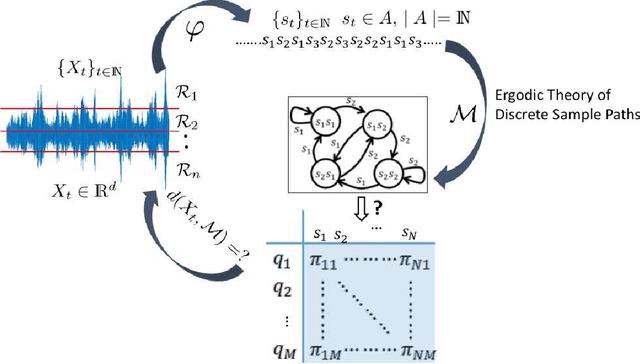

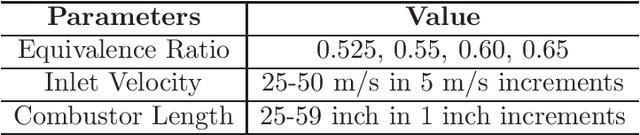

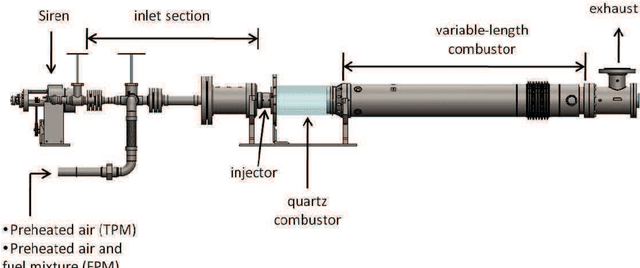

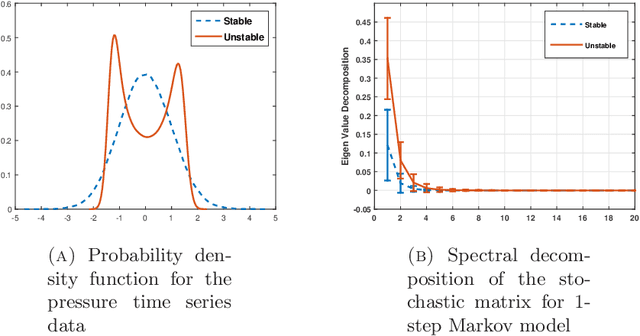

Markov Modeling of Time-Series Data using Symbolic Analysis

Mar 23, 2021

Abstract: Markov models are often used to capture the temporal patterns of sequential data for statistical learning applications. While Hidden Markov modeling-based learning mechanisms are well studied in the literature, we analyze a symbolic-dynamics-inspired approach. Under this umbrella, Markov modeling of time-series data consists of two major steps: discretization of continuous attributes, followed by estimation of the size of the temporal memory of the discretized sequence. These two steps are critical for the accurate and concise representation of time-series data in the discrete space. Discretization governs the information content of the resultant discretized sequence. On the other hand, memory estimation of the symbolic sequence helps to extract the predictive patterns in the discretized data. Clearly, the effectiveness of signal representation as a discrete Markov process depends on both these steps. In this paper, we review the different techniques for discretization and memory estimation for discrete stochastic processes. In particular, we focus on the individual problems of discretization and order estimation for discrete stochastic processes. We present some results from the literature on partitioning from dynamical systems theory and on order estimation using concepts from information theory and statistical learning. The paper also presents some related problem formulations that will be useful for machine learning and statistical learning applications using the symbolic framework of data analysis. We present some results of statistical analysis of a complex thermoacoustic instability phenomenon during lean-premixed combustion in jet-turbine engines using the proposed Markov modeling method.
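The two steps described above can be sketched in a few lines: first map each sample to a symbol, then count transitions from each block of the last `depth` symbols to the next symbol. The sketch uses uniform-width partitioning and a fixed memory depth, which are among the simplest choices; the paper reviews more principled methods for both steps. The function names and parameters are illustrative assumptions.

```python
from collections import Counter, defaultdict


def symbolize(series, n_symbols):
    """Discretize a real-valued series with a uniform-width partition."""
    lo, hi = min(series), max(series)
    width = (hi - lo) / n_symbols or 1.0  # guard against a constant series
    return [min(int((x - lo) / width), n_symbols - 1) for x in series]


def transition_matrix(symbols, n_symbols, depth=1):
    """Estimate P(next symbol | last `depth` symbols) from counts.

    Returns a dict mapping each observed state (a tuple of symbols) to a
    list of probabilities over the next symbol.
    """
    counts = defaultdict(Counter)
    for t in range(depth, len(symbols)):
        state = tuple(symbols[t - depth:t])
        counts[state][symbols[t]] += 1
    return {
        state: [c[k] / sum(c.values()) for k in range(n_symbols)]
        for state, c in counts.items()
    }


if __name__ == "__main__":
    series = [0.0, 1.0, 0.0, 1.0, 0.0, 1.0]
    symbols = symbolize(series, 2)
    print(symbols)
    print(transition_matrix(symbols, 2, depth=1))
```

Increasing `depth` lets the model capture longer temporal patterns at the cost of a state space that grows exponentially, which is why estimating the right memory size matters.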

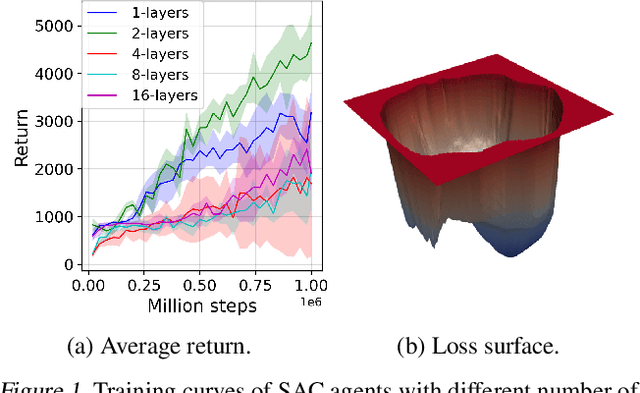

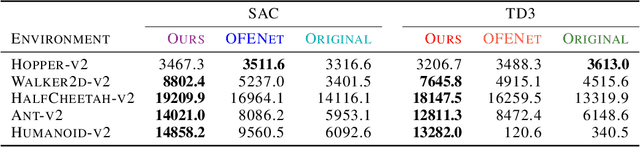

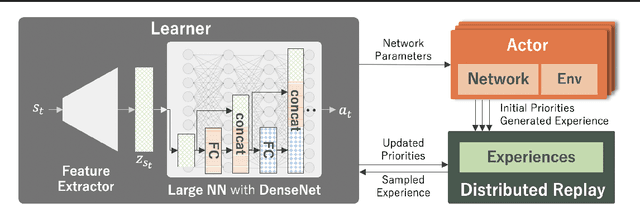

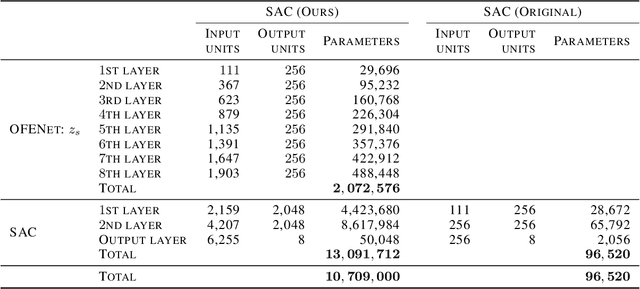

Training Larger Networks for Deep Reinforcement Learning

Feb 16, 2021

Abstract: The success of deep learning in the computer vision and natural language processing communities can be attributed to the training of very deep neural networks with millions or billions of parameters, which can then be trained with massive amounts of data. However, a similar trend has largely eluded the training of deep reinforcement learning (RL) algorithms, where larger networks do not lead to performance improvement. Previous work has shown that this is mostly due to instability during training of deep RL agents when using larger networks. In this paper, we make an attempt to understand and address training of larger networks for deep RL. We first show that naively increasing network capacity does not improve performance. Then, we propose a novel method that consists of 1) wider networks with DenseNet connections, 2) decoupling representation learning from training of RL, and 3) a distributed training method to mitigate overfitting problems. Using this three-fold technique, we show that we can train very large networks that result in significant performance gains. We present several ablation studies to demonstrate the efficacy of the proposed method and to give some intuitive understanding of the reasons for the performance gain. We show that our proposed method outperforms other baseline algorithms on several challenging locomotion tasks.
